Container Tech Evolution - Learn like a baby with baby steps

raja mani

Table of contents

- What are containers?

- Install the Kali Linux Docker image from a public registry on a Mac

- How to install and use Docker:

- 1. List Stored Docker Images

- 2. Where Docker Stores Images on macOS

- 3. Access Docker Files and Volumes

- 4. Where Docker Stores Its Data on the Host

- 5. Cleaning Up Images

- Use Cases for Containers

- 1. Legacy/Monolithic Application

- Characteristics:

- Advantages:

- Disadvantages:

- 2. Containerised Application

- Characteristics:

- Advantages:

- Disadvantages:

- Comparison

- Summary

- Technology Evolution lessons

- Sun Microsystems

- Second story of non-adaptation

What are containers?

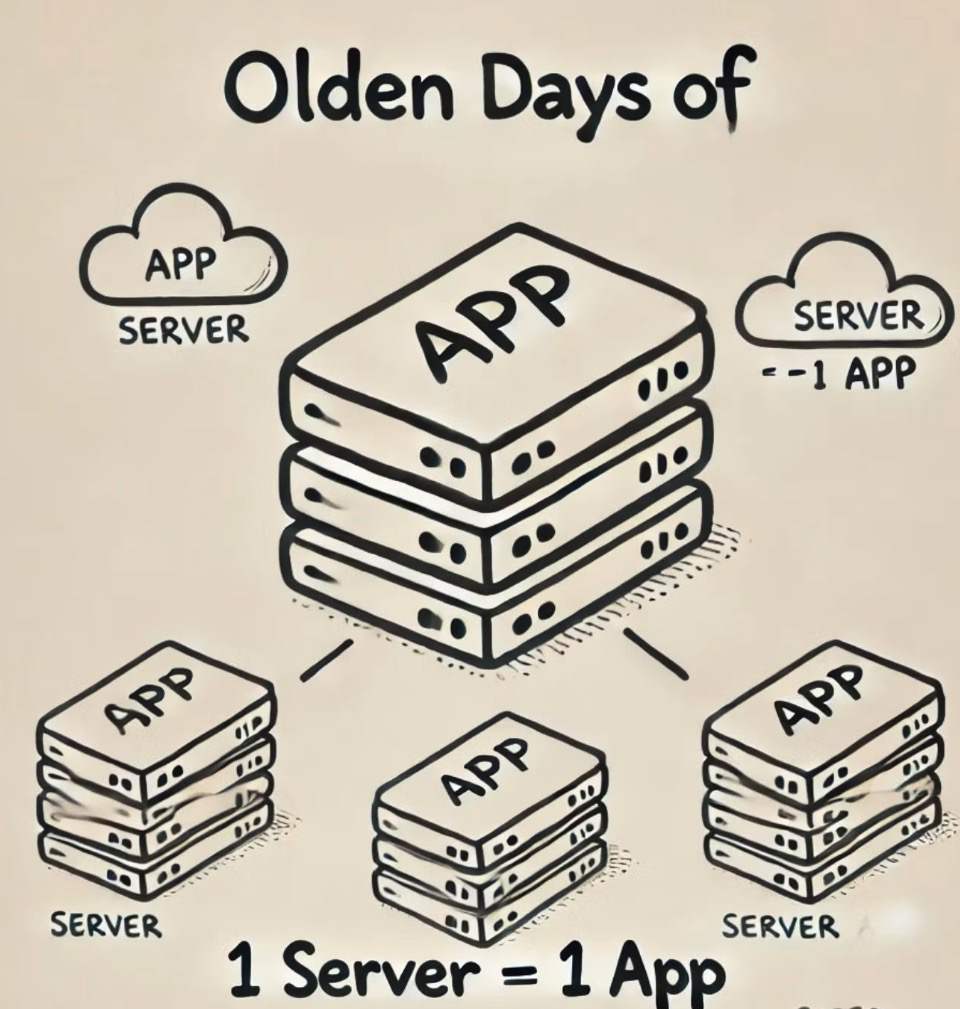

OLDEN DAYS

Applications run the business: no app, no business.

Early 90s to mid-2000s

→ One app for every server

Inefficient Resource Utilization: Servers were often underutilized, leading to wasted hardware resources like CPU, memory, and storage.

High Costs: Running a separate physical server for each application increased hardware, maintenance, and energy costs significantly.

Scalability Challenges: Scaling required purchasing new hardware, which was time-consuming and expensive, limiting the ability to grow quickly.

Complex Management: Managing multiple physical servers for different applications increased operational complexity, requiring more time for updates, patches, and monitoring.

Okay, now the question is, what kind of server will this application need to host it?

Nobody knows :)

Because nobody knew, and everyone wanted fast applications, businesses invested in massively overpowered servers on which an app often ran at no more than 5% utilization, leaving the other 95% idle.

An entrepreneur would see all that idle capacity as a business opportunity.

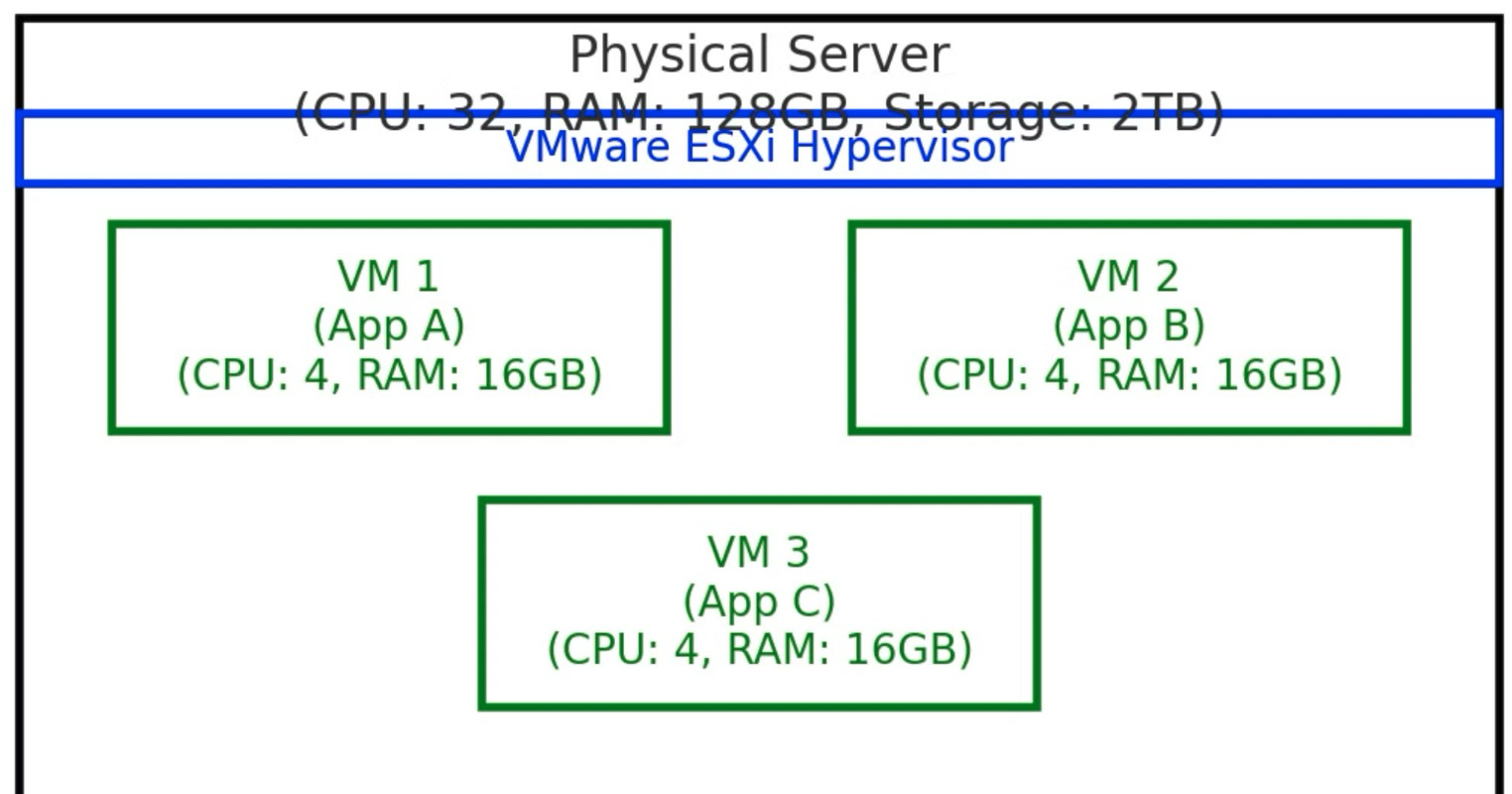

Then came VMware, a truly brilliant idea that revolutionized the way businesses managed their IT infrastructure. By introducing virtualization, VMware allowed multiple virtual machines (VMs) to run on a single physical server, drastically improving resource utilization and reducing costs. This innovation made many traditional business processes irrelevant, such as purchasing separate hardware for every application or manually managing individual servers. IT teams could now provision virtual servers in minutes, scale effortlessly, and simplify backup and disaster recovery. VMware laid the foundation for modern cloud computing and set the stage for future advancements like Docker, transforming infrastructure management forever.


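On the downside, every VM still runs its own full guest operating system, which brings:

- OS licenses for every VM
- CPU, memory, and disk consumed by each guest OS
- Complex management for admins
- Patching for every guest OS
- Antivirus (AV) and security tooling for every guest OS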

Containers, popularized by tools like Docker, address many of these challenges by letting applications share the host's OS kernel instead of each running a separate OS instance. This removes much of the per-VM overhead and creates a lighter, more efficient system in which the focus is on running applications, not on managing multiple operating systems.

What is a Container?

A container is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, runtime, system libraries, and settings. Containers allow developers and IT professionals to package and run applications consistently across different environments.

Containers have gained significant popularity due to their ability to solve the "it works on my machine" problem, where software behaves differently in development, testing, or production environments.

Key Concepts:

Isolation: Containers isolate the application and its dependencies from the underlying system and other containers running on the same host. This isolation is achieved using Linux kernel features such as namespaces (for resource isolation) and cgroups (for limiting resource usage).

Lightweight: Containers share the host system’s kernel, making them more lightweight than traditional virtual machines (VMs). While each VM includes a full operating system, containers add minimal overhead.

Portability: Since a container bundles an application with all of its dependencies, it can run on any system that has a compatible container runtime (e.g., Docker). Two limits apply: Linux containers need a Linux kernel (Docker Desktop provides one through a lightweight VM on macOS and Windows), and images built for a different CPU architecture (e.g., amd64 vs. arm64) run only under emulation, if at all.

Consistency: Containers ensure that the software behaves the same in any environment, whether on a developer’s local machine, in a testing environment, or in production.
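You can check the shared-kernel claim yourself. The minimal sketch below, assuming Docker is installed, prints the kernel release seen by the host and by a throwaway container. The alpine image is just an arbitrary small example, and the DOCKER variable only exists so you can preview the command with DOCKER=echo.

```shell
#!/usr/bin/env bash
# Print the kernel release seen by the host and by a short-lived container.
# Assumption: "alpine" is only a convenient small example image.
# DOCKER defaults to the real CLI; DOCKER=echo prints the command instead.
DOCKER="${DOCKER:-docker}"

compare_kernels() {
  local image="${1:-alpine}"
  echo "host: $(uname -r)"
  # --rm deletes the container as soon as uname exits
  echo "container: $("$DOCKER" run --rm "$image" uname -r)"
}
```

Call compare_kernels (or compare_kernels kalilinux/kali-rolling). On a Linux host both lines should match, because the container is just an isolated process on the host's kernel. On a Mac they differ: the host line shows the macOS (Darwin) kernel, while the container line shows the kernel of Docker Desktop's Linux VM.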

How Containers Differ from Virtual Machines (VMs)

| Aspect | Containers | Virtual Machines (VMs) |
| --- | --- | --- |
| Isolation | Process-level isolation, shares the host OS kernel | Full OS virtualization, complete isolation |
| Overhead | Lightweight, minimal overhead, fast startup | Higher overhead, includes OS kernel, slower startup |
| Portability | Highly portable, environment-independent | Portability is more limited due to larger size and dependencies |
| Resource Usage | Efficient resource usage, shares resources of the host OS | Uses more resources, each VM requires its own OS |
| Startup Time | Fast (seconds) | Slow (minutes) |
| Security | More lightweight isolation, slightly less secure than VMs | Stronger isolation, more secure |

Basic Architecture of Containers

Containers operate in a similar manner to regular processes on a Linux system, but they are isolated from the rest of the system. The key components that enable containers to function include:

Namespaces: These provide isolation of system resources, such as:

- PID namespace (process IDs)
- NET namespace (network interfaces, IP addresses)
- MNT namespace (file systems and mount points)
- UTS namespace (hostnames)
- IPC namespace (inter-process communication)

Cgroups (Control Groups): These limit and monitor how much CPU, memory, and block I/O a container can use, and how many processes it can start.
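Namespaces and cgroups are ordinary Linux kernel features, so you can look at them without Docker at all. This sketch (Linux only) prints the namespace IDs and cgroup membership of the current shell by reading /proc; nothing in it is Docker-specific.

```shell
#!/usr/bin/env bash
# Show the namespaces and cgroup of the current shell (Linux only).
# Every Linux process has these; a container is simply a process whose
# namespace IDs and cgroup differ from the host's.
show_isolation() {
  local ns
  # Each /proc/self/ns/<type> entry is a symlink such as "pid:[4026531836]".
  # Processes in the same namespace show the same number in brackets.
  for ns in pid net mnt uts ipc; do
    printf '%s: %s\n' "$ns" "$(readlink "/proc/self/ns/$ns")"
  done
  # The cgroup(s) this process is accounted to. On a cgroup v2 host this is
  # a single line starting with "0::".
  cat /proc/self/cgroup
}

show_isolation
```

Run the same check inside a container, for example docker run --rm alpine readlink /proc/self/ns/pid, and the pid:[...] number should differ from the host's, since Docker gives each container its own PID namespace by default. Limits you set with docker run flags such as --memory 256m or --cpus 0.5 are enforced through the container's cgroup.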

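Union Filesystem: Containers often use a layered filesystem (e.g., OverlayFS or AUFS). Each read-only image layer records the filesystem changes made by a build step (such as a RUN or COPY instruction), and a running container adds a thin writable layer on top. Because layers are shared, many images and containers can reuse the same base image, which reduces storage requirements.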
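A real OverlayFS mount needs root privileges, so the sketch below only models the lookup rule with ordinary directories: the topmost layer that has a file wins, and a whiteout marker hides a file from the layers beneath it. The layer names and files are made up for illustration.

```shell
#!/usr/bin/env bash
# Toy model of how a union (layered) filesystem resolves a path.
# It mounts nothing; the kernel's OverlayFS does the real work, e.g.
#   mount -t overlay overlay -o lowerdir=app:base,upperdir=rw,workdir=work merged
# Layers are plain directories passed newest (top) first. The first layer
# containing the path wins, and a marker file ".wh.<name>" (the whiteout
# naming used in OCI image layers) hides <name> in every layer below it.
resolve() {
  local path="$1" layer dir base
  shift
  dir="$(dirname "$path")"
  base="$(basename "$path")"
  for layer in "$@"; do
    [ -e "$layer/$dir/.wh.$base" ] && return 1   # deleted in this layer
    if [ -e "$layer/$path" ]; then
      cat "$layer/$path"                         # topmost copy wins
      return 0
    fi
  done
  return 1                                       # not found in any layer
}

# Demo: a base layer, an app layer that overrides a config file, and a
# container's writable layer ("rw") that deletes etc/motd.
tmp="$(mktemp -d)"
mkdir -p "$tmp/base/etc" "$tmp/app/etc" "$tmp/rw/etc"
echo "welcome" > "$tmp/base/etc/motd"
echo "base config" > "$tmp/base/etc/app.conf"
echo "app config" > "$tmp/app/etc/app.conf"
touch "$tmp/rw/etc/.wh.motd"

resolve etc/app.conf "$tmp/rw" "$tmp/app" "$tmp/base"
resolve etc/motd "$tmp/rw" "$tmp/app" "$tmp/base" || echo "etc/motd is hidden"
rm -rf "$tmp"
```

The demo prints "app config" (the app layer shadows the base layer) and then "etc/motd is hidden" (the writable layer's whiteout hides the base file, which still exists untouched underneath).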

Containerization Tools

Docker: Docker is the most popular tool for building, running, and managing containers. It provides a comprehensive ecosystem for container management, including the Docker Engine (runtime) and Docker Hub (a registry for container images).

Podman: Podman is another container management tool, designed as a Docker alternative. It offers rootless operation and improved security features without requiring a daemon.

Kubernetes: While Docker focuses on building and running individual containers, Kubernetes is a platform for orchestrating large numbers of containers across multiple hosts. Kubernetes is used for automating the deployment, scaling, and management of containerized applications.

Example of a Container Lifecycle

Build: A developer writes code, and a Dockerfile defines how the application and its dependencies are packaged. For example:

```dockerfile
# Use an official, maintained Node.js LTS runtime as a base image
FROM node:22

# Set the working directory in the container
WORKDIR /usr/src/app

# Copy package.json (and package-lock.json, if present) and install dependencies
COPY package*.json ./
RUN npm install

# Copy the rest of the application code
COPY . .

# Document the port the app listens on
EXPOSE 8080

# Start the application
CMD ["node", "server.js"]
```

Image Creation: Using Docker, the developer builds the container image with the command:

```shell
docker build -t my-app .
```

Run: The containerized application can now be run in a consistent environment with:

```shell
docker run -p 8080:8080 my-app
```

Here -p maps port 8080 on the host to port 8080 inside the container.

Push and Share: The image can be pushed to a registry like Docker Hub for others to pull and run in their own environment.
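The Push and Share step usually comes down to two commands: tag the local image with a registry repository and version, then push it. A minimal sketch, assuming a Docker Hub account; youruser/my-app and 1.0 are placeholders, and you would run docker login once beforehand.

```shell
#!/usr/bin/env bash
# Tag a locally built image for a registry and push it.
# Assumptions: "youruser" is a placeholder Docker Hub namespace and you have
# already run "docker login". DOCKER=echo prints the commands instead.
DOCKER="${DOCKER:-docker}"

push_image() {
  local local_image="$1" repo="$2" version="$3"
  # A tag is just another name for the same image ID; push only if tagging worked.
  "$DOCKER" tag "$local_image" "$repo:$version" &&
    "$DOCKER" push "$repo:$version"
}
```

For example, push_image my-app youruser/my-app 1.0, after which others can run docker pull youruser/my-app:1.0. An explicit version tag makes it clear which build everyone is running.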

Install the Kali Linux Docker image from a public registry on a Mac

How to install and use Docker:

Step 1: Download and install Docker Desktop on your Mac.

Step 2: Open the terminal and pull the official Kali Linux Docker image with:

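```shell
docker pull kalilinux/kali-rolling
```

This downloads a minimal Kali Linux base image. Unlike a full Kali installation, it ships with very few tools preinstalled; you add the ones you need with apt-get.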

Step 3: Start a Kali Linux container with:

```shell
docker run -it kalilinux/kali-rolling /bin/bash
```

This opens an interactive terminal inside a Kali Linux container (-i keeps input open and -t allocates a terminal), where you can run commands just like in a Linux terminal.

Step 4: You can install additional tools in the container with apt-get. For example:

```shell
apt-get update
apt-get install nmap
```
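Each docker run creates a brand-new container from the image, so tools installed in Step 4 seem to disappear if you start over with docker run. A sketch of the alternative: create one named container and keep returning to it. The name kali is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Keep one named Kali container so tools installed with apt-get persist
# between sessions. Assumption: "kali" is an arbitrary container name.
# DOCKER=echo prints the commands instead of running them.
DOCKER="${DOCKER:-docker}"
NAME="kali"

kali_first_run() {    # create the container once and open a shell in it
  "$DOCKER" run -it --name "$NAME" kalilinux/kali-rolling /bin/bash
}

kali_resume() {       # restart the stopped container and reattach to its shell
  "$DOCKER" start -ai "$NAME"
}

kali_extra_shell() {  # open a second shell in the already-running container
  "$DOCKER" exec -it "$NAME" /bin/bash
}
```

Run kali_first_run once; after you exit, kali_resume brings back the same container with everything you installed.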

Since you’re in a Kali Linux Docker container, you can test out various Kali features. Let’s get you started with some of the key tools and features that Kali offers for testing.

1. Check Installed Tools

A full Kali installation comes pre-loaded with penetration-testing tools, but the Docker image is minimal, so you will likely need to install most tools yourself.

You can check what’s installed by using:

```shell
apt list --installed
```
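apt list --installed prints every package on the system, which is a long list. For a quick yes/no on specific tools, a small loop over command -v can be handier. Note that it checks for executables on the PATH (such as msfconsole), not apt package names (such as metasploit-framework); the tool list is just the set used in this post.

```shell
#!/usr/bin/env bash
# Report which of the given commands are available on the PATH.
# This checks executables, not apt package names.
check_tools() {
  local tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "installed: $tool"
    else
      echo "missing: $tool"
    fi
  done
}

check_tools nmap msfconsole nikto ping curl
```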

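List of things I did:

```shell
whoami                                # root is the default user in this image
apt-get update
apt list --installed
apt-get install nmap
apt-get install metasploit-framework
apt-get install wireshark
nmap scanme.nmap.org                  # a host the Nmap project provides for test scans
ping google.com                       # fails if ping is not installed yet
curl google.com
apt-get update
apt-get install iputils-ping          # provides the ping command
ping google.com
apt-get install nikto
nikto -h http://example.com
msfconsole                            # start the Metasploit console
apt-get install aircrack-ng
```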
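Repeating these installs after every fresh container gets tedious. One option is to bake the tools into your own image. The sketch below pipes a small Dockerfile to docker build on standard input. The tag my-kali and the package list are assumptions (metasploit-framework is left out because it is large; add it if you need it), and DEBIAN_FRONTEND=noninteractive stops apt from prompting during the build.

```shell
#!/usr/bin/env bash
# Build a custom Kali image with some of the tools above pre-installed.
# Assumptions: "my-kali" is an arbitrary tag; adjust the package list to taste.
# DOCKER=echo prints the build command instead of running it.
DOCKER="${DOCKER:-docker}"

build_kali_image() {
  local tag="${1:-my-kali}"
  # "-" tells docker build to read the Dockerfile from stdin (no build context).
  "$DOCKER" build -t "$tag" - <<'EOF'
FROM kalilinux/kali-rolling
ARG DEBIAN_FRONTEND=noninteractive
RUN apt-get update && \
    apt-get install -y nmap nikto iputils-ping curl aircrack-ng && \
    rm -rf /var/lib/apt/lists/*
CMD ["/bin/bash"]
EOF
}
```

Run build_kali_image once, then start sessions with docker run -it --rm my-kali; every new container already has the tools.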

On a Mac, Docker Desktop runs the Docker Engine inside a lightweight Linux virtual machine, so images and containers live in a disk image managed by Docker Desktop rather than as regular files in the Mac filesystem. You can still inspect and manage them with Docker commands.

Here’s how to manage and inspect Docker images:

1. List Stored Docker Images

To see the Docker images you've pulled (including the Kali Linux image), use the following command:

```shell
docker images
```

This will display all downloaded images along with their size, repository name, and image ID.
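docker images also accepts a repository name to filter on and a --format template to choose columns, and docker system df summarizes the space used by images, containers, volumes, and build cache. A short sketch; the column choice is arbitrary.

```shell
#!/usr/bin/env bash
# Inspect local Kali images and overall Docker disk usage.
# DOCKER=echo prints the commands instead of running them.
DOCKER="${DOCKER:-docker}"

show_images() {
  # Only the Kali images, in a compact table built from Go template fields.
  "$DOCKER" images --format 'table {{.Repository}}\t{{.Tag}}\t{{.ID}}\t{{.Size}}' \
    kalilinux/kali-rolling
  # Space used by images, containers, volumes, and build cache.
  "$DOCKER" system df
}
```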

2. Where Docker Stores Images on macOS

Docker uses a VM to store images on macOS, and you won't find them in a traditional folder like /Applications. Instead, they are stored inside a Docker virtual machine. Technically, Docker stores all of its data in a virtual disk image managed by Docker Desktop.

You can inspect where Docker stores its data on your Mac by checking the Docker Desktop settings:

Open Docker Desktop.

Go to Settings (Preferences in older versions) -> Resources -> Advanced.

Here you’ll see how much disk space is allocated to Docker’s virtual disk image, but the actual images are stored within the VM, not directly accessible in a Mac folder structure.

3. Access Docker Files and Volumes

If you want to inspect or interact with the files inside the container, you can run a container and access its filesystem directly:

Start a container from your image:

```shell
docker run -it kalilinux/kali-rolling /bin/bash
```

Once inside the running container, you can explore the Kali Linux filesystem.
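Two common ways to move files between your Mac and a container: a bind mount (-v) shares a Mac folder live inside the container, and docker cp copies a file out of an existing container. In this sketch, /work is an arbitrary mount point and kali stands for any container name or ID listed by docker ps -a.

```shell
#!/usr/bin/env bash
# Share files between the Mac and a Kali container.
# Assumptions: /work is an arbitrary mount point; container names are
# whatever "docker ps -a" shows. DOCKER=echo prints the commands instead.
DOCKER="${DOCKER:-docker}"

run_with_folder() {
  # Bind-mount a host folder (default: current directory) at /work, so files
  # written to /work inside the container also appear on the Mac.
  local host_dir="${1:-$PWD}"
  "$DOCKER" run -it --rm -v "$host_dir:/work" -w /work kalilinux/kali-rolling /bin/bash
}

copy_out() {
  # Copy a file out of a running or stopped container (default destination: .).
  local container="$1" src="$2" dest="${3:-.}"
  "$DOCKER" cp "$container:$src" "$dest"
}
```

For example, run_with_folder ~/scans keeps scan results on your Mac after the container is gone, and copy_out kali /etc/os-release pulls a single file out of a container.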

4. Where Docker Stores Its Data on the Host

Docker uses a virtual machine file to store images and containers, typically located in:

~/Library/Containers/com.docker.docker/Data/vms/0/

However, these files are not directly usable without Docker running, as they are part of Docker’s virtualized environment.
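To see both sides of this from a terminal: docker info reports the engine's data directory inside the Linux VM, and du measures how much space the VM's files take up on the Mac. A sketch assuming the default location above; pass a different folder if you moved the disk image in Docker Desktop's settings.

```shell
#!/usr/bin/env bash
# Where Docker Desktop keeps its data, seen from inside the VM and from the Mac.
# Assumption: the default folder from the text above unless you pass another.
# DOCKER=echo prints the docker command instead of running it.
DOCKER="${DOCKER:-docker}"

docker_storage() {
  local vm_dir="${1:-$HOME/Library/Containers/com.docker.docker/Data/vms/0}"
  # Inside the Linux VM: the engine's data directory.
  echo "engine root dir: $("$DOCKER" info --format '{{.DockerRootDir}}')"
  # On the Mac: disk space actually used by the VM's files.
  if [ -d "$vm_dir" ]; then
    du -sh "$vm_dir"
  else
    echo "not found: $vm_dir"
  fi
}
```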

5. Cleaning Up Images

If you ever need to remove old images or containers to free up space, you can do so with the following commands:

Remove dangling (untagged) images; add -a to remove every image not used by any container:

```shell
docker image prune
```

Remove a specific image:

```shell
docker rmi <image_id>
```
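A cleanup routine that goes from least to most aggressive. Each prune command asks for confirmation before deleting anything. Be aware that container prune removes every stopped container, including one you meant to come back to, along with any tools you installed inside it.

```shell
#!/usr/bin/env bash
# Free disk space step by step; each prune prompts before deleting.
# DOCKER=echo prints the commands instead of running them.
DOCKER="${DOCKER:-docker}"

docker_cleanup() {
  "$DOCKER" system df          # see what is using space first
  "$DOCKER" container prune    # remove all stopped containers
  "$DOCKER" image prune        # remove dangling (untagged) images
  "$DOCKER" image prune -a     # remove every image not used by any container
}
```

Pruning containers before images matters: an image stays "in use" as long as any container, even a stopped one, was created from it.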

Use Cases for Containers

Microservices Architecture: Containers are perfect for running individual microservices, where each microservice runs in its own isolated container. This ensures that updates and changes to one microservice do not affect others.

Development and Testing: Developers can easily replicate production environments on their local machines, ensuring consistency between development, testing, and production environments.

CI/CD Pipelines: Containers enable rapid and consistent deployments, making them ideal for Continuous Integration/Continuous Deployment (CI/CD) pipelines. They ensure that the code behaves the same during every stage of the deployment process.
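To make the CI/CD point concrete, here is a bare-bones pipeline step: build the image, run the tests inside it, and push only if they pass. This is a sketch under assumptions: the Node.js app from the Dockerfile example defines an npm test script, youruser/my-app is a placeholder repository, and the second argument is whatever commit ID your CI system provides.

```shell
#!/usr/bin/env bash
# Bare-bones CI step: build, test inside the image, push on success.
# Assumptions: the app defines "npm test"; repo and commit ID are placeholders.
# DOCKER=echo prints the commands instead of running them.
DOCKER="${DOCKER:-docker}"

ci_pipeline() {
  local repo="$1" sha="$2"
  "$DOCKER" build -t "$repo:$sha" . &&
    "$DOCKER" run --rm "$repo:$sha" npm test &&
    "$DOCKER" push "$repo:$sha"
}
```

Tagging with the commit ID means the exact image that passed the tests is the one that gets pushed and later deployed.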

Cloud and Edge Computing: Containers are widely used in cloud-native applications, enabling seamless scaling and efficient resource utilization. Kubernetes, in particular, makes it easy to manage containerized applications in a cloud environment.

Legacy/Monolithic App vs. Containerized App Primer

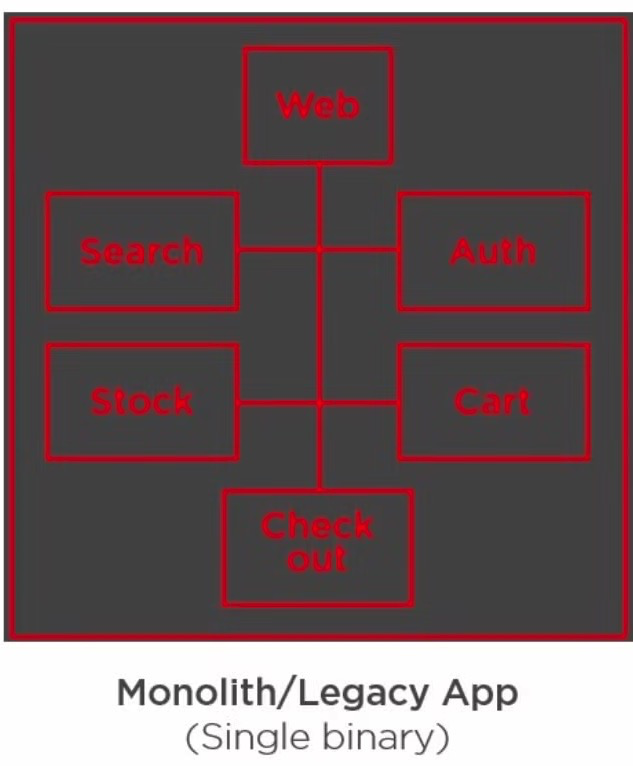

1. Legacy/Monolithic Application

A legacy or monolithic application refers to traditional software architectures where the entire application (including its user interface, business logic, and data management) is tightly coupled and runs as a single, unified process.

Characteristics:

Single Codebase: All the functionality of the application is bundled together in a single codebase.

Tightly Coupled: All components (UI, backend logic, database access, etc.) are interdependent. Any change in one part of the system might affect the entire application.

Single Deployment: The application is deployed as one unit (e.g., a WAR or EAR file for Java, or an executable for desktop applications).

Scaling Challenges: Scaling requires scaling the entire application rather than individual components. For example, even if only the database or a specific service needs more resources, the whole application must be scaled up (usually by adding more instances or servers).

Difficult to Maintain: Over time, monolithic applications become complex, making them harder to maintain, debug, and update. Every minor update requires the entire application to be redeployed.

Advantages:

Simplicity: Easy to develop in the initial stages as everything is in one place, and deployment is straightforward.

Performance: Generally faster in communication between components since everything runs within the same process.

Disadvantages:

Poor Scalability: Hard to scale individual parts of the application independently.

Difficult to Update: Any change requires a full redeployment of the application, increasing the risk of issues in production.

Limited Flexibility: Integrating new technologies or features is challenging due to tight coupling.

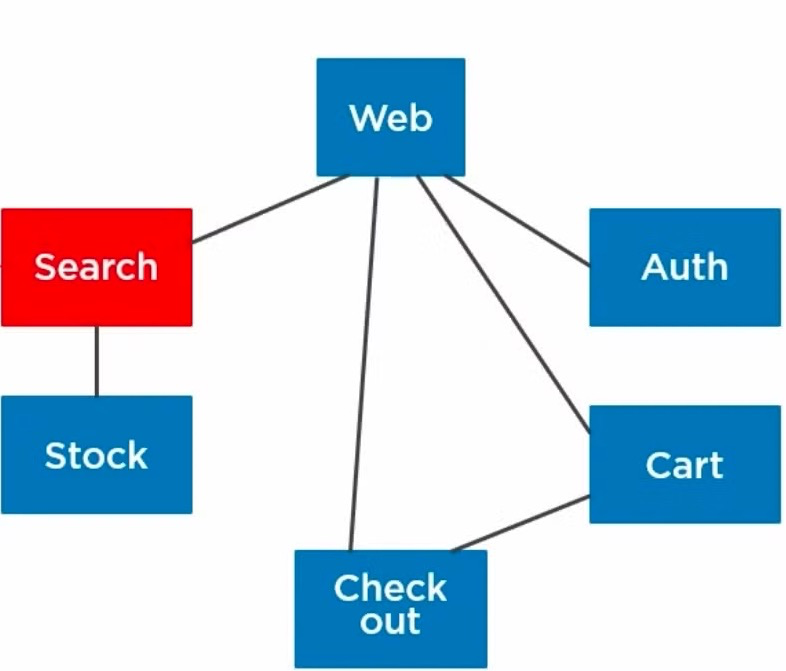

2. Containerised Application

A containerised application is built using modern development practices where each part of the application (often referred to as a service or microservice) is packaged into its own container. These containers run isolated from one another and can communicate via APIs or other means.

Characteristics:

Microservices Architecture: The application is broken down into independent services, each responsible for a specific function (e.g., authentication, database access, payment processing). These services are containerized individually.

Loose Coupling: Each service operates independently and communicates with other services through APIs, making the application modular.

Containerization: Each microservice is packaged in a container along with its dependencies. Docker is a common containerization platform used to achieve this.

Scaling: Each microservice can be scaled independently based on demand. For example, if one service is under heavy load, it can be scaled up without affecting other parts of the application.

Easier Updates: Updating individual services is easier and less risky because they are independent of the entire system. This allows for rolling updates or blue-green deployments.
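With an orchestrator such as Kubernetes, independent scaling and rolling updates map onto a few kubectl commands. A sketch assuming a Deployment named my-app whose container is also named my-app; the image tag is a placeholder.

```shell
#!/usr/bin/env bash
# Scale one service and roll out a new version without touching the others.
# Assumptions: a Deployment whose container has the same name as the
# Deployment; image names are placeholders. KUBECTL=echo prints the commands.
KUBECTL="${KUBECTL:-kubectl}"

scale_service() {     # run N identical replicas of one service
  "$KUBECTL" scale deployment/"$1" --replicas="$2"
}

rolling_update() {    # replace pods gradually with a new image version
  local name="$1" image="$2"
  "$KUBECTL" set image deployment/"$name" "$name=$image" &&
    "$KUBECTL" rollout status deployment/"$name"
}

roll_back() {         # return to the previous version if something breaks
  "$KUBECTL" rollout undo deployment/"$1"
}
```

For example, scale_service my-app 3 followed by rolling_update my-app youruser/my-app:2.0 changes only that one service.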

Advantages:

Scalability: You can scale individual services independently, leading to more efficient use of resources.

Faster Development: Teams can work on different services in parallel without being blocked by dependencies in other parts of the system.

Fault Isolation: If one service fails, the rest of the application continues to run, minimising downtime.

Portability: Containers are environment-agnostic, meaning they can run the same way in development, staging, and production environments.

Disadvantages:

Complexity: Managing multiple containers and services introduces operational complexity, especially in orchestration (handled by platforms like Kubernetes).

Inter-Service Communication: Since services are decoupled and communicate over the network, latency can increase, and the risk of failure in communication arises.

Overhead: While containers are lightweight compared to VMs, they still introduce some overhead, especially when you have many containers running at once.
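The inter-service communication trade-off is easy to see with Docker alone. On a user-defined network, Docker's built-in DNS resolves container names, so one container can reach another at http://NAME:PORT, and every such call is a network hop that adds latency and can fail. In this sketch, app-net, api, and port 8080 are placeholders, my-app is the image built earlier, and curlimages/curl is a public image whose entrypoint is curl.

```shell
#!/usr/bin/env bash
# Two containers talking over a user-defined Docker network by name.
# Assumptions: app-net, api, and port 8080 are placeholders; my-app listens
# on 8080. DOCKER=echo prints the commands instead of running them.
DOCKER="${DOCKER:-docker}"

demo_service_call() {
  "$DOCKER" network create app-net &&
    "$DOCKER" run -d --name api --network app-net my-app &&
    "$DOCKER" run --rm --network app-net curlimages/curl -s http://api:8080/  # one network hop
}
```

Clean up afterwards with docker rm -f api and docker network rm app-net.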

Comparison

| Aspect | Legacy/Monolithic Application | Containerized Application |
| --- | --- | --- |
| Architecture | Tightly coupled, single codebase | Loosely coupled, microservices-based |
| Deployment | Deployed as a single unit | Deployed as independent containers |
| Scalability | Scaling the entire app | Scale individual services independently |
| Maintainability | Hard to maintain, updates require redeployment of the entire app | Easier to maintain, services can be updated independently |
| Flexibility | Difficult to adopt new technologies | Highly flexible, new tech can be introduced in specific services |
| Fault Isolation | Failure in one part can bring down the whole app | Failures are isolated to specific services |
| Resource Utilization | Requires more resources, harder to optimize | More efficient resource use, containers share host resources |
| Technology Stack | Same stack for the entire app | Each service can use its own technology stack |

Summary

Containers are lightweight, portable units of software packaging and execution that encapsulate an application and its dependencies.

They provide isolation and consistency across different environments.

Compared to VMs, containers are faster to start and more efficient in terms of resource usage, but they provide slightly less isolation.

Tools like Docker and Kubernetes play a central role in the development, deployment, and orchestration of containerized applications.

Containers have revolutionized software development and deployment by simplifying the creation of consistent, portable, and efficient runtime environments.

Legacy/Monolithic Applications are simpler to develop and deploy in the beginning but become difficult to scale, maintain, and update as they grow. They’re tightly coupled, and any change requires significant effort and risk.

Containerised Applications follow a microservices-based architecture, offering scalability, flexibility, and easier maintenance. While they introduce complexity in orchestration and inter-service communication, they enable more efficient resource utilisation and faster development cycles.

In modern software development, containerisation has become the preferred approach for building scalable, resilient, and maintainable applications, especially for cloud-native environments. However, monolithic architectures still persist in legacy systems, and transitioning to containerisation requires careful planning and a solid understanding of the business requirements.

Technology Evolution lessons

Some companies failed because they did not adapt. Here are a few lessons and case studies.

Sun Microsystems

One of the most notable stories of a company losing out to cloud computing is the decline of Sun Microsystems.

Founded in 1982, Sun Microsystems was a giant in the hardware and software industry, renowned for its high-performance workstations, servers, and the creation of the Java programming language. Sun's early innovations helped shape the internet and computing infrastructure of the 1990s and early 2000s. At its peak, the company was a major supplier of expensive, high-end servers that powered many enterprise applications and websites.

The Beginning of the Decline

In the 2000s, the tech world was shifting. Cloud computing was emerging, offering companies the ability to rent computing power and storage instead of buying expensive hardware like Sun's servers. Companies like Amazon, Google, and Microsoft began to pioneer cloud computing services, offering more cost-effective, scalable, and flexible solutions to businesses.

At the same time, the open-source movement was gaining steam. Linux-based systems and cheaper, commodity x86 servers started gaining popularity. This shift eroded the demand for Sun's proprietary hardware and operating systems, such as its Solaris OS.

Sun was slow to recognize and adapt to these changes. Instead of embracing cloud computing, Sun doubled down on its traditional business model of selling high-end, proprietary hardware. The company made several attempts to pivot, but its moves were either too late or ineffective. For instance, Sun tried to focus more on software with products like Java and MySQL, but it couldn’t compete with the rapidly growing cloud services market, which was centered on delivering infrastructure as a service (IaaS) and software as a service (SaaS) over the internet.

Key Challenges and Missed Opportunities

Amazon Web Services (AWS): While Sun was still selling its expensive servers, Amazon launched AWS in 2006, offering cloud infrastructure that allowed businesses to rent compute power on demand. This was a major disruption in the industry. AWS became the de facto choice for startups and enterprises looking to save on infrastructure costs, scaling their operations easily without the need for expensive hardware. Sun missed the opportunity to offer a similar service, clinging to its hardware-centric model.

Open Source and Commodity Hardware: Sun was known for its proprietary hardware and software. However, the rise of open-source software like Linux and the use of cheap, commodity x86 servers quickly undercut Sun's expensive products. Many companies started opting for Linux on x86 machines instead of Sun's costly Solaris on SPARC servers.

Failure to Fully Leverage Java: Sun developed the Java programming language, which became one of the most widely used languages in the world, particularly for enterprise applications. Despite Java's success, Sun failed to effectively monetize it in a way that could have helped it compete against emerging cloud and software-as-a-service giants.

Acquisition and the End of Sun Microsystems: By 2009, Sun was struggling financially. Its hardware business had been undercut by cheaper alternatives, and its attempts to pivot into software and cloud services were not successful enough. Oracle, a major software company, acquired Sun Microsystems in 2010 for $7.4 billion. Oracle was primarily interested in Sun's software assets like Java and MySQL, but Sun's hardware business was not seen as valuable in the growing cloud-centric world.

The Lesson:

Sun Microsystems' downfall is a classic example of what can happen when a company fails to adapt to a changing technological landscape. The company’s reliance on selling expensive hardware, combined with its slow embrace of the cloud and open-source movements, left it vulnerable.

The rise of cloud computing fundamentally changed the way businesses think about IT infrastructure, moving from owning to renting computing resources. Companies that adapted early, like Amazon and Microsoft, thrived, while those like Sun Microsystems that failed to recognize the shift were left behind.

In today’s world, companies like Sun, which specialized in on-premise, proprietary hardware solutions, have largely faded into obscurity or pivoted to more cloud-focused models. Cloud computing continues to dominate, with providers like AWS, Microsoft Azure, and Google Cloud leading the charge, making infrastructure, platforms, and software accessible and scalable for everyone, from startups to multinational enterprises.

Second story of non-adaptation

Another example of a company that lost to cloud computing is Blockbuster, the once-dominant video rental company that failed to adapt to the rise of cloud-based streaming services, particularly Netflix.

Blockbuster’s Rise to Dominance

Founded in 1985, Blockbuster was the go-to destination for movie rentals. At its peak, it had more than 9,000 stores worldwide, generating billions of dollars in revenue by renting DVDs, VHS tapes, and video games. Blockbuster's model relied heavily on brick-and-mortar stores, late fees, and a rental system that required customers to physically visit stores to rent and return movies.

The Shift in Consumer Preferences

In the early 2000s, consumer behavior began to change. People wanted more convenience and flexibility in how they watched movies. Instead of driving to a store and dealing with late fees, they were looking for ways to access movies from home. This shift in consumer demand laid the groundwork for the rise of digital media and cloud-based services.

Enter Netflix: The Rise of Streaming and Cloud-based Media

Netflix, which started in 1997 as a DVD-by-mail service, saw an opportunity in the early 2000s to take advantage of the internet and digital media. In 2007, Netflix launched its streaming service, allowing customers to watch movies and TV shows directly from the cloud over the internet, rather than relying on physical DVDs.

The convenience, flexibility, and cost-effectiveness of Netflix's streaming service attracted millions of subscribers, while Blockbuster was slow to recognize the shift toward cloud-based, on-demand media consumption.

Blockbuster's Missed Opportunities

Failure to Embrace Digital Media and Cloud Services: Netflix embraced the future by moving away from physical media and investing heavily in its cloud-based streaming service. Blockbuster, on the other hand, continued to rely on its brick-and-mortar stores and DVD rentals, underestimating how quickly streaming would take off.

Netflix Acquisition Offer Rejected: One of the most well-known stories in the Blockbuster downfall is that in 2000, Netflix’s co-founder Reed Hastings approached Blockbuster with an offer to sell Netflix for $50 million. At the time, Blockbuster’s leadership laughed off the offer, viewing Netflix as a small player in the video rental industry. This decision proved to be one of the biggest missed opportunities in business history, as Netflix is now worth over $150 billion.

Subscription Model vs. Late Fees: Netflix introduced a subscription-based model with unlimited rentals for a monthly fee, while Blockbuster relied on charging customers per rental and collecting revenue from late fees. Netflix’s no-late-fee model resonated with customers, while Blockbuster's late fees became a source of frustration for its users. Blockbuster eventually introduced a subscription service in response, but by then, Netflix had already gained significant market share.

Late Pivot to Streaming: Blockbuster actually tried streaming early, launching a service in partnership with Enron in 2000 (called Blockbuster On Demand), but it failed due to technological limitations and lack of focus. The company later introduced its own standalone streaming service, but it was too late to compete with Netflix, which had already become synonymous with online streaming. Blockbuster’s streaming efforts were inconsistent and poorly executed.

Blockbuster’s Decline and Bankruptcy

By the late 2000s, Blockbuster was struggling financially, burdened by the high costs of maintaining thousands of stores and the changing landscape of digital media. In 2010, Blockbuster filed for bankruptcy. Its business model, based on physical rentals, simply couldn’t compete with the low-cost, cloud-based streaming model pioneered by Netflix and other companies like Hulu and Amazon Prime Video.

While Blockbuster initially blamed its decline on external factors such as the economic downturn and competition, the truth was that it failed to innovate and adapt to the cloud-based world. By the time Blockbuster realized the importance of streaming and digital content, Netflix and other cloud-based services had already captured a massive share of the market.

Netflix's Success and the Power of the Cloud

Netflix's success can be largely attributed to its ability to harness cloud computing. It moved beyond just a video rental service to a cloud-based platform that could deliver content directly to consumers anywhere, anytime. Netflix invested heavily in cloud infrastructure, partnering with companies like Amazon Web Services (AWS) to power its streaming platform. This allowed Netflix to scale quickly, offer personalized recommendations, and provide a seamless viewing experience across devices.

As Netflix evolved, it even became a content creator, producing hit shows like House of Cards and Stranger Things. This shift solidified Netflix's position as a leader in the cloud-based entertainment industry, moving beyond just a distributor to becoming a major player in media production.

Key Lessons from Blockbuster’s Fall

Failure to Adapt to Cloud and Digital Disruption: Blockbuster failed to foresee the shift from physical media to cloud-based streaming and digital content. This inability to adapt to new technologies led to its eventual collapse.

Underestimating the Cloud’s Potential: Blockbuster missed the potential of the cloud to deliver a superior customer experience. Netflix’s cloud-based infrastructure allowed it to offer a more convenient, cost-effective, and scalable service, which outclassed Blockbuster's physical stores.

Legacy Business Model: Blockbuster's reliance on its old business model of charging per rental and late fees became obsolete in the face of Netflix’s subscription-based, cloud-powered service. Sticking to legacy systems, while ignoring consumer preferences, proved to be fatal.

Missed Acquisition Opportunity: By not acquiring Netflix early on, Blockbuster missed the chance to dominate the streaming market. Netflix’s early pivot to cloud services and digital content distribution allowed it to leapfrog the competition.

Blockbuster’s decline is a classic example of how a once-dominant company can fail to survive technological disruption, particularly cloud computing and digital transformation. While Netflix and other streaming platforms embraced the power of the cloud to deliver content on demand, Blockbuster stuck to its outdated physical rental model. The failure to innovate in the face of cloud disruption turned Blockbuster from a powerhouse into a relic of the past.

Why learn this technology? Because companies adapt, and as companies adapt, threat actors adapt too. We defenders need to understand how a technology works and how it has been abused; only then can we learn how to defend it. Hang on for the rest of the learning journey.


Written by

raja mani


✨🌟💫Threat Hunter 💫🌟✨