Power BI Data Connection Quiz

Mohamad Mahmood


QUESTION 1

Which of the following sources can Power BI connect to?

Ⓐ SQL Database.

Ⓑ Google Analytics.

Ⓒ R scripts.

Ⓓ All of the above.


QUESTION 2

In which storage mode are tables solely stored in-memory and queries fulfilled by cached data?

Ⓐ Import.

Ⓑ DirectQuery.

Ⓒ Dual.

Ⓓ Native.


QUESTION 3

Given the following data sources:

| Name | Type | Data size |
|---|---|---|
| Source1 | Azure SQL database | 2 GB |
| Source2 | Microsoft Excel spreadsheet | 5 MB |

Your report must adhere to the following requirements:

• Report data must be current as of 7 AM Pacific Time each day.

• The reports must provide fast response times when users interact with a visualization.

You need to create the dataset (semantic model).

Which dataset mode should you use?

Ⓐ Import.

Ⓑ DirectQuery.

Ⓒ Composite.

Ⓓ Live connection.


QUESTION 4

Refer to the following exhibit.

When should you use DirectQuery?

Ⓐ You need to access the most current data.

Ⓑ You need to minimize the storage space for your Power BI report.

Ⓒ Your dataset is too large to be stored in-memory.

Ⓓ You want to maintain control over data access and security.

Ⓔ All of the above.


QUESTION 5

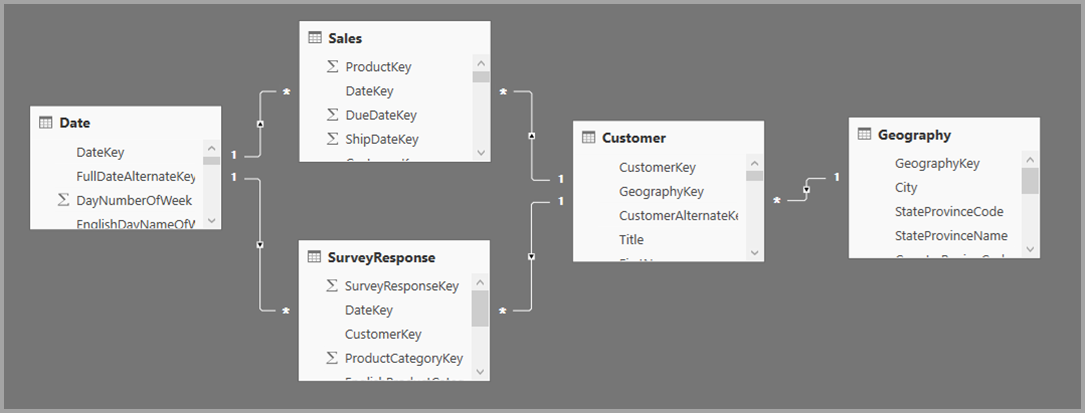

Consider the following model, where all the tables are from a single source that supports Import and DirectQuery.

All tables in this model are initially set to DirectQuery. If you then change the Storage mode of the SurveyResponse table to Import, what would be the best storage mode for the other tables?

⑴ Date:

Ⓐ DirectQuery.

Ⓑ Dual.

Ⓒ Import.

⑵ Customer:

Ⓐ DirectQuery.

Ⓑ Dual.

Ⓒ Import.

⑶ Geography:

Ⓐ DirectQuery.

Ⓑ Dual.

Ⓒ Import.

⑷ Sales:

Ⓐ DirectQuery.

Ⓑ Dual.

Ⓒ Import.


QUESTION 6

Refer to the following exhibit.

You have an Azure SQL database that contains sales transactions. The database is updated frequently. You need to generate reports from the data to detect fraudulent transactions. The data must be visible within five minutes of an update.

How should you configure the data connection?

Ⓐ Add a SQL statement.

Ⓑ Set Data Connectivity mode to DirectQuery.

Ⓒ Set the Command timeout in minutes setting.

Ⓓ Set Data Connectivity mode to Import.


QUESTION 7

This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are modeling data by using Microsoft Power BI. Part of the data model is a large Microsoft SQL Server table named Order that has more than 100 million records.

During the development process, you need to import a sample of the data from the Order table.

Solution: You add a WHERE clause to the SQL statement.

Does this meet the goal?

Ⓐ Yes.

Ⓑ No.


QUESTION 8

You use Power BI Desktop to load data from a Microsoft SQL Server database.

While waiting for the data to load, you receive the following error:

ERROR [08001] timeout expired

You need to resolve the error.

What are two ways to achieve the goal?

Each correct answer presents a complete solution.

Ⓐ Reduce the number of rows and columns returned by each query.

Ⓑ Split long-running queries into subsets of columns and use Power Query to merge the queries.

Ⓒ Set the Command timeout in minutes setting.

Ⓓ Set Data Connectivity mode to Import.


QUESTION 9

Refer to the following exhibit.

Select the answer choice that completes each statement based on the information presented in the graphic.

⑴ The default timeout for the connection from Power BI Desktop to the database will be ____.

Ⓐ unlimited

Ⓑ one minute

Ⓒ 10 minutes

⑵ The navigator will display ____.

Ⓐ all the tables

Ⓑ only tables that contain data

Ⓒ only tables that contain hierarchies


QUESTION 10

Which query language do you use to extract data from Microsoft SQL Server?

Ⓐ DAX.

Ⓑ T-SQL.

Ⓒ MDX.


QUESTION 11

You’re creating a Power BI report with data from an Azure Analysis Services cube. When the data in the cube refreshes, you would like to see it immediately in the Power BI report.

How should you connect?

Ⓐ Connect Live.

Ⓑ Import.

Ⓒ DirectQuery.


QUESTION 12

What can you do to improve performance when you are getting data in Power BI?

Ⓐ Only pull data into the Power BI service, not Power BI Desktop.

Ⓑ Use the SELECT SQL statement in your SQL queries when you are pulling data from a relational database.

Ⓒ Combine date and time columns into a single column.

Ⓓ Do some calculations in the original data source.


QUESTION 13

Which technology improves performance by generating a single query statement to retrieve and transform source data?

Ⓐ Query folding.

Ⓑ Adding index columns.

Ⓒ Adding custom columns with complex logic.
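The question stem describes pushing transformation steps back to the source so that they run as one statement. The following Python sketch illustrates that idea outside Power BI, under stated assumptions: `FoldableQuery` is a hypothetical class (not a Power BI or Power Query API), and an in-memory SQLite database from Python's standard `sqlite3` module stands in for a relational source. It compares one composed statement with fetching every row and filtering on the client.

```python
import sqlite3


class FoldableQuery:
    """Hypothetical sketch: records steps and composes them into one SQL statement."""

    def __init__(self, table):
        self.table = table
        self.columns = ["*"]  # default: all columns
        self.filters = []     # (SQL fragment with a ? placeholder, bound value)

    def select(self, *columns):
        self.columns = list(columns)
        return self

    def where(self, fragment, value):
        self.filters.append((fragment, value))
        return self

    def to_sql(self):
        # Identifiers are interpolated directly, so this sketch assumes trusted
        # table and column names; filter values are passed as bound parameters.
        sql = f"SELECT {', '.join(self.columns)} FROM {self.table}"
        if self.filters:
            sql += " WHERE " + " AND ".join(fragment for fragment, _ in self.filters)
        return sql, [value for _, value in self.filters]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Sales (Id INTEGER, Region TEXT, Amount REAL)")
conn.executemany(
    "INSERT INTO Sales VALUES (?, ?, ?)",
    [(1, "East", 120.0), (2, "West", 80.0), (3, "East", 45.0)],
)

# Composed: one statement, so the database performs the filter and the projection.
sql, params = FoldableQuery("Sales").select("Id", "Amount").where("Region = ?", "East").to_sql()
composed_rows = sorted(conn.execute(sql, params).fetchall())

# Not composed: every row crosses the connection, then is filtered and projected in Python.
all_rows = conn.execute("SELECT Id, Region, Amount FROM Sales").fetchall()
client_rows = sorted((row_id, amount) for row_id, region, amount in all_rows if region == "East")

print(sql)                           # SELECT Id, Amount FROM Sales WHERE Region = ?
print(composed_rows == client_rows)  # True
```

Both paths return the same rows; the difference is where the work happens and how much data crosses the connection, which is what matters for large source tables.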


QUESTION 14

You have the following three versions of an Azure SQL database:

• Test

• Production

• Development

You have a dataset that uses the development database as a data source. You need to configure the dataset so that you can easily change the data source between the (1) Development, (2) Test, and (3) Production database servers from powerbi.com.

What should you do?

Ⓐ Create a JSON file that contains the database server names. Import the JSON file to the dataset.

Ⓑ Create a parameter and update the queries to use the parameter.

Ⓒ Create a query for each database server and hide the development tables.

Ⓓ Set the data source privacy level to Organizational and use the ReplaceValue Power Query M function.


QUESTION 15

You create a report by using Microsoft Power BI Desktop.

The report uses data from a Microsoft SQL Server Analysis Services (SSAS) cube located on your company's internal network.

You plan to publish the report to the Power BI Service.

What should you implement to ensure that users who consume the report from the Power BI Service have the most up-to-date data from the cube?

Ⓐ an OData feed.

Ⓑ an On-premises data gateway.

Ⓒ a subscription.

Ⓓ a scheduled refresh of the dataset.


QUESTION 16

When would you need to access the Data Source Settings?

Ⓐ If you need to connect to a new data source.

Ⓑ If you need to edit an existing query.

Ⓒ If the file name or location changes.

Ⓓ All of the above.


QUESTION 17

How can you use parameters when connecting to data?

Ⓐ To connect to a JSON file.

Ⓑ To change data source values dynamically.

Ⓒ To create "What-If" scenarios.

Ⓓ To shape and transform data in the Query Editor.


QUESTION 18

Which of the following sources lets you connect your data to other business applications?

Ⓐ Microsoft Dataverse.

Ⓑ Microsoft Dataplatform.

Ⓒ Microsoft Dataflows.

Ⓓ Microsoft Excel.


QUESTION 19

You plan to create several datasets by using the Power BI service.

You have the files configured as shown in the following table.

| File name | File type | Size | Location |
|---|---|---|---|
| Data 1 | TSV | 50 MB | Microsoft OneDrive |
| Data 2 | XLSX | 3 GB | Local |
| Data 3 | XML | 100 MB | Microsoft OneDrive for Business |
| Data 4 | CSV | 2 GB | Microsoft OneDrive |
| Data 5 | JPG | 5 MB | Local |

You need to identify which files can be used as datasets (semantic models).

Which two files should you identify?

Ⓐ Data 1.

Ⓑ Data 2.

Ⓒ Data 3.

Ⓓ Data 4.

Ⓔ Data 5.


QUESTION 20

You plan to publish your SSAS Tabular (live connection) data model to Power BI Service. What must be used in order for this to be possible?

Ⓐ Data Gateway.

Ⓑ Dual Storage Mode.

Ⓒ Parameters.

Ⓓ Admin Privileges.


QUESTION 21

Which storage mode leaves the data at the data source?

Ⓐ Import.

Ⓑ DirectQuery.

Ⓒ Dual.


QUESTION 22

What type of import error might leave a column blank?

Ⓐ Keep errors.

Ⓑ Unpivot columns.

Ⓒ Data type error.


QUESTION 23

Which of the following sources lets users connect to a set of pre-wired connections?

Ⓐ PBIDS files.

Ⓑ JSON files.

Ⓒ Dataflows.

Ⓓ SSAS Tabular.


QUESTION 24

You plan to publish your SSAS Tabular (live connection) data model to Power BI Service. What must be used in order for this to be possible?

Ⓐ Data Gateway.

Ⓑ Dual Storage Mode.

Ⓒ Parameters.

Ⓓ Admin Privileges.


QUESTION 25

A business intelligence (BI) developer creates a dataflow in Power BI that uses DirectQuery to access tables from an on-premises Microsoft SQL Server. The Enhanced Dataflows Compute Engine is turned on for the dataflow.

You need to use the dataflow in a report. The solution must meet the following requirements:

• Minimize online processing operations.

• Minimize calculation times and render times for visuals.

• Include data from the current year, up to and including the previous day.

What should you do?

Ⓐ Create a dataflows connection that has DirectQuery mode selected.

Ⓑ Create a dataflows connection that has DirectQuery mode selected and configure a gateway connection for the dataset.

Ⓒ Create a dataflows connection that has Import mode selected and schedule a daily refresh.

Ⓓ Create a dataflows connection that has Import mode selected and create a Microsoft Power Automate solution to refresh the data hourly.
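The third requirement defines a date window that moves with each refresh. As a minimal sketch, assuming the window is evaluated on the refresh date, its bounds can be computed in Python as follows; `year_to_date_through_yesterday` is a hypothetical helper name, and applying the filter inside the dataflow or report is not modeled here.

```python
from datetime import date, timedelta
from typing import Optional, Tuple


def year_to_date_through_yesterday(today: date) -> Optional[Tuple[date, date]]:
    """Return (first_day, last_day) covering the current year up to and including yesterday.

    Returns None on 1 January, when no complete day of the current year exists yet.
    """
    yesterday = today - timedelta(days=1)
    if yesterday.year != today.year:
        return None
    return date(today.year, 1, 1), yesterday


# Example: keep only row dates inside the window for a refresh on 15 March 2024.
row_dates = [date(2023, 12, 31), date(2024, 1, 1), date(2024, 3, 14), date(2024, 3, 15)]
window = year_to_date_through_yesterday(date(2024, 3, 15))
in_scope = [d for d in row_dates if window is not None and window[0] <= d <= window[1]]
print(in_scope)  # [datetime.date(2024, 1, 1), datetime.date(2024, 3, 14)]
```

On 1 January the window is empty, because no day of the new year has finished yet; the helper returns `None` for that case rather than a reversed range.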


QUESTION 26

You publish a dataset that contains data from an on-premises Microsoft SQL Server database.

The dataset must be refreshed daily.

You need to ensure that the Power BI service can connect to the database and refresh the dataset.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Ⓐ Add the dataset owner to the data source.

Ⓑ Configure an on-premises data gateway.

Ⓒ Configure a virtual network data gateway.

Ⓓ Add a data source.

Ⓔ Configure a scheduled refresh.


QUESTION 27

You have the Azure SQL databases shown in the following table.

You plan to build a single PBIX file to meet the following requirements:

• Data must be consumed from the database that corresponds to each stage of the development lifecycle.

• Power BI deployment pipelines must NOT be used.

• The solution must minimize administrative effort.

What should you do?


Written by

Mohamad Mahmood


Mohamad's interest is in Programming (Mobile, Web, Database and Machine Learning). He studies at the Center For Artificial Intelligence Technology (CAIT), Universiti Kebangsaan Malaysia (UKM).