Multi-Stage Docker Build

Rajat Chauhan

Containerization has become a critical part of modern application development and deployment workflows. Docker allows developers to package an application, together with all of its dependencies, into a portable image. One Dockerfile best practice is to use multistage builds, which help produce lightweight, production-ready images. In this post, we will walk through containerizing a Node.js ToDo app with Docker while following Dockerfile best practices.

Benefits of Docker Multistage Builds:

Reduced Image Size: Multistage builds allow you to separate the build environment from the runtime environment. This means that all build dependencies, source code, and other large assets can be excluded from the final image, significantly reducing its size.

Improved Security: By eliminating unnecessary files and dependencies, you reduce the attack surface of your containers. Only the minimal set of files needed to run the application is included in the final image.

Simplified Build Process: Multistage builds let you define the build and runtime stages in a single Dockerfile, so you don't need separate Dockerfiles or helper scripts to move build output from one to the other. Keeping everything in one file makes the process easier to follow and maintain.

Environment Optimization: By separating stages, you can use different base images to build and run your application. For example, you might use a full Node.js image to build your app but switch to an optimized Nginx image to serve the built files.

Best Practices for Writing Dockerfiles:

Before we dive into the technical steps, here are some best practices for writing Dockerfiles that produce efficient, secure, and maintainable images:

Use Official Images: Start from minimal, official base images, such as `alpine`, or specific language runtime images like `node:alpine`, to minimize vulnerabilities and unnecessary software.

Leverage Docker’s Layer Caching: Order your `RUN`, `COPY`, and `ADD` instructions carefully. Docker caches each layer, and once one layer changes, every layer after it is rebuilt. Placing instructions that change frequently (like copying the source code) near the end keeps the earlier layers cached and speeds up builds.

Minimize Layers: Each `RUN`, `COPY`, and `ADD` instruction creates a new layer in the image. To keep the image small, combine related commands into a single `RUN` instruction when possible.

Exclude Unnecessary Files: Use a `.dockerignore` file to exclude files and directories the container does not need, such as local development files, `node_modules` (the build stage installs its own dependencies), or configuration files.

Use Multi-Stage Builds: As discussed earlier, multistage builds separate the build and deployment stages, resulting in smaller, more secure images.
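As a concrete example of the `.dockerignore` advice, a minimal file for this project could be written from the repository root as shown below. The entries are assumptions about a typical Node.js layout, not taken from the ToDo app itself; adjust them to what your repository actually contains.

```shell
# Write a minimal .dockerignore in the project root (run from todoapp-docker/).
# The entries are assumptions for a typical Node.js project.
cat > .dockerignore <<'EOF'
# Dependencies are reinstalled by `npm install` inside the build stage
node_modules
# Local build output is regenerated by `npm run build` inside the build stage
build
# Version control and editor/OS clutter
.git
.vscode
.DS_Store
# Logs and local environment files
npm-debug.log*
.env
EOF
```

Because `COPY . .` sends everything in the build context that is not ignored, keeping `node_modules` out also stops a local install from overwriting the one created in the image.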

Now that we’ve covered the benefits and best practices, let’s dive into containerizing our Node.js application.

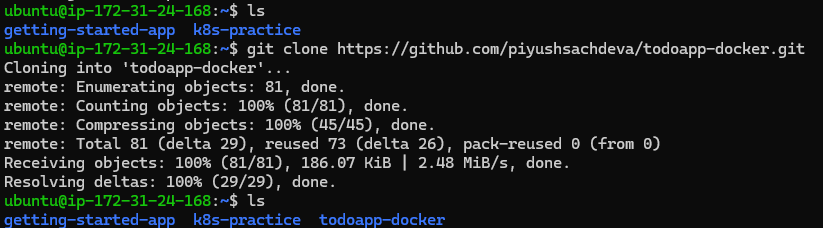

Step 1: Clone the Application Repository

We’ll start by cloning a sample ToDo app repository, which has a simple Node.js web application. You can use the one linked below or any other Node.js application of your choice.

```shell
git clone https://github.com/piyushsachdeva/todoapp-docker.git
cd todoapp-docker/
```

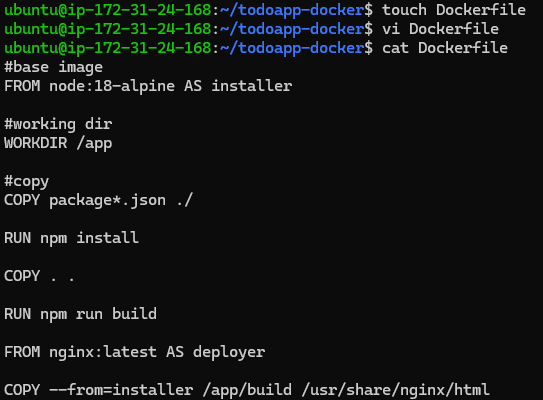

Step 2: Create a Dockerfile

Now, we’ll create a Dockerfile. A Dockerfile contains instructions to build a Docker image. In this file, we’ll specify how to set up the environment for our application.

```shell
touch Dockerfile
```

Open the Dockerfile in your favorite text editor (e.g., nano, vim, or Visual Studio Code), and paste the following content:

```dockerfile
# Stage 1: Build the application
FROM node:18-alpine AS installer
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

# Stage 2: Run the application using Nginx
FROM nginx:latest AS deployer
COPY --from=installer /app/build /usr/share/nginx/html
```

Explanation:

First Stage (installer):

- We are using the `node:18-alpine` image, which is a lightweight Node.js image.
- The `WORKDIR` instruction sets the working directory inside the container to `/app`.
- `COPY package*.json ./` copies `package.json` and, if present, `package-lock.json` from the host into `/app`.
- `RUN npm install` installs the dependencies declared in those files. Because only the package files have been copied at this point, Docker can reuse this cached layer on later builds as long as the dependencies don't change.
- `COPY . .` copies the rest of the project into `/app`.
- `RUN npm run build` runs the project's build script, which writes the production-ready static files to `/app/build`.

Second Stage (deployer):

- We are using the `nginx:latest` image to serve our static files.
- `COPY --from=installer /app/build /usr/share/nginx/html` copies the built application from the first stage into `/usr/share/nginx/html`, the directory Nginx serves by default. Nothing else from the first stage, such as Node.js, `node_modules`, or the source code, ends up in the final image.

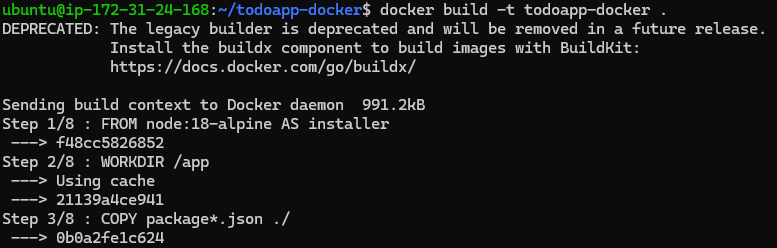

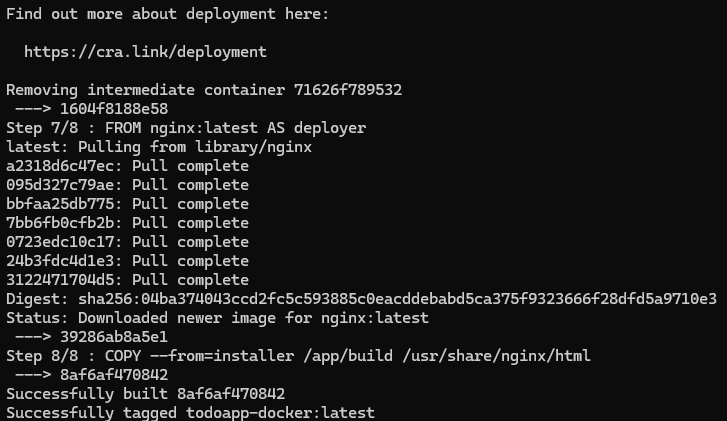

Step 3: Build the Docker Image

Now, let’s build the Docker image using the docker build command.

```shell
docker build -t todoapp-docker .
```

This command builds an image named `todoapp-docker`; since no tag is given, Docker uses `latest`, so the full reference is `todoapp-docker:latest`. The `-t` option names the image, and the `.` at the end sets the build context to the current directory, which contains the Dockerfile and the application code.
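Because the stages are named, you can also build just the first stage with `--target` and look inside it, which helps when the final image turns out to be empty or `/app/build` is missing. The helper below is a hypothetical convenience wrapper; the function name and the `todoapp-installer` tag are arbitrary choices for this example.

```shell
# Build only the "installer" stage, then list what `npm run build` produced.
# The function name and the "todoapp-installer" tag are illustrative choices.
inspect_build_stage() {
  # --target stops the build after the named stage
  docker build --target installer -t todoapp-installer . &&
    # --rm deletes the throwaway container as soon as `ls` exits
    docker run --rm todoapp-installer ls -la /app/build
}
```

Run it from the project root with `inspect_build_stage`; if the listing shows the built static files, the second stage has something to copy.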

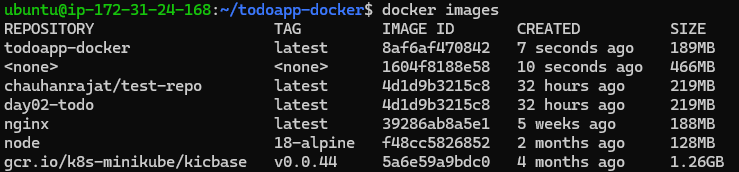

Step 4: Verify the Docker Image

Once the image is built, you can verify that it’s created and stored locally by running:

```shell
docker images
```

This command lists all images stored in your local Docker environment; `todoapp-docker` should appear with the `latest` tag.
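To look more closely at the final image, you can limit the listing to this one image and list the layers it is made of with `docker history`. This is only a convenience wrapper (the function name is made up for this example), and the sizes you see will depend on your platform and image versions.

```shell
# Show the final image and the layers it is made of.
show_image_details() {
  # A repository[:tag] argument limits the listing to matching images
  docker images todoapp-docker
  # Expect the Nginx base image's layers plus one COPY layer for /app/build;
  # nothing from the node:18-alpine build stage should be listed
  docker history todoapp-docker
}
```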

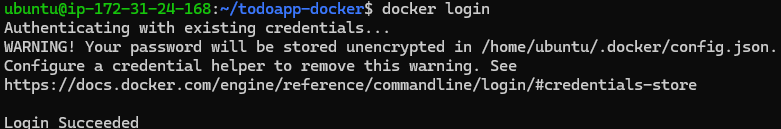

Step 5: Push the Image to Docker Hub

To share the image with others or deploy it to different environments, you can push it to a public repository on Docker Hub. First, log in to Docker Hub:

```shell
docker login
```

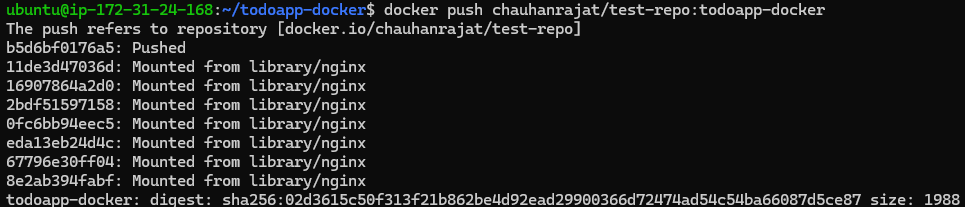

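Once logged in, tag the image with a name of the form `username/repository:tag` so that it can be pushed to your Docker Hub account. In the example below, `chauhanrajat` is the author's username and `test-repo` the repository.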

```shell
docker tag todoapp-docker:latest chauhanrajat/test-repo:todoapp-docker
```

Now, push the image to your Docker Hub repository:

```shell
docker push chauhanrajat/test-repo:todoapp-docker
```
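If you are following along with your own account, a small helper that takes your Docker Hub username as an argument saves editing each command. This is a sketch: `push_todoapp` and `your-username` are placeholders, and the repository name and tag simply mirror the example above.

```shell
# Tag the local image under your own Docker Hub account and push it.
# Usage: push_todoapp your-username   ("your-username" is a placeholder)
push_todoapp() {
  if [ -z "$1" ]; then
    echo "usage: push_todoapp <dockerhub-username>" >&2
    return 1
  fi
  remote_ref="$1/test-repo:todoapp-docker"
  docker tag todoapp-docker:latest "$remote_ref" &&
    docker push "$remote_ref"
}
```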

Step 6: Pull the Image on Another Environment

To pull this Docker image from another environment or machine, use the docker pull command:

```shell
docker pull chauhanrajat/test-repo:todoapp-docker
```

Step 7: Run the Docker Container

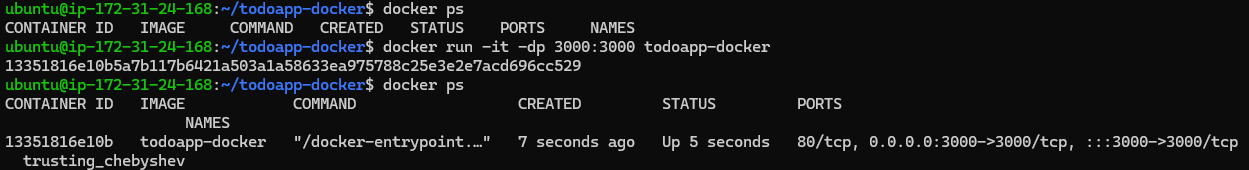

To start a container from the Docker image and expose it on port 3000, run the following command:

```shell
docker run -dp 3000:80 chauhanrajat/test-repo:todoapp-docker
```

The `-d` flag runs the container in the background (detached mode), and `-p 3000:80` maps port 80 inside the container, where Nginx listens, to port 3000 on the host system.
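Giving the container an explicit name makes the later `exec`, `logs`, and `inspect` steps easier, because you can refer to it by that name instead of looking up a generated one. The name `todoapp` and the function name are arbitrary choices for this example.

```shell
# Start the app under a fixed container name, then confirm it is running.
# "todoapp" is an arbitrary name chosen for this example.
run_todoapp() {
  # -d runs detached; -p 3000:80 publishes container port 80 on host port 3000
  docker run -d -p 3000:80 --name todoapp chauhanrajat/test-repo:todoapp-docker &&
    # --filter limits the listing to containers whose name matches "todoapp"
    docker ps --filter name=todoapp
}
```

Container names must be unique, so remove the old container with `docker rm -f todoapp` before running this a second time.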

Step 8: Verify the Application

If you’ve followed the steps correctly, your app should now be accessible via http://localhost:3000. Open a browser and navigate to that URL to see the ToDo app running inside the Docker container.
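If you prefer to check from the terminal, a request with `curl` should report HTTP status 200 once Nginx is serving the build. This sketch assumes `curl` is installed on the host and that the container was started with the `3000:80` mapping above; the function name is hypothetical.

```shell
# Print the HTTP status code the app returns; 200 means Nginx served the page.
# Assumes curl is installed on the host; the URL defaults to the Step 7 mapping.
check_todoapp() {
  url="${1:-http://localhost:3000}"
  # -s: no progress output, -o /dev/null: discard the body, -w: print the status
  curl -s -o /dev/null -w '%{http_code}\n' "$url"
}
```

If it prints `000`, nothing answered on that port; check that the container is running and the port mapping is correct.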

Step 9: Accessing the Running Container

To open a shell inside the running container, use the `docker exec` command:

```shell
docker exec -it containername sh
```

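Replace `containername` with your container's name, or use its container ID instead. The `-i` flag keeps standard input open and `-t` allocates a terminal, which together give you an interactive shell.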
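If you did not name the container yourself, `docker ps` shows its ID and generated name. You can also run a single command with `docker exec` instead of opening a shell; listing the Nginx web root is a quick way to confirm the files from the build stage were copied in. The function names and the `todoapp` container name are placeholders.

```shell
# One line per running container: ID, name, and image.
list_containers() {
  docker ps --format '{{.ID}}  {{.Names}}  {{.Image}}'
}

# Run a single command inside the container instead of opening a shell.
# $1 is the container name or ID, e.g. "todoapp" (placeholder).
show_served_files() {
  # The files copied from the build stage should be listed here
  docker exec "$1" ls /usr/share/nginx/html
}
```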

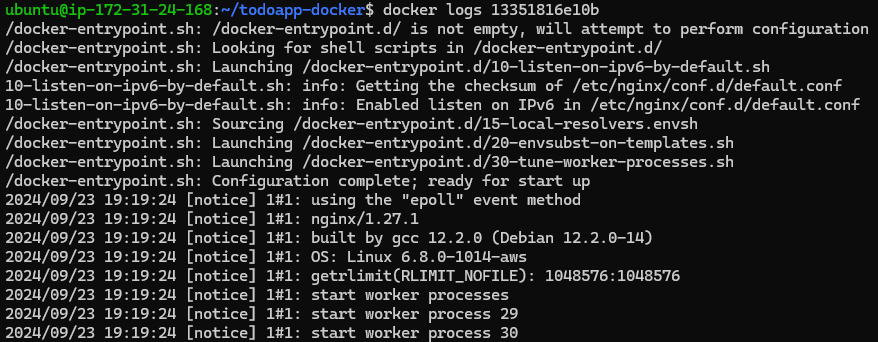

Step 10: Viewing Docker Logs

To view the logs generated by the container, you can use the following commands:

```shell
docker logs containername
# or
docker logs containerid
```
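Two `docker logs` options are often useful here: `--tail` limits the output to the most recent lines, and `-f` keeps streaming new lines until you press Ctrl+C. The official Nginx image sends its access log to standard output, so each request to the app should show up as a new line. The function name and the `todoapp` container name are placeholders.

```shell
# Show the last 20 log lines, then keep streaming new ones (Ctrl+C to stop).
# $1 is the container name or ID, e.g. "todoapp" (placeholder).
follow_logs() {
  docker logs --tail 20 -f "$1"
}
```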

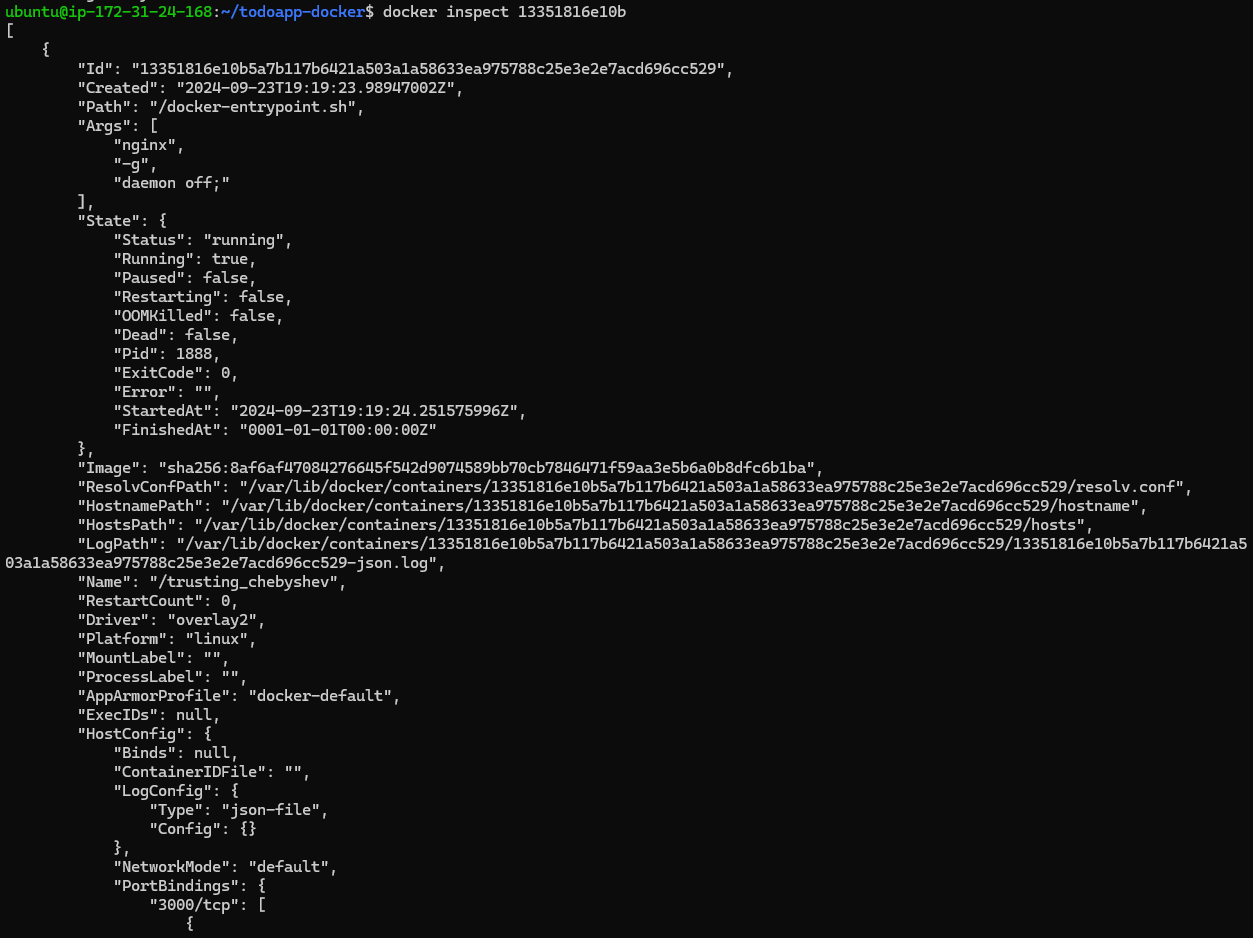

Step 11: Inspecting the Container

To inspect the details of the container, such as its IP address, volumes, or network settings, use:

```shell
docker inspect containername
```
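The full `docker inspect` output is a long JSON document. Its `--format` option accepts a Go template, so you can pull out only the fields you need; the sketch below prints the container's IP address on each attached network and its published ports. The function name and the `todoapp` container name are placeholders.

```shell
# Print selected fields from `docker inspect` using Go templates.
# $1 is the container name or ID, e.g. "todoapp" (placeholder).
inspect_summary() {
  # IP address on each network the container is attached to
  docker inspect --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
  # Published ports, printed as JSON
  docker inspect --format '{{json .NetworkSettings.Ports}}' "$1"
}
```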

Step 12: Clean Up Old Docker Images

Over time, old Docker images can pile up and take up space. You can remove unused images using the following command:

```shell
docker image rm image-id
```

You can find each image's ID in the `IMAGE ID` column of the `docker images` output; use it to remove the images you no longer need.
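An image that is still used by a container, even a stopped one, cannot be removed with `docker image rm` until that container is gone. A typical clean-up sequence might look like the sketch below. `docker image prune` removes only dangling (untagged) images unless you add `-a`, which removes every image not used by a container. The function name and both arguments are placeholders.

```shell
# Remove the container first, then its image, then any dangling images.
# $1 is the container name or ID, $2 the image reference (both placeholders).
cleanup_todoapp() {
  docker stop "$1" &&
    docker rm "$1" &&
    docker image rm "$2" &&
    # -f skips the confirmation prompt; without -a only dangling images go
    docker image prune -f
}
```

For example: `cleanup_todoapp todoapp chauhanrajat/test-repo:todoapp-docker`.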

💡 If you need help or have any questions, just leave them in the comments! 📝 I would be happy to answer them!

💡 If you found this post useful, please give it a thumbs up 👍 and consider following for more helpful content. 😊

Thank you for taking the time to read! 💚

