Java Concurrency with Project Loom in JDK 21

Ashwin Padiyar


This article outlines the shortcomings of Java's traditional concurrency model and how virtual threads help address them.

To be clear, the traditional way of creating threads remains relevant and effective. Virtual threads are meant to complement the existing thread model, not replace it.

Java concurrency model

Java uses a shared-state concurrency model:

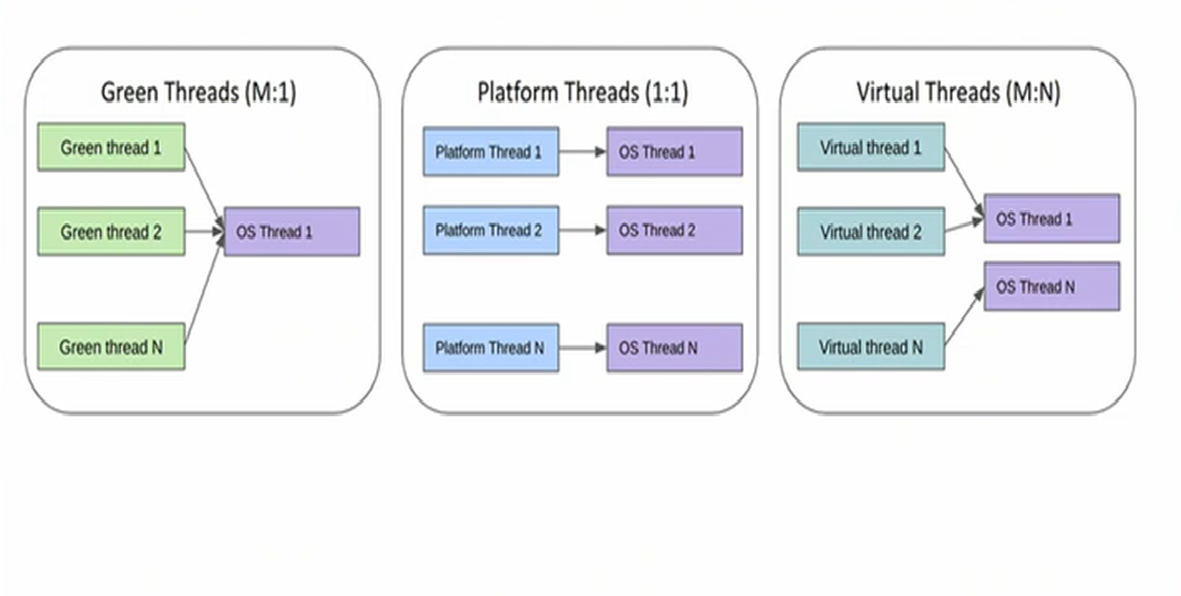

This means that multiple threads can access the same resource or data concurrently. Java offers mechanisms such as synchronization and locks to control and manage access to these resources safely.

Before the introduction of Project Loom, each Java thread was mapped one-to-one to a platform thread.

Platform threads are essentially operating system threads. Each time a thread is created in Java using the traditional method, the following costs are incurred:

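Thread Creation Time: Approximately 1 ms (varies with OS and hardware)

Thread Stack Size: 1 MB to 2 MB reserved per thread by default, depending on the platform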

The size can be adjusted with the `-Xss` JVM option, but increasing the stack size increases the memory consumed by each thread.

In Java’s shared-state concurrency model, multiple threads share access to common data, such as variables or data structures, which they can read from and write to concurrently. This approach is efficient for many use cases, but it introduces challenges when multiple threads try to modify the same data simultaneously: unsynchronized access leads to race conditions, and careless locking can lead to deadlocks. To handle these, Java provides synchronization mechanisms (such as `synchronized` blocks and locks) that control thread access to shared data, ensuring that only one thread modifies the data at a time.

In contrast, a message-passing concurrency model avoids sharing memory directly. Instead of accessing the same variables or data structures, threads or processes communicate by sending and receiving messages. Each thread operates independently, and data is exchanged in a controlled manner through messages, reducing the risk of concurrency issues. This model is often associated with languages such as Erlang and with actor-based frameworks, where processes or "actors" interact only via messages, keeping data isolated and making programs more predictable and easier to scale.
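To make the shared-state model concrete, here is a minimal sketch in which four platform threads increment one shared counter. `SharedCounter` is a hypothetical class written for illustration; the `synchronized` methods make each `count++` (a read-modify-write sequence) happen as one unit, so no updates are lost.

```java
import java.util.ArrayList;
import java.util.List;

// Shared-state concurrency: several threads update one counter object.
class SharedCounter {
    private int count = 0;

    // Without 'synchronized', two threads can interleave the read and write of count++.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    // Starts 'threads' platform threads, each incrementing the shared counter
    // 'perThread' times, waits for all of them, and returns the final count.
    static int run(int threads, int perThread) {
        SharedCounter counter = new SharedCounter();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread worker = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.increment();
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // wait until this worker has finished
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(run(4, 100_000)); // 400000
    }
}
```

If you remove `synchronized` from `increment()`, the printed total will typically fall short of 400000, which is exactly the race condition described above.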

Key Differences:

Shared State: Threads operate on the same data in shared memory, with synchronization mechanisms to prevent conflicts.

Message Passing: Threads/processes work independently and exchange data only through messages, eliminating shared memory but often introducing higher latency.
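For contrast, the message-passing style can be approximated in Java by using a `BlockingQueue` as a mailbox. In this sketch, `MessagePassingSum` and the `STOP` marker are illustrative assumptions, not a standard API: the consumer thread owns its running sum, and the two threads share nothing except the two queues they use to exchange messages.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class MessagePassingSum {
    private static final int STOP = -1; // hypothetical "no more messages" marker

    // Sends the numbers 1..n to a consumer thread as messages and
    // receives the consumer's total back as a reply message.
    static long run(int n) {
        BlockingQueue<Integer> mailbox = new ArrayBlockingQueue<>(16);
        BlockingQueue<Long> replies = new ArrayBlockingQueue<>(1);

        Thread consumer = new Thread(() -> {
            try {
                long sum = 0; // state owned by the consumer alone
                while (true) {
                    int message = mailbox.take(); // blocks until a message arrives
                    if (message == STOP) break;
                    sum += message;
                }
                replies.put(sum); // reply with the result instead of sharing a variable
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        try {
            for (int i = 1; i <= n; i++) {
                mailbox.put(i); // blocks if the mailbox is full
            }
            mailbox.put(STOP);
            return replies.take(); // wait for the consumer's reply
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(run(100)); // 5050
    }
}
```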

With Project Loom, Java still fundamentally uses shared-state concurrency, but virtual threads mitigate some of its limitations by making concurrent code simpler to write and less resource-intensive to run.

Purpose of Threads in Java

Threads in Java serve two primary purposes:

Performing Computations: Threads are used for parallel or concurrent processing, enabling applications to handle multiple tasks at the same time, such as processing data in parallel or performing background computations.

Handling Blocking Operations: Threads are essential for managing blocking operations, such as I/O and socket communication. By isolating these blocking tasks in their own threads, Java applications can stay responsive instead of halting entirely while they wait.
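As a sketch of the first purpose, computation, the code below splits a sum across a fixed pool sized to the number of CPU cores. `ParallelSum` and its chunking scheme are illustrative choices, not the only way to do this (parallel streams are another option).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelSum {
    // Sums 1..n by splitting the range into roughly one chunk per available core.
    static long sum(long n) {
        int workers = Runtime.getRuntime().availableProcessors();
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Callable<Long>> chunks = new ArrayList<>();
            long chunkSize = (n + workers - 1) / workers; // ceiling division
            for (long start = 1; start <= n; start += chunkSize) {
                long from = start;
                long to = Math.min(n, start + chunkSize - 1);
                chunks.add(() -> {
                    long partial = 0;
                    for (long i = from; i <= to; i++) partial += i;
                    return partial;
                });
            }
            long total = 0;
            for (Future<Long> f : pool.invokeAll(chunks)) { // runs chunks in parallel
                total += f.get();
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sum(1_000_000)); // 500000500000
    }
}
```

For CPU-bound work like this, a pool of platform threads sized to the core count remains the right tool; virtual threads target the second purpose, blocking operations.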

Traditional vs. Virtual Threads

In Java, platform threads are directly tied to OS threads with a 1:1 mapping, which brings inherent limitations in resource management. As the number of threads increases, managing these OS threads becomes a bottleneck, affecting both performance and memory usage.

Virtual threads, introduced with Project Loom and finalized in JDK 21, address these bottlenecks by being much lighter in resource usage. Unlike traditional threads, virtual threads are managed by the Java runtime rather than the OS: the JVM schedules many virtual threads onto a small pool of platform threads (called carrier threads), which lets an application run millions of virtual threads with far less overhead.
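You can check which kind of thread your code is running on with `Thread.isVirtual()` (JDK 21). In this sketch, `ThreadKinds` is a hypothetical helper that starts a thread from a `Thread.Builder`, waits for it, and reports what the running code observed.

```java
import java.util.concurrent.atomic.AtomicBoolean;

class ThreadKinds {
    // Starts 'task' on a new thread created by 'builder' and waits for it to finish.
    static void runAndWait(Thread.Builder builder, Runnable task) {
        Thread thread = builder.start(task);
        try {
            thread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // Reports whether code running on a thread from 'builder' sees itself as virtual.
    static boolean runsVirtual(Thread.Builder builder) {
        AtomicBoolean seen = new AtomicBoolean();
        runAndWait(builder, () -> seen.set(Thread.currentThread().isVirtual()));
        return seen.get();
    }

    public static void main(String[] args) {
        System.out.println("platform is virtual: " + runsVirtual(Thread.ofPlatform())); // false
        System.out.println("virtual is virtual:  " + runsVirtual(Thread.ofVirtual()));  // true
        // Virtual threads are always daemon threads.
        System.out.println("virtual is daemon:   " + Thread.ofVirtual().unstarted(() -> { }).isDaemon()); // true
        // Shows the current virtual thread, including the carrier it is mounted on.
        runAndWait(Thread.ofVirtual(), () -> System.out.println(Thread.currentThread()));
    }
}
```

In JDK 21 the last line typically prints something that names a `ForkJoinPool` worker as the carrier thread, though the exact `toString()` format is an implementation detail.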

Why Traditional Threads Fall Short

Java’s traditional threading model, while powerful, often struggles with blocking operations and can lead to reduced throughput. Here are some key challenges:

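Fixed Stack Size: Each traditional thread reserves a fixed-size stack (set by `-Xss`) when it is created, so memory reservation grows with every thread added, which quickly becomes a limitation.

Blocking I/O: A traditional thread blocked on I/O or socket communication holds its OS thread for the entire wait, so the number of requests handled at once is capped by the number of threads the system can afford, reducing throughput.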

Resource Intensive: Creating and managing a large number of OS threads leads to increased resource consumption.

Developers often implement workarounds, such as asynchronous programming, thread pools, and scaling infrastructure. However, these solutions add complexity to the codebase and require more resources, making applications harder to maintain.
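The throughput ceiling of a thread pool under blocking work shows up in a small experiment. In this sketch, `Thread.sleep` stands in for a blocking I/O call and `BlockingPoolDemo` is a hypothetical name: with 2 pool threads and 4 tasks that each block for 200 ms, the tasks must run in at least two waves, so the run takes at least about 400 ms even though the CPU sits idle.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class BlockingPoolDemo {
    // Submits 'tasks' jobs that each block for 'blockMillis' (Thread.sleep stands in for I/O)
    // to a fixed pool of 'poolSize' platform threads; returns the elapsed wall time in ms.
    static long elapsedMillis(int poolSize, int tasks, long blockMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        long start = System.nanoTime();
        try {
            List<Future<?>> futures = new ArrayList<>();
            for (int i = 0; i < tasks; i++) {
                futures.add(pool.submit(() -> {
                    Thread.sleep(blockMillis); // the pool thread is held for the whole wait
                    return null;
                }));
            }
            for (Future<?> f : futures) {
                f.get(); // wait for every task to finish
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        System.out.println("2 threads, 4 tasks: " + elapsedMillis(2, 4, 200) + " ms");
        System.out.println("4 threads, 4 tasks: " + elapsedMillis(4, 4, 200) + " ms");
    }
}
```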

Virtual Threads: A Solution with Flexibility

Unlike traditional threads, which reserve a fixed-size native stack up front, virtual threads keep their stack frames in the Java heap while they are not running, and that storage grows and shrinks with the actual call depth. This flexibility significantly reduces memory pressure, especially in applications requiring massive concurrency.

Some of the advantages of virtual threads include:

Reduced Overhead: Virtual threads have minimal memory usage, allowing applications to manage millions of threads efficiently.

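Non-blocking I/O Made Simple: With virtual threads you write ordinary blocking code; when a virtual thread blocks on I/O, the JVM suspends it and frees its carrier (OS) thread to run other virtual threads, giving the scalability of non-blocking I/O without callback-based code.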

Enhanced Throughput: By using virtual threads, Java applications can achieve higher throughput, especially for applications with high concurrency needs.
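A minimal sketch of these points, assuming `Thread.sleep` as a stand-in for blocking I/O: it starts 10,000 virtual threads with `Thread.ofVirtual()` (JDK 21), each of which blocks, and then joins them all. `ManyVirtualThreads` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class ManyVirtualThreads {
    // Starts 'count' virtual threads that each block for 'blockMillis',
    // waits for all of them, and returns how many completed.
    static int run(int count, long blockMillis) {
        AtomicInteger completed = new AtomicInteger();
        List<Thread> threads = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            threads.add(Thread.ofVirtual().start(() -> {
                try {
                    // While sleeping, the virtual thread is normally unmounted from its carrier.
                    Thread.sleep(blockMillis);
                    completed.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }));
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(run(10_000, 1000) + " virtual threads completed"); // 10000 virtual threads completed
    }
}
```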

Code Example: Traditional vs. Virtual Threads

To illustrate the difference, here’s a simplified comparison between traditional threads and virtual threads in Java.

Traditional Thread Example:

```java
Thread thread = new Thread(() -> {
    System.out.println("Hello from a traditional thread!");
});
thread.start();
```

Virtual Thread Example (JDK 21 and later; a preview feature in JDK 19 and 20):

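```java
Thread vThread = Thread.startVirtualThread(() -> {
    System.out.println("Hello from a virtual thread!");
});
// Virtual threads are always daemon threads, so wait for this one before
// main returns; join() throws InterruptedException.
vThread.join();
```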
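Besides `Thread.startVirtualThread`, JDK 21 provides the `Thread.Builder` API, which is handy when you want named threads. The sketch below (class name hypothetical) uses `Thread.ofVirtual().name("worker-", 0)`, which names each thread the builder creates with an incrementing counter.

```java
import java.util.ArrayList;
import java.util.List;

class NamedVirtualThreads {
    // Starts 'count' virtual threads from one builder and returns their names in creation order.
    static List<String> run(int count) {
        // name(prefix, start): threads are named "worker-0", "worker-1", ... in creation order.
        Thread.Builder builder = Thread.ofVirtual().name("worker-", 0);
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            threads.add(builder.start(() ->
                    System.out.println("Hello from " + Thread.currentThread().getName())));
        }
        for (Thread t : threads) {
            try {
                t.join(); // virtual threads are daemon threads, so wait for them explicitly
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return threads.stream().map(Thread::getName).toList();
    }

    public static void main(String[] args) {
        System.out.println(run(3)); // [worker-0, worker-1, worker-2]
    }
}
```

The "Hello from" lines can appear in any order, since the threads run concurrently.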

With virtual threads, you no longer need to pool threads or worry about the cost of creating and destroying a thread for each task: virtual threads are cheap enough to create one per task and discard it afterward. This simplifies code while improving throughput and scalability, particularly for I/O-bound workloads.
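Because the virtual-thread executor is just another `ExecutorService`, code written against a pool usually needs only its construction changed. In this sketch, `PerTaskExecutorDemo` and the pool size of 4 are illustrative assumptions; the same `runAll` method runs on a fixed pool and on `Executors.newVirtualThreadPerTaskExecutor()`. Since JDK 19, `ExecutorService` implements `AutoCloseable`, so try-with-resources waits for submitted tasks before shutting the executor down.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class PerTaskExecutorDemo {
    // Runs 'n' blocking tasks on 'executor' and returns their results in submission order.
    static List<Integer> runAll(ExecutorService executor, int n) {
        List<Callable<Integer>> tasks = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            int id = i;
            tasks.add(() -> {
                Thread.sleep(50); // stand-in for a blocking call
                return id * id;
            });
        }
        try {
            List<Integer> results = new ArrayList<>();
            for (Future<Integer> f : executor.invokeAll(tasks)) {
                results.add(f.get());
            }
            return results;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    static List<Integer> withPool(int n) {
        try (ExecutorService pool = Executors.newFixedThreadPool(4)) { // bounded pool of platform threads
            return runAll(pool, n);
        }
    }

    static List<Integer> withVirtualThreads(int n) {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) { // new virtual thread per task
            return runAll(executor, n);
        }
    }

    public static void main(String[] args) {
        System.out.println(withPool(5));           // [0, 1, 4, 9, 16]
        System.out.println(withVirtualThreads(5)); // [0, 1, 4, 9, 16]
    }
}
```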

Benefits of Project Loom for Modern Java Applications

Improved Resource Management: With virtual threads, Java applications can operate with fewer resources while managing more tasks concurrently.

Simplified Concurrency Model: Virtual threads let you write straightforward, synchronous-style code that scales like asynchronous code, reducing the need for complex thread management and callback-based APIs.

Scalability: Applications can scale more efficiently, managing thousands to millions of threads without compromising performance.

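```java
// The following code starts one million virtual threads, each of which blocks for 2 seconds.
// To observe the difference, comment out the virtual thread invocation and uncomment
// the traditional thread invocation just above it. With platform threads, expect the
// program to run much longer or fail with an OutOfMemoryError
// ("unable to create native thread"), depending on your OS limits.
public class SampleLoomTest {

    public static void doWork() {
        try {
            Thread.sleep(2000); // simulate a blocking operation such as an I/O call
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the interrupt status
            System.out.println("InterruptedException");
        }
    }

    public static void main(String[] args) {
        int MAX = 1_000_000;

        for (int i = 0; i < MAX; i++) {
            // new Thread(() -> doWork()).start();
            Thread.startVirtualThread(SampleLoomTest::doWork);
        }

        try {
            // Virtual threads are daemon threads, so keep main alive while they run.
            // 5 seconds is a rough allowance, not a guarantee that all have finished.
            Thread.sleep(5000);
        } catch (InterruptedException e) {
            throw new RuntimeException(e);
        }

        System.out.println("Done");
    }
}
```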
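One way to make this comparison more deterministic is to let an executor wait for every task instead of sleeping a fixed 5 seconds, and to time the run. This sketch assumes JDK 21, where `Executors.newThreadPerTaskExecutor` accepts a `ThreadFactory`, so the same code runs with either `Thread.ofVirtual().factory()` or `Thread.ofPlatform().factory()`. `LoomBenchmark` and the task counts are illustrative, and any timings you see depend on your machine.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

class LoomBenchmark {
    record Result(int completed, long elapsedMillis) { }

    // Runs 'tasks' jobs that each block for 'blockMillis', one new thread per task
    // from 'factory', and reports how many completed and the elapsed wall time.
    static Result run(ThreadFactory factory, int tasks, long blockMillis) {
        AtomicInteger completed = new AtomicInteger();
        long start = System.nanoTime();
        // close() at the end of the try block waits until every submitted task has finished.
        try (ExecutorService executor = Executors.newThreadPerTaskExecutor(factory)) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(blockMillis); // simulated blocking operation
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        }
        return new Result(completed.get(), (System.nanoTime() - start) / 1_000_000);
    }

    public static void main(String[] args) {
        int tasks = 10_000; // try 1_000_000 for virtual threads only
        System.out.println("virtual:  " + run(Thread.ofVirtual().factory(), tasks, 2000));
        // Platform threads may be much slower or hit OS thread limits at higher counts.
        System.out.println("platform: " + run(Thread.ofPlatform().factory(), tasks, 2000));
    }
}
```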


Written by

Ashwin Padiyar


I have over 14 years of experience in the software industry. When I am coding or designing, I like to keep track of anything I learn. This blog is a sort of technical diary where I note down technical things. I am glad if anyone finds them helpful.