How to Set Up a Simple Rendering Loop in WebGPU

Gift Mugweni

Hello 🙋‍♂️🙋‍♂️ you good? How have you been 😁? I'm great and all. Sorry for the delayed article, but I think we both know my release consistency by now. So with that out of the way, let’s get down to business.

In my last article, I showed how to set up a WebGPU project with TypeScript, which I find to be a nicer working experience, but your mileage may vary. It worked great and we got to see our lovely, beautiful, one-of-a-kind triangle aaaaaannnnndddd that’s the problem.

As I mentioned previously, we're making a game engine and well, we need to do a loooottt more than just drawing a single triangle on the screen but hey, baby steps you know. So, let’s add more features to our rendering class to make it a bit more interesting.

By the end of this article, you should have done the following:

Implemented automatic canvas resizing to fill the window

Learned a bit more about WebGPU features (uniforms, vertex buffers, bind groups, etc.)

Created slightly more complex shaders that render arbitrary shapes and colours

Created a rendering loop using requestAnimationFrame

Created a wrapper class for our Game Engine features

Canvas Resizing

This is a simple piece of code which will automatically resize the canvas to fill the screen. It’s not perfect but I found it works well enough for my purpose.

// Renderer.ts
private setupCanvasResizing() {
  function resize(renderer: Renderer) {
    renderer.canvas.width = Math.max(1, Math.min(document.body.clientWidth, renderer.device.limits.maxTextureDimension2D))
    renderer.canvas.height = Math.max(1, Math.min(document.body.clientHeight, renderer.device.limits.maxTextureDimension2D))
  }
  window.addEventListener('resize', () => resize(this))
  resize(this)
}

As seen from the function, it listens for the window's resize event and sets the canvas to fill the window, clamping each dimension to a minimum of 1 and a maximum of the largest 2D texture dimension supported by the GPU device.
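To see the clamping on its own, here is a minimal sketch that pulls the Math.max/Math.min expression out into a standalone function. The name clampCanvasDimension is hypothetical (it is not part of the Renderer class), and the 8192 passed in is just an assumed value for maxTextureDimension2D.

```typescript
// Hypothetical helper: clamp a requested canvas dimension to [1, maxTextureDimension2D].
function clampCanvasDimension(requested: number, maxTextureDimension2D: number): number {
  return Math.max(1, Math.min(requested, maxTextureDimension2D))
}

// 8192 is an assumed device limit, used here only for illustration.
console.log(clampCanvasDimension(0, 8192))     // 1 (a collapsed window still gets a 1px canvas)
console.log(clampCanvasDimension(1280, 8192))  // 1280 (normal sizes pass through)
console.log(clampCanvasDimension(10000, 8192)) // 8192 (capped at the device limit)
```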

Rendering Arbitrary Shapes

At the moment our shaders look like this

/** Vertex Shader **/
// data structure to store output of vertex function
struct VertexOut {
  @builtin(position) pos: vec4f,
  @location(0) color: vec4f
};
// process the points of the triangle
@vertex
fn vs(
  @builtin(vertex_index) vertexIndex : u32
) -> VertexOut {
  let pos = array(
    vec2f( 0, 0.8), // top center
    vec2f(-0.8, -0.8), // bottom left
    vec2f( 0.8, -0.8) // bottom right
  );
  let color = array(
    vec4f(1.0, .0, .0, .0),
    vec4f( .0, 1., .0, .0),
    vec4f( .0, .0, 1., .0)
  );
  var out: VertexOut;
  out.pos = vec4f(pos[vertexIndex], 0.0, 1.0);
  out.color = color[vertexIndex];
  return out;
}
/** Fragment Shader **/
// set the colors of the area within the triangle
@fragment
fn fs(in: VertexOut) -> @location(0) vec4f {
  return in.color;
}

The issue with this shader is that it is not configurable. Once you’ve run it, you’ll always get back the same triangle with the same colors. To resolve this, we need to use more of the GPU's functionality, namely uniforms and vertex buffers. Now you might ask, what are these things?

What are Uniforms and Vertex Buffers?

Let’s start by explaining vertex buffers. As you recall from one of my prior posts, when the GPU is rendering things, it uses the vertex and fragment shader. The vertex shader defines the bounds of where the rendering will occur and the fragment shader is responsible for filling in the shape with the appropriate colors.

Whilst an oversimplification of the process, it gets the idea across and if you want to learn more, check out this article. As you can imagine, the vertex buffer is probably gonna get used by the vertex shader but for what? Well in short, we use the vertex buffer to pass in whatever information we want to the vertex shader but you might typically add in all the vertices that define the boundary of the shape we want to render. To learn more about vertex buffers check out this article.

Moving on to uniforms, they’re another facility offered by GPUs for passing in information, but their contents stay the same for every vertex and fragment processed in a draw call. They can be used by both the vertex and fragment shaders and can be thought of as global variables. This is again an oversimplification and you can learn more from this article.

With this all said, let’s now refactor our code to use uniforms and vertex buffers.

Rewriting the shaders

Create a new file called shaders.ts and you can dump in the following new shader code.

export const Uniforms = /* wgsl */ `
  @group(0) @binding(0) var<uniform> color: vec4f;
`
export const SimpleVS = /* wgsl */ `
  struct VertexIn {
    @location(0) pos: vec2f,
    @builtin(vertex_index) index: u32
  }
  ${Uniforms}
  @vertex
  fn vs(in: VertexIn) -> @builtin(position) vec4f {
    return vec4f(in.pos, .0, 1.);
  }
`
export const SimpleFS = /* wgsl */ `
  ${Uniforms}
  @fragment
  fn fs() -> @location(0) vec4f {
    return color;
  }
`

The first thing you’ll notice is the new uniform that I defined that will store the colour information of my final output. For my purposes, I only care about rendering things with a constant colour and not an interpolated colour because it’ll make things easier to predict as time goes by. I’m also passing in the input into the vertex shader as a struct because it’ll keep things neater.

An interesting thing you might notice is the @group(0) @binding(0) and the @location(0) in the struct. These will be used in TypeScript land to map the information we want to pass into our uniform and vertex buffer respectively as is shown below.

Configuring the buffers

In our Renderer.ts we now need to rewrite some of our code to allow the loading and setting up of the shaders and buffers.

First, we rewrite our function for loading in the shaders.

public loadShaders(vertexShader?: string, fragmentShader?: string) {
  this.loadVertexShader(vertexShader)
  this.loadFragmentShader(fragmentShader)
  this.configureBindGroupLayout()
  this.configurePipeline()
}

You’ll notice that we can now pass in our own vertex and fragment shaders. We also configure something called the bind group layout and then rebuild the pipeline. This is because a pipeline is tied to the shader modules it was created with, so loading new shaders means creating a new pipeline that references them.

Moving on, let’s look at the loading of the vertex and fragment shaders

private loadVertexShader(shader?: string) {
  this.vertexShader = this.device.createShaderModule({
    label: "Vertex Shader",
    code: shader ?? /* wgsl */`
      @vertex
      fn vs() -> @builtin(position) vec4f {
        return vec4f(.0);
      }
    `
  })
}
private loadFragmentShader(shader?: string) {
  this.fragmentShader = this.device.createShaderModule({
    label: "Fragment Shader",
    code: shader ?? /* wgsl */`
      @fragment
      fn fs() -> @location(0) vec4f {
        return vec4f(.0);
      }
    `,
  })
}

As you can see, these functions are relatively simple, with the addition of default shaders that effectively render nothing.

Next, let’s configure the bind group layout and the pipeline

private configureBindGroupLayout() {
  this.bindGroupLayout = this.device.createBindGroupLayout({
    label: "Bind Group Layout",
    entries: [
      {
        // matches @binding(0) in the shaders
        binding: 0,
        visibility: GPUShaderStage.VERTEX | GPUShaderStage.FRAGMENT,
        buffer: { type: "uniform" }
      }
    ]
  })
  this.pipelineLayout = this.device.createPipelineLayout({
    label: "Pipeline Layout",
    bindGroupLayouts: [
      this.bindGroupLayout
    ]
  })
}
private configurePipeline() {
  this.pipeline = this.device.createRenderPipeline({
    label: "Render Pipeline",
    layout: this.pipelineLayout,
    vertex: {
      module: this.vertexShader,
      buffers: [
        {
          // each vertex is 2 floats (x, y) of 4 bytes each
          arrayStride: 2 * 4,
          attributes: [
            // matches @location(0) in the vertex shader
            { shaderLocation: 0, offset: 0, format: "float32x2" }
          ]
        }
      ]
    },
    fragment: {
      module: this.fragmentShader,
      targets: [{ format: this.presentationFormat }]
    }
  })
}

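Something to take note of is that we now create an explicit layout for the pipeline instead of passing in the ‘auto’ keyword, which generates the layout automatically. An ‘auto’ layout is inferred from the bindings each pair of shaders actually uses, and bind groups created from it only work with that one pipeline. Since we rebuild the pipeline whenever new shaders are loaded (and our default shaders use no uniforms at all), an explicit layout keeps the colour uniform at a fixed binding that a single bind group can serve. Aside from that, we also describe the vertex buffer in the vertex stage of the pipeline. Now there are a lot of moving parts here but you can find out why we need to do this by reading this, this, and that article. 😁 Enjoy yourself.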
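To make the arrayStride and offset numbers less magical, here is a rough sketch of how they could be derived from a list of attribute formats. The helper buildVertexLayout and its types are hypothetical and not part of WebGPU or the Renderer class; it assumes attributes are tightly packed in @location order, and the format names and byte sizes are the standard float32 vertex formats.

```typescript
// Byte sizes of the float32 vertex formats this sketch knows about.
const SKETCH_FORMAT_SIZES = { float32: 4, float32x2: 8, float32x3: 12, float32x4: 16 } as const
type SketchFormat = keyof typeof SKETCH_FORMAT_SIZES

interface SketchAttribute { shaderLocation: number; offset: number; format: SketchFormat }
interface SketchLayout { arrayStride: number; attributes: SketchAttribute[] }

// Hypothetical helper: pack attributes one after another, with @location equal to the list index.
function buildVertexLayout(formats: SketchFormat[]): SketchLayout {
  let offset = 0
  const attributes = formats.map((format, shaderLocation) => {
    const attribute = { shaderLocation, offset, format }
    offset += SKETCH_FORMAT_SIZES[format] // the next attribute starts right after this one
    return attribute
  })
  // after the last attribute, offset is the size of one whole vertex
  return { arrayStride: offset, attributes }
}

// Our position-only vertex: one vec2f at @location(0)
const positionOnly = buildVertexLayout(["float32x2"])
console.log(positionOnly.arrayStride) // 8, the same as 2 * 4 in configurePipeline

// If a per-vertex vec4f colour were added later, it would start at byte 8
const withColor = buildVertexLayout(["float32x2", "float32x4"])
console.log(withColor.arrayStride, withColor.attributes[1].offset) // 24 8
```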

Rendering Loop

To lay the groundwork for more complex rendering, we’re gonna need to set up a rendering loop. This will allow us to add things like animation in due time, but baby steps, as they say. So let’s rewrite the init function to do this.

public async init() {
  await this.getGPUDevice()
  this.setupCanvasResizing()
  this.configCanvas()
  this.loadShaders()
  this.configureBuffers()
  this.configureBindGroup()
  this.configureRenderPassDescriptor()
  this.startAnimation(60, this)
}

Most of the functions should look familiar from the previous article, but we do now have a few new kids on the block, namely configureBuffers, configureBindGroup, and startAnimation. Let’s check them out.

private configureBuffers() {
  const vertices = new Float32Array([
    // X Y
    -0.5, -0.5,
    0.5, 0.5,
    -0.5, 0.5,
    -0.5, -0.5,
    0.5, -0.5,
    0.5, 0.5
  ])
  const fillColor = new Float32Array([1, 1, 1, 1])
  this.loadVertexBuffer(vertices)
  this.configFillColorUniform()
  this.setFillColor(fillColor)
}
public loadVertexBuffer(data: Float32Array) {
  this.vertexData = data
  this.vertexBuffer = this.device.createBuffer({
    label: "Vertex Buffer",
    size: this.vertexData.byteLength,
    usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST
  })
  // copy the vertex data from JavaScript memory into the GPU buffer
  this.device.queue.writeBuffer(this.vertexBuffer, 0, this.vertexData)
}
private configFillColorUniform() {
  this.fillColorBuffer = this.device.createBuffer({
    label: "Color Fill Uniform Buffer",
    // one vec4f: 4 floats of 4 bytes each
    size: 4 * 4,
    usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST
  })
}
public setFillColor(data: Float32Array) {
  if (data.length !== 4) {
    throw new Error("Data must have 4 elements")
  }
  data.forEach((entry) => {
    if (entry < 0 || entry > 1) {
      throw new Error("Data values must be between 0 and 1")
    }
  })
  this.fillColor = data
  this.device.queue.writeBuffer(this.fillColorBuffer, 0, this.fillColor)
}

The code should look pretty straightforward. Here, we’re asking the GPU to create a vertex buffer and a uniform buffer with explicit sizes: 16 bytes (i.e. 4 elements * 4 bytes/element) for the uniform buffer and 48 bytes (i.e. 12 elements * 4 bytes/element) for the vertex buffer. This is because memory allocation is not handled automatically in WebGPU, and we are responsible for allocating buffers and freeing them when they are no longer needed.
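If you want to double-check those byte counts, typed arrays can report them directly. This small sketch needs no GPU at all and reuses the same values as configureBuffers.

```typescript
// Same data as in configureBuffers: 6 vertices of (x, y) and one RGBA colour
const vertices = new Float32Array([
  -0.5, -0.5,   0.5, 0.5,   -0.5, 0.5,
  -0.5, -0.5,   0.5, -0.5,   0.5, 0.5,
])
const fillColor = new Float32Array([1, 1, 1, 1])

console.log(Float32Array.BYTES_PER_ELEMENT) // 4
console.log(vertices.byteLength)            // 48 = 12 elements * 4 bytes
console.log(fillColor.byteLength)           // 16 = 4 elements * 4 bytes
```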

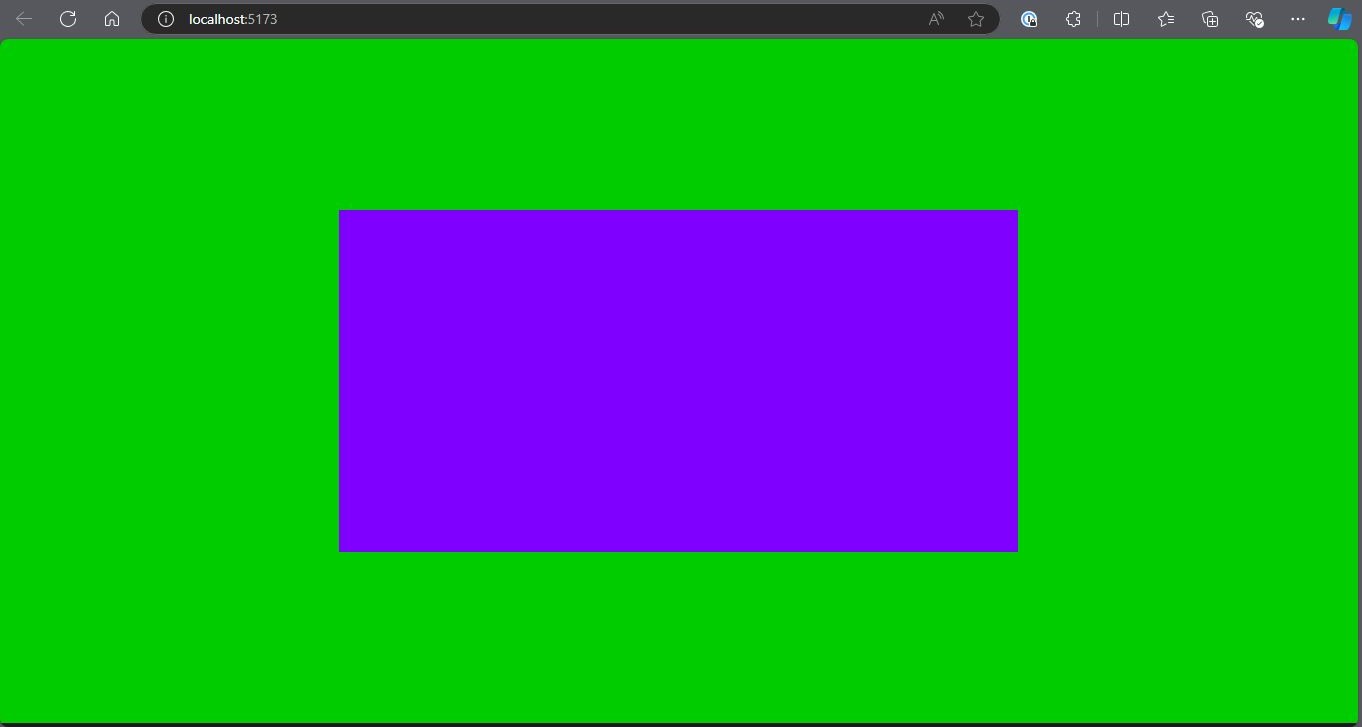

At the moment the default fill colour is all ones, which is white; we'll switch it to purple later through setFillColor. The vertex positions are symmetrical around the origin because we’re planning on rendering a square. An interesting thing you may notice is that some of the (x, y) pairs are duplicated. This is because our pipeline draws triangles (the default triangle-list topology), so to draw a square we need two triangles that share an edge.
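As a sketch of how the two triangles fit together, the function below builds the same six vertices for any axis-aligned rectangle. The name quadVertices is hypothetical and not part of the Renderer class; the vertex order simply mirrors the one used in configureBuffers.

```typescript
// Hypothetical helper: six (x, y) vertices, i.e. two triangles, covering a rectangle
// centred on (cx, cy). The diagonal from bottom-left to top-right is shared by both triangles.
function quadVertices(cx: number, cy: number, width: number, height: number): Float32Array {
  const left = cx - width / 2
  const right = cx + width / 2
  const bottom = cy - height / 2
  const top = cy + height / 2
  return new Float32Array([
    left, bottom,   right, top,      left, top,   // first triangle
    left, bottom,   right, bottom,   right, top,  // second triangle
  ])
}

const square = quadVertices(0, 0, 1, 1)
console.log(square.length / 2) // 6 vertices, the count that gets passed to draw()
```

The result could be handed to loadVertexBuffer in place of the hard-coded array.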

Let’s move on to the configureBindGroup function.

private configureBindGroup() {
  this.bindGroup = this.device.createBindGroup({
    label: "Bind Group",
    layout: this.bindGroupLayout,
    entries: [
      { binding: 0, resource: { buffer: this.fillColorBuffer } }
    ]
  })
}

This function creates our bind group which is the glue between our uniforms in GPU land and the Buffer we’ve created in TypeScript land. As you might notice, the fillColorBuffer is mapped to a binding value of 0. This corresponds to the @binding(0) value in our shaders.

Lastly, let’s set up our animation loop and connect all these moving parts.

private startAnimation(targetFps: number, renderer: Renderer) {
  // time of the last rendered frame, set on the first callback
  let start: number | undefined = undefined
  // keep the fraction: rounding 1000 / 60 up to 17 ms would skip frames on a 60 Hz display
  const targetFrameTime = 1000 / targetFps
  renderer.render()
  requestAnimationFrame(animate)
  function animate(timeStep: number) {
    if (start === undefined) {
      start = timeStep
    }
    const elapsed = timeStep - start
    if (elapsed >= targetFrameTime) {
      // measure the next frame from now, carrying over any overshoot
      // so the average rate stays close to the target
      start = timeStep - (elapsed % targetFrameTime)
      renderer.render()
    }
    requestAnimationFrame(animate)
  }
}
private render() {
  // the canvas provides a new texture each frame, so refresh the view on every color attachment
  for (const colorAttachment of this.renderPassDescriptor.colorAttachments) {
    if (colorAttachment?.view) {
      colorAttachment.view = this.context.getCurrentTexture().createView()
    }
  }
  const encoder = this.device.createCommandEncoder({ label: "render encoder" })
  const pass = encoder.beginRenderPass(this.renderPassDescriptor)
  pass.setPipeline(this.pipeline)
  pass.setVertexBuffer(0, this.vertexBuffer)
  pass.setBindGroup(0, this.bindGroup)
  // each vertex is 2 floats (x, y)
  pass.draw(this.vertexData.length / 2)
  pass.end()
  this.device.queue.submit([encoder.finish()])
}

This now looks a bit more complex, but we’re essentially using the requestAnimationFrame function to call the render function at roughly our target frame rate. Keep in mind that requestAnimationFrame fires at most once per display refresh, so the target acts as an upper limit. Aside from this, we’re also adding the vertex buffer and the bind group to our render pass. Lastly, we now call the draw function with the number of vertices in the vertex buffer.
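The frame-pacing decision can be separated from requestAnimationFrame, which makes it easy to check with made-up timestamps. The sketch below is one possible way to do it, not the code from the Renderer class; createFrameLimiter is a hypothetical name, and the modulo step is a common trick to carry over overshoot so the average rate stays near the target.

```typescript
// Hypothetical helper: returns a function that decides, for each timestamp (in ms),
// whether enough time has passed to render another frame.
function createFrameLimiter(targetFps: number): (timestamp: number) => boolean {
  const targetFrameTime = 1000 / targetFps
  let last: number | undefined = undefined
  return (timestamp: number): boolean => {
    if (last === undefined) {
      last = timestamp
      return true // always render the very first frame
    }
    const elapsed = timestamp - last
    if (elapsed < targetFrameTime) {
      return false // too early, skip this callback
    }
    // keep the remainder so late callbacks do not slow the average rate down
    last = timestamp - (elapsed % targetFrameTime)
    return true
  }
}

// Simulate callbacks arriving every 10 ms while targeting 50 fps (20 ms per frame)
const shouldRender = createFrameLimiter(50)
const rendered = [0, 10, 20, 30, 40, 50].filter((t) => shouldRender(t))
console.log(rendered) // [ 0, 20, 40 ]
```

In the browser, the returned function would be called with the timestamp that requestAnimationFrame passes to its callback, and render would only run when it returns true.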

Game Engine Wrapper

As you might now appreciate, there are a lot of moving parts going on in the rendering and for the potential game developer, they don’t need to care about this so let’s create a wrapper around all these things that will be visible to the developer.

Create a file called EngineCore.ts and add the following code.

import { Renderer } from "./Renderer"
export class gEngine {
  private constructor() { }
  // graphics context for drawing
  private static _Renderer: Renderer
  public static get GL() { return this._Renderer }
  public static async initializeWebGPU(htmlCanvasId: string) {
    document.body.style.backgroundColor = "black"
    const canvas = document.getElementById(htmlCanvasId) as HTMLCanvasElement
    if (!this._Renderer) {
      this._Renderer = new Renderer(canvas)
    }
    await this._Renderer.init()
  }
}

This sets up a global static class that initializes the renderer and also exposes the renderer’s public functions.

We also need to rewrite our main.ts file to use our new code structure.

import { main } from "./MyGame";

main()

In the MyGame folder, add the following to index.ts.

import { gEngine } from "../EngineCore";
import { SimpleVS, SimpleFS } from "../shaders";
export async function main() {
  await gEngine.initializeWebGPU("GLCanvas")
  gEngine.GL.loadShaders(SimpleVS, SimpleFS)
  gEngine.GL.setFillColor(new Float32Array([0.5, 0, 1, 1]))
}

As you can see, this is much cleaner code for the developer, and they need not worry about all the wizardry we had to do to get this to work. And now, BEHOLD your beautiful square.

🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔🤔

Some of you might be thinking, “That ain’t a square, Gift. Did you make a mistake?” Well, not quite. The issue is that our canvas is not square. Although clip-space coordinates range from -1 to +1 on both the horizontal and vertical axes, the canvas is wider than it is tall, so one unit covers more pixels horizontally than vertically and the final output looks like a rectangle. This isn’t a big issue and can be fixed by taking the canvas resolution into account, which we’ll see in a future article.
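To give a feel for what "taking the canvas resolution into account" could mean, here is a rough CPU-side sketch that scales the x coordinates by height / width. This is only an illustration under my own assumptions (the name correctAspect is hypothetical, and it assumes interleaved x, y data); it is not necessarily the approach the future article will take, where a transform passed through a uniform is the more usual route.

```typescript
// Hypothetical helper: squash x values so one clip-space unit covers the same
// number of pixels horizontally as it does vertically.
function correctAspect(vertices: Float32Array, canvasWidth: number, canvasHeight: number): Float32Array {
  const scaleX = canvasHeight / canvasWidth
  // vertices are interleaved as x, y, x, y, ... so even indices hold x values
  return vertices.map((value, i) => (i % 2 === 0 ? value * scaleX : value))
}

// On a 200x100 canvas, x values are halved while y values stay the same
const corrected = correctAspect(new Float32Array([0.5, 0.5, -0.5, -0.5]), 200, 100)
console.log(Array.from(corrected)) // [ 0.25, 0.5, -0.25, -0.5 ]
```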

Conclusion

🎉🎉 Congrats on finishing the article. Although the visuals might look underwhelming, we’ve learnt many powerful concepts about WebGPU such as buffers, bind groups, bind group layouts, the rendering loop, etc. I hope you enjoyed the process and in the next article, we’ll add more and more functionality to our engine as we continue to learn more about WebGPU and other things. 👋👋
