Automatically Deploy Your Resume Web Application to an AWS Static Website with a GitLab CI/CD Pipeline

ferozekhan

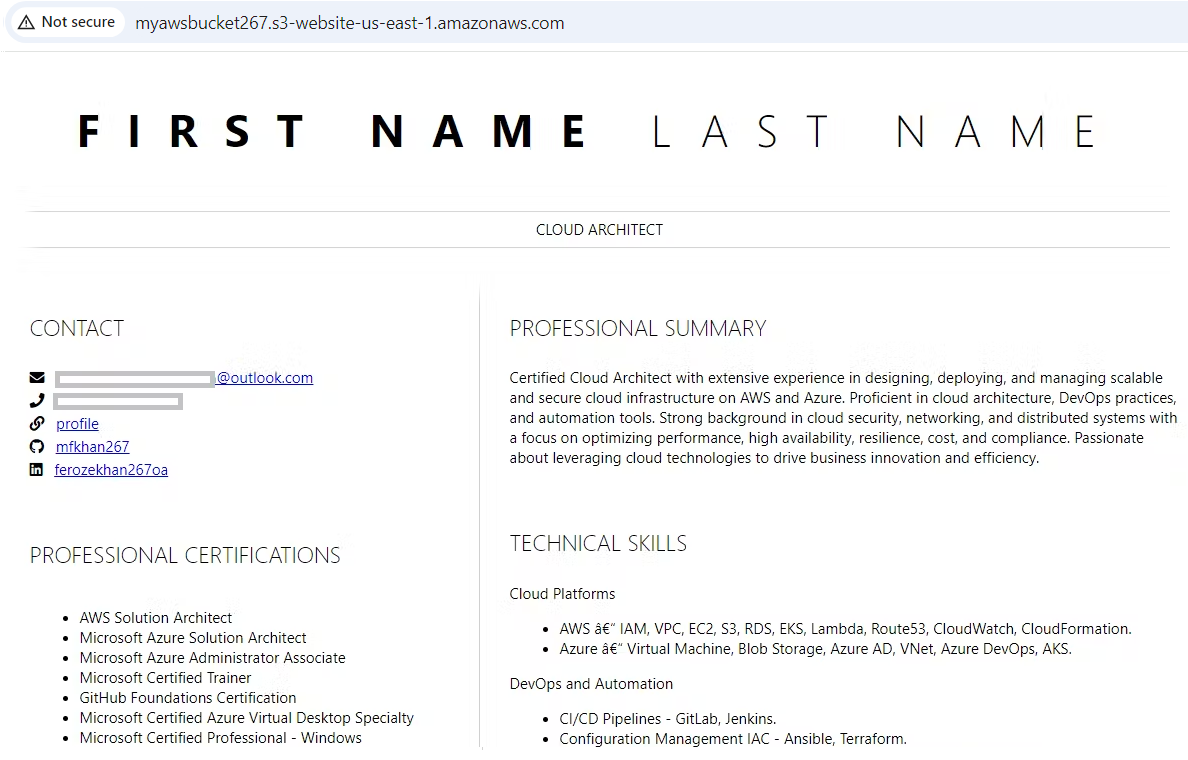

For this demo, I have already created a simple HTML-based Resume App, available in the GitLab repository linked below. Feel free to download the repository and modify it to suit your own requirements and profile. You may use any similar HTML project files to complete this mini project.

Link to the GitLab Repository

https://gitlab.com/static_website_267/aws_static_website.git

The Overview

The above diagram describes the whole process, from a developer pushing code changes to the point where the GitLab CI/CD Pipeline deploys the HTML content to an AWS S3 Bucket (Object Storage) that has Static Website Hosting enabled.

The developer updates the HTML files locally, and then commits and pushes the code changes to the version control system, which in our case is GitLab.

GitLab then executes the series of tasks defined in the CI/CD pipeline file, .gitlab-ci.yml.

The GitLab CI/CD Pipeline deploys the Resume Application static files (HTML, CSS, JavaScript) inside the web directory by uploading these files to an AWS S3 Bucket.

Once the static content has been uploaded to the AWS S3 Bucket, the Static Website should be updated with the latest changes.

Now that we understand the overall deployment flow, let's get started with the step-by-step instructions.

Since we will be deploying our Resume App to the AWS S3 Bucket, let us first create a new AWS S3 Bucket with Static Website Hosting enabled.

Static websites deliver HTML, JavaScript, images, video and other files to your website visitors. Static websites are very low cost, provide high levels of reliability, require almost no IT administration, and scale to handle enterprise-level traffic with no additional work.

You may find more details on Static Website Hosting HERE

CREATE THE AWS S3 BUCKET

An AWS S3 Bucket contains all of your website files, such as HTML, JavaScript, images, and video, that are served to your website visitors as static web pages. The AWS S3 Bucket provides a unique namespace for your objects, which are accessible from anywhere in the world over HTTP or HTTPS. Data in your AWS S3 Bucket is durable, highly available, secure, and massively scalable.

Creating an AWS S3 Bucket or Using an Existing AWS S3 Bucket

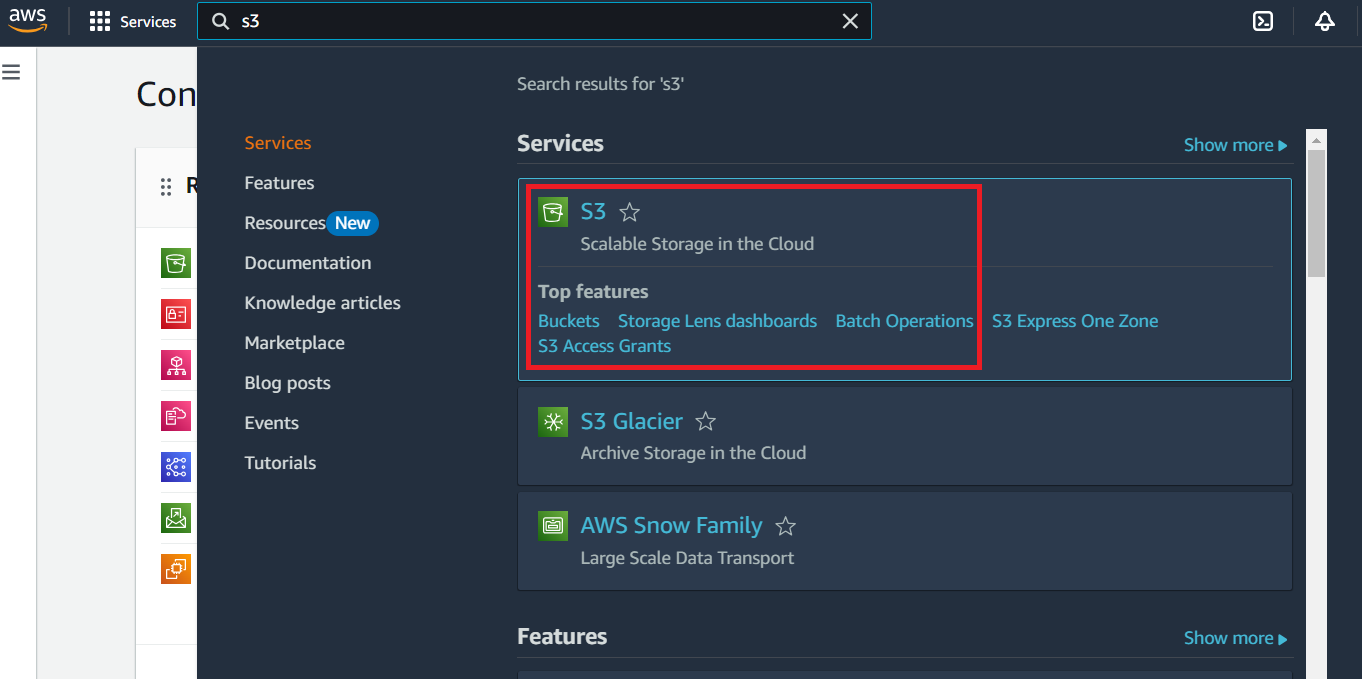

Sign in to the AWS Management Console and open the Amazon S3 console at https://console.aws.amazon.com/s3/

In the navigation bar in the upper right corner, choose the Region where you want to create the bucket. Choose a Region that is geographically close to you to minimize latency and costs, or to address regulatory requirements. The Region that you choose determines your Amazon S3 website endpoint. Then click Create Bucket.

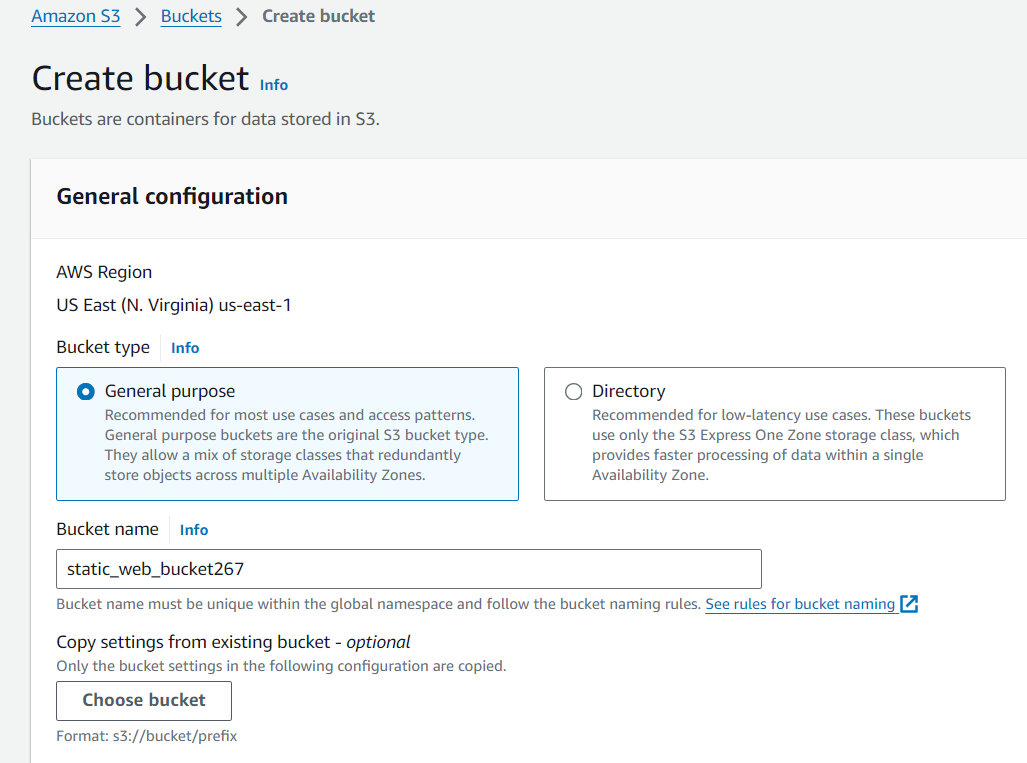

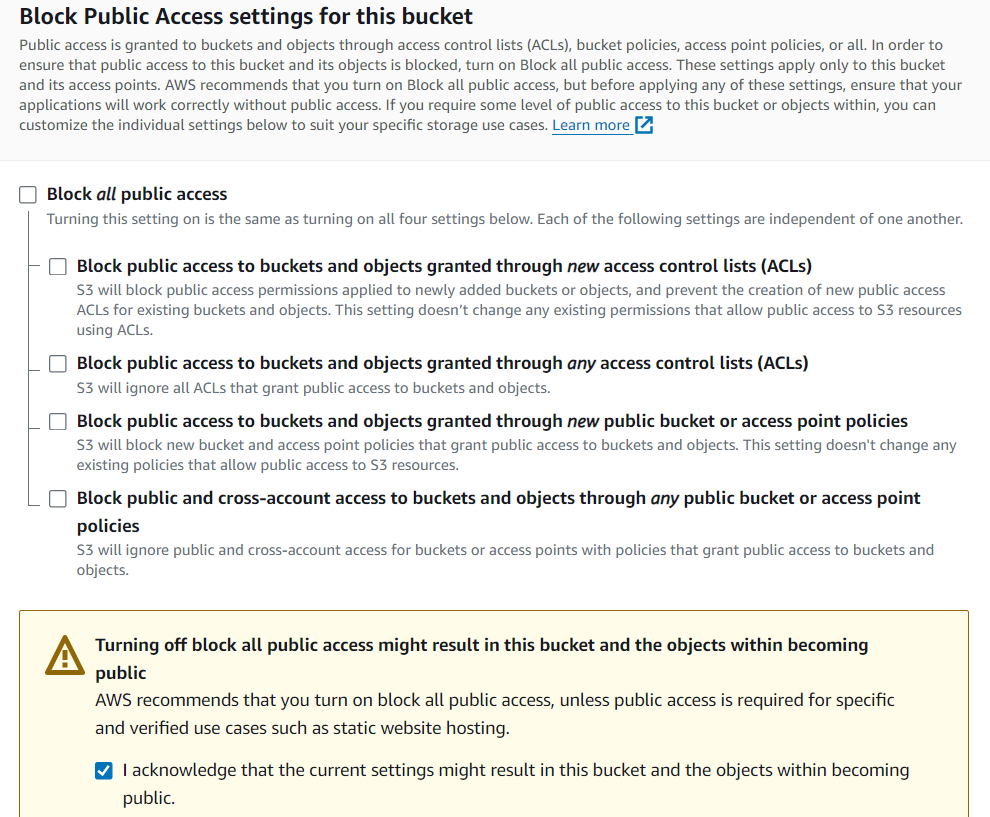

Give your S3 Bucket a globally unique name (myawsbucket267 in this demo) » uncheck Block all public access » acknowledge the public access permissions.

Click Create Bucket

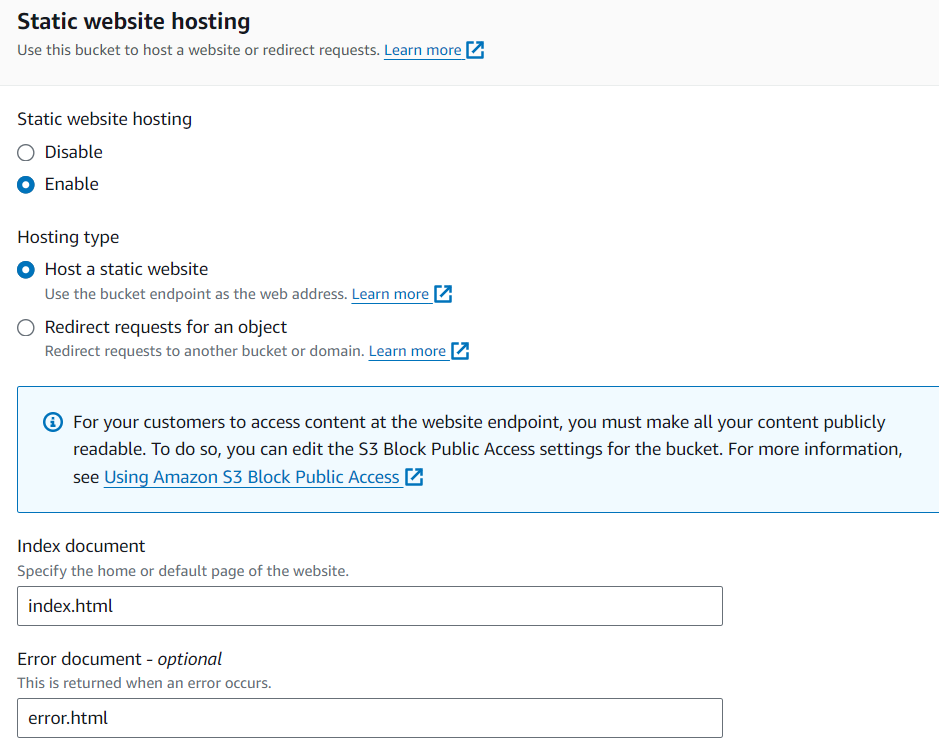

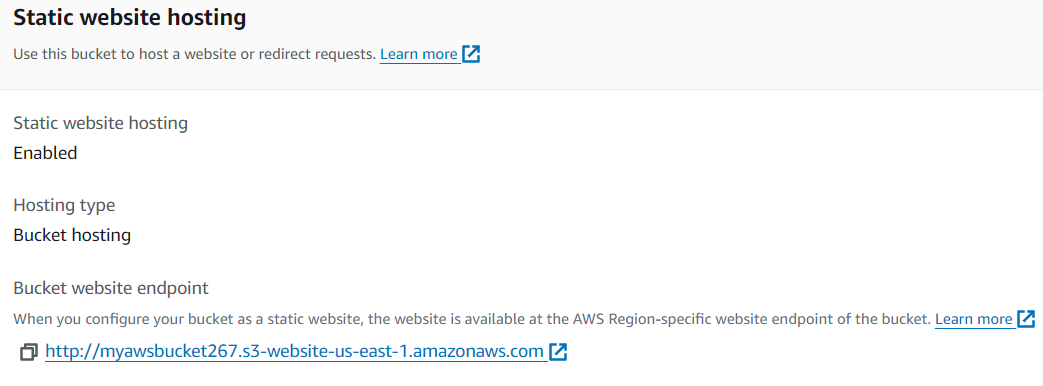

Configuring Static Website Hosting

Static website hosting is a feature that you have to enable on the AWS S3 Bucket.

Go to the S3 service » Select Buckets in the left-hand menu » Select the Bucket that you created in the step above » Open the Properties tab » Scroll down to the Static website hosting section » Click Edit » Enable » Select Host a static website » Enter index.html and error.html for the index document and error document respectively » Click Save changes.
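The console settings above correspond to a small website configuration document in the S3 API. The Python sketch below only builds that document; the helper name `website_configuration` is hypothetical and not part of the original project. The resulting JSON is the kind of input one could pass to `aws s3api put-bucket-website --website-configuration` instead of clicking through the console.

```python
import json


def website_configuration(index_document="index.html", error_document="error.html"):
    """Return a static website configuration with an index and an error document.

    The helper name and defaults are illustrative assumptions; the defaults
    mirror the values entered in the console steps of this article.
    """
    return {
        "IndexDocument": {"Suffix": index_document},
        "ErrorDocument": {"Key": error_document},
    }


if __name__ == "__main__":
    # Print the configuration as JSON so it can be saved to a file.
    print(json.dumps(website_configuration(), indent=2))
```

Keeping the configuration in a file alongside the project makes the bucket setup repeatable, though the console steps above are all this demo requires.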

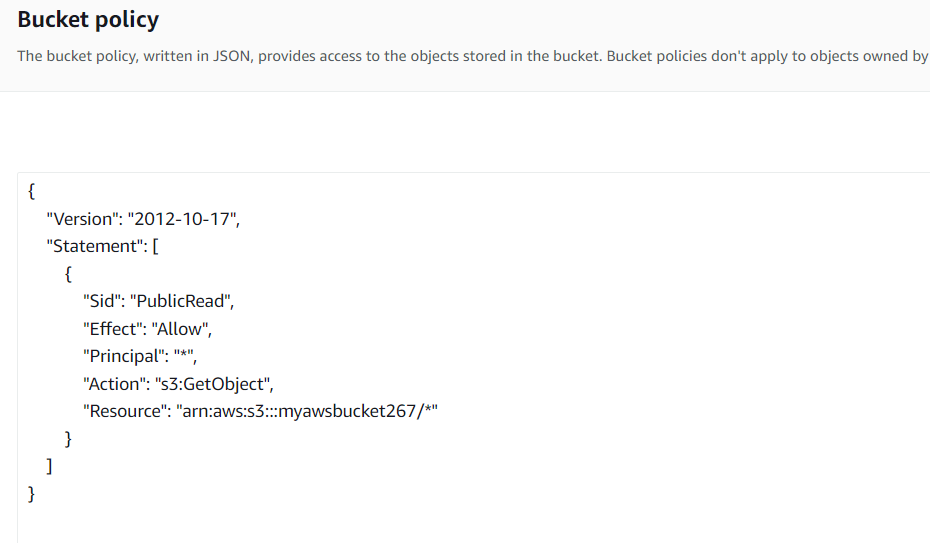

On the bucket properties page, select the Permissions tab, go to the Bucket policy section, click Edit, and copy and paste the JSON content below. Be sure to replace myawsbucket267, the name of my demo bucket, with the actual name of your S3 Bucket. The policy below enables public read access to all objects within the S3 bucket.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "PublicRead",
          "Effect": "Allow",
          "Principal": "*",
          "Action": ["s3:GetObject"],
          "Resource": ["arn:aws:s3:::myawsbucket267/*"]
        }
      ]
    }
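Since the only part of this policy that changes between readers is the bucket name, it can help to generate the JSON rather than edit it by hand. The following is a minimal Python sketch under that assumption; the function name `public_read_policy` is hypothetical and not part of the original repository.

```python
import json


def public_read_policy(bucket_name):
    """Build the public-read bucket policy shown above for the given bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicRead",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject"],
                # The trailing /* applies the statement to every object in the bucket.
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # myawsbucket267 is the demo bucket from this article; use your own name.
    print(json.dumps(public_read_policy("myawsbucket267"), indent=2))
```

The printed JSON can be pasted into the Bucket policy editor exactly as described in the step above.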

Creating Access Keys for making programmatic API calls to AWS

We will use an Access Key and a Secret Access Key to make programmatic calls to AWS. These keys work with the AWS CLI, AWS Tools for PowerShell, AWS SDKs, or direct AWS API calls.

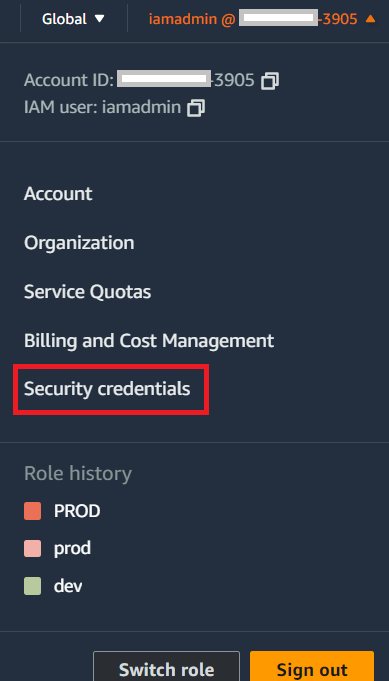

To create AWS Access Keys, choose your user name in the navigation bar on the upper right, and then choose Security credentials. Go to the Access Keys section and select create access keys. Make a note of the Access Key and Secret Access Key values; we will need them later in the GitLab Variables section of this mini project. You may also download the CSV file for later use.

For detailed steps on creating access keys, you may refer to the AWS Documentation HERE.

GITLAB VARIABLES

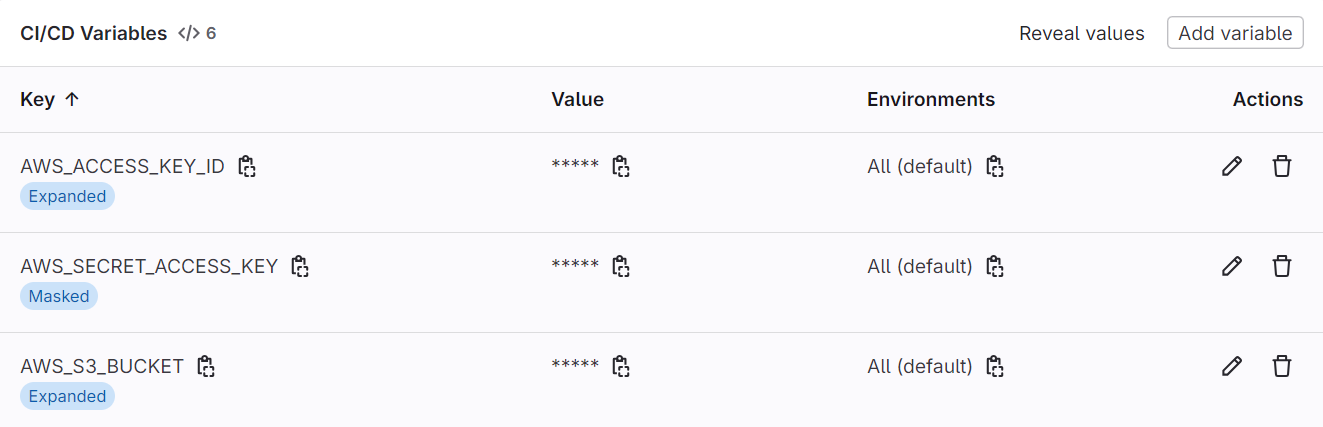

Before we discuss the GitLab pipeline, let us first configure a few variables that are used during the execution of the pipeline. We will need the following variables: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_S3_BUCKET, which need to be defined as project-specific variables in the GitLab UI. This can be done under Settings » CI/CD » Variables for the project repository.

AWS_ACCESS_KEY_ID is the Access Key from the previous step.

AWS_SECRET_ACCESS_KEY is the Secret Access Key from the previous step.

AWS_S3_BUCKET is the Bucket Name from the bucket creation steps.

Note that the AWS_SECRET_ACCESS_KEY variable is set as Masked and will not show up in the CI/CD logs.
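GitLab exposes these project variables to the job as environment variables, so a missing or empty one usually only surfaces as an authentication error later. The Python sketch below is one way to check for them up front; it is not part of the original pipeline, the name `missing_variables` is hypothetical, and it assumes an environment where Python is available (for example, your local machine).

```python
import os

# The three variables this article defines in the GitLab UI.
REQUIRED_VARIABLES = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_S3_BUCKET")


def missing_variables(environ):
    """Return the required variable names that are unset or empty in `environ`."""
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_variables(os.environ)
    # Report only the names, never the values, so secrets stay out of the logs.
    print("Missing CI/CD variables:", ", ".join(missing) if missing else "none")
```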

GITLAB CI/CD PIPELINE

Let us now configure the CI/CD pipeline on GitLab. GitLab provides a simple way to configure CI/CD for each repository: all the steps are defined in the .gitlab-ci.yml file.

https://gitlab.com/static_website_267/aws_static_website/-/blob/main/.gitlab-ci.yml

The GitLab repository linked above contains the static files for the Resume App along with the .gitlab-ci.yml pipeline file.

Section 1: The pipeline contains only one stage, "deploy".

    stages:
      - deploy

Section 2: Defines the deploy job, restricts it to the default branch, and refers to the Docker image from AWS that already has the necessary tools, such as the AWS CLI, installed. We selected this image because the job needs to authenticate to AWS and manage the AWS S3 Bucket and the objects within it.

    Deploy to S3:
      stage: deploy
      rules:
        - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      image:
        name: amazon/aws-cli:2.17.57

Uploading files to an AWS S3 Bucket is easy with the AWS CLI and requires the following components:

An AWS S3 Bucket (already created in the steps above).

AWS Access Keys, which are used for authentication and authorization to AWS Services, including the AWS S3 Bucket.

When GitLab runs the pipeline, it first clones the repository at the branch to which the commit was pushed, so the job can work directly with the project files.

The commands in the job script do the following:

The AWS CLI automatically picks up the Access Keys from the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY variables. If the variables exist and hold correct values, the AWS CLI is able to authenticate to AWS.

Upload files from the ${CI_PROJECT_DIR}/web directory to the S3 Bucket.

That is all we need to do to set up a fully automated GitLab CI/CD Pipeline that runs every time new changes to your Resume App are committed and pushed to the GitLab Repository, and deploys them to your Static Website. The final .gitlab-ci.yml file should look like this:

    stages:
      - deploy

    Deploy to S3:
      stage: deploy
      rules:
        - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      image:
        name: amazon/aws-cli:2.17.57
        entrypoint: [""]
      script:
        - aws --version
        - aws s3 ls
        - aws s3 sync web s3://$AWS_S3_BUCKET --delete
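The `aws s3 sync web s3://$AWS_S3_BUCKET --delete` step does more than a plain upload: it copies new or changed files and, because of `--delete`, removes objects from the bucket that no longer exist in the web directory. The Python sketch below is a simplified model of that behaviour, not the AWS CLI's actual implementation; it compares file sizes only (the real command also considers modification times), and the function name `plan_sync` and the sample file names are hypothetical.

```python
def plan_sync(local_files, remote_objects):
    """Return (to_upload, to_delete) for a simplified sync with deletion.

    Both arguments map a relative path to a size in bytes.
    """
    # Upload anything missing from the bucket or whose size differs.
    to_upload = sorted(
        path for path, size in local_files.items()
        if remote_objects.get(path) != size
    )
    # With --delete, objects that no longer exist locally are removed.
    to_delete = sorted(path for path in remote_objects if path not in local_files)
    return to_upload, to_delete


if __name__ == "__main__":
    local = {"index.html": 2048, "css/style.css": 512}
    remote = {"index.html": 1024, "old.html": 300}
    print(plan_sync(local, remote))
```

In this example, index.html and css/style.css would be uploaded and old.html would be deleted, which is why a file removed from the repository also disappears from the website after the next pipeline run.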

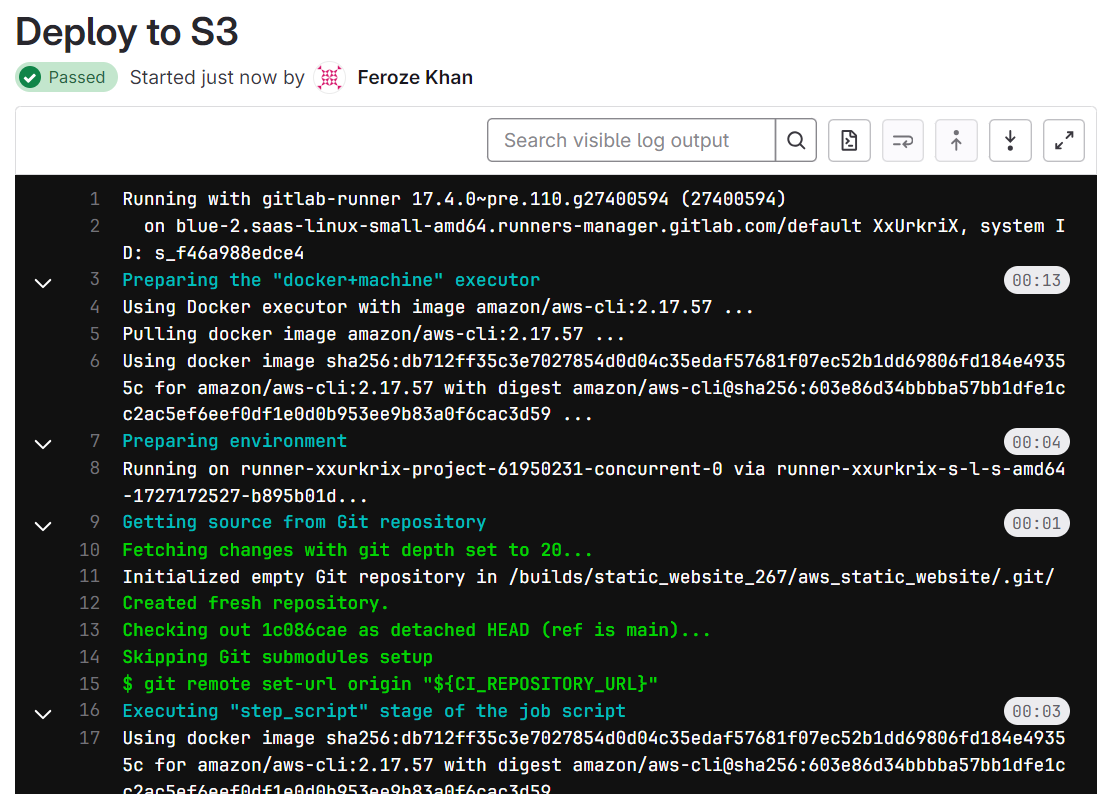

Let us now commit the YAML File to the GitLab repository and see the pipeline get executed.

If the pipeline was successful, you should see the Static Website updated with the latest changes. Let us fetch the bucket website endpoint of our Resume Website from the AWS Management Console (it is shown in the Static website hosting section under the bucket's Properties tab) and open it in a browser of your choice.

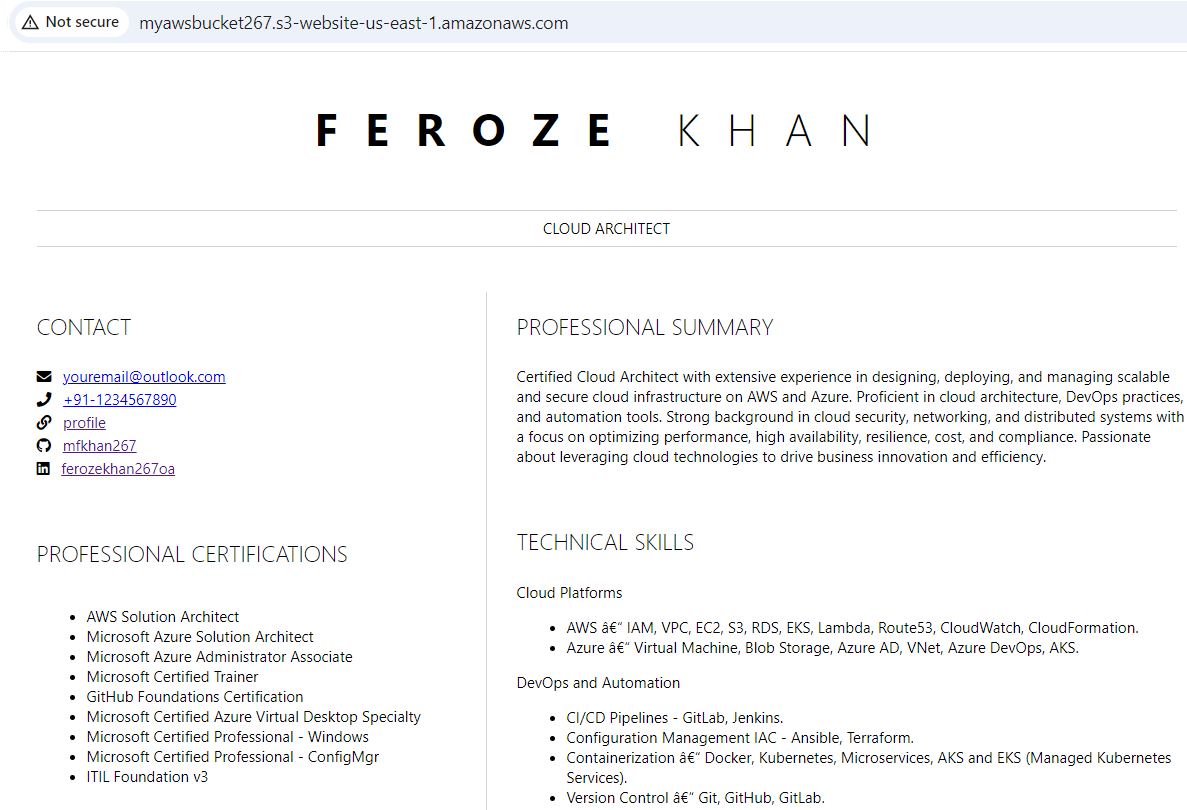

I will now replace the FIRST NAME and LAST NAME placeholders with a real name in the index.html file and commit the changes. This will trigger the GitLab pipeline and deploy the changes to the AWS S3 Bucket, thereby updating my Resume App hosted as a static website on the AWS S3 Bucket.

Let us browse the website endpoint once again.

There you have it! The changes have been successfully deployed automatically with the GitLab CI/CD Pipeline.

That's all folks. Hope you enjoyed the mini project with GitLab CI/CD, AWS S3 Bucket, and AWS Static Website Hosting. Kindly share with the community. Until I see you next time. Cheers !
