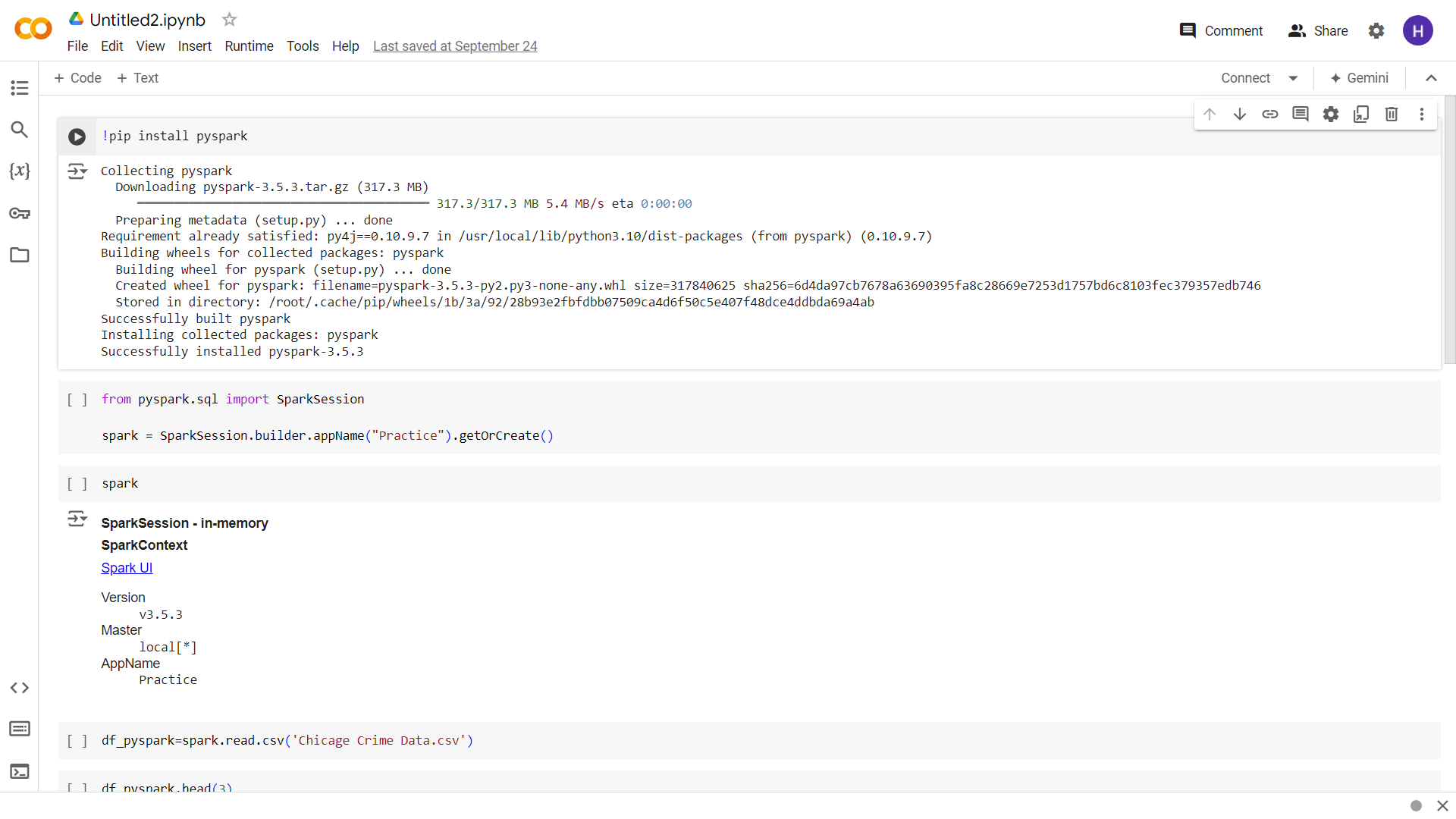

Installing dependencies for learning PySpark

Harvey Ducay


I ran into a lot of issues installing PySpark locally, and even with help from online forums such as Stack Overflow, I still wasn’t able to fix the dependency problems that kept me from running it. So I told myself that maybe this is the time to start using a cloud environment such as Google Colab for learning, or even for testing some production-ready deployments. At least with Google Colab, I think I’ll be much closer to where I’ll eventually be deploying: GCP…
