What LLMs for Coding Should Actually Look Like

Sotiris Kourouklis

I’ve been using LLMs since the first day they were officially released. I say "officially" because other solutions existed even before GitHub Copilot and ChatGPT, but they didn’t work very well.

Now, heading into the end of 2024, we have Cursor. Let’s be real: if you’ve tried Cursor, you can’t go back to regular Copilot. Yet all of these LLMs actually make you a terrible coder.

I think that good LLMs don’t have a future; in the end, only the bad ones will survive. What do I mean by that? Let’s take a look.

Lose Touch With The Codebase

If you start working on a new project that you want to complete quickly and it is not very hard or complex, LLMs can help you by generating code very fast. These types of projects are usually small SPAs, 4-5 page React applications, or simple APIs where you don't really care about code quality because the project is very simple.

When you get to actual programming, LLMs make you lose track of your codebase. Before LLMs, we might spend a couple of days just looking at the codebase to understand how it works. Now we just hit tab, throw the errors back to the LLM, and hit tab again.

Eventually, after a few tries, we get the code working, but we have no actual idea how it works. If we do, we only understand part of the code. I tried this with Cursor on some of my applications that have relatively large codebases, and after a few hours, I lost track of what I did. Yes, things worked, but do I know how? No, and I have no idea whether the quality of the code is any good.

For simple tasks, that might not be a problem. But when you want real solutions that perform well and use the right data structures or design patterns, and you also want to understand how they work, LLMs start to create problems.

LLMs Should Migrate Into Code Autocomplete

With great power comes great responsibility, and that applies to the LLMs currently available. I don’t want to only discuss the problem; for whatever I write, I want to propose solutions as well.

Only Suggest Repeatable Code or Methods

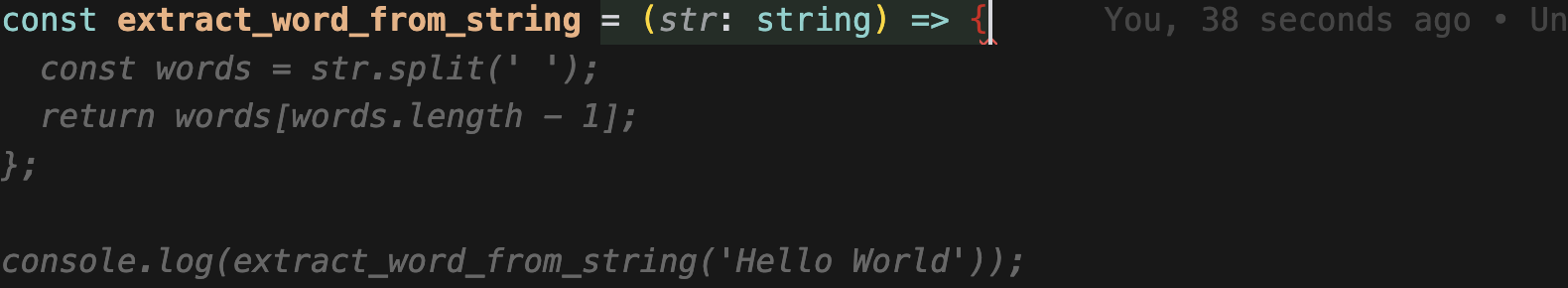

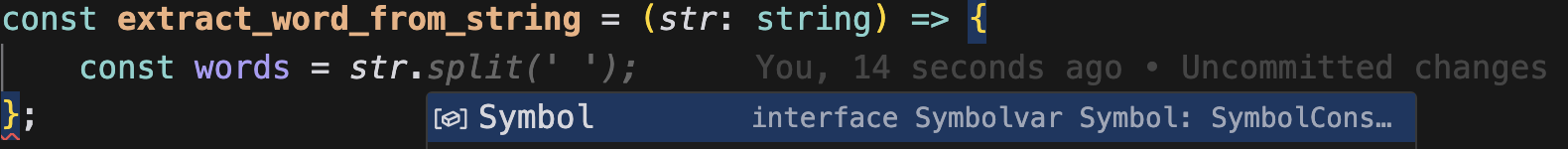

Now even GitHub Copilot suggests everything. You just write the name of a function, for example extract_word_from_string, and it autocompletes the whole function.

This should not be the case. The tool should not suggest the entire function, only methods or repeatable code. Let’s take a look at two examples.

Below we see what should happen with the extract_word_from_string function. You write the whole function yourself, and the tool suggests only the method to call on str. This way, you don’t lose track of what you are doing, and you actually learn to code as well.
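To make the idea concrete in text form, here is a minimal sketch. It is a hypothetical TypeScript rendering of extract_word_from_string, and it assumes the function returns the word at a given position, which the article does not specify. Everything is typed by hand except the single method call marked in the comment, which is the only thing the assistant would offer.

```typescript
// Hypothetical TypeScript version of the article's extract_word_from_string.
// Assumption: the function returns the word at position `index`.
function extractWordFromString(str: string, index: number): string | undefined {
  // Typed by hand up to `str.trim().`; the assistant suggests only `split(/\s+/)`.
  const words = str.trim().split(/\s+/);
  // Typed by hand: out-of-range positions yield undefined.
  return words[index];
}

console.log(extractWordFromString("hello brave new world", 2)); // prints "new"
```

The signature, the trimming, and the indexing are all yours; the tool only saves you a trip to the docs for the method name.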

Even senior programmers working in a new language will never really learn it if they don’t spend time writing out its syntax and default API methods themselves. They might complete simple tasks, but deeper knowledge of the language will never come.

Repeatable Code

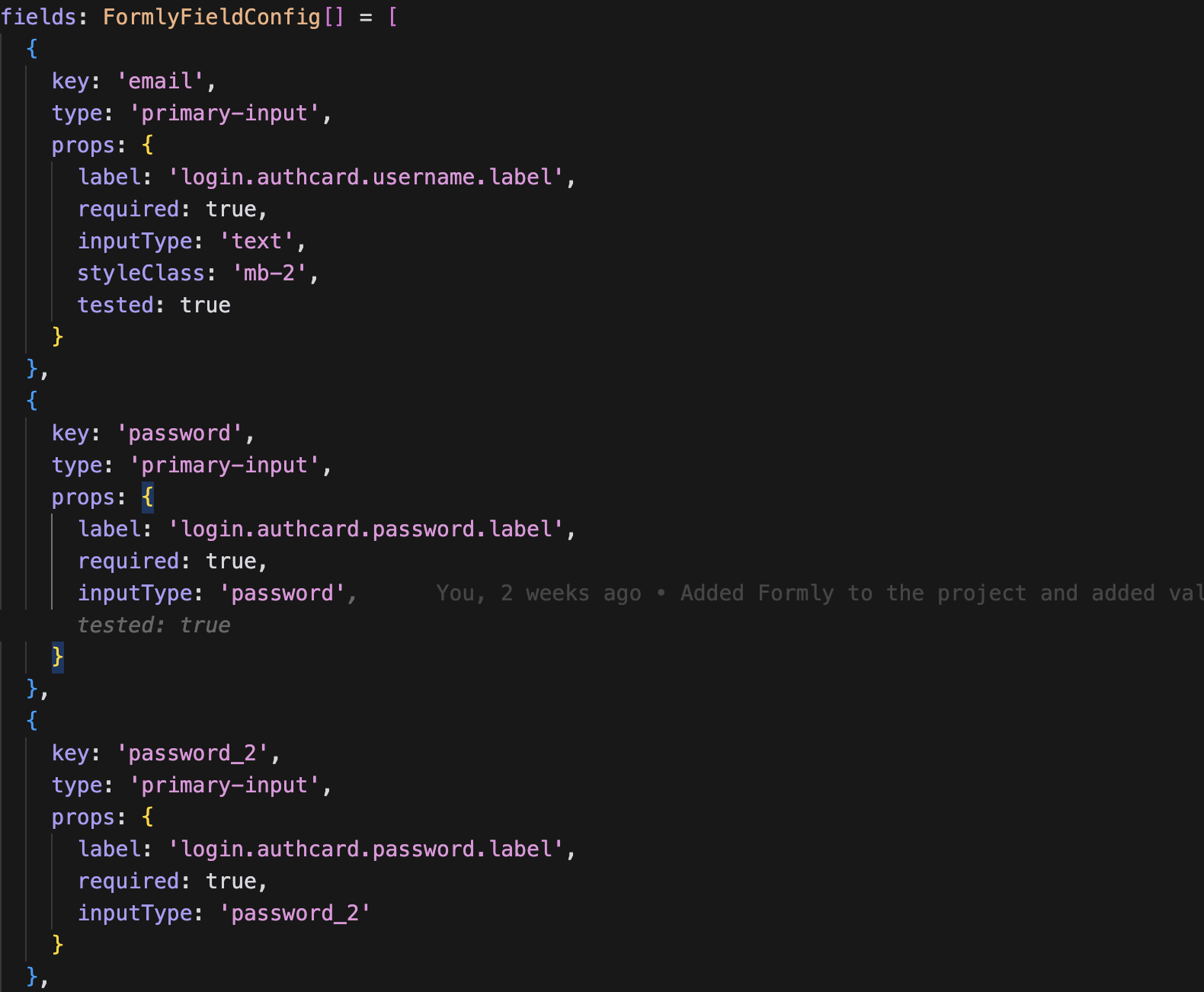

Now, another case that should be suggested by LLMs is when you are repeating yourself. Let’s look at an example with an array.

Below we have a TypeScript array. I’ve added tested: true on the email input, and the LLM suggested adding tested on the password as well. In this case, the suggestion is really helpful, because the array in our real code might be much larger, with 10 or 20 elements.
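A rough text version of that situation might look like the sketch below. Only the tested flag and the email and password inputs come from the example above; the FormField interface and its other properties are assumptions added so the snippet type-checks on its own.

```typescript
// Hypothetical field shape; only `tested` and the two inputs come from the article.
interface FormField {
  label: string;
  type: string;
  tested?: boolean;
}

const fields: FormField[] = [
  // Typed by hand, including `tested: true`.
  { label: "email", type: "email", tested: true },
  // After the first entry, the assistant suggests repeating `tested: true` here.
  { label: "password", type: "password", tested: true },
];

console.log(fields.every((field) => field.tested === true)); // prints true
```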

What LLMs for Coding Should Look Like

This is more of an AI app idea, and it should help you understand what a healthy coding environment with LLMs looks like.

As I mentioned above, the auto-suggest feature should work only for methods and for cases where you are repeating yourself. There should also be an option to toggle between full auto-completion and partial auto-completion.
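One way such a toggle could work is sketched below. All of the names (CompletionMode, SuggestionKind, filterSuggestions) are hypothetical and do not correspond to any real editor API; the sketch only assumes that each suggestion can be labeled by what kind of code it would write.

```typescript
// All names here are hypothetical; no real editor exposes this exact API.
type CompletionMode = "full" | "partial";
type SuggestionKind = "method-call" | "repeated-pattern" | "whole-function";

interface Suggestion {
  kind: SuggestionKind;
  text: string;
}

// In "partial" mode, drop anything that would write a whole function for you.
function filterSuggestions(suggestions: Suggestion[], mode: CompletionMode): Suggestion[] {
  if (mode === "full") {
    return suggestions;
  }
  return suggestions.filter((s) => s.kind !== "whole-function");
}

const candidates: Suggestion[] = [
  { kind: "method-call", text: "split(/\\s+/)" },
  { kind: "repeated-pattern", text: "tested: true" },
  { kind: "whole-function", text: "function extractWordFromString() { /* ... */ }" },
];

console.log(filterSuggestions(candidates, "partial").length); // prints 2
console.log(filterSuggestions(candidates, "full").length); // prints 3
```

Keeping the mode as a single switch means the developer decides how much help to accept, rather than the tool deciding for them.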

The sidebar chat should not be a simple chat where you ask questions. Instead, it should be AI-enhanced documentation that understands your current file and provides docs from verified authorities like Stack Overflow or official language docs, not just some random guy's docs from a GitHub Gist written 4 years ago.
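As a rough illustration of the "verified authorities" idea, the sketch below keeps only documentation results whose host is on an allowlist. The DocSource shape, the onlyTrusted function, and the specific hosts in the list are assumptions made for the example; a real tool would need a curated and maintained list.

```typescript
// Hypothetical shape for a documentation result shown in the sidebar.
interface DocSource {
  title: string;
  host: string; // for example "stackoverflow.com"
}

// Illustrative allowlist only, not a recommendation of what a real tool should trust.
const TRUSTED_HOSTS: string[] = [
  "stackoverflow.com",
  "doc.rust-lang.org",
  "www.typescriptlang.org",
];

// Keep only results that come from an allowlisted host.
function onlyTrusted(sources: DocSource[]): DocSource[] {
  return sources.filter((source) =>
    TRUSTED_HOSTS.some((trusted) => trusted === source.host)
  );
}

const results: DocSource[] = [
  { title: "Official Rust docs page", host: "doc.rust-lang.org" },
  { title: "Four-year-old snippet", host: "gist.github.com" },
];

// Keeps only the doc.rust-lang.org entry.
console.log(onlyTrusted(results).map((r) => r.title));
```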

You should not be able to type anything in the chat at all. If you want to chat, just open ChatGPT.

Best Practices Recommendations

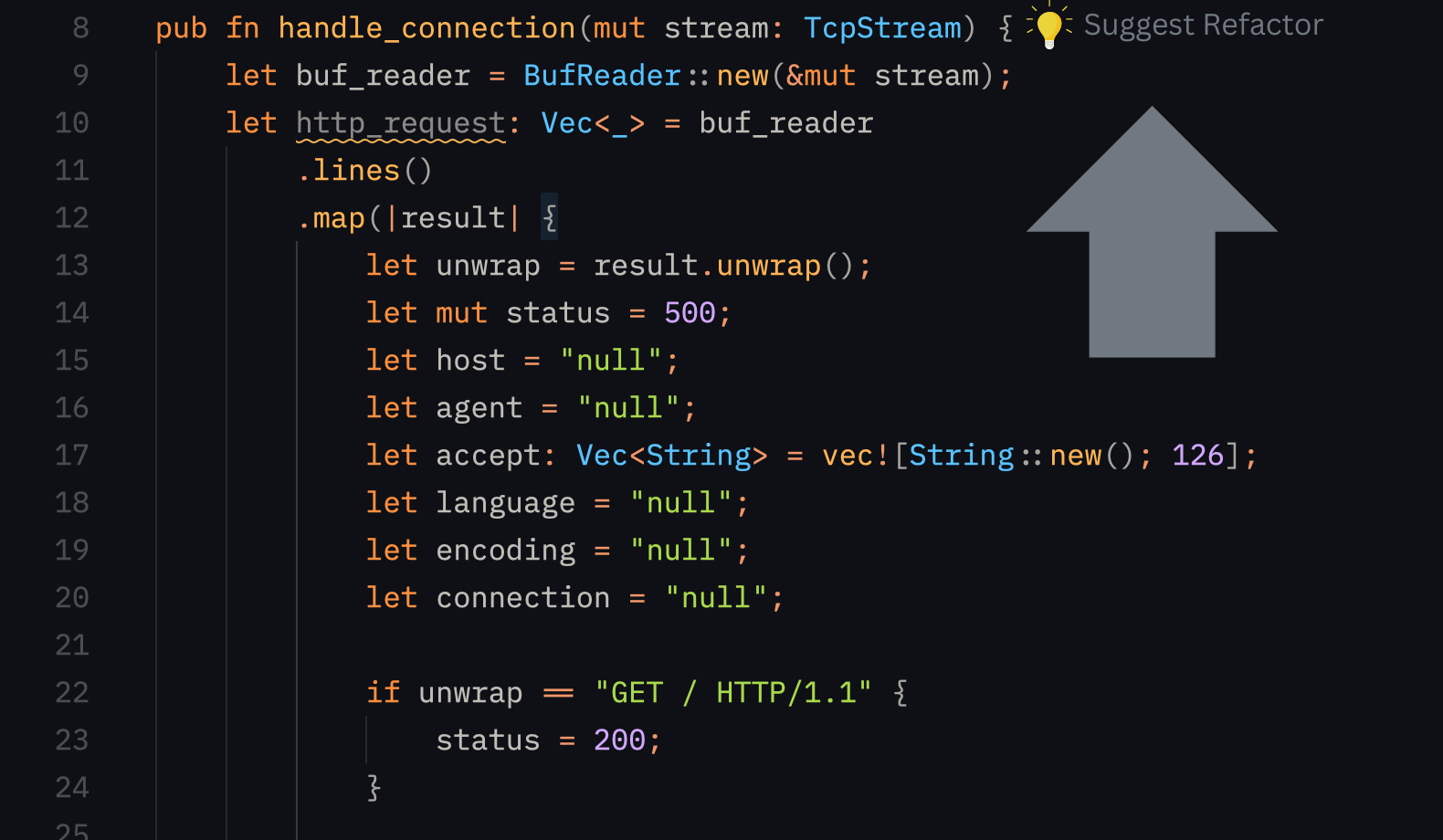

Refactoring is a huge aspect of programming. Writing code is not a problem; writing good code is the problem. As you can see below, I have some Rust code for handle_connection. The editor should offer a refactoring suggestion, or a code-improvement button, only when needed, and it should show how to make your code better by applying best practices.

In a nutshell

Companies believe that the less code you write, the better their LLM is, but this ends up feeding their AI garbage. It’s like God trying to make humans to replace Him; this is not possible and is a completely wrong idea. The same applies to LLMs: we should not work for LLMs but with them, and use them as a sidekick that helps us learn to write better code.

The ultimate goal is not to write more code, but to make the engineer better at his job. Computer intelligence can never surpass human intelligence. Anyone with a functioning brain should understand that. There is something unique to humans that cannot be replaced.

It is called a soul, which God put there to make us what we are. Good code and good ideas do not come from the brain but from the heart, and this is where the soul lives.

Thanks for reading, and I hope you found this article helpful. If you have any questions, feel free to email me at x@sotergreco.com, and I will respond.

You can also keep up with my latest updates by checking out my X here: x.com/sotergreco

Written by

Sotiris Kourouklis

Software Engineer, sharing daily programming insights and articles - Orthodox Christian 👑