Complexities in DSA

Kingsley Ihemelandu

Kingsley Ihemelandu

What are Complexities

One of the main reasons for Data Structures and Algorithms (DSA), is to solve problems effectively and efficiently. To determine the efficiency of a program, we look at two types of complexities:

Time Complexity: This tells us how much time our code takes to run.

Space Complexity: This tells us how much memory our code uses.

Types of Complexities

Constant (1)

Logarithmic (log n)

Linear (n)

Quadratic (n²)

Factorial (n!)

Exponential (c^n)

Asymptotic Notation

To compare efficiencies of algorithms, we use what we call asymptotic notation, a mathematical tool that estimates time based on input size without running the code. It focuses on the number of basic operations in the program.

As stated above, the time is based on input size and not on the actual time it takes to process the information. This is because using the actual time won’t be fair, as different users have different hardware, different applications running or even different programming languages: which will definitely affect the speed of the operation.

There are multiple Asymptotic Notations which include (but not limited to):

Big-O (O)

Omega (Ω)

Theta (θ)

Little-o

Little-omega

For this article, we are only going to be looking at the three major ones which are:

Big-O

Omega

Theta

Worst Case - Big-O notation

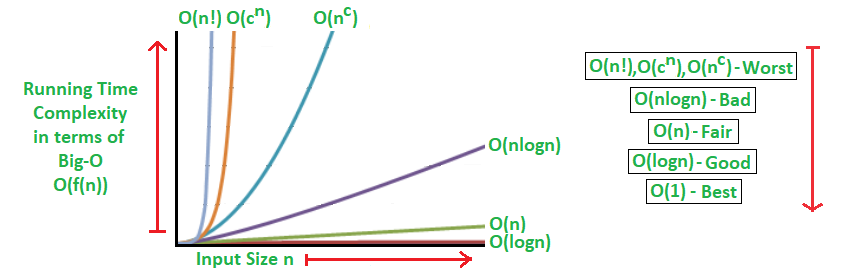

The most commonly used notation for code analysis is Big O Notation, providing an upper limit or upper bound on the running time or memory usage concerning the input size.

You may ask, why start with the worst case scenario? Well that is because, when designing systems, you will want to go with a solution where even at the worst possible case, it still performs well.

Mathematically, the Big-O notation is expressed as:

O (g(n)) = {f(n): there exist positive constants c and n0 such that f(n) ≤ c\g(n) for all n ≥ n0}.*

This means that

f(n) = O(g(n)), If there are positive constants n0 and c such that, to the right of n0 the f(n) always lies on or below c\g(n).*

By using big O- notation, we can asymptotically limit the expansion of a running time to a range of constant factors above and below. It is a model for quantifying algorithm performance.

Best Case - Omega

Omega notation represents the lower bound of the runtime of an algorithm. It gives the best-case complexity of an algorithm.

It is defined as the condition that allows an algorithm to complete statement execution in the shortest amount of time.

Mathematically, this is represented as:

Ω (g(n)) = {f(n): there exist positive constants c and n0 such that 0 ≤ c\g(n) ≤ f(n) for all n ≥ n0}.*

This means that,

This means that, f(n) = Ω(g(n)), If there are positive constants n0 and c such that, to the right of n0 the f(n) always lies on or above c*g(n).

Average Case - Theta Notation

Mathematically, it is represented as

Θ (g(n)) \= {f(n): there exist positive constants c1, c2 and n0 such that 0 ≤ c1 g(n) ≤ f(n) ≤ c2 g(n) for all n ≥ n0}

This means,

f(n) = Θ(g(n)), If there are positive constants n0 and c such that, to the right of n0 the f(n) always lies on or above c1*g(n) and below c2*g(n).

Steps To Calculate Complexity in Big-O

Sum up all the complexities of the operation.

Find the fastest growing term in the function.

If the fastest growing term has a coefficient, remove it.

Note the following:

Assignments have constant time O(1)

Return statements have constant time.

Mathematical operations like addition, subtraction, division and multiplication all have constant times: O(1).

Loops have linear time O(n).

When there is an operation in a loop, the time complexity of the loop is n*(complexity of inner operation). For this reason, if you have a for loop inside another for loop, the time complexity will be n*n=n². That will give a quadratic time complexity: O(n²).

As time goes on and as we look at different data structures and algorithms, we will analyze the complexities of the different operations that can be done on these data structures and the time complexities of different algorithms as well.

For a more comprehensive guide to complexities, you can check out this article on GFG. https://www.geeksforgeeks.org/complete-guide-on-complexity-analysis/

Subscribe to my newsletter

Read articles from Kingsley Ihemelandu directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by