Create Graphs using Llama3.1 and Postgres: Exploring LLMs — 5

Abou Zuhayr

Abou Zuhayr

In our previous installment, we enhanced our chatbot by adding memory capabilities using LangChain's ConversationBufferMemory. This allowed the chatbot to retain conversation context and provide more coherent and personalized responses (if you are new to this series, consider going through the Exploring LLMs series). Now, we'll take our chatbot to the next level by enabling it to generate graphs and charts based on natural language queries.

The entire code for this tutorial can be found here.

Introduction

Visual representations of data can significantly enhance user understanding. By allowing users to request graphs through natural language, we make data exploration more intuitive and interactive. In this tutorial, we'll modify our existing chatbot to:

Detect when a user wants to generate a graph.

Generate the appropriate SQL query to retrieve the necessary data.

Use the SQL result to generate plotting code using

matplotlib.Execute the plotting code and display the graph to the user.

Let's dive into the steps required to achieve this functionality.

Step 1: Detecting Graph Intent in User Queries

What We Need:

Before we can generate a graph, we need to determine when the user intends to request one. This involves analyzing the user's question for keywords that indicate a desire for a visual representation.

Why We Need It:

By detecting graph intent, we can conditionally execute additional steps in our chain, such as generating plotting code and executing it to produce the graph.

How to Implement It:

We'll add a method called detect_graph_intent to our ChatLLM class.

def detect_graph_intent(self, question):

# Check if the user intends to draw a graph by looking for specific keywords

graph_keywords = ["graph", "plot", "chart", "visualize"]

return any(keyword in question.lower() for keyword in graph_keywords)

Explanation:

We define a list of keywords that are commonly associated with graph requests.

The method checks if any of these keywords are present in the user's question.

It returns

Trueif a graph is requested, andFalseotherwise.

You can also use LLMs to detect the intent of the user, but it seemed like an overkill to me, that’s why I just used a simple function.

Step 2: Generating Plotting Code Using LLM

What We Need:

Once we've detected that the user wants a graph, we need to generate the Python code required to create the graph using the SQL query results.

Why We Need It:

The LLM can assist in generating syntactically correct plotting code based on the SQL results and the user's question, saving us from manually writing the code.

How to Implement It:

2.1 Adding a Prompt Template for Plotting Code

We'll create a new prompt template, plot_code_prompt, which instructs the LLM to generate the plotting code.

self.plot_code_prompt = PromptTemplate(

input_variables=["sql_result", "question"],

template="""

Given the following SQL query result and the user's question, generate Python code using matplotlib and pandas to plot the graph that answers the question. Use the data in the SQL result to create the plot. The SQL result is provided as a variable 'sql_result', which is a list of dictionaries. You can convert it into a pandas DataFrame for plotting. Ensure the code is syntactically correct and uses proper labels and titles.

SQL Result:

{sql_result}

Question:

{question}

Provide only the code, without any explanations or additional text.

Python code:

import matplotlib.pyplot as plt

import pandas as pd

# Assuming sql_result is a list of dictionaries

df = pd.DataFrame(sql_result)

# Create the figure and axis objects

fig, ax = plt.subplots(figsize=(10, 6))

# Your plotting code here using 'ax'

# Do not call plt.show()

"""

)

Explanation:

The prompt instructs the LLM to generate Python code that creates a plot using

matplotlibandpandas.It provides the

sql_resultas a variable and asks the LLM to use it to create aDataFrame.It emphasizes that the code should be syntactically correct and self-contained.

The placeholder

# Your plotting code here using 'ax'indicates where the LLM should insert the plotting logic.

2.2 Creating a Chain for Plot Code Generation

We need to create an LLMChain that uses this prompt to generate the plotting code.

self.generate_plot_code_chain = LLMChain(

llm=self.llm,

prompt=self.plot_code_prompt

)

Explanation:

We instantiate a new

LLMChaincalledgenerate_plot_code_chain.It uses the same LLM instance but with the

plot_code_prompt.

Step 3: Executing the Generated Plotting Code

What We Need:

After generating the plotting code, we need to execute it safely to produce the graph. We also need to handle any potential errors during code execution.

Why We Need It:

Executing arbitrary code can be risky. We need to ensure that the code runs in a controlled environment and that any exceptions are properly handled.

How to Implement It:

3.1 Extracting Code from the LLM's Response

We'll define a helper function extract_code_from_response to extract the code block from the LLM's response.

def extract_code_from_response(response):

# Use regex to extract code within code blocks

code_pattern = re.compile(r'```python(.*?)```', re.DOTALL)

match = code_pattern.search(response)

if match:

code = match.group(1).strip()

else:

# If no code block, just return the response

code = response.strip()

return code

Explanation:

We use a regular expression to find code enclosed within triple backticks and the

pythonlanguage specifier.If found, we extract the code inside the code block.

If no code block is present, we assume the entire response is code.

3.2 Executing the Plotting Code Safely

We'll add a method execute_plot_code to execute the extracted code.

def execute_plot_code(self, code, sql_result):

# Create a local namespace for exec()

local_vars = {'sql_result': sql_result}

try:

exec(code, {}, local_vars)

fig = local_vars.get('fig', None)

if fig:

self.figures.append(fig)

else:

print("No figure object 'fig' was created in the plot code.")

except Exception as e:

print(f"Error executing plot code: {e}")

Explanation:

We define a local namespace with

sql_resultavailable for the code to use.We use

exec()to execute the code in a restricted namespace.We attempt to retrieve the

figobject created by the plotting code.If successful, we store the figure in

self.figuresfor later use.We handle any exceptions and print error messages for debugging.

Step 4: Modifying the Chain to Incorporate Plotting

What We Need:

We need to integrate the plotting logic into our existing chain so that when a graph is requested, the chatbot generates and displays it.

Why We Need It:

By modifying the chain, we ensure that all the steps—from detecting graph intent to executing the plotting code—are seamlessly integrated into the chatbot's workflow.

How to Implement It:

We'll update the extract_and_execute_sql function within our _create_chain method.

4.1 Updating the extract_and_execute_sql Function

def extract_and_execute_sql(inputs):

response = inputs.get('response', '')

question = inputs.get('question', '')

# Extract the SQL query from the response

pattern = re.compile(r'SQLQuery:\s*\n(.*)', re.DOTALL)

match = pattern.search(response)

if match:

sql_query = match.group(1).strip()

if not sql_query.lower().startswith("select"):

result = "Invalid SQL query generated by the LLM."

else:

try:

# Execute the SQL query

new_result = self.db._execute(sql_query)

result = str(new_result)

except Exception as e:

result = f"Error executing SQL query: {e}"

# If graph intent is detected, generate and execute plotting code

if self.detect_graph_intent(question):

# Prepare inputs for plotting code generation

plot_code_inputs = {

'sql_result': str(new_result),

'question': question

}

# Generate the plotting code

plot_code_response = self.generate_plot_code_chain.run(plot_code_inputs)

# Extract the code from the response

plot_code = extract_code_from_response(plot_code_response)

# Execute the plotting code

self.execute_plot_code(plot_code, new_result)

# Return the result indicating that the graph was displayed

return {

"question": question,

"query": sql_query,

"result": "Graph has been generated and stored."

}

else:

return {

"question": question,

"query": sql_query,

"result": result

}

else:

return {

"question": question,

"query": None,

"result": "No SQL query found in the response."

}

Explanation:

After executing the SQL query, we check if a graph is requested using

self.detect_graph_intent(question).If a graph is requested:

We prepare inputs for the plotting code generation, including the

sql_resultand the originalquestion.We run the

generate_plot_code_chainto get the plotting code.We extract the code from the LLM's response using

extract_code_from_response.We execute the plotting code using

self.execute_plot_code.We return a special result indicating that a graph has been generated.

If no graph is requested, we proceed as usual by returning the SQL result.

4.2 Updating the Answer Prompt

We need to modify the answer_prompt to handle the case when a graph has been generated.

self.answer_prompt = PromptTemplate.from_template(

"""Database Description:

{database_description}

{chat_history}

Given the following user question, corresponding SQL query, and SQL result, answer the user question.

Question: {question}

SQL Query: {query}

SQL Result: {result}

If the SQL Result is "Graph has been generated and stored.", respond only and only with "Here's the graph you asked for."

Otherwise, provide a detailed answer.

Answer:"""

)

Explanation:

We specify that if the

SQL Resultindicates a graph has been generated, the chatbot should respond with a specific message.This ensures the chatbot's response aligns with the generated output.

4.3 Adding Figures List to Store Generated Graphs

At the beginning of our ChatLLM class, we add an attribute to store the figures.

self.figures = []

Explanation:

This list will hold all the figures generated during the chatbot's session.

We can later retrieve and display these figures in our application (e.g., in a Streamlit app).

Step 5: Testing the Enhanced Chatbot

Now that we've integrated all the necessary components, it's time to test our chatbot.

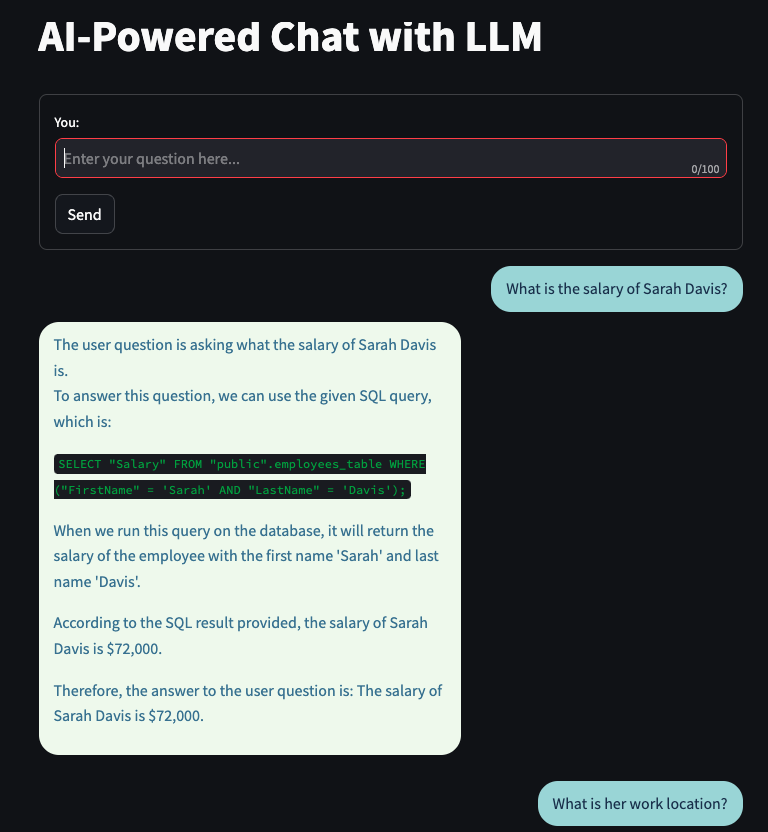

Asking Generic Questions

These questions do not need a graph to be generated, notice that during such calls the graphing chain is not getting executed

Retaining the context

Notice, that the context is retained while answering the second question, while still not generating a graph.

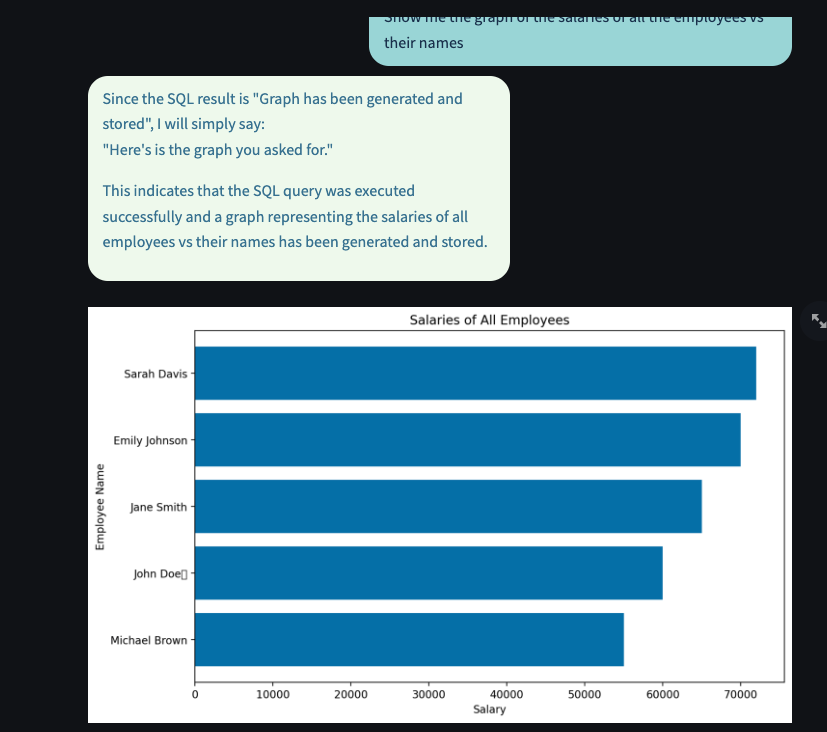

Generating Graphs

As can be seen below, when the user had an intent to plot a graph, it was correctly shown by our LLM agent.

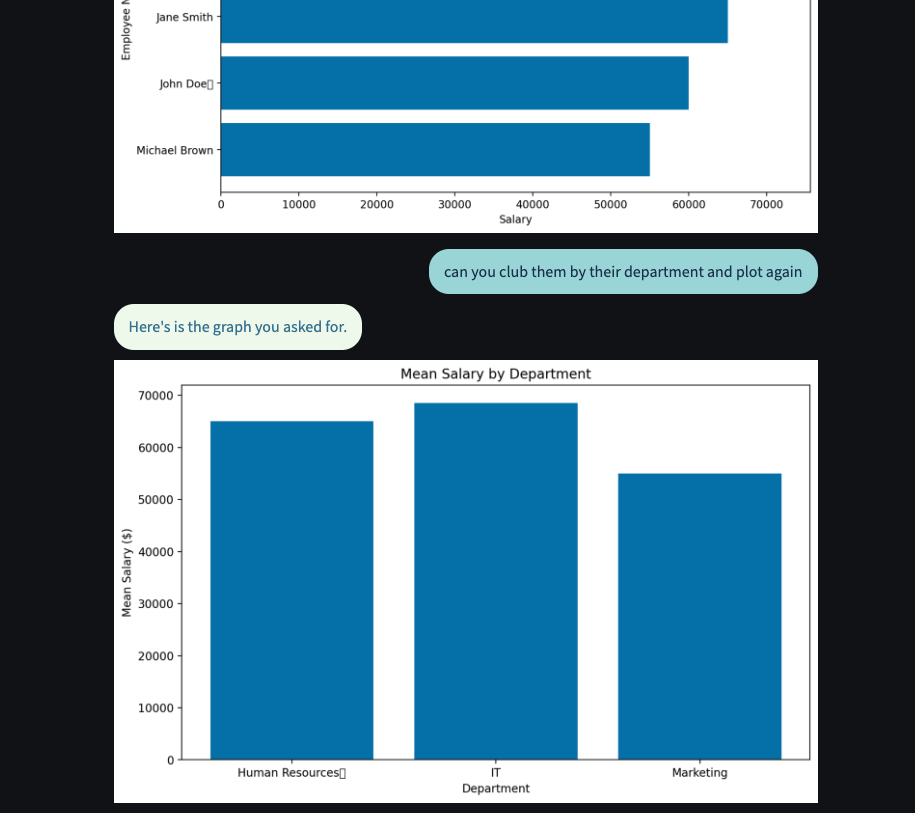

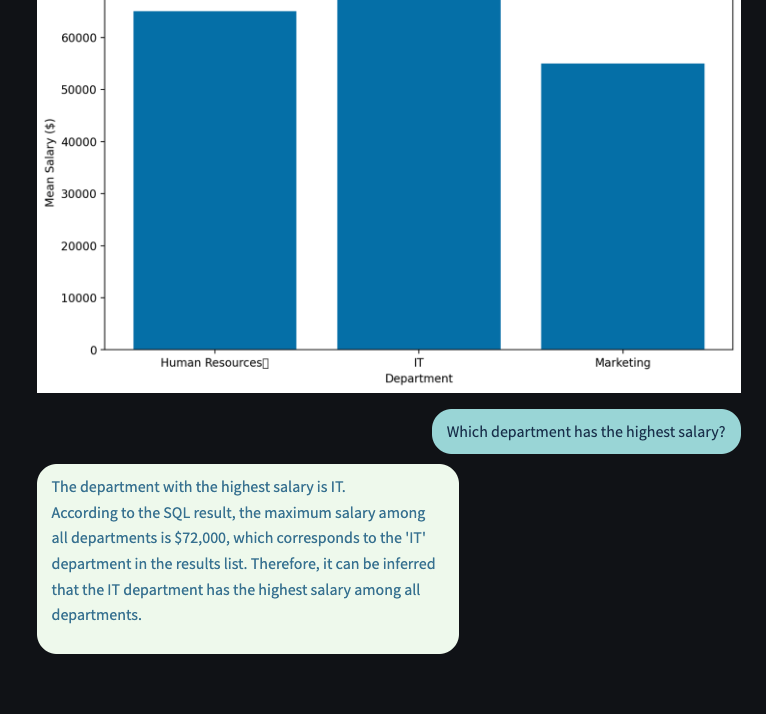

Still Retaining Context

Since we have context to the previous chat messages, notice below how our prompt was able to generate a different graph for the same set of data by grouping them into departments.

While still being able to answer non-graph based questions as intended

Note that we are not discussing the streamlit part of the code, since it is relatively straight forward and can be found in the code repo mentioned in the starting of this blog post.

Conclusion

By following these steps, we've successfully enhanced our chatbot to generate graphs and charts based on natural language queries. This feature greatly improves user experience by providing visual data representations, making data analysis more accessible.

Key Takeaways:

Intent Detection: Identifying user intent is crucial for providing relevant responses and actions.

Dynamic Code Generation: Leveraging LLMs to generate code can automate complex tasks, but safety precautions are necessary.

Integration: Seamlessly integrating new functionalities into existing workflows enhances the capabilities of applications without overcomplicating the architecture.

What's Next?

Our chatbot is becoming increasingly powerful, but there's always room for improvement. In our next blog post, we’ll explore how we can add more sources of information to our chatbot (like PDFs, Excel sheets etc).

Till then, Happy Coding!

Note: Always ensure that executing dynamically generated code is done in a secure and controlled environment to prevent potential security risks.

🚀 Hey there, code sorcerers! 🚀

Don't be a stranger! Like, clap, comment, and let's keep this party coding! 🎉🖥️ And while you're here, why not follow along?

Subscribe to my newsletter

Read articles from Abou Zuhayr directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Abou Zuhayr

Abou Zuhayr

Hey, I’m Abou Zuhayr, Android developer by day, wannabe rockstar by night. With 6 years of Android wizardry under my belt and currently working at Gather.ai, I've successfully convinced multiple devices to do my bidding while pretending I'm not secretly just turning them off and on again. I’m also deeply embedded (pun intended) in systems that make you question if they’re alive. When I'm not fixing bugs that aren’t my fault (I swear!), I’m serenading my code with guitar riffs or drumming away the frustration of yet another NullPointerException. Oh, and I write sometimes – mostly about how Android development feels like extreme sport mixed with jazz improvisation. Looking for new challenges in tech that don’t involve my devices plotting against me!