Llama 3.2 – A Deep Dive

Ahmad W Khan

Ahmad W Khan

Today, we're diving deep into Meta's latest open-source Large Language Model (LLM), Llama 3.2, and exploring how it's transforming the landscape of edge AI and vision tasks. Grab your favorite beverage, and let's pop the hood, shall we.

Llama 3.2: A New Era in Open-Source LLMs

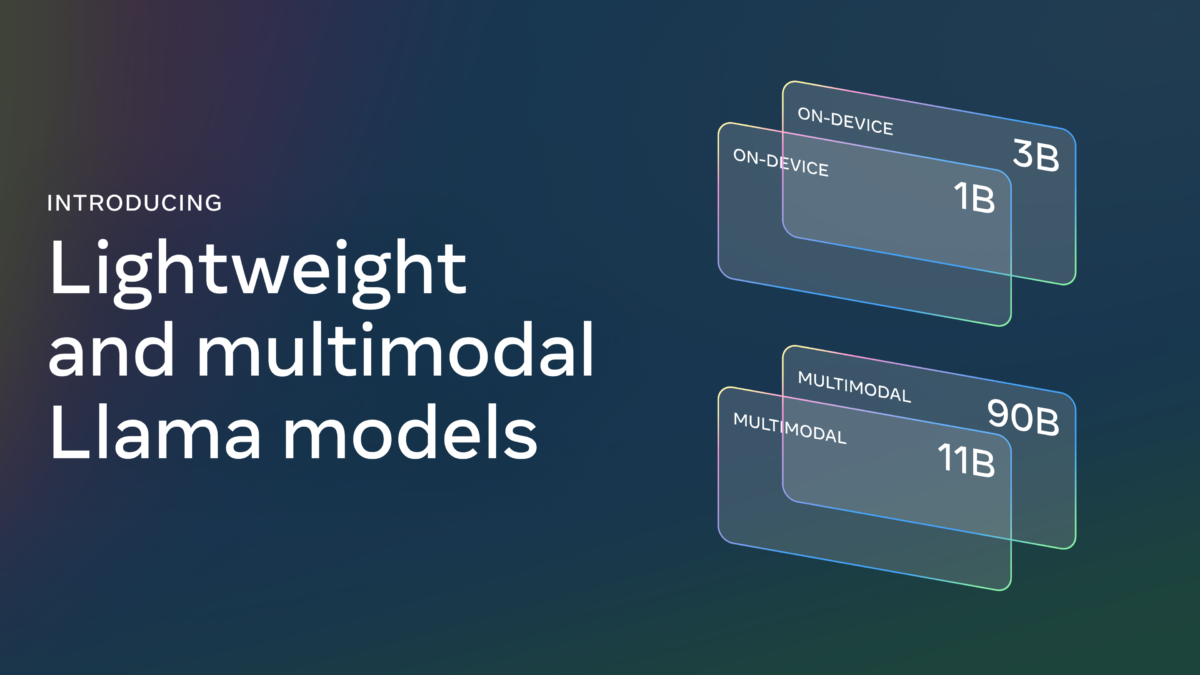

Meta's Llama 3.2 introduces several exciting updates, pushing the boundaries of open-source LLMs and enabling developers to build innovative, safe, and responsible AI applications for edge, vision, and mobile use cases. Let's dive into the key features and improvements of this latest release.

1. Vision LLMs: Understanding the Visual World

1.1 Architecture Overview

Llama 3.2 includes small (11B) and medium-sized (90B) vision LLMs, integrating image understanding capabilities into the models. The architecture of these vision LLMs combines an image encoder with the pre-trained language model using adapter weights. The adapter consists of cross-attention layers that feed image encoder representations into the language model, allowing the model to understand and generate text based on visual inputs.

1.2 Training Pipeline

To develop these vision models, Meta followed a multi-stage training pipeline:

Pre-trained Text Models: The process begins with pre-trained Llama 3.1 text models as the foundation.

Image Adapter and Encoder Addition: Next, image adapters and encoders are added, creating a multimodal model that can process both text and image inputs.

Pretraining on Large-scale Data: The model is then pretrained on large-scale noisy (image, text) pair data, aligning image representations with language representations.

Fine-tuning on High-Quality Data: Finally, the model is fine-tuned on medium-scale high-quality in-domain and knowledge-enhanced (image, text) pair data.

1.3 Capabilities and Use Cases

With these vision models, Llama 3.2 can now understand and generate text based on visual inputs, enabling a wide range of applications:

Image Captioning: The model can generate descriptive captions for images, making it useful for tasks like accessibility or creating metadata for multimedia content.

Visual Grounding: Llama 3.2 can pinpoint and describe objects within images, enabling tasks like object detection, tracking, or scene description.

Document Understanding: The model can process and understand documents with mixed text and image content, making it ideal for tasks like summarizing reports or extracting relevant information from visual data.

2. Lightweight, Text-Only Models: Empowering Edge and Mobile Devices

2.1 Model Creation Techniques

Llama 3.2 introduces 1B and 3B text-only models optimized for edge and mobile devices. These models were created using two primary techniques:

a. Pruning: Structured pruning is employed to reduce the model size while retaining as much knowledge and performance as possible. For the 1B and 3B models, Meta used a single-shot approach, systematically removing parts of the network and adjusting weight magnitudes to create smaller, more efficient models.

b. Knowledge Distillation: This technique involves transferring knowledge from larger models to smaller ones, enabling the smaller model to achieve better performance using a teacher model. For the 1B and 3B models, Meta incorporated logits from the Llama 3.1 8B and 70B models into the pre-training stage, using the larger models' outputs as token-level targets.

2.2 Model Evaluation and Capabilities

Meta's evaluation suggests that the 3B model outperforms Gemma 2.6B and Phi 3.5-mini on tasks such as following instructions, summarization, and tool use, while the 1B model is competitive with Gemma. These lightweight models support context lengths up to 128K tokens, making them highly capable for various edge and mobile use cases, such as:

Personalized AI Assistants: Developers can create on-device AI assistants that understand and generate text based on user inputs, all while maintaining privacy, as data never leaves the device.

Accessibility Tools: Build applications that generate descriptions of images or objects for visually impaired users, making digital content more accessible.

Localized Search and Recommendation: Create efficient search and recommendation systems that process and understand text inputs on the device, improving response times and reducing dependency on cloud-based services.

🏋️♀️ Llama 3.2 vs. the Competition

Meta's evaluation indicates that Llama 3.2's vision models compete with leading foundation models like Claude 3 Haiku and GPT-4o-mini on image recognition and visual understanding tasks. The 3B model outperforms Gemma 2.6B and Phi 3.5-mini on tasks such as following instructions, summarization, and tool use, while the 1B model is competitive with Gemma.

🛠️ Setting Up and Fine-Tuning Llama 3.2 locally

1. Installation and Setup

- Environment Setup: Ensure you have the necessary hardware and software requirements. For optimal performance, use a GPU-enabled machine with sufficient RAM. Additionally, install the required libraries, including Python 3.8, PyTorch, and the Hugging Face transformers library.

pip install torch transformers

- Download the Model: Download the desired model weights (e.g., Llama-3b-v2-hf for the 3B model).

wget https://huggingface.co/decapoda-research/llama-3b-v2-hf/resolve/main/model.bin -O llama-3b-v2-model.bin

- Import and Initialize the Model: Import the model and tokenizer using the transformers library.

from transformers import LlamaForCausalLM, LlamaTokenizer

model = LlamaForCausalLM.from_pretrained("path/to/llama-3b-v2-model.bin")

tokenizer = LlamaTokenizer.from_pretrained("decapoda-research/llama-3b-v2-hf")

2. Fine-Tuning

Fine-tuning Llama 3.2 involves adjusting the model's parameters to better suit a specific use case or dataset. Here's a step-by-step guide using the Trainer API from the Hugging Face transformers library:

a. Prepare the Dataset: Preprocess and format your dataset according to the model's input requirements. For vision models, this includes pairing images with relevant text descriptions or captions.

b. Define Training Arguments: Set up the training arguments, specifying parameters like the output directory, number of training epochs, batch sizes, warmup steps, weight decay, and logging settings.

from transformers import TrainingArguments

training_args = TrainingArguments(

output_dir="./llama-3b-v2-finetuned",

num_train_epochs=3,

per_device_train_batch_size=1,

per_device_eval_batch_size=1,

warmup_steps=500,

weight_decay=0.01,

logging_dir="./logs",

logging_steps=10,

evaluation_strategy="steps",

eval_steps=500,

save_steps=500,

load_best_model_at_end=True,

metric_for_best_model="loss",

greater_is_better=False,

)

c. Initialize the Trainer: Initialize the Trainer object, passing the model, training arguments, and your training and evaluation datasets.

from transformers import Trainer

trainer = Trainer(

model=model,

args=training_args,

train_dataset=your_train_dataset,

eval_dataset=your_eval_dataset,

)

d. Train the Model: Finally, train the model using the trainer.train() method.

trainer.train()

3. Inference and Deployment

Once fine-tuned, you can use the model for inference and deploy it in various environments, depending on your use case:

Local Deployment: Deploy the fine-tuned model locally on a single machine or within a private network.

Cloud Deployment: Upload the fine-tuned model weights to a cloud storage service and use them with a cloud-based inference service like AWS Lambda, Google Cloud Functions, or Azure Functions.

On-Device Deployment: For edge and mobile use cases, package the fine-tuned model with a mobile framework like TensorFlow Lite or ONNX Runtime, enabling deployment on resource-constrained devices.

🔒 Ensuring Safety with Llama Guard

To ensure safe and responsible use of Llama 3.2, Meta has released Llama Guard 3 – an updated safety filter that supports the new image understanding capabilities and has a reduced deployment cost for on-device use. Llama Guard 3 is integrated into the reference implementations, demos, and applications, making it ready for the open-source community to use on day one.

Llama Stack: Simplifying Deployment and Interoperability

Meta is introducing the Llama Stack, a standardized interface for canonical toolchain components (fine-tuning, synthetic data generation) to customize Llama models and build agentic applications. The Llama Stack includes:

Llama CLI: A command-line interface to build, configure, and run Llama Stack distributions.

Client Code: Client code in multiple languages, including Python, Node.js, Kotlin, and Swift.

Docker Containers: Docker containers for Llama Stack Distribution Server and Agents API Provider.

Multiple Distributions: Single-node Llama Stack Distribution via Meta internal implementation and Ollama, cloud distributions via AWS, Databricks, Fireworks, and Together, on-device distribution on iOS implemented via PyTorch ExecuTorch, and on-prem distribution supported by Dell.

With Llama 3.2, Meta is pushing the boundaries of open-source LLMs, empowering developers to build innovative, safe, and responsible AI applications for edge, vision, and mobile use cases. Embrace this revolution, and happy coding!

Subscribe to my newsletter

Read articles from Ahmad W Khan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by