Llama 3.2 is Revolutionizing AI for Edge and Mobile Devices

Fotie M. Constant

Fotie M. Constant

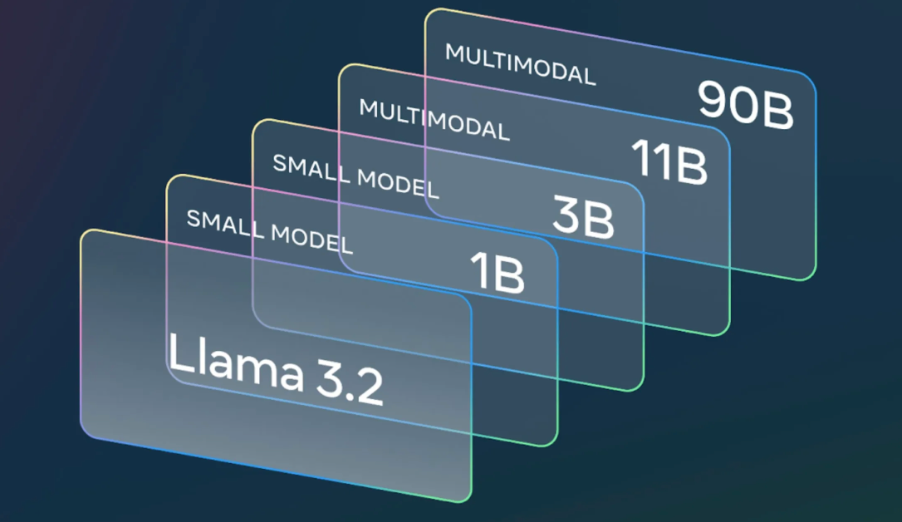

The latest release of Llama 3.2 marks a significant milestone in AI innovation, especially for edge and mobile devices. Meta’s Llama models have seen tremendous growth in recent years, and this newest version offers incredible flexibility for developers. Llama 3.2 introduces powerful large language models (LLMs) designed to fit seamlessly on edge devices, mobile hardware, and even cloud environments. With models ranging from lightweight text-only models to vision-capable LLMs, Llama 3.2 is set to drive the next wave of AI applications.

Features of Llama 3.2

Llama 3.2 includes models of varying sizes, from 1B and 3B lightweight models, optimized for edge and mobile use, to larger 11B and 90B vision models capable of advanced tasks like document understanding and image captioning. These models are pre-trained and available in instruction-tuned versions, making them easily adaptable to a wide variety of applications. The ability to support context lengths of up to 128K tokens means these models can handle complex tasks like summarization, instruction-following, and rewriting.

Vision LLMs: A New Frontier

Llama 3.2 introduces vision-enabled LLMs with the 11B and 90B models, which are designed for image understanding tasks such as document comprehension, image captioning, and visual reasoning. This makes them direct competitors with closed-source models like Claude 3 Haiku, but with the added flexibility of being open and modifiable.

These vision models excel at tasks like:

- Captioning images and extracting meaningful data from visuals.

- Understanding charts and graphs in documents.

- Answering questions based on visual content, such as pinpointing objects on a map.

Lightweight Models for Edge and Mobile

One of the most exciting aspects of Llama 3.2 is its support for lightweight models that fit on mobile and edge devices. The 1B and 3B models are optimized for on-device AI applications, meaning developers can run AI workloads locally, without relying on cloud infrastructure. This brings two key benefits:

- Instantaneous Responses: Since the model runs locally, there’s no need to send data back and forth to the cloud, resulting in near-instant responses.

- Enhanced Privacy: By processing data on the device itself, sensitive information like messages or personal data never needs to leave the device, ensuring greater privacy.

These models are particularly suited for real-time tasks like summarizing recent messages, following instructions, and rewriting content—all within the confines of mobile hardware.

Integration with Mobile and Edge Hardware

Llama 3.2 has been pre-optimized for popular mobile and edge platforms, working closely with Qualcomm, MediaTek, and Arm processors. This integration ensures that developers can run powerful AI models directly on mobile devices, offering an efficient way to deploy AI across a wide range of hardware.

Some of the key benefits of this integration include:

- Improved power efficiency on mobile devices.

- Support for multilingual text generation and tool calling.

- Instant, real-time AI capabilities without the need for internet connectivity.

Advancements in Fine-Tuning and Customization

For developers looking to build custom AI models, Llama 3.2 offers immense flexibility through fine-tuning capabilities. Models can be pre-trained and fine-tuned using Meta’s Torchtune framework, enabling developers to create custom applications tailored to their specific needs. These models also serve as drop-in replacements for previous versions like Llama 3.1, ensuring backward compatibility.

Whether it’s vision tasks or text-based applications, fine-tuning makes it easy to adapt Llama 3.2 to any specific use case.

Llama Stack Distribution: Simplifying AI Development

With the introduction of Llama Stack, developers now have access to a simplified framework for deploying AI models across various environments, including on-device, cloud, single-node, and on-premise solutions. This is supported by a vast ecosystem of partners like AWS, Databricks, Dell Technologies, and more, making Llama 3.2 incredibly versatile.

With Llama Stack, developers can:

- Seamlessly integrate retrieval-augmented generation (RAG).

- Deploy AI across multi-cloud environments or local infrastructure.

- Use turnkey solutions to speed up the development process.

Safety and Responsible AI with Llama 3.2

In addition to being highly capable, Llama 3.2 emphasizes safety and responsible AI. Meta has introduced Llama Guard 3, a system designed to filter input and output when handling sensitive text or image prompts. This is crucial for maintaining ethical standards in AI deployment, ensuring that AI applications do not propagate harmful or biased content.

By adding these safeguards, Llama 3.2 enables developers to build secure and responsible AI applications while still benefiting from its powerful performance.

Performance Benchmarks and Evaluations

Llama 3.2 has been rigorously evaluated against over 150 benchmark datasets, proving its competitiveness against other leading models, including GPT4o-mini and Claude 3 Haiku. The 3B model outperformed the Gemma 2 2.6B and Phi 3.5-mini models in tasks like summarization, instruction-following, and tool-use. Even the 1B model performed well, rivaling other lightweight models on the market.

Efficient Model Creation: Pruning and Distillation

Llama 3.2’s 1B and 3B models were made more efficient through a combination of pruning and knowledge distillation. These techniques reduce the size of the models while retaining performance, enabling their deployment on edge devices without sacrificing speed or accuracy.

Pruning allows for the removal of redundant network components, while distillation transfers knowledge from larger models (like Llama 3.1 8B and 70B) to smaller ones, ensuring the smaller models retain their high-performance levels.

Use Cases and Applications of Llama 3.2

Llama 3.2 offers exciting possibilities for a variety of applications, including:

- Real-time text summarization on mobile devices.

- AI-enabled business tools for managing tasks like scheduling and follow-up meetings.

- Personalized AI agents that maintain user privacy by processing data locally.

With its flexibility and efficiency, Llama 3.2 is perfect for edge AI and on-device AI applications, providing real-time capabilities without compromising on security or performance.

Openness and Collaboration in AI Development

One of the most compelling aspects of Llama 3.2 is Meta’s commitment to openness and collaboration. By making these models available on platforms like Hugging Face and llama.com, Meta is ensuring that developers worldwide can access and build upon Llama 3.2’s powerful capabilities.

Collaboration with leading tech giants, including AWS, Intel, Google Cloud, NVIDIA, and more, has further enhanced the deployment and optimization of Llama 3.2 models. This collective effort underscores Meta’s commitment to open innovation.

Conclusion: The Impact of Llama 3.2 on AI Innovation

Llama 3.2 represents a significant leap forward for AI on edge and mobile devices, bringing unprecedented power and flexibility to developers. Its lightweight models, seamless integration with mobile hardware, and emphasis on safety make it a game-changer in the AI space.

With a broad range of applications, from real-time text summarization to complex visual reasoning, Llama 3.2 is shaping the future of AI development for both enterprises and individual developers.

FAQs

1. What makes Llama 3.2 suitable for edge and mobile devices?

Llama 3.2’s lightweight models (1B and 3B) are optimized for edge and mobile hardware, enabling real-time AI capabilities with enhanced privacy.

2. How do Llama 3.2 vision models compare to other models?

The 11B and 90B vision models excel in image understanding tasks, making them competitive with closed models like Claude 3 Haiku while offering the advantage of being open-source.

3. What are the advantages of running Llama 3.2 locally?

Running Llama 3.2 locally allows for instant responses and enhanced privacy, as data processing stays on the device without relying on cloud infrastructure.

4. How does Llama 3.2 promote responsible AI?

With Llama Guard 3, developers can ensure their AI models handle sensitive input responsibly, filtering harmful or inappropriate content while maintaining model performance.

5. Where can developers access Llama 3.2 models?

Llama 3.2 models are available for download on llama.com and Hugging Face, and are supported by a broad ecosystem of partners like AWS, Dell, and Databricks.

Subscribe to my newsletter

Read articles from Fotie M. Constant directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Fotie M. Constant

Fotie M. Constant

Widely known as fotiecodes, an open source enthusiast, software developer, mentor and SaaS founder. I'm passionate about creating software solutions that are scalable and accessible to all, and i am dedicated to building innovative SaaS products that empower businesses to work smarter, not harder.