Automate Image Upload to Azure Blob Storage With Terraform

Airat Yusuff


September has been a month of new experiences, and one of them involved getting a bit hands-on with Microsoft Azure, which, in turn, means a blog post! 🫣

As an AWS Community Builder, I have mostly stuck to exploring AWS services for my personal projects (and there’s more than enough of those, tbh). This means I have never needed to dive into other cloud providers because, well, I don’t work as a platform engineer (yet).

However, I recently got the opportunity to try out Azure briefly, and one of the tasks I enjoyed was automating the upload of a file to Azure Blob Storage with Terraform. It was pretty easy, and the documentation was informative enough for me to work out which resources to provision.

In this post, I’ll share the scripts I wrote to provision the necessary Azure resources, including the image itself, which Terraform uploads as a blob.

In AWS terms, this is like automating the upload of a file to an S3 bucket.

Prerequisites

- An existing Microsoft Azure account, or a new one: new accounts get $200 worth of free credits that you can use to explore.
- Terraform installed on your local machine
- An IDE of your choice

Useful Resources

Project Setup

While you could create a folder and put everything in a single main.tf file, the skeleton template I used follows some of the best practices I mentioned in my first Terraform project.

This was my directory structure:

```
azure_exercise
|-- main.tf
|-- output.tf
|-- provider.tf
|-- variables.tf
```

variables.tf

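```hcl
# Define your Variables here
# See: https://www.terraform.io/docs/language/values/variables.html

variable "region" {
  description = "Deployment Region"
  type        = string
  default     = "<your-region>" # set value: an Azure region name
}

variable "unique-var" {
  description = "User ID"
  type        = string
  default     = "<your-unique-id>" # set value: lowercase letters and digits only (used in the storage account name)
}
```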
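Because unique-var ends up inside the storage account name, which Azure limits to 3-24 lowercase letters and digits, a bad value (an uppercase letter, a hyphen, or something too long) would otherwise only surface as an Azure API error during apply. A minimal sketch, assuming Terraform 0.13 or later (which supports custom validation rules), of a variant of the unique-var declaration that you could use in place of the one in variables.tf so the mistake is caught at plan time:

```hcl
variable "unique-var" {
  description = "User ID"
  type        = string

  # No default here: supply the value via terraform.tfvars, the -var flag,
  # or let Terraform prompt for it interactively.

  # "acc" + unique-var becomes the storage account name (max 24 characters),
  # so unique-var may be at most 21 lowercase letters or digits.
  validation {
    condition     = can(regex("^[a-z0-9]{1,21}$", var.unique-var))
    error_message = "The unique-var value must be 1-21 lowercase letters or digits."
  }
}
```

can() turns the error that regex() raises on a non-match into false, which is what makes the condition work as a simple pass/fail check.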

provider.tf

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 2.0"
    }
  }
}

provider "azurerm" {
  features {}
  # any other necessary arguments depending on your account
}

data "azurerm_client_config" "current" {}
```
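As one example of what the "other necessary arguments" comment might cover: if you sign in with the Azure CLI (az login) and your account can see more than one subscription, you may want to pin the subscription explicitly. A minimal sketch, to use in place of the provider block above; the GUID is a placeholder, not a real subscription:

```hcl
provider "azurerm" {
  features {}

  # Placeholder value: replace with your own subscription ID, or leave this
  # argument out and set the ARM_SUBSCRIPTION_ID environment variable instead.
  subscription_id = "00000000-0000-0000-0000-000000000000"
}
```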

output.tf

```hcl
# Define your Outputs here
# See: https://www.terraform.io/docs/language/values/outputs.html

output "urltouploadedfile" {
  value       = azurerm_storage_blob.samplefile.url
  description = "URL of the uploaded file"
}
```
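The same file can surface a few other values that are handy when checking the deployment. A sketch with two extra outputs; the output names are hypothetical, while primary_blob_endpoint and the azurerm_client_config attributes are existing attributes of those resources:

```hcl
# Base URL of the storage account's Blob service,
# e.g. https://<account-name>.blob.core.windows.net/
output "blob_endpoint" {
  value       = azurerm_storage_account.storageacc.primary_blob_endpoint
  description = "Primary Blob service endpoint of the storage account"
}

# The data source declared in provider.tf describes the identity Terraform
# is running as, including the subscription it targets.
output "subscription_id" {
  value       = data.azurerm_client_config.current.subscription_id
  description = "Subscription ID the azurerm provider is configured to use"
}
```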

main.tf

```hcl
resource "azurerm_resource_group" "tresourcegroup" {
  name     = "rg-blog-${var.unique-var}"
  location = var.region

  tags = {
    # add preferred tags
  }
}

resource "azurerm_storage_account" "storageacc" {
  # Must be globally unique: 3-24 lowercase letters and digits
  name                     = "acc${var.unique-var}"
  resource_group_name      = azurerm_resource_group.tresourcegroup.name
  location                 = azurerm_resource_group.tresourcegroup.location
  account_tier             = "Standard"
  account_replication_type = "LRS"

  # Allows containers to grant anonymous read access
  # (renamed allow_nested_items_to_be_public in azurerm 3.x)
  allow_blob_public_access = true
}

resource "azurerm_storage_container" "storagectr" {
  name                 = "ctr-${var.unique-var}"
  storage_account_name = azurerm_storage_account.storageacc.name

  # "blob": anonymous clients can read blobs but cannot list the container
  container_access_type = "blob"
}

resource "azurerm_storage_blob" "samplefile" {
  name                   = "${var.unique-var}-sample.jpg"
  storage_account_name   = azurerm_storage_account.storageacc.name
  storage_container_name = azurerm_storage_container.storagectr.name
  type                   = "Block"

  # Should match the file's real type: image/jpeg for .jpg, image/png for .png
  content_type = "image/jpeg"
  source       = "<absolute path to the image>" # set value
}
```
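If you wanted to upload a whole folder of images rather than a single file, for_each combined with the built-in fileset() function can create one blob per file. A minimal sketch, assuming a hypothetical images/ folder of .jpg files next to main.tf; the resource and output names are illustrative:

```hcl
resource "azurerm_storage_blob" "images" {
  # fileset returns paths relative to path.module, e.g. "images/cat.jpg"
  for_each = fileset(path.module, "images/*.jpg")

  name                   = "${var.unique-var}-${basename(each.value)}"
  storage_account_name   = azurerm_storage_account.storageacc.name
  storage_container_name = azurerm_storage_container.storagectr.name
  type                   = "Block"
  content_type           = "image/jpeg"
  source                 = "${path.module}/${each.value}"
}

# Public URL of every uploaded image, keyed by its local relative path
output "uploaded_image_urls" {
  value = { for k, blob in azurerm_storage_blob.images : k => blob.url }
}
```

Keying the blobs by file path means adding or removing an image from the folder only creates or destroys that one blob on the next apply.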

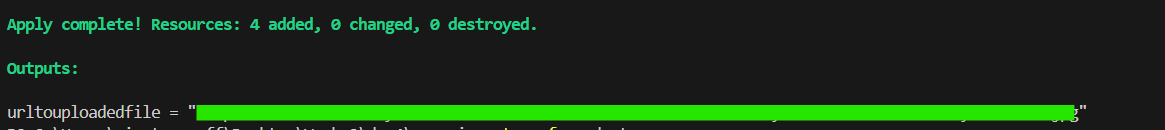

And that’s it! Run the necessary Terraform commands:

```shell
terraform init
terraform plan    # and review if necessary
terraform apply
```

After a successful apply, the console output should show the publicly accessible URL of the file (the urltouploadedfile output).

NOTE:

You might run into a minor issue where opening the URL downloads the image instead of displaying it in the browser, if you change the arguments a bit.

It happened to me before I specified the content_type of the azurerm_storage_blob resource. Without it, content_type defaults to application/octet-stream, which is basically code for “I’m not sure what type of file this is, so I’m just going to label it a generic binary file”; hence the download.
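One way to avoid a mismatch between the file and its content_type is to derive the type from the file extension. A minimal sketch, to use in place of the samplefile resource in main.tf; the image path and the extension map are hypothetical and assume the file name has an extension:

```hcl
locals {
  # Hypothetical path to the image you want to upload
  image_path = "${path.module}/sample.jpg"

  # File extension -> MIME type; extend it for other formats
  mime_types = {
    jpg  = "image/jpeg"
    jpeg = "image/jpeg"
    png  = "image/png"
    gif  = "image/gif"
    svg  = "image/svg+xml"
  }

  # Capture the text after the last "." and lower-case it, e.g. "jpg"
  image_ext = lower(regex("\\.([^.]+)$", local.image_path)[0])
}

resource "azurerm_storage_blob" "samplefile" {
  name                   = "${var.unique-var}-sample.${local.image_ext}"
  storage_account_name   = azurerm_storage_account.storageacc.name
  storage_container_name = azurerm_storage_container.storagectr.name
  type                   = "Block"

  # Fall back to the provider's default for unknown extensions
  content_type = lookup(local.mime_types, local.image_ext, "application/octet-stream")
  source       = local.image_path
}
```

Keeping the resource name samplefile means the existing urltouploadedfile output keeps working unchanged.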

This Stack Overflow discussion helped.

I also worked with some other services and built Azure pipelines for CI/CD; overall, it was a good learning experience to try another cloud provider (a bit frustrating but still nice).

Thanks for reading!


Written by

Airat Yusuff


Software Engineer learning about Cloud/DevOps. Computing (Software Engineering) MSc. Computer Engineering BSc. Honours.