End-to-end Guide to building a Reliable Authentication System for a Startup MVP - Part 6

Taiwo Ogunola

In this series, we have built a basic yet robust and scalable authentication system using battle-tested libraries. In this part, we will explore how to enhance the security of our backend application, make it more scalable, and improve its performance.

Here is what we are going to cover:

Rate-limiting (Security): Prevent brute-force attacks;

Performance and Scaling: Queue System, Fastify and Postgres;

Rate Limiting

Rate-limiting is a common technique used to protect applications from brute-force attacks. We can implement this within our application using the @nestjs/throttler package. It caps how many times a client with the same IP address can call an API endpoint within a given time frame.

$ npm i --save @nestjs/throttler

Inside our app.module.ts, we can configure the ThrottlerModule with the forRoot or forRootAsync method, like any other Nest package:

import { seconds, ThrottlerModule } from '@nestjs/throttler';

@Module({

imports: [

// ...other imports

ThrottlerModule.forRoot([

{

name: 'short',

ttl: seconds(1),

limit: 2,

},

{

name: 'medium',

ttl: seconds(10),

limit: 15,

},

{

name: 'long',

ttl: seconds(60),

limit: 40,

},

]),

],

// ...others

})

export class AppModule {}

We have configured our ThrottlerModule to allow no more than 2 calls in a second, 15 calls in 10 seconds, and 40 calls in a minute.
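To make the idea of several limits applying at once more concrete, here is a minimal in-memory sketch of a fixed-window counter that checks every window before allowing a call. It is only an illustration under simplifying assumptions and is not how @nestjs/throttler is implemented; the names WindowRule and MultiWindowLimiter are made up for this example.

```typescript
// Illustrative only: a fixed-window counter, NOT the @nestjs/throttler internals.
interface WindowRule {
  name: string;
  ttlMs: number; // window length in milliseconds
  limit: number; // maximum calls allowed inside one window
}

// Same numbers as the ThrottlerModule configuration above.
const rules: WindowRule[] = [
  { name: 'short', ttlMs: 1000, limit: 2 },
  { name: 'medium', ttlMs: 10000, limit: 15 },
  { name: 'long', ttlMs: 60000, limit: 40 },
];

class MultiWindowLimiter {
  // One counter per (rule, client) pair.
  private readonly hits = new Map<string, { count: number; resetAt: number }>();
  private readonly windowRules: WindowRule[];

  constructor(windowRules: WindowRule[]) {
    this.windowRules = windowRules;
  }

  /** Returns true only when the call fits inside every configured window. */
  allow(clientKey: string, now: number): boolean {
    const states = this.windowRules.map((rule) => {
      const id = `${rule.name}:${clientKey}`;
      let state = this.hits.get(id);
      // Start a fresh window when none exists or the old one has expired.
      if (!state || now >= state.resetAt) {
        state = { count: 0, resetAt: now + rule.ttlMs };
        this.hits.set(id, state);
      }
      return { rule, state };
    });

    // Reject if any single window is already full.
    if (states.some(({ rule, state }) => state.count >= rule.limit)) {
      return false;
    }
    // Otherwise count the call against every window.
    states.forEach(({ state }) => {
      state.count += 1;
    });
    return true;
  }
}
```

A third call from the same client inside one second is rejected by the short window even though the medium and long windows still have room.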

We are going to bind the guard globally with the ThrottlerGuard provider inside AppModule:

import { APP_GUARD } from '@nestjs/core';

import { ThrottlerGuard } from '@nestjs/throttler';

@Module({

// ...others

providers: [

{

provide: APP_GUARD,

useClass: ThrottlerGuard,

},

AppService,

],

})

export class AppModule {}

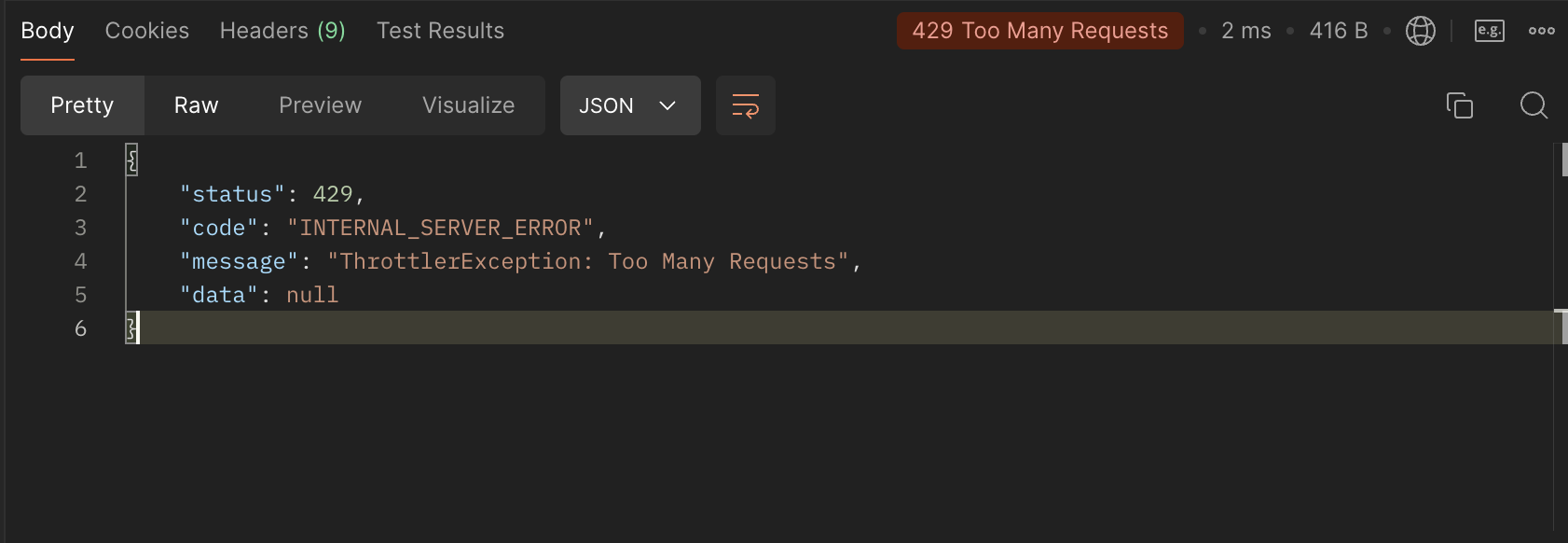

There you go, we have successfully added rate limiting feature into our backend system. Try sending too many requests to a single endpoint:

We can make the error message user-friendly for our clients by overriding the errorMessage inside the ThrottlerGuard class like this:

import { ThrottlerGuard } from '@nestjs/throttler';

import { Injectable } from '@nestjs/common';

@Injectable()

export class ApiThrottlerGuard extends ThrottlerGuard {

protected errorMessage: string = 'Too Many Requests';

}

And inside AppModule, we can replace ThrottlerGuard with our new custom guard class:

{

provide: APP_GUARD,

useClass: ApiThrottlerGuard,

}

You can read more about the @nestjs/throttler package here.

Queue System

A Queue is a data structure that follows the first-in-first-out (FIFO) model. Queues are common in computer programs, like the Node.js Event Loop, and are used in distributed systems and microservice architectures.
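As a minimal illustration of the FIFO model, the sketch below implements a tiny in-memory queue. The class name FifoQueue is made up for this example and has nothing to do with the queue library used later in this part.

```typescript
// Illustrative FIFO queue: items leave in the same order they were added.
class FifoQueue<T> {
  private readonly items: T[] = [];

  /** Add an item to the back of the queue. */
  enqueue(item: T): void {
    this.items.push(item);
  }

  /** Remove and return the item at the front, or undefined when empty. */
  dequeue(): T | undefined {
    return this.items.shift();
  }

  get size(): number {
    return this.items.length;
  }
}
```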

A queueing system helps manage background tasks by deferring work that doesn't need to be completed in real time. This is crucial in a scalable architecture where user actions may trigger resource-intensive operations. For instance, sending emails, processing uploads, or interacting with external APIs can introduce delays if done synchronously.

By introducing a queuing system, we can offload these tasks to a background worker, allowing our application to remain responsive. This helps with:

Scalability: As our application grows, the number of tasks will increase. A queue system allows you to distribute these tasks across multiple workers, which can scale independently of the application.

Resilience: Queue systems can handle retries and failure scenarios gracefully. If an email fails to send, it can retry according to your configured back-off strategy. This ensures that transient failures don’t become bottlenecks in your system.

Performance: Offloading resource-intensive tasks allows your primary application to maintain performance and handle user interactions more efficiently.
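The back-off strategy mentioned under Resilience can be sketched as a small pure function. This is a generic exponential back-off under assumed parameters (a base delay and a cap), not the exact formula of any particular queue library; the function name is hypothetical.

```typescript
/**
 * Delay in milliseconds before retry number `attempt` (1-based).
 * The delay doubles on every attempt and is capped at `maxDelayMs`.
 */
function exponentialBackoffMs(
  attempt: number,
  baseDelayMs: number,
  maxDelayMs: number,
): number {
  if (attempt < 1) {
    throw new RangeError('attempt must be >= 1');
  }
  const delay = baseDelayMs * Math.pow(2, attempt - 1);
  return Math.min(delay, maxDelayMs);
}
```

With a base delay of one second the retries wait 1s, 2s, 4s and so on, which gives a struggling email provider time to recover. In BullMQ, retries are configured per job through the attempts and backoff job options.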

In our case, we are going to use BullMQ, which uses Redis to persist job data. It supports features like job prioritization, rate limiting, and concurrency, which allow you to fine-tune performance as your system grows.

Email Service Queue

We are going to add a queue system to our current application by decoupling our email sending service from the request-response cycle, keeping the user experience smooth.

Install the necessary dependencies:

$ npm i bullmq @nestjs/bullmq

For local development, we are going to use docker-compose to add a Redis image. Add a docker-compose.yml file to the auth-backend root folder:

services:
  redis:
    image: redis:7-alpine
    container_name: redis
    ports:
      - '6370:6379'
    networks:
      - nest-network

networks:
  nest-network:
    driver: bridge

The default Redis port is 6379, but we map it to port 6370 on the host machine. This is useful if you already have other Redis containers running on your computer; you can change it to any available port you like.

Pull and run the images:

$ docker compose up -d

Update .env file:

QUEUE_HOST=localhost

QUEUE_PORT=6370

Update config/index.ts file:

// ...other values

queue_host: process.env.QUEUE_HOST,

queue_port: process.env.QUEUE_PORT ? Number(process.env.QUEUE_PORT) : undefined, // env values are strings; convert to a number
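Environment variables are always strings, so a port read from .env has to be converted before it is used as a number. A minimal sketch of a stricter parser is shown below; parsePort is a hypothetical helper, not part of the project or of @nestjs/config.

```typescript
/**
 * Converts a raw environment value into a valid TCP port number.
 * Throws a descriptive error when the value is missing or out of range.
 */
function parsePort(raw: string | undefined, name: string): number {
  const port = Number(raw);
  if (
    raw === undefined ||
    raw.trim() === '' ||
    !Number.isInteger(port) ||
    port < 1 ||
    port > 65535
  ) {
    throw new Error(
      `${name} must be an integer between 1 and 65535, got "${raw}"`,
    );
  }
  return port;
}
```

It could be called as parsePort(process.env.QUEUE_PORT, 'QUEUE_PORT') inside the config file, so that a bad value fails at startup rather than when the first job is queued.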

And in our libs folder, we are going to add the following new files:

├── mailer

│ ├── mailer-queue.service.ts

│ ├── mailer.module.ts

│ ├── mailer.processor.ts

│ ├── mailer.service.ts ← auth/services/email.service.ts

│ └── mailer.type.ts

├── queue

│ ├── queue.module.ts

│ └── queue.type.ts

Let’s start with the queue.module.ts file:

import { Module } from '@nestjs/common';

import { BullModule } from '@nestjs/bullmq';

import { ConfigModule, ConfigService } from '@nestjs/config';

@Module({

imports: [

BullModule.forRootAsync({

imports: [ConfigModule],

useFactory: async (configService: ConfigService) => ({

connection: {

host: configService.getOrThrow<string>('queue_host'),

port: configService.getOrThrow<number>('queue_port'),

},

}),

inject: [ConfigService],

}),

],

})

export class QueueModule {}

// Inside queue.type.ts

export enum QueueNameEnum {

MAILER = 'mailer',

}

Add the QueueModule class to the imports array of the root AppModule class.

We will add a MailerModule class inside the mailer.module.ts file. In this module, we will use the registerQueue method to register our mailer queue:

import { Module } from '@nestjs/common';

import { BullModule } from '@nestjs/bullmq';

import { ConfigModule } from '@nestjs/config';

import { MailerProcessor } from './mailer.processor';

import { MailerQueueService } from './mailer-queue.service';

import { MailerService } from './mailer.service';

import { QueueNameEnum } from 'libs/queue/queue.type';

@Module({

imports: [

ConfigModule,

BullModule.registerQueue({

name: QueueNameEnum.MAILER,

}),

],

providers: [MailerProcessor, MailerService, MailerQueueService],

exports: [MailerQueueService],

})

export class MailerModule {}

Job Producers

Job producers add jobs to queues. Producers are basically NestJS services; we are going to create ours, MailerQueueService, inside the mailer-queue.service.ts file:

import { Injectable } from '@nestjs/common';

import { Queue } from 'bullmq';

import { MailerJobNameEmum } from './mailer.type';

import { InjectQueue } from '@nestjs/bullmq';

import { QueueNameEnum } from 'libs/queue/queue.type';

@Injectable()

export class MailerQueueService {

constructor(@InjectQueue(QueueNameEnum.MAILER) private emailQueue: Queue) {}

async addMagicLink(email: string, link: string) {

await this.emailQueue.add(MailerJobNameEmum.SEND_MAGIC_LINK, {

email,

link,

});

}

async addForgetPasswordLink(email: string, link: string) {

await this.emailQueue.add(MailerJobNameEmum.SEND_FORGOT_PASSWORD_LINK, {

email,

link,

});

}

}
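The producer imports MailerJobNameEmum from mailer.type.ts, and the worker below also imports EmailLinkJobData, but the file itself is not shown in this part. A plausible sketch is given here; the string values and the exact shape are assumptions, so check the repository branch for the real definitions.

```typescript
// libs/mailer/mailer.type.ts (assumed contents, not shown in the article)

// Job names used by both the producer and the worker.
// The string values are placeholders chosen for this sketch.
export enum MailerJobNameEmum {
  SEND_MAGIC_LINK = 'send-magic-link',
  SEND_FORGOT_PASSWORD_LINK = 'send-forgot-password-link',
}

// Payload shared by both jobs: the recipient and the link to include.
export interface EmailLinkJobData {
  email: string;
  link: string;
}
```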

Mailer Queue Worker

Workers perform tasks based on the jobs added to the queue. They act like "message" receivers in a traditional message queue. If a worker completes the task, the job is marked as "completed". If an error occurs, the job is marked as "failed".

Inside our mailer.processor.ts file:

import { Processor, WorkerHost } from '@nestjs/bullmq';

import { Logger } from '@nestjs/common';

import { Job } from 'bullmq';

import { MailerJobNameEmum, EmailLinkJobData } from './mailer.type';

import { MailerService } from './mailer.service';

import { QueueNameEnum } from 'libs/queue/queue.type';

@Processor(QueueNameEnum.MAILER)

export class MailerProcessor extends WorkerHost {

private readonly logger = new Logger(MailerProcessor.name);

constructor(private readonly mailerService: MailerService) {

super();

}

async process(job: Job<EmailLinkJobData>) {

switch (job.name) {

case MailerJobNameEmum.SEND_MAGIC_LINK: {

const { email, link } = job.data;

await this.mailerService.sendMagicLink(email, link);

return;

}

case MailerJobNameEmum.SEND_FORGOT_PASSWORD_LINK: {

const { email, link } = job.data;

await this.mailerService.sendForgetPasswordLink(email, link);

return;

}

default: {

this.logger.error(`Unknown job type: ${job.name}`);

throw new Error(`Unknown job type: ${job.name}`);

}

}

}

}
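The completed and failed states described above can be mimicked without Redis. The following sketch runs a list of jobs through a handler map and records the outcome on each job; it is a simplified, synchronous stand-in for what a real worker does, and every name in it (SketchJob, runWorker) is hypothetical.

```typescript
type JobStatus = 'waiting' | 'completed' | 'failed';

interface SketchJob {
  name: string;
  data: { email: string; link: string };
  status: JobStatus;
  failedReason?: string;
}

type Handler = (data: SketchJob['data']) => void;

/** Processes each job in order and marks it completed or failed. */
function runWorker(jobs: SketchJob[], handlers: Record<string, Handler>): void {
  for (const job of jobs) {
    try {
      const handler = handlers[job.name];
      if (!handler) {
        // Mirrors the default branch of the processor's switch statement.
        throw new Error(`Unknown job type: ${job.name}`);
      }
      handler(job.data);
      job.status = 'completed';
    } catch (error) {
      job.status = 'failed';
      job.failedReason = error instanceof Error ? error.message : String(error);
    }
  }
}
```

Throwing from the handler is what signals failure, which is why the processor above rethrows for unknown job names instead of only logging them.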

Move mailer.service.ts ← auth/services/email.service.ts

We basically moved the contents of auth/services/email.service.ts into our new mailer library. The only significant change is renaming EmailService to MailerService.

Add sending Forgot password and magic link email to queue

The code inside our ForgetPasswordAuthController doesn't change much; we just switch from using the EmailService provider to the new MailerQueueService provider:

import { MailerQueueService } from 'libs/mailer/mailer-queue.service';

@Controller('forget-password')

export class ForgetPasswordAuthController {

constructor(

// ...others

private readonly mailerQueueService: MailerQueueService,

) {}

@Post()

async requestResetPasswordLink(@Body('email') email: string) {

// ...previous code

await this.mailerQueueService.addForgetPasswordLink(

user.email,

resetPasswordLink,

);

}

}

The same goes for sending Magic link email:

import { MailerQueueService } from 'libs/mailer/mailer-queue.service';

@Controller('magic-link')

export class MagicLinkController {

constructor(

private readonly mailerQueueService: MailerQueueService,

) {}

@Post('request')

async requestMagicLink(@Body() { email }: MagicLinkRequestDto) {

// ...previous code (looks up the user and builds magicLink)

await this.mailerQueueService.addMagicLink(user.email, magicLink);

}

}

This essentially decouples email sending from the request-response cycle: the endpoint now returns as soon as the job is stored in Redis instead of waiting for the email provider. You can verify the gain by measuring response times before and after switching the services.

Migrate to Fastify and Postgres (Optional)

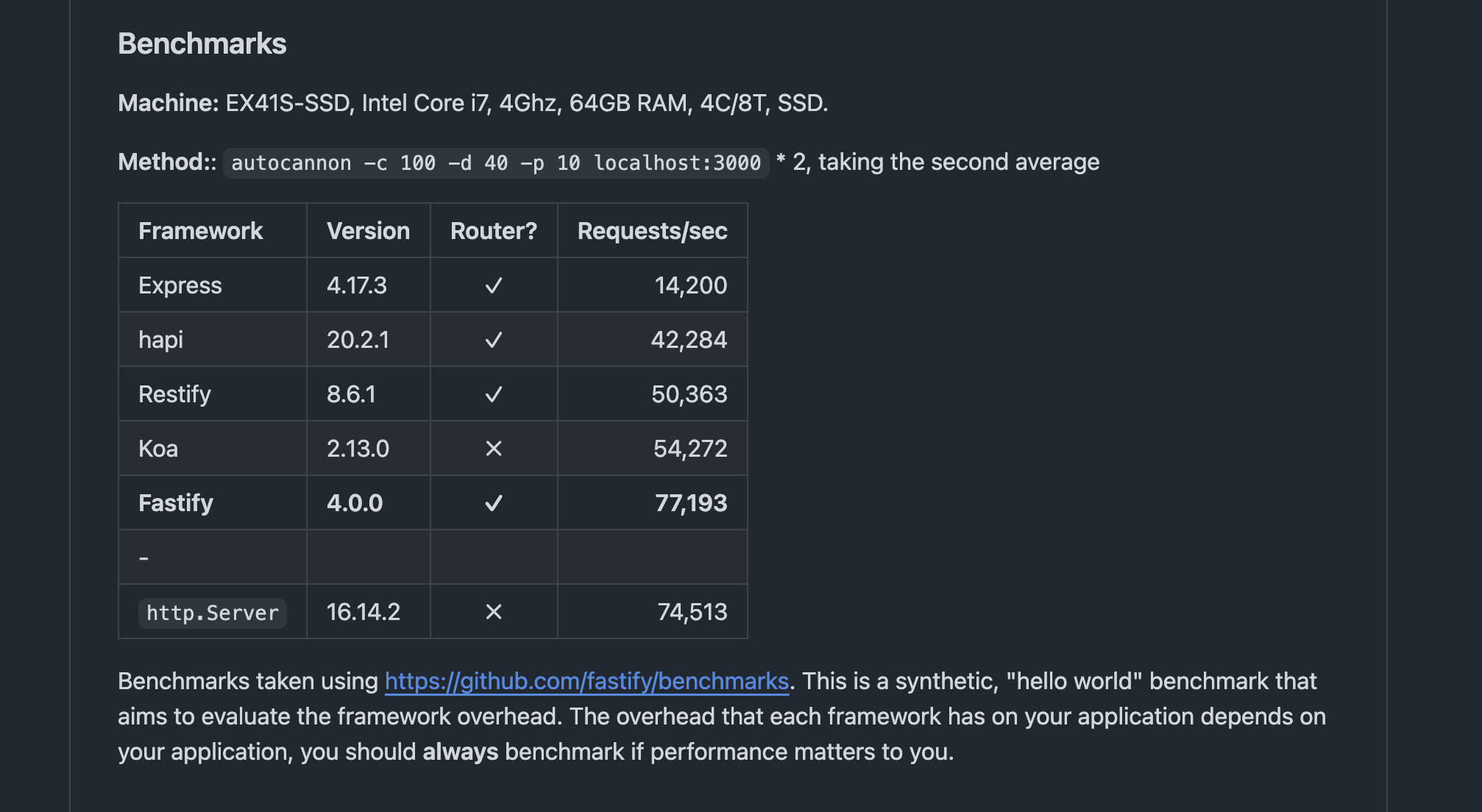

NestJS uses the Express library by default. Because it is designed to be platform-agnostic, it can also run on other HTTP libraries, and it ships an official adapter for Fastify.

Fastify is a good alternative framework that is much faster than Express, achieving almost twice the results in benchmarks. It can be a better choice if request-handling speed is a priority.

To get started, let’s install the necessary packages:

$ npm i @nestjs/platform-fastify fastify @fastify/cookie

$ npm uninstall @nestjs/platform-express cookie-parser

Edit the bootstrap function inside main.ts:

import { FastifyAdapter, NestFastifyApplication } from '@nestjs/platform-fastify';

import fastifyCookie from '@fastify/cookie';

const app = await NestFactory.create<NestFastifyApplication>(

AppModule,

new FastifyAdapter(),

);

await app.register(fastifyCookie, {

secret: cookieSecret,

});

Also, in our routes that require redirects, we need to explicitly set the status code to 302 like this:

res

.status(HttpStatus.FOUND)

.redirect(

this.configService.getOrThrow<string>('frontend_client_origin'),

);

We create a types.ts file that re-exports the underlying framework types, for the places where you need to use those methods:

export { FastifyRequest as Request, FastifyReply as Response } from 'fastify';

Issues with using Fastify with Passport

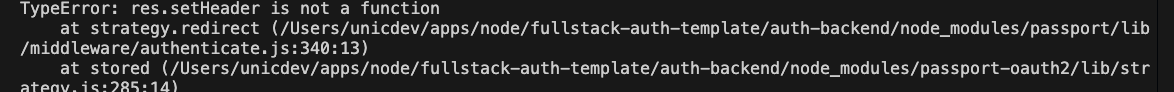

In our current application, Passport is an Express middleware. There is an official equivalent Fastify package called fastify-passport, but it is not yet supported in the @nestjs/passport package. Running the app and testing our oauth flows will result in some errors:

However, we can make it work because Fastify's request and reply objects are similar to the Express ones, and Passport.js relies on only a few Express-specific APIs. We essentially patch the Fastify reply object with the Express methods Passport expects, mapping each one to its Fastify equivalent.

Add the following after the NestFactory.create call:

/**

* This is a workaround to add the setHeader and end methods to the reply object

* because passport.js is an express middleware and doesn't exactly work well with fastify

* TODO: We can remove this once nestjs has a support for the fastify-passport package

*/

const fastifyInstance = app.getHttpAdapter().getInstance();

fastifyInstance.addHook('onRequest', (request, reply, done) => {

(reply as any).setHeader = function (key, value) {

return this.raw.setHeader(key, value);

};

(reply as any).end = function () {

this.raw.end();

};

(request as any).res = reply;

done();

});
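To check the workaround without starting a server, the same patching logic can be pulled out into a plain function and exercised with fake objects. This is a sketch under assumptions: RawResponseLike, ReplyLike, RequestLike and addExpressCompat are hypothetical names that only model the few properties the hook touches, not Fastify's real types.

```typescript
// Minimal stand-ins for the parts of Fastify's objects that the hook uses.
interface RawResponseLike {
  setHeader(key: string, value: string): unknown;
  end(): unknown;
}

interface ReplyLike {
  raw: RawResponseLike;
  [key: string]: unknown;
}

interface RequestLike {
  [key: string]: unknown;
}

/** Adds the Express-style methods Passport calls, delegating to reply.raw. */
function addExpressCompat(request: RequestLike, reply: ReplyLike): void {
  reply.setHeader = (key: string, value: string) =>
    reply.raw.setHeader(key, value);
  reply.end = () => {
    reply.raw.end();
  };
  // Passport reads the response object from request.res.
  request.res = reply;
}
```

Inside the onRequest hook, the function would be called with the real request and reply objects (cast as needed) before calling done().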

Postgres Image

While SQLite is lightweight and great for development or small projects, it allows only one writer at a time and runs inside the application process, which limits it under concurrent load and with large data sets. PostgreSQL, on the other hand, is a robust, enterprise-level client-server database that handles complex queries, large volumes of data, and high levels of traffic more efficiently. It supports many concurrent connections, replication, and advanced features like table partitioning and rich index types, making it ideal for production systems where performance and scalability are critical.

In our docker-compose.yml file:

services:
  postgres:
    image: postgres:15-alpine
    container_name: postgres
    environment:
      POSTGRES_USER: auth-template-user
      POSTGRES_PASSWORD: auth-template-password
      POSTGRES_DB: auth_template_db
    ports:
      - '5401:5432'
    volumes:
      - postgres-data:/var/lib/postgresql/data
    networks:
      - nest-network

volumes:
  postgres-data:
    driver: local

Update DATABASE_URL:

DATABASE_URL=postgresql://auth-template-user:auth-template-password@localhost:5401/auth_template_db?schema=public&connection_limit=5

Pull and run postgres:

$ docker compose up -d

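Before we run migrations, make sure the datasource provider in prisma/schema.prisma is set to postgresql, and delete or rename the old prisma/migrations folder, because the migrations generated for SQLite cannot be applied to Postgres. If you are also migrating a production environment, you will need to write a custom script to migrate your data.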

Run migrations:

$ npm run prisma:migration:save

$ npm run prisma:migration:up

Conclusion

In this part of our series, we have significantly enhanced the security, scalability, and performance of our authentication system. By implementing rate-limiting, we have fortified our application against brute-force attacks. The introduction of a queue system has allowed us to handle background tasks efficiently, ensuring a smooth user experience.

Additionally, migrating to Fastify and PostgreSQL has provided us with a more robust and high-performance backend. These improvements are crucial as we prepare our startup MVP for real-world usage, ensuring it can handle increased load and provide a secure, responsive service to our users.

All the code can be found on branch feat/BLOG-6-security-scaling-and-performance.

