Cache Coherence Problems: Techniques and Approaches for Resolution

Abhilash Kumar

Abhilash Kumar

In modern multi-core and distributed computing systems, cache coherence is a critical challenge that can significantly impact performance and data integrity. Cache coherence problems occur when multiple processors have local caches of shared data, leading to inconsistencies between these caches. When one processor updates its cache, other caches may still hold outdated copies of the data, resulting in incorrect program execution or delays due to synchronization.

Let’s understand the cache coherence problem with an example:

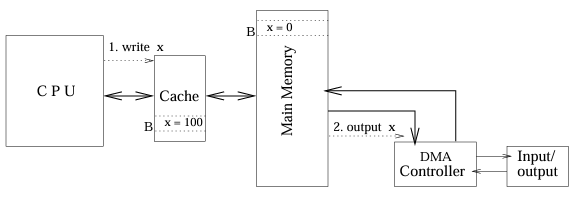

For a single processor system with cache, main memory (MM), and a DMA controller:

Let's assume a cache write-back policy.

If the CPU writes x = 100 to the cache and the output device reads x through the DMA controller, then the value received for x at the output device is 0 (the old/stale value of x).

This causes data inconsistencies in the memory system.

However, if the cache write policy is write-through, then there will be no such data inconsistency.

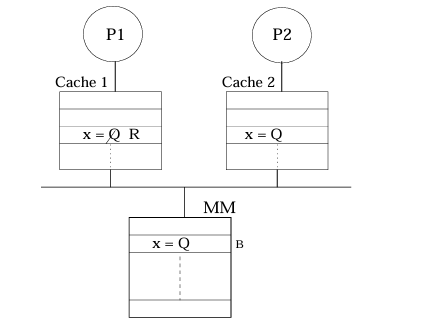

For multiprocessor system-

More than one processor (P1 and P2) may share item x (let's say x = Q) of block B.

If P1 updates x to R in its cache, P2’s cache may still have the old value of x = Q.

This results in inconsistency in the cached copies of block B.

To continue further we need to know what is Write-Back and Write-Through policies.

1) Write-Back Policy:-

In the write-back policy, data is written to the cache first, and the memory is updated only when the cache block is replaced. This reduces the number of memory writes, improving performance, but increases the risk of data inconsistency if a failure occurs before the memory is updated.

2) Write-Through Policy:-

In the write-through policy, data is written to both the cache and memory simultaneously whenever an update occurs. While this ensures data consistency between the cache and memory, it can lead to higher memory traffic, impacting performance.

How to enforce Cache Coherence?

For enforcing cache coherence, a number of schemes, protocols, and hardware are available.

The following schemes are considered for enforcing cache coherence:

Write-invalidate

Write-update

Write-once

Let’s explain each approaches in detail:-

Write-invalidate

In the write-invalidate scheme, a write to shared data X/B by processor-

Invalidates all other cached copies of B (but not the MM copy). However, for write-through, X in MM will be modified.

A subsequent request for B, by another processor other than P2, is treated as a miss.

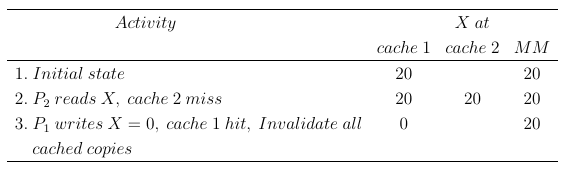

Let’s understand write-invalidate with an example-

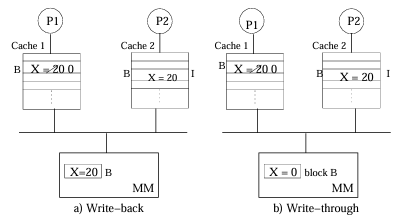

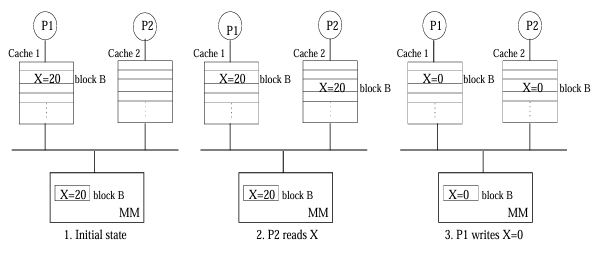

Consider the above picture.

Cache 1 initially holds block B. Assume initially X = 20 in B.

The cache write policy is write-back.

The following points to X in cache 1, cache 2, and MM after different activities.

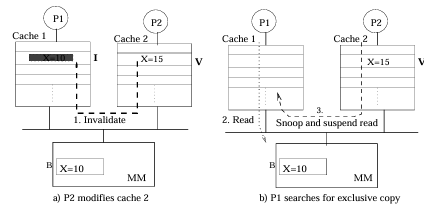

Now, say (Step 4) P2 reads X after P1 invalidates B (Step 3), it is a cache 2 miss.

- How will P2 get the latest copy of B? (Hint: Snooping Protocol)

Write-Update

It is also referred to as the write-broadcast scheme.

In this scheme, an update in block B of Pi’s cache updates all cached copies of B and the MM copy.

Any subsequent request for B from any processor is treated as a hit.

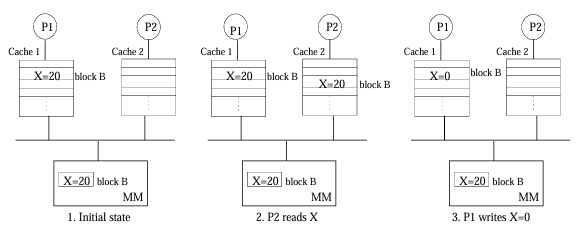

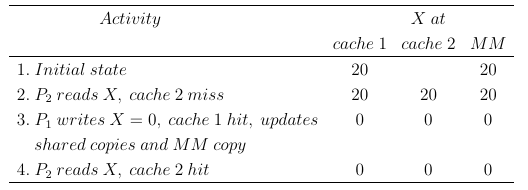

Consider the above picture.

Cache 1 initially holds block B.

Assume X in B is initially 20.

The following points to X in cache 1, cache 2, and MM after different activities.

The policy here is write-back (however, suppressed by write-through of write-update).

Write-update scheme requires high bandwidth as it requires broadcasting.

In write-update, each write is followed by an update. Therefore, consecutive writes to the same block, with no read, require multiple write broadcasts.

In reality, a hybrid scheme (write-update and write-invalidate scheme) is used.

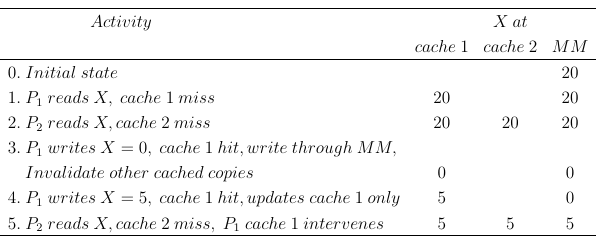

Write-once

The principle of the write-once scheme is:

If a block B in cache Ci at processor Pi is written for the first time, all cached copies of B are declared invalid (I) and the MM copy is updated.

If next time B is updated in Ci, no invalidation message is generated. The MM write follows the write-back policy.

When Pj tries to access B, B is supplied to Cj from Ci. The MM copy is also updated.

Write-once reduces overall bus traffic for consecutive writes by a processor to B.

The X in cache 1, cache 2, and MM after different activities in write-once follows.

In cache coherence, the write-invalidate scheme shows better performance. However, for large block sizes, the write-invalidate scheme reports false sharing misses.

What is false sharing miss?

Consider variables X1 and X2 are in the same block B.

Block B is in cache 1 and cache 2 at time 0.

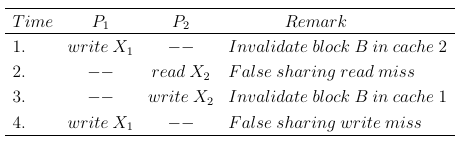

Assume the following activities, in sequence.

False sharing miss is identified at time 2 and 4.

It is due to writes to two different words (X1 and X2) of B by two processors P1/P2.

For small-scale multiprocessors, we adopt a hardware solution for cache coherence.

Two major hardware based coherence solutions are:

Snoopy Protocol

Directory based protocol

What is Snoopy Protocol?

The Snoopy Protocol is a cache coherence mechanism used in multiprocessor systems.

It works by having each cache monitor (or "snoop") the shared bus to track memory operations by other processors.

When a processor modifies a cached block, the protocol ensures consistency by invalidating or updating the block in other caches, preventing stale data.

This approach helps maintain coherence efficiently in small-scale systems.

I hope you found my blog helpful! I'd love to hear your thoughts, so feel free to leave any feedback in the comments. Don’t forget to subscribe to the Hashnode newsletter to stay updated whenever I publish new content.

Have a great day 🙌!

Subscribe to my newsletter

Read articles from Abhilash Kumar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by