Enhancing Data Quality in Snowflake Using a Streamlit Framework

Mohammed Zeeshan

Mohammed Zeeshan

Introduction

In today's data-driven landscape, organizations rely heavily on accurate and reliable data to make informed decisions. Snowflake, as a leading cloud-based data platform, offers robust solutions for managing vast amounts of data. However, ensuring the quality of this data remains a critical challenge.

To address this, I have developed the Snowflake Data Quality Assessment Framework, a Streamlit application designed to assess and enhance data quality within Snowflake databases. This article provides a comprehensive guide on how to use this framework to evaluate your data's quality and improve your data governance practices.

Demo Video: Watch the Framework in Action

Background on Data Quality

Data quality refers to the condition of a set of values of qualitative or quantitative variables. High-quality data is crucial for:

Accurate Analytics: Ensuring business intelligence reports and dashboards reflect true insights.

Regulatory Compliance: Meeting legal and industry standards for data handling.

Operational Efficiency: Reducing errors and inefficiencies in business processes.

Customer Satisfaction: Providing reliable services and products to clients.

Key Dimensions of Data Quality:

Accuracy: The degree to which data correctly describes the real-world object or event.

Completeness: The extent to which all required data is known.

Consistency: The absence of differences when comparing two or more representations of a thing.

Timeliness: The degree to which data is up-to-date and available within a useful time frame.

Uniqueness: No data record is duplicated.

Validity: Data conforms to the syntax (format, type, range) of its definition.

These dimensions are based on the "Dimensions of Data Quality" research paper, which outlines essential criteria for evaluating data quality.

The Snowflake Data Quality Assessment Framework

The framework is an interactive Streamlit application that connects to your Snowflake database to perform data quality assessments based on the key dimensions mentioned above.

Features:

Data Maturity Score: Provides an overall score representing your data's quality level.

Detailed Analysis: Breaks down the assessment by each data quality dimension.

User-Friendly Interface: Easy navigation and interpretation of results.

Real-Time Assessment: Connects directly to your Snowflake database for up-to-date evaluations.

Extensibility: Ability to add more data quality checks as per organizational needs.

Technologies Used:

Python: The core programming language for the application.

Streamlit: For building the interactive web application.

Pandas: For data manipulation and analysis.

Installation and Setup

Prerequisites

- Access to a Snowflake database with appropriate permissions.

Steps

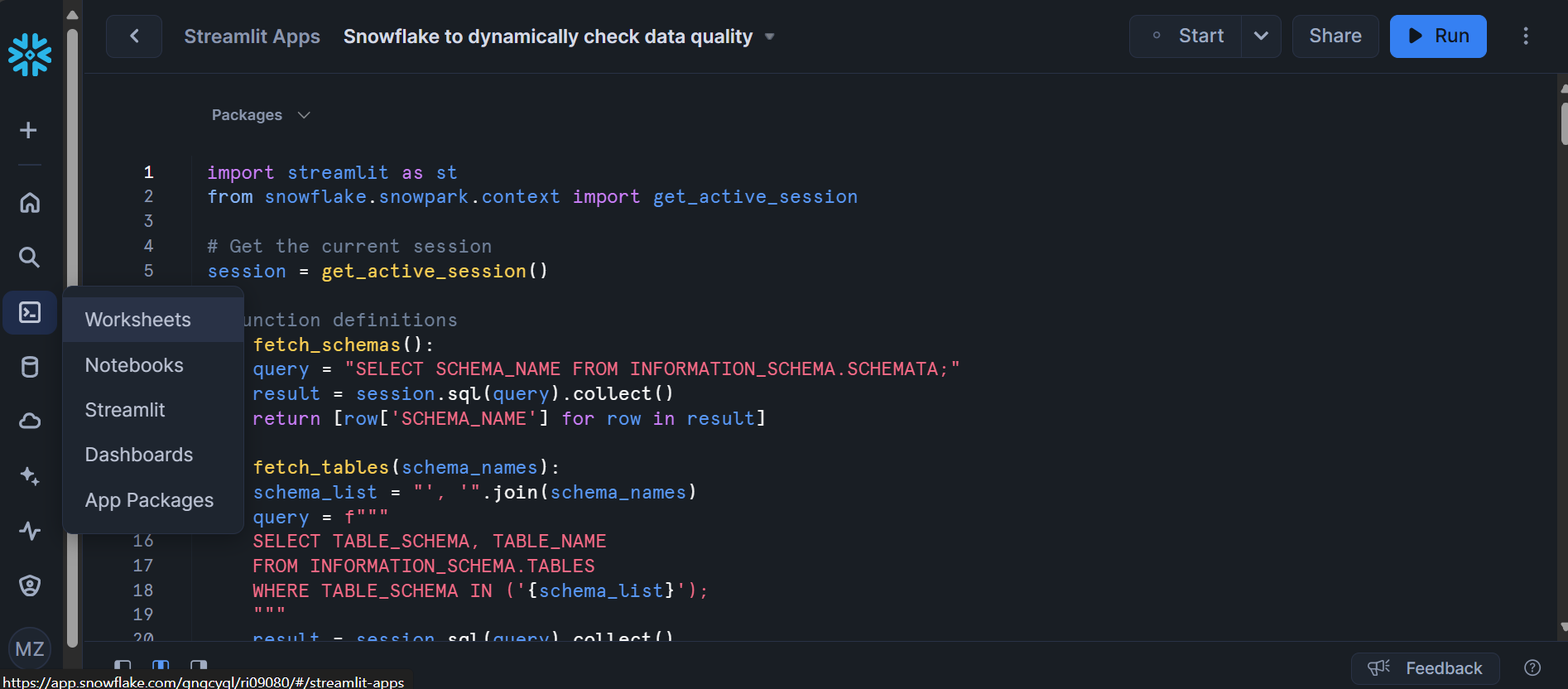

Clone the Repository

bashCopy codegit clone https://github.com/TheMohammedZeeshan/snowflake-data-quality-framework.git cd snowflake-data-quality-frameworkPaste the code in Snowflake Streamlit section

And Thats it!!!

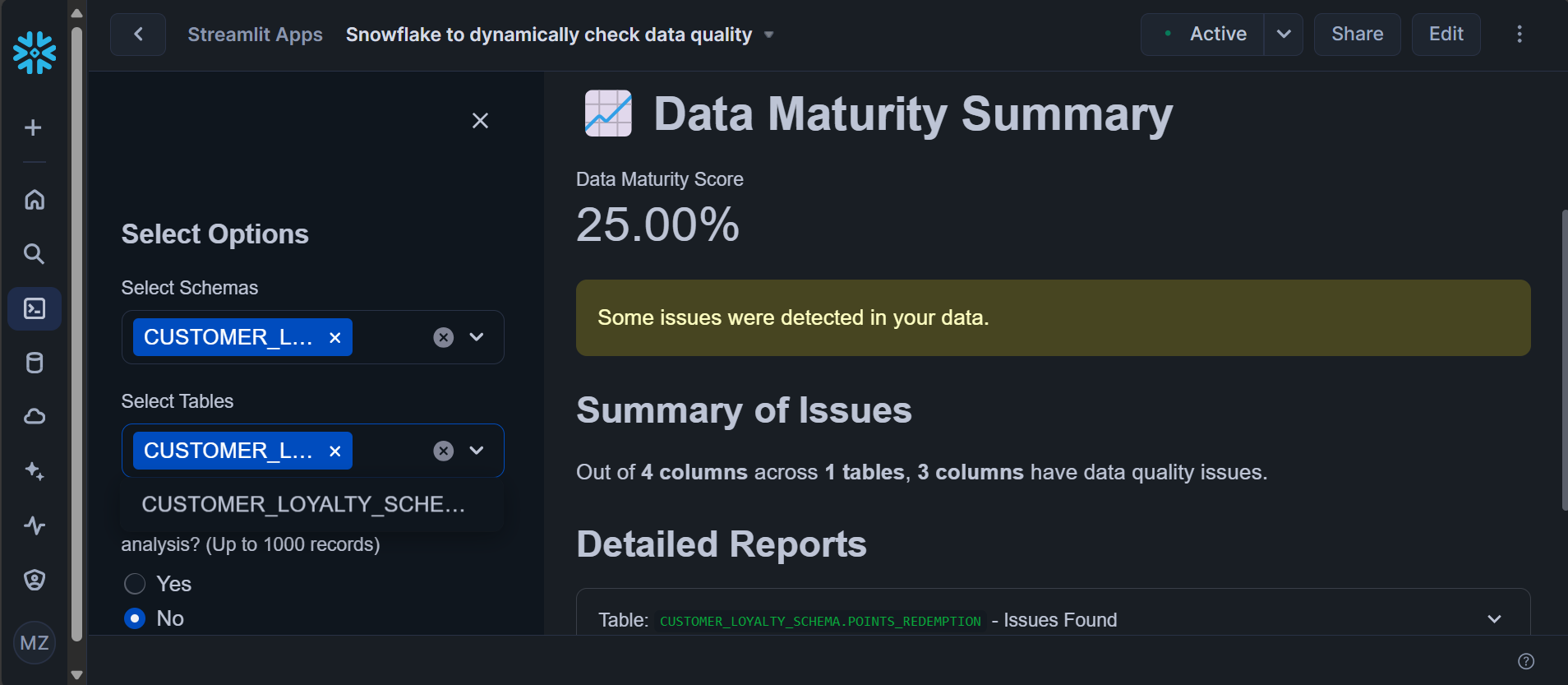

Using the Framework

Step 1: Connect to Snowflake

Upon launching the application, you'll be prompted to connect to your Snowflake database.

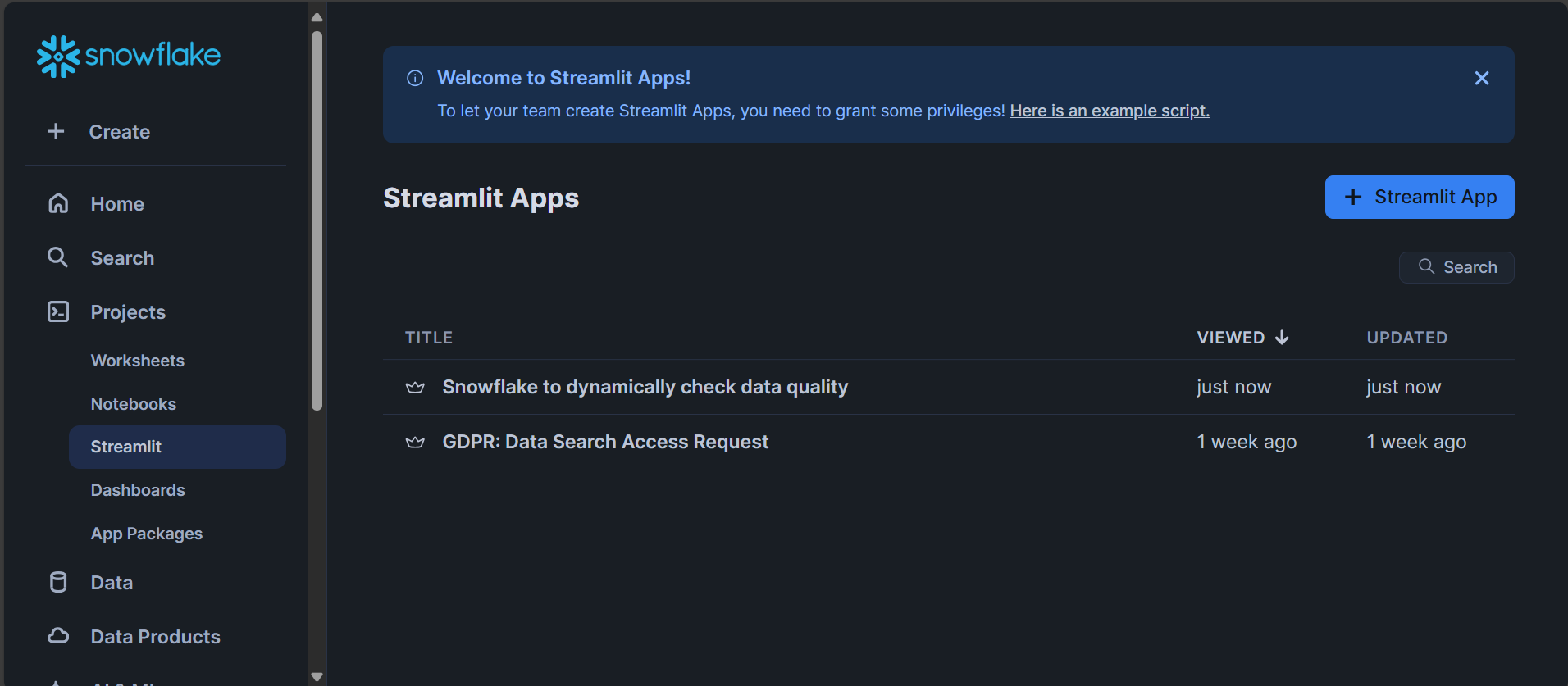

Navigate to Streamlit section under Projects

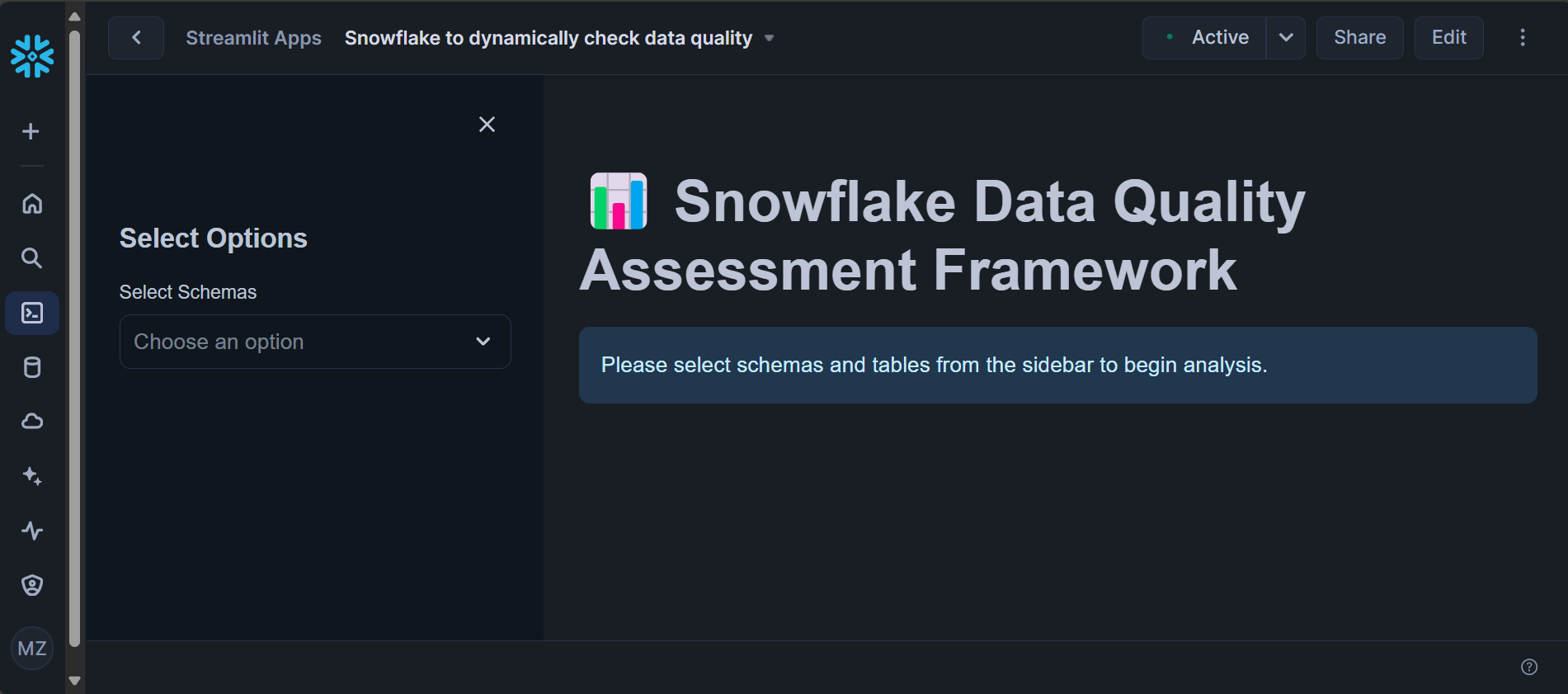

Step 2: Select Schema and Table

Choose the schema and table you wish to assess from dropdown menus.

Step 3: Run Data Quality Assessment

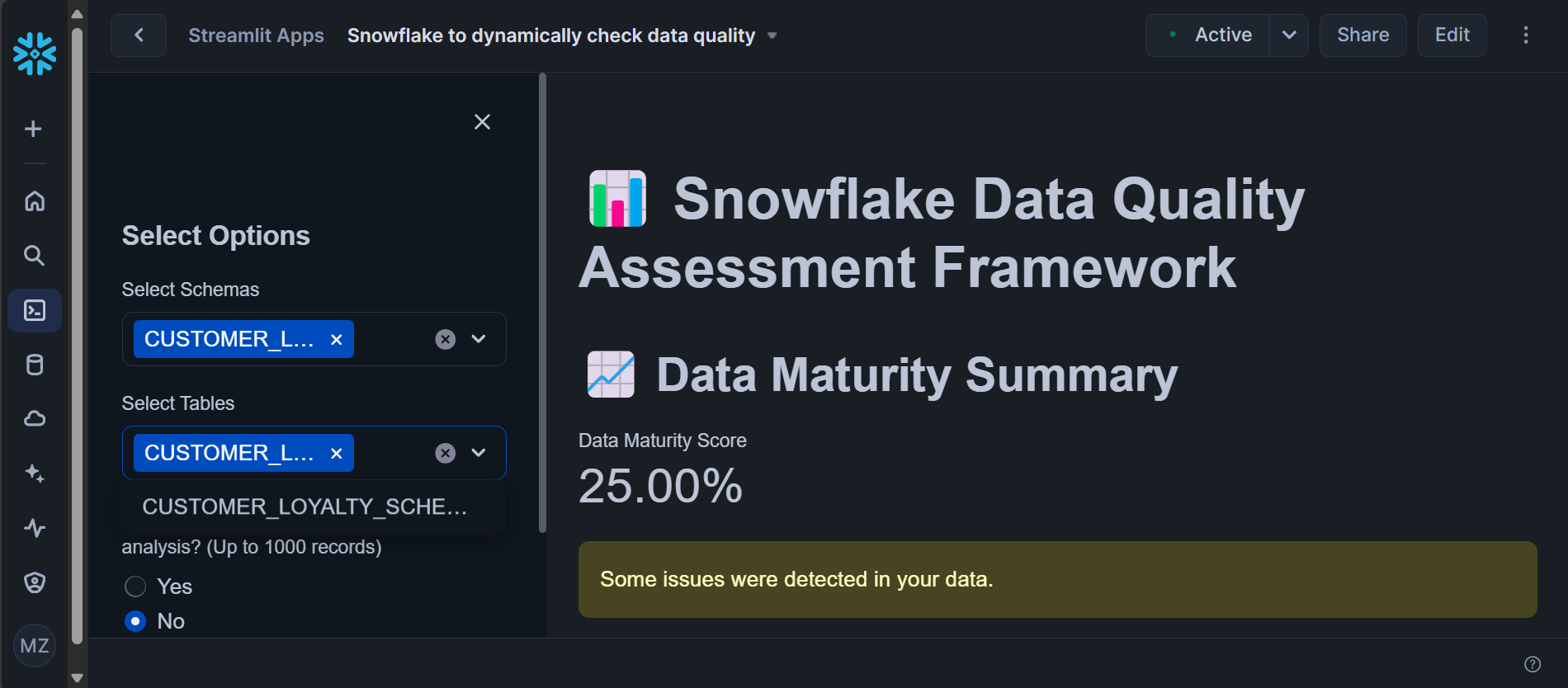

Upon Schema’s and Tables selection, your assessment automatically runs

The application will execute various data quality checks on the selected table.

Step 4: View Results

Once the assessment is complete, the application will display:

Data Maturity Score: Overall score based on all dimensions.

Detailed Reports: Individual scores and findings for each data quality dimension.

Understanding the Results

Data Maturity Score

Definition: An aggregate score representing the overall quality of your data.

Interpretation:

90-100: Excellent data quality.

70-89: Good data quality with some areas for improvement.

50-69: Fair data quality; significant improvements needed.

Below 50: Poor data quality; immediate action required.

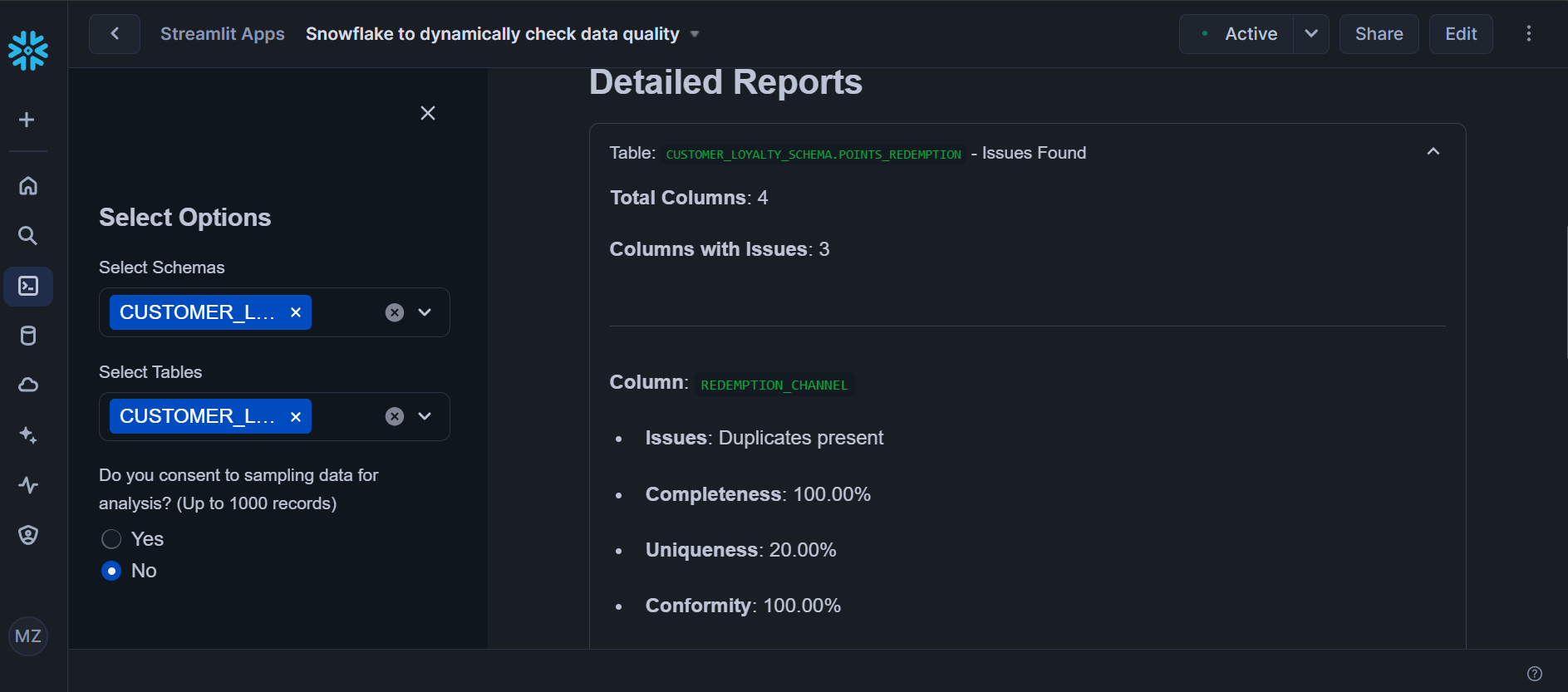

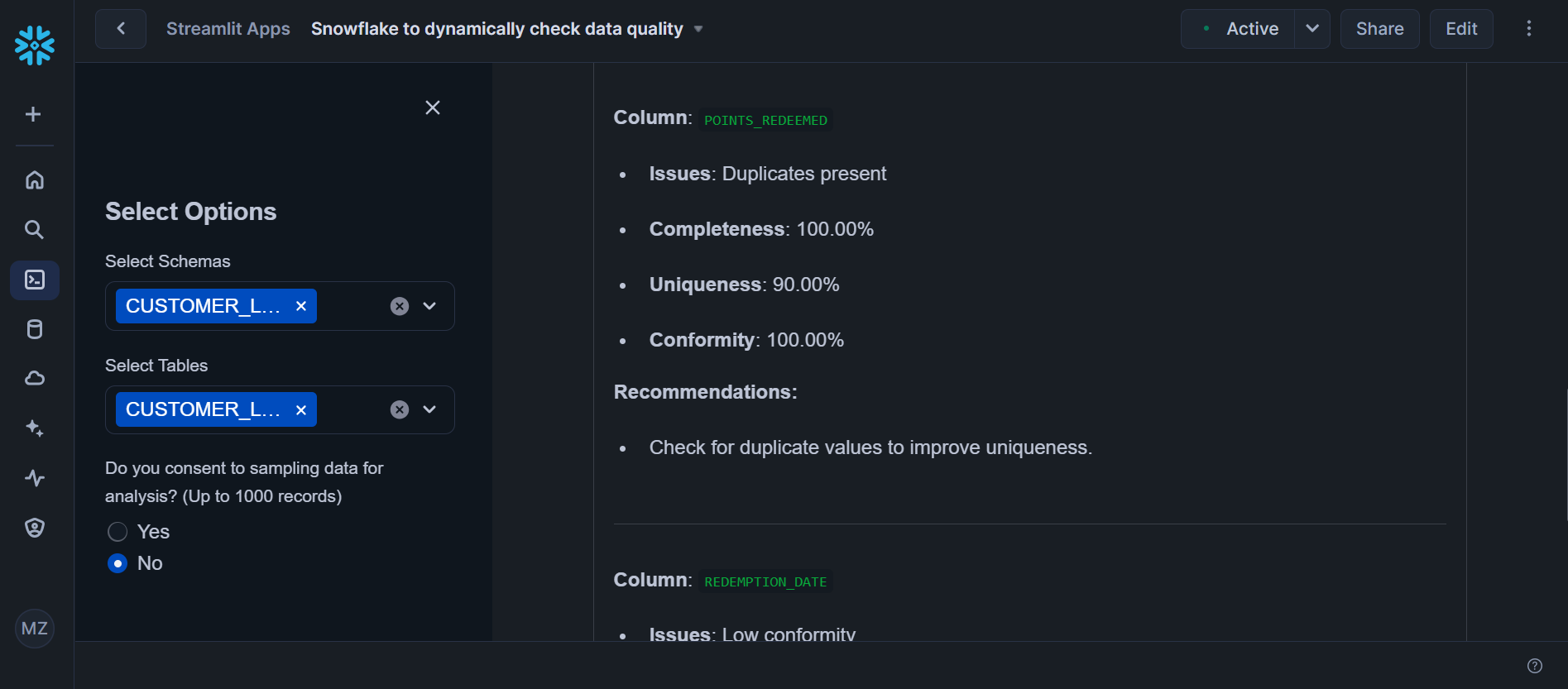

Detailed Dimension Reports

Accuracy

What It Checks: Correctness of data values.

How to Improve: Validate data against reliable sources; implement data validation rules.

Completeness

What It Checks: Presence of all required data fields.

How to Improve: Ensure mandatory fields are not left blank; set default values where appropriate.

Consistency

What It Checks: Uniformity of data across records and tables.

How to Improve: Standardize data formats; enforce data type consistency.

Timeliness

What It Checks: Data is up-to-date and reflects the current state.

How to Improve: Implement regular data refresh schedules; monitor data latency.

Uniqueness

What It Checks: Absence of duplicate records.

How to Improve: Use primary keys and unique constraints; perform de-duplication processes.

Validity

What It Checks: Data conforms to defined formats and rules.

How to Improve: Define and enforce data schemas; use validation checks during data entry.

Use Cases and Benefits

Data Governance

Benefit: Enhances data governance by providing insights into data quality issues.

Application: Helps in setting data standards and policies within the organization.

Regulatory Compliance

Benefit: Assists in meeting compliance requirements by ensuring data accuracy and completeness.

Application: Useful for industries with strict data regulations, such as finance and healthcare.

Business Intelligence

Benefit: Improves the reliability of analytics and reporting.

Application: Enables better decision-making based on high-quality data.

Operational Efficiency

Benefit: Reduces errors and inefficiencies in business processes.

Application: Streamlines operations by identifying and correcting data quality issues.

Conclusion

Ensuring high data quality is essential for leveraging the full potential of your data assets. The Snowflake Data Quality Assessment Framework provides a practical and efficient way to evaluate and enhance the quality of your data stored in Snowflake.

By regularly using this framework, organizations can:

Improve Data Trustworthiness: Build confidence in data-driven decisions.

Enhance Data Governance: Establish robust data management practices.

Increase Operational Efficiency: Reduce costs associated with poor data quality.

Call to Action:

Try the Framework: GitHub Repository

Watch the Demo: YouTube Video

Contribute: Feel free to contribute to the project by submitting issues or pull requests.

References

Dimensions of Data Quality

dama-uk.orgSnowflake Documentation

https://docs.snowflake.com/Streamlit Documentation

https://docs.streamlit.io/Pandas Documentation

https://pandas.pydata.org/docs/

Contact Information:

Author: Mohammed Zeeshan

Email: reach2zeeshan@gmail.com

GitHub: TheMohammedZeeshan

Subscribe to my newsletter

Read articles from Mohammed Zeeshan directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Mohammed Zeeshan

Mohammed Zeeshan

I am a seasoned Data Engineer with over 10 years of experience in building scalable data pipelines and applications. I specialize in data warehousing, GDPR compliance solutions, and cloud-based platforms like Snowflake and AWS. My recent projects involve creating interactive web apps using Streamlit to simplify complex data processes