Chapter 4: Tokens??

Sawez Faisal

Tokens are the smallest meaningful units of the source code (the program written in the high-level language).

So if your language has an if keyword, it should have a matching token such as “IF”. This lets the compiler recognize that stream of characters in the source code and pass it on for further processing.

How do we generate these tokens?

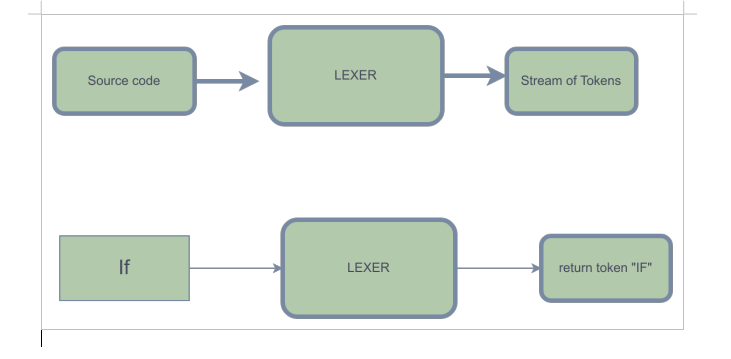

For this purpose we have what is called a lexer.

A lexer simply reads the source code, from a file or wherever you have stored it, and matches it against a set of predefined tokens.

It then stores these tokens in a list, which is passed on to the next phase for processing.
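To make the idea concrete, here is a minimal sketch of such a lexer. It is not the lexer this series builds later; the names `SketchType`, `SketchToken`, and `tokenize` are made up for illustration, and it assumes that `if` is the only keyword and that any unrecognised character is a one-character operator.

```cpp
#include <cctype>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical token categories, used by this sketch only.
enum class SketchType { Keyword, Identifier, Number, Operator, EndOfFile };

struct SketchToken {
    SketchType type;     // which category the lexeme fell into
    std::string lexeme;  // the exact characters taken from the source
    int line;            // line on which the lexeme starts
};

// Walks the source left to right and collects the tokens it recognises.
// Assumptions: "if" is the only keyword, and every character that is not
// whitespace, a letter, a digit, or '_' is a single-character operator.
std::vector<SketchToken> tokenize(const std::string& source) {
    std::vector<SketchToken> tokens;
    int line = 1;
    std::size_t i = 0;
    while (i < source.size()) {
        unsigned char c = static_cast<unsigned char>(source[i]);
        if (c == '\n') { ++line; ++i; continue; }
        if (std::isspace(c)) { ++i; continue; }

        std::size_t start = i;
        if (std::isalpha(c) || c == '_') {
            // Consume a whole word, then decide: keyword or identifier.
            while (i < source.size() &&
                   (std::isalnum(static_cast<unsigned char>(source[i])) ||
                    source[i] == '_')) {
                ++i;
            }
            std::string word = source.substr(start, i - start);
            SketchType type =
                (word == "if") ? SketchType::Keyword : SketchType::Identifier;
            tokens.push_back({type, word, line});
        } else if (std::isdigit(c)) {
            // Consume a run of digits as one number token.
            while (i < source.size() &&
                   std::isdigit(static_cast<unsigned char>(source[i]))) {
                ++i;
            }
            tokens.push_back(
                {SketchType::Number, source.substr(start, i - start), line});
        } else {
            ++i;
            tokens.push_back(
                {SketchType::Operator, std::string(1, source[start]), line});
        }
    }
    // Mark the end of input so later phases know where to stop.
    tokens.push_back({SketchType::EndOfFile, "", line});
    return tokens;
}
```

Calling `tokenize("if x > 10\ny")` on this sketch yields six tokens: a keyword, an identifier, an operator, a number, an identifier on line 2, and the end-of-file marker.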

Since C++ is my go-to language, I am using an enum to group all of these tokens in one place, inside the tokens.hpp header.

What are enums?

An enum is a user-defined data type that lets you define a set of named integral constants. This makes your code more readable and manageable by grouping related values under a single type. For example, you might use an enum to represent days of the week, colors, or states in a finite state machine.

    enum Color {
        RED,
        GREEN,
        BLUE
    };

    Color favoriteColor = GREEN;

Now the variable favoriteColor can only be assigned one of the enumerators listed inside the enum Color (RED, GREEN, or BLUE). Assigning anything else, such as a plain integer, needs an explicit cast.
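A small sketch can show what "named integral constants" means in practice. The helper names `asInt` and `fromInt` are hypothetical and exist only for this illustration.

```cpp
enum Color { RED, GREEN, BLUE };

// Enumerators of an unscoped enum are numbered from 0 unless you assign
// values yourself, and they convert implicitly to int.
int asInt(Color c) { return c; }

// Going the other way needs an explicit cast: `Color c = 1;` does not compile.
Color fromInt(int value) { return static_cast<Color>(value); }
```

Here `asInt(RED)` is 0 and `asInt(BLUE)` is 2, while `fromInt(1)` gives back `GREEN`.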

This is what a typical enum would look like in our case:

    enum TokenType {
        TOKEN_IDENTIFIER,
        TOKEN_NUMBER,
        TOKEN_OPERATOR,
        TOKEN_KEYWORD,
        TOKEN_END_OF_FILE
    };

Every token carries some basic information, which we will bundle together in a class:

- the token type (taken from the enum)
- the actual word (the lexeme)
- the line number (useful for debugging messages)

Here is a basic layout of what the class will look like:

    #include <string>
    #include <utility>

    class Token {
    public:
        // The enum TYPE has been enclosed within a namespace TOKEN.
        // This prevents naming conflicts that might occur later as the code base grows.
        // You can skip it if you are implementing a simple language.
        TOKEN::TYPE type;
        std::string lexeme;
        int line = -1;

        // Constructor
        Token(TOKEN::TYPE type, std::string lexeme, int line)
            : type(type), lexeme(std::move(lexeme)), line(line) {}

        // Method to convert the token details to a string
        std::string toString() const {
            return "tokenType: " + std::to_string(static_cast<int>(type)) +
                   " lexeme: " + lexeme +
                   " lineNo.: " + std::to_string(line);
        }
    };
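As a usage sketch, the snippet below puts the class next to one possible definition of `TOKEN::TYPE`. The enumerator names inside `TOKEN::TYPE` and the helper `makeIfToken` are assumptions for illustration; the actual tokens.hpp may name them differently.

```cpp
#include <string>
#include <utility>

// Assumption: the earlier enum, renamed TYPE and wrapped in namespace TOKEN.
namespace TOKEN {
enum TYPE { IDENTIFIER, NUMBER, OPERATOR, KEYWORD, END_OF_FILE };
}

class Token {
public:
    TOKEN::TYPE type;
    std::string lexeme;
    int line = -1;

    Token(TOKEN::TYPE type, std::string lexeme, int line)
        : type(type), lexeme(std::move(lexeme)), line(line) {}

    // Prints the enum as its underlying integer value.
    std::string toString() const {
        return "tokenType: " + std::to_string(static_cast<int>(type)) +
               " lexeme: " + lexeme +
               " lineNo.: " + std::to_string(line);
    }
};

// Hypothetical helper: the token a lexer might emit for an `if` on line 3.
Token makeIfToken() { return Token(TOKEN::KEYWORD, "if", 3); }
```

With this enumerator order `KEYWORD` has the value 3, so `makeIfToken().toString()` returns `tokenType: 3 lexeme: if lineNo.: 3`.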

That’s it. This tokens file will be useful when we discuss the lexing phase.

Any suggestions are highly appreciated!

Next up, we will have a look at the grammar of the language.

Till then, if you are using C++, you could read up on namespaces and how we can use them with enums to prevent naming conflicts.
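As a starting point for that reading, here is a small sketch of the kind of conflict a namespace avoids. The `NODE` namespace and the `isEnd` helpers are hypothetical and only serve the illustration.

```cpp
// Two unscoped enums in the same scope cannot both declare an enumerator
// named END. Wrapping each enum in its own namespace keeps the names apart.
namespace TOKEN {
enum TYPE { IDENTIFIER, NUMBER, END };
}

namespace NODE {  // hypothetical second enum, e.g. for syntax-tree nodes
enum TYPE { EXPRESSION, STATEMENT, END };
}

// Both END enumerators coexist because each is qualified by its namespace.
bool isEnd(TOKEN::TYPE t) { return t == TOKEN::END; }
bool isEnd(NODE::TYPE n) { return n == NODE::END; }
```

Since C++11, `enum class` gives similar protection without a namespace, because its enumerators are always accessed through the enum's own name.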

