Demystifying Concurrency

Timon Jucker

I often encounter situations where software engineers have differing mental models of concurrency. To make matters worse, some terms are used (almost) synonymously, like async and parallel. There are also paired definitions, such as data parallelism versus task parallelism and implicit versus explicit parallelism, that seem to suggest there are multiple kinds of concurrency. There is a wealth of content and numerous definitions on this topic, but I have yet to find a single explanation or definition that clearly delineates the differences. Most explanations only add to the confusion, and even the best ones remain somewhat unclear.

Breaking Down the Confusion: Towards a Common Understanding of Concurrency

There are reasons for this confusion. The most important one is probably that there are multiple incompatible mental models and definitions for these terms in different contexts. For example, in the context of inter-service communication, async describes the non-blocking nature of communication. In the context of programming languages, however, async/await is used to express asynchronous task execution as code that reads sequentially, as if it were blocking. Regardless of this incompatibility, the two meanings get mixed and matched without much thought.

It's telling that the Wikipedia pages for Concurrency (computer science) and Asynchrony (computer programming) explicitly mention their context. However, even within computer science, there are different interpretations of concurrency. The most obvious contexts are programming languages and distributed systems. But there are other contexts as well, such as Asynchronous circuits and networking.

Many attempts have been made to explain the intricacies of concurrency, asynchrony, and parallel programming. And there are even good ones among them. However, even when a good explanation gets attention, like the talk Concurrency is not parallelism - The Go Programming Language, the misleading parts, such as the confusing title, also stick with the public. This has led many to mistakenly believe that concurrency and parallelism are somehow opposites, which the talk never intended to suggest.

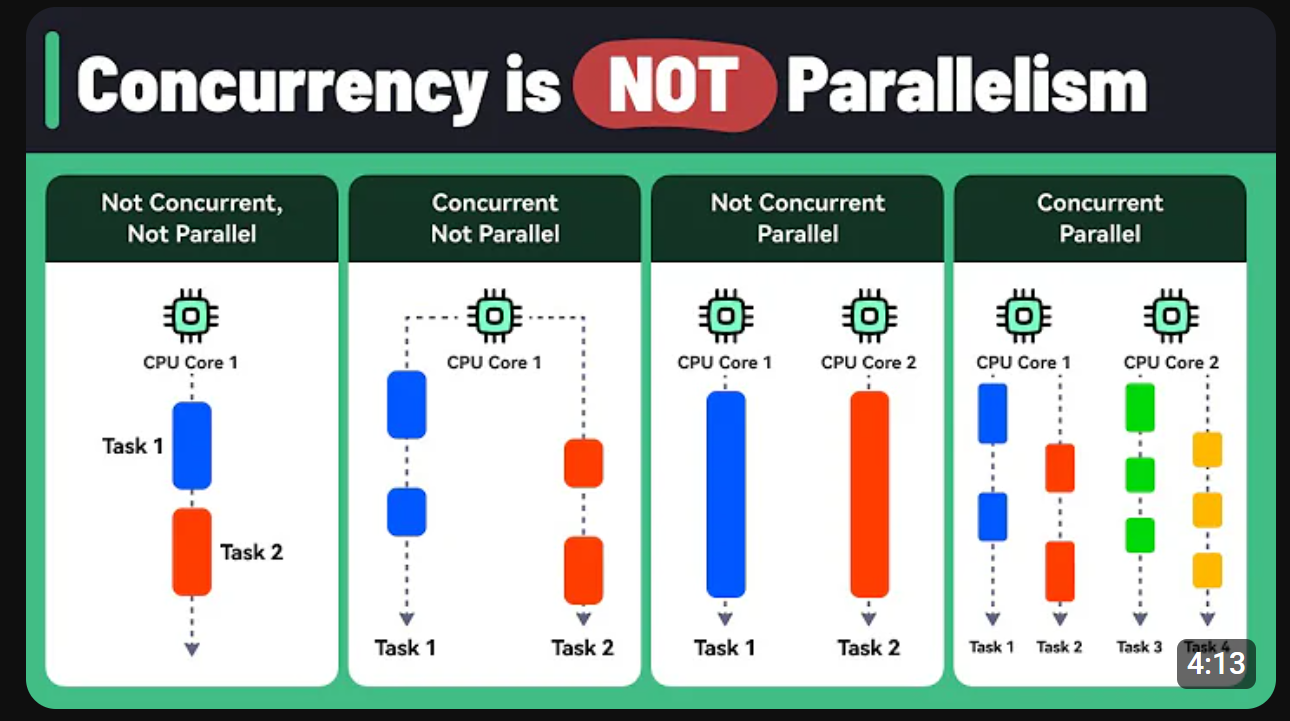

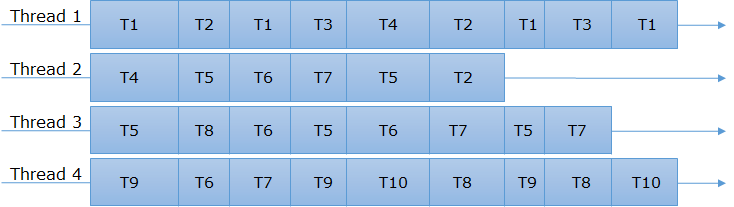

To add to the confusion, some popular sources contradict basic implementations in well-known languages. For example, take a recent YouTube video from ByteByteGo.

I think it does a good job of separating the different execution modes. However, there is a confusing and contradictory part in the third card of the preview image, which claims there is parallel computing without concurrency. This is exactly the scenario that data structures with the prefix "concurrent" (e.g., ConcurrentDictionary in C# or ConcurrentHashMap in Java) are optimized for. How much is this definition worth if it contradicts how concurrency is interpreted by some of the most popular languages?

It does not help that concurrency is inherently a complex topic. Each language, like C#, Java, JavaScript, Python, Rust, and Swift, has its own ways and patterns, which fill entire books. Then there is reactivity, a closely related topic that can be almost as complex as concurrency. Articles like What the hell is Reactive Programming anyway?, with all the references they mention, show how quickly you get into quite complicated topics. And the popular Reactive Manifesto demonstrates that reactivity also extends into the distributed systems realm, where the connections to concurrency become more obvious.

All this is unfortunate because having a shared understanding of concurrency is crucial for building stable and efficient software. So in this article, I want to propose a definition of concurrency specifically in the context of programming languages.

Having a solid mental model not only helps in communicating ideas more precisely but more importantly gives you the tools to understand what others are talking about and to identify misunderstandings.

A Tale of Two Models: Parallel vs. Asynchronous Programming

Before I share my definition of concurrency, I would like to introduce it with a little story:

John, a software developer at a tech startup, was puzzled by the difference between parallel and asynchronous programming. Seeking clarity, John approached his tech lead, Dr. Carter, one morning.

"Dr. Carter, I'm confused. Parallel and async programming seem similar, but I know they're different. Can you explain?"

Dr. Carter smiled. "Think of it this way: Parallel programming is like having multiple people do your tasks simultaneously. One person handles laundry, another cooks, and another cleans. They work at the same time, using multiple CPU cores. It's great for CPU-bound tasks."

"Got it," John said, feeling a bit more confident. "But what about async programming?"

"Async programming is a bit different," Dr. Carter explained. "It's like you doing the laundry, but while the washing machine is running, you start cooking. You don't just sit and wait for the laundry to finish. Instead, you switch to another task that doesn't need you to be present the whole time."

John's eyes lit up. "So, parallel is about multiple workers at the same time, and async is about efficient multitasking during idle periods. Got it!"

Dr. Carter grinned. "Exactly. And here's a twist: When you combine both, you achieve the ultimate stage of concurrency. It's like having multiple people, each efficiently switching between tasks, making the most of both parallel execution and idle times. Concurrency is about managing multiple tasks at the same time, whether they're running simultaneously or not."

Excited, John returned to his desk, ready to explore the power of concurrency, understanding how to leverage both parallel and asynchronous programming to make their applications faster and more efficient than ever.

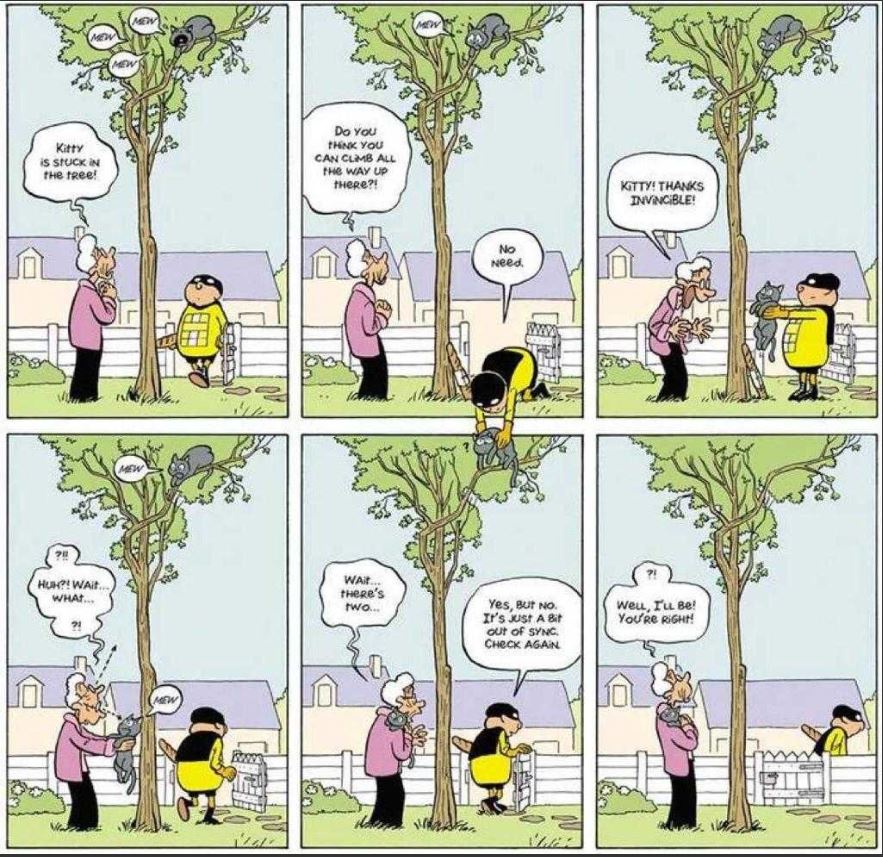

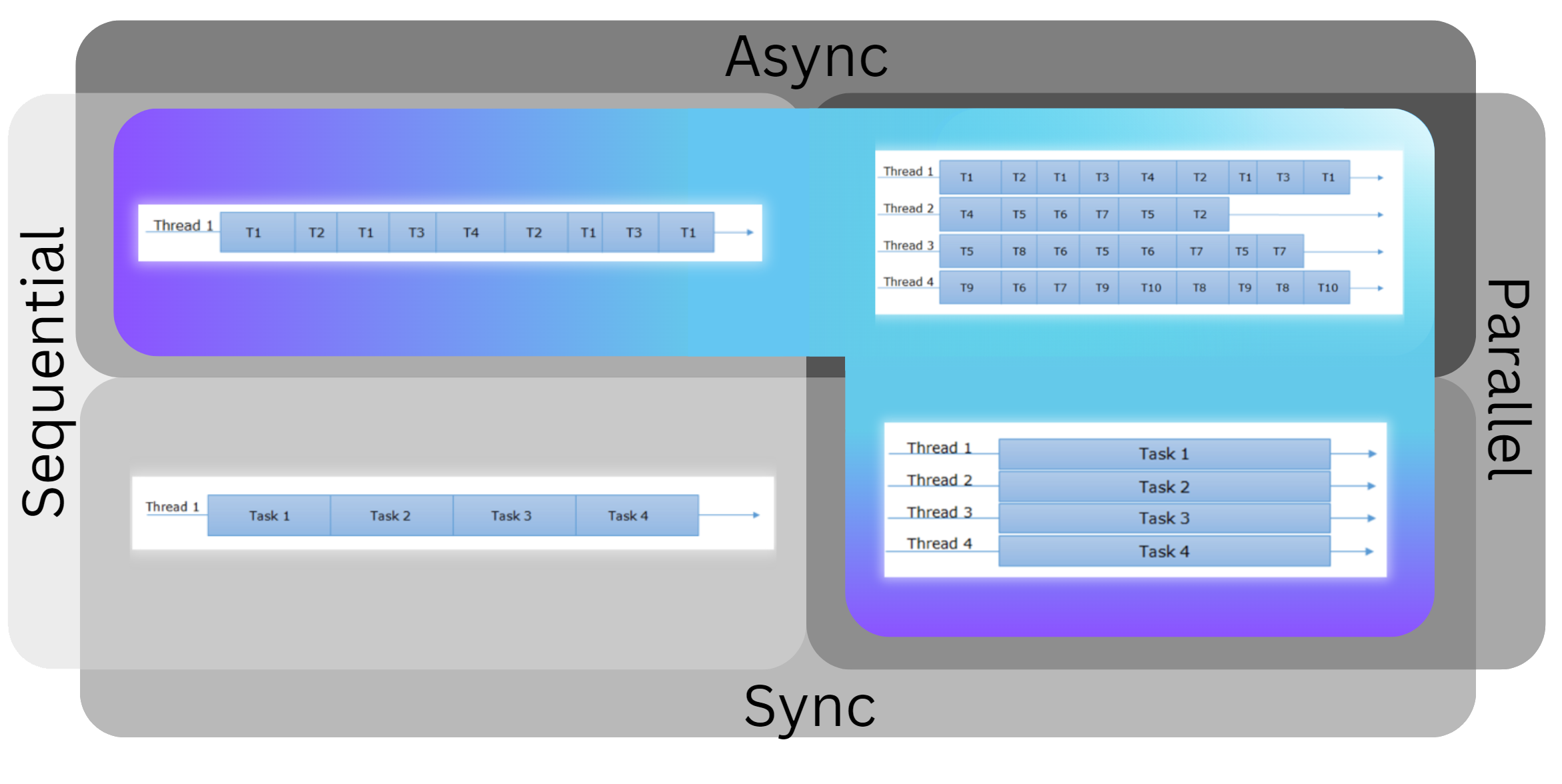

The next twist in this story would likely involve issues with race conditions and language-specific implementation details, but that is not the focus of this article. A better approach is to visualize the definition of concurrency as described in this story.

The Three Quadrants of Concurrency: A Visual Approach

What I want to emphasize is that there are different types of concurrency. It doesn't matter if the work is happening at the same time or if tasks are taking turns to make progress. The best and simplest definition I found is:

"Concurrency: Doing more than one thing at a time."

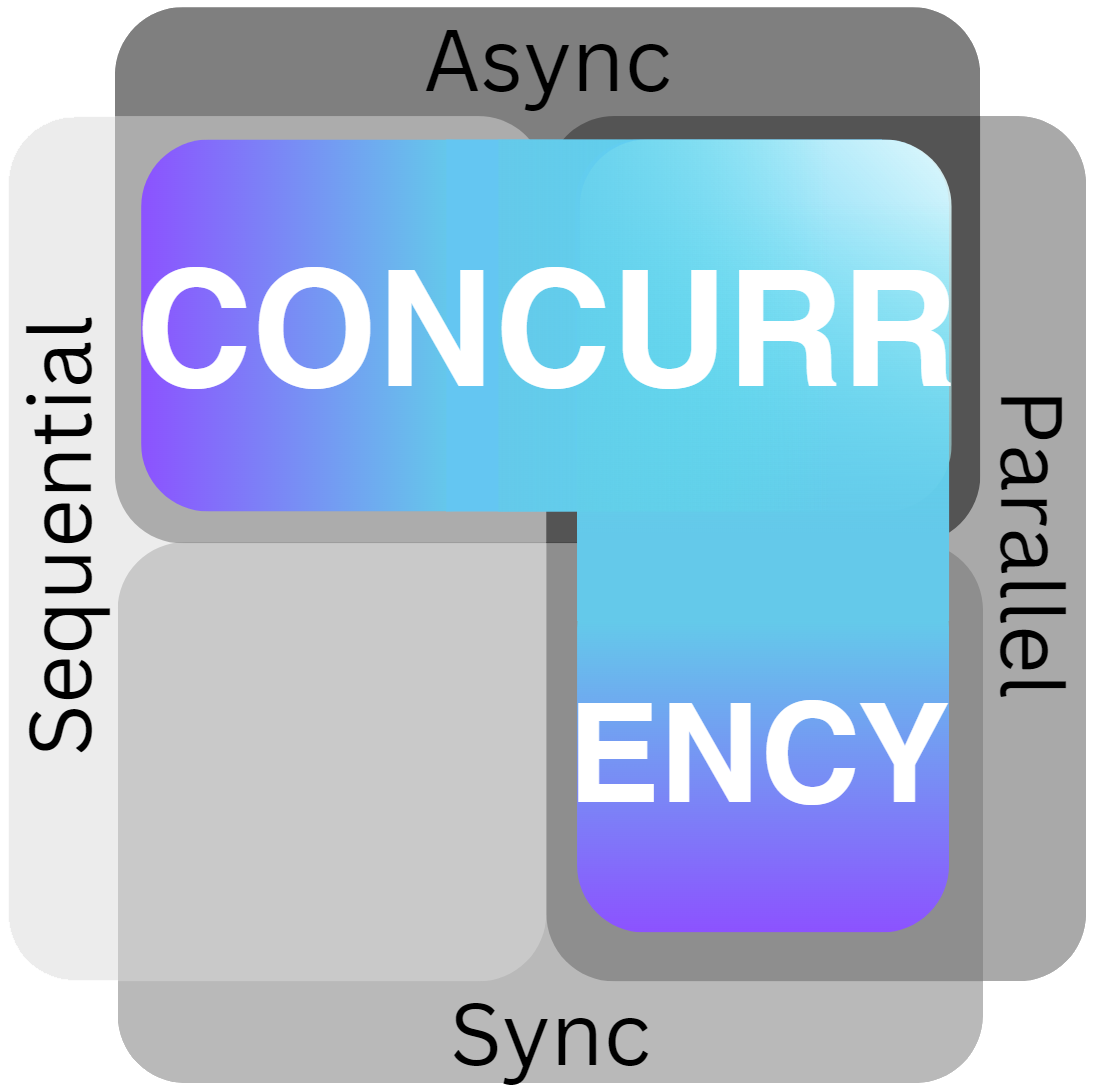

This visualization is inspired by Code Wala. To make it clearer, I added examples from the article into the quadrants. It's a good time to compare it to the visualization by ByteByteGo that I included earlier in this article.

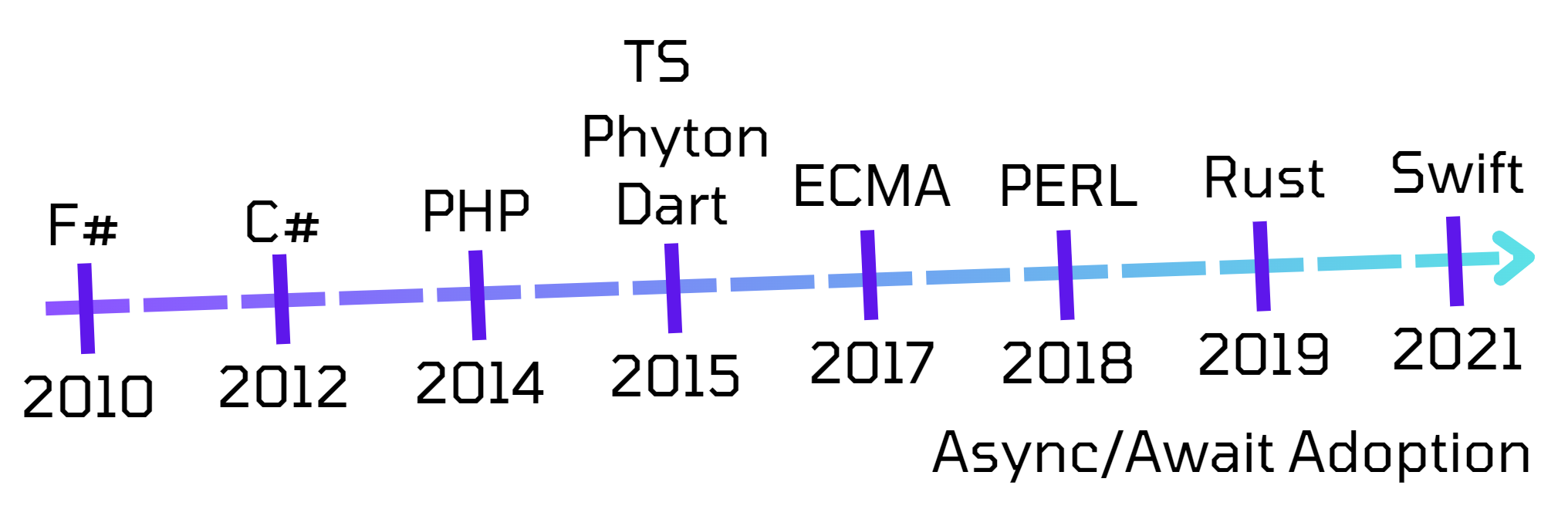

I propose that concurrency includes everything highlighted in blue. My visualization shows the two main aspects of concurrency: one axis separates sequential from parallel, and the other axis separates synchronous from asynchronous. More importantly, it includes async, which is often missing in definitions or explanations of concurrency. Classics like Clean Code: A Handbook of Agile Software Craftsmanship, The Pragmatic Programmer: Your Journey to Mastery, and Concurrent Programming in Java (I've checked many other books and papers) do not even mention async. While there were reasons for this omission a decade ago, they are no longer relevant. With the widespread adoption of async/await in most popular languages, ignoring async is no longer justifiable. In modern definitions of concurrency, including async is essential for a complete understanding.

The key is to understand that there are four distinct ways that code can be executed based on causality and only the first one is not concurrent:

Sequential and Synchronous (not Concurrent): This is the most straightforward method of running code. Tasks are executed one after another, in a specific order. Each task must complete before the next one begins. This is how most people learn to code and how they typically conceptualize program execution. Imagine reading a book, chapter after chapter, without interruption.

Sequential and Asynchronous (Concurrent): In this mode, tasks are still executed one after another, but the program can initiate a task and move on to the next one without waiting for the previous task to complete. This allows for more efficient use of time, especially when dealing with I/O-bound tasks. Imagine that while heating up the water, a single person can simultaneously cut the vegetables.

Parallel and Synchronous (Concurrent): Here, multiple tasks are executed simultaneously. Each task runs independently at the same time, leveraging parallel processing to complete them faster. This approach is particularly effective for CPU-bound tasks that require significant computational power. Imagine a factory floor where every worker does the same work in parallel.

Parallel and Asynchronous (Concurrent): This method combines the benefits of both parallel and asynchronous execution. Multiple tasks run simultaneously, and within each task, asynchronous operations can occur. This allows for highly efficient handling of both CPU-bound and I/O-bound tasks, optimizing the use of computational resources and time. Imagine a restaurant kitchen where cooks and washers work hand in hand to help each other be more efficient.
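As a rough illustration of the first two modes, here is a minimal Python sketch. The task names (`laundry`, `cooking`), the delays, and the helpers `run_sync`, `task`, and `run_async` are assumptions made for this example, and `time.sleep` and `asyncio.sleep` merely stand in for real I/O waits.

```python
import asyncio
import time


def run_sync(log):
    # Sequential and synchronous: each task finishes before the next starts.
    for name in ("laundry", "cooking"):
        log.append(f"start {name}")
        time.sleep(0.05)  # stand-in for an I/O wait; blocks the only thread
        log.append(f"end {name}")


async def task(name, delay, log):
    log.append(f"start {name}")
    await asyncio.sleep(delay)  # yields control while this task is "waiting"
    log.append(f"end {name}")


async def run_async(log):
    # Sequential and asynchronous: still one thread, but tasks take turns
    # whenever one of them is waiting.
    await asyncio.gather(
        task("laundry", 0.1, log),
        task("cooking", 0.02, log),
    )


sync_log, async_log = [], []
run_sync(sync_log)
asyncio.run(run_async(async_log))
print(sync_log)
print(async_log)
```

With these delays, the synchronous log shows each task ending before the next one starts, while the asynchronous log shows both tasks starting before either one ends, even though only a single thread is involved.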

Understanding these different execution modes is crucial for writing efficient and maintainable code, as it helps in selecting the right approach based on the nature of the tasks and the resources available.
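The parallel modes can be sketched in a similarly hedged way. The example below assumes threads as the parallel workers and gives each one its own `asyncio` event loop, so every worker can also switch between its own steps while waiting. The names `step`, `worker_tasks`, `worker`, and the `cook-1`/`cook-2` labels are hypothetical. Note that in CPython the global interpreter lock limits how much CPU-bound work threads can truly do in parallel, so a process pool would be the more usual choice for CPU-heavy tasks.

```python
import asyncio
import threading
from concurrent.futures import ThreadPoolExecutor


async def step(name, delay):
    await asyncio.sleep(delay)  # idle wait; this worker's other steps may run
    return name


async def worker_tasks(worker_name):
    # Inside one worker: asynchronous switching between two steps.
    return await asyncio.gather(
        step(f"{worker_name}:boil", 0.05),
        step(f"{worker_name}:chop", 0.01),
    )


def worker(worker_name):
    # Each worker thread runs its own event loop (an assumption of this sketch).
    results = asyncio.run(worker_tasks(worker_name))
    return threading.current_thread().name, results


# Across workers: two threads are available to run side by side.
with ThreadPoolExecutor(max_workers=2) as pool:
    outcomes = list(pool.map(worker, ["cook-1", "cook-2"]))

for thread_name, results in outcomes:
    print(thread_name, results)
```

Dropping the `asyncio` part and calling a plain blocking function from `pool.map` would give the parallel and synchronous mode instead.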

The Evolving Landscape of Concurrency

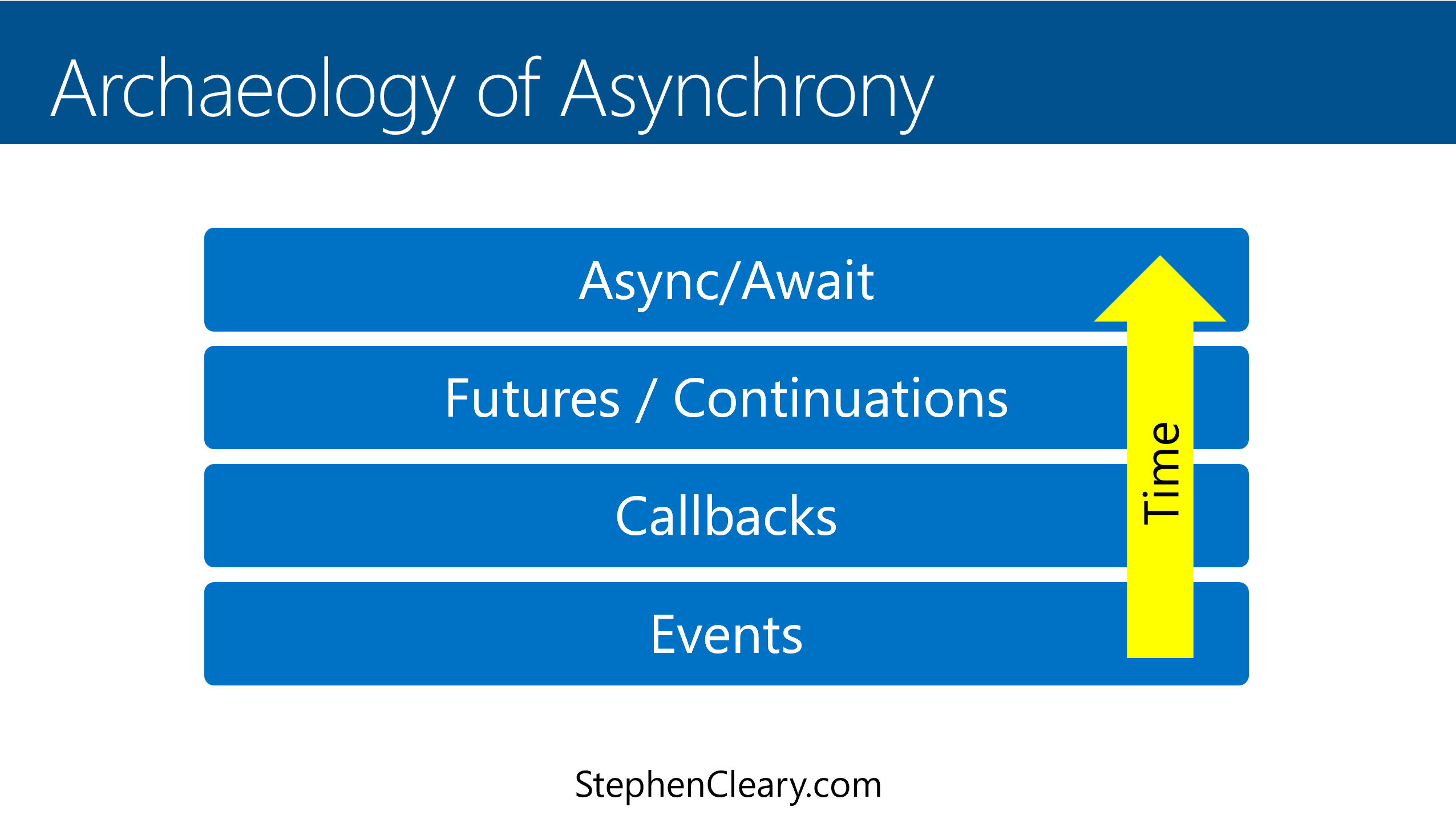

One of the best resources I've found so far is the Concurrency in C# Cookbook by Stephen Cleary. I think C# is uniquely positioned to be a baseline for this topic because it introduced the async/await keywords in a way that has since been adopted by many other languages and significantly shaped our understanding of concurrency. However, other languages are also heavily invested in these patterns and continue to evolve the field. Therefore, I tried to be as language-agnostic as possible in this article.

Most popular languages are adopting the async/await model to handle asynchrony, but there's still room for improvement. Structured concurrency is an exciting concept that was popularized in the Python community, notably through the Trio library, and is now gaining traction with Swift and Java. Could this lead to a programming model as dominant as structured programming? Is there an ongoing evolution toward a unified approach to concurrency? If so, there could also be a convergence in the definitions of concurrency.

My definition of concurrency is aligned with this convergence. It aids in understanding structured concurrency and has proven applicable in the various contexts I've encountered, such as distributed systems. It even aligns with other domains, for example how GitHub Actions uses the term concurrency.

Conclusion

Concurrency involves multiple flavors, including parallel programming, where tasks run simultaneously on multiple CPU cores, and asynchronous programming, where tasks are executed in a time-shared fashion, taking turns to progress during idle periods without waiting for one task to complete before starting another.

Concurrency is about managing multiple tasks at the same time, whether they're running simultaneously or not.
