LangGraph Tutorial: A Comprehensive Guide for Beginners

Rounak Show


Introduction

Building applications with large language models (LLMs) offers exciting opportunities to create sophisticated, interactive systems. But as these applications grow in complexity, especially those involving multiple LLMs working in concert, new challenges arise. How do we manage the flow of information between these agents? How do we ensure they interact seamlessly and maintain a consistent understanding of the task at hand? This is where LangGraph comes in.

Understanding LangGraph

LangGraph, a library in the LangChain ecosystem, provides a framework for building and managing multi-agent LLM applications. By representing workflows as graphs that can contain cycles, LangGraph lets developers orchestrate the interactions of multiple LLM agents and control how information flows between them.

LangChain's chains work well for workflows that run in one direction from input to output, which can be modeled as Directed Acyclic Graphs (DAGs). LangGraph adds the ability to include cycles in these workflows, so a step can be revisited. This lets developers build agents that loop, for example by calling a tool, inspecting the result, and deciding whether to act again based on evolving information.
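To make the difference concrete, here is a dependency-free toy sketch of a cyclic workflow. It is plain Python, not LangGraph's API. A hypothetical draft step runs, a review check either sends the flow back to draft or finishes, and a MAX_ROUNDS guard (also an assumption) ensures the cycle always terminates. A DAG could not express the "go back to draft" step.

```python
# Toy model of a cyclic workflow in plain Python (NOT LangGraph's API).
# The workflow can return to an earlier step based on what it has produced so far.

MAX_ROUNDS = 3  # hypothetical guard so the cycle always terminates


def draft(state: dict) -> dict:
    """Hypothetical 'draft' step: produce a longer answer each round."""
    rounds = state["rounds"] + 1
    return {"rounds": rounds, "text": "word " * (rounds * 2)}


def review(state: dict) -> str:
    """Hypothetical review check: loop back to 'draft' or stop."""
    if len(state["text"].split()) >= 4 or state["rounds"] >= MAX_ROUNDS:
        return "end"
    return "draft"  # returning to an earlier step is the cycle


def run_cycle() -> dict:
    state = {"rounds": 0, "text": ""}
    node = "draft"
    while node != "end":
        state.update(draft(state))  # run the draft step and merge its output
        node = review(state)        # decide: revisit 'draft' or finish
    return state


result = run_cycle()
print(result["rounds"])  # → 2
```

In the first round the draft has only two words, so the review sends it back. The second round produces four words, and the loop ends.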

If you want to build projects with LangChain, I recommend watching this YouTube video: Mastering NL2SQL with LangChain and LangSmith

Key Concepts

Graph Structures

At the heart of LangGraph's design lies a graph-based representation of the application's workflow. This graph comprises two primary elements:

Nodes - The Building Blocks of Work: Each node in a LangGraph represents a distinct unit of work or action within the application. A node is typically a Python function that receives the current state and returns an update to it. Its task could involve a diverse range of operations, such as:

Direct communication with an LLM for text generation, summarization, or other language-based tasks.

Interacting with external tools and APIs to fetch data or perform actions in the real world.

Manipulating data through processes like formatting, filtering, or transformation.

Engaging with users to gather input or display information.

Edges - Guiding the Flow of Information and Control: Edges serve as the connective tissue within a LangGraph, establishing pathways for information flow and dictating the sequence of operations. LangGraph supports multiple edge types:

Simple Edges: These denote a direct and unconditional flow from one node to another. When the first node finishes, the next node runs and reads the shared state, including any updates the first node made, creating a linear progression.

Conditional Edges: Introducing a layer of dynamism, conditional edges enable the workflow to branch based on the outcome of a specific node's operation. For instance, based on a user's response, the graph might decide to either terminate the interaction or proceed to invoke a tool. This decision-making capability is crucial for creating applications that can adapt to different situations. We will see an example of this in the later part of the article.
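The toy runner below shows how simple and conditional edges differ. It is a plain-Python sketch, not LangGraph's implementation. The node names read_reply and call_tool, the END sentinel, and the routing rule are hypothetical, and they mirror the example above: depending on the user's reply, the workflow either ends or invokes a (fake) tool.

```python
from typing import Callable, Dict

END = "__end__"  # toy sentinel meaning "stop here"

State = Dict[str, str]
Node = Callable[[State], State]


def read_reply(state: State) -> State:
    # Hypothetical node: normalise the user's reply.
    return {"reply": state["reply"].strip().lower()}


def call_tool(state: State) -> State:
    # Hypothetical node standing in for a real tool call.
    return {"result": f"searched for: {state['reply']}"}


def route_after_reply(state: State) -> str:
    # Conditional edge: choose the next node from the current state.
    return END if state["reply"] in {"bye", "quit"} else "call_tool"


nodes: Dict[str, Node] = {"read_reply": read_reply, "call_tool": call_tool}
simple_edges = {"call_tool": END}                       # unconditional: always go to END
conditional_edges = {"read_reply": route_after_reply}   # decided at run time


def run(entry: str, state: State) -> State:
    current = entry
    while current != END:
        state = {**state, **nodes[current](state)}  # merge the node's update into the state
        if current in conditional_edges:
            current = conditional_edges[current](state)
        else:
            current = simple_edges[current]
    return state


print(run("read_reply", {"reply": "  LangGraph  "}))
# → {'reply': 'langgraph', 'result': 'searched for: langgraph'}
print(run("read_reply", {"reply": "Bye"}))
# → {'reply': 'bye'}
```

The same starting node leads to different paths because the conditional edge inspects the state after read_reply has run.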

State Management

A crucial aspect of managing multi-agent systems is ensuring that all agents operate with a shared understanding of the current state of the task. LangGraph addresses this through built-in state management. The library keeps a central state object, and whenever a node returns an update, LangGraph merges that update into the state, so the next node always sees the latest values.

This state object acts as a repository for critical information that needs to be accessible across different points in the workflow. This might include:

Conversation History: In chatbot applications, the state could store the ongoing conversation between the user and the bot, allowing for context-aware responses.

Contextual Data: Information pertinent to the current task, like user preferences, past behavior, or relevant external data, can be stored in the state for agents to use in decision-making.

Internal Variables: Agents might use the state to keep track of internal flags, counters, or other variables that guide their behavior and decision-making.
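In practice, each node returns only the keys it wants to change, and those partial updates are merged into the shared state. The sketch below is a plain-Python toy model of that merge, not LangGraph's code. Keys that have a reducer (here a hypothetical append_messages, playing the role that add_messages plays later in this tutorial) are combined with the existing value. All other keys are overwritten. The real add_messages does more, for example converting message formats and replacing messages that share an ID.

```python
from typing import Any, Callable, Dict, List


def append_messages(old: List[str], new: List[str]) -> List[str]:
    # Toy reducer: keep the existing history and add new messages at the end.
    return old + new


# Hypothetical schema: only 'messages' has a reducer; other keys are overwritten.
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {"messages": append_messages}


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            merged[key] = REDUCERS[key](merged[key], value)  # combine via reducer
        else:
            merged[key] = value                               # plain overwrite
    return merged


state = {"messages": ["user: hi"], "turns": 0}
state = apply_update(state, {"messages": ["bot: hello!"], "turns": 1})
print(state)
# → {'messages': ['user: hi', 'bot: hello!'], 'turns': 1}
```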

Getting Started with LangGraph

Installation

To install LangGraph, open your terminal or command prompt and run the following command:

```shell
pip install -U langgraph
```

This command downloads and installs the latest version of LangGraph. The -U (--upgrade) flag upgrades LangGraph if an older version is already installed.
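To confirm the installation, you can ask Python's standard importlib.metadata module which version is installed. The installed_version helper below is a small convenience sketch and is not part of LangGraph. It returns None when the package is missing.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str = "langgraph") -> Optional[str]:
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


print(installed_version())  # prints the installed version string, or None if not installed
```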

Creating a Basic Chatbot in LangGraph

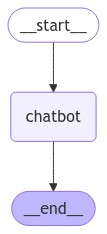

This example is a good starting point for understanding the fundamental concepts of LangGraph.

Import Necessary Libraries: Begin by importing the required classes and modules from LangGraph and other relevant libraries.

```python
from typing import Annotated

from typing_extensions import TypedDict

from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages
```

Define the State Structure: Create a class that defines the structure of the state object, which will hold information that needs to be shared and updated between nodes in the graph.

```python
class State(TypedDict):
    # 'messages' will store the chatbot conversation history.
    # The 'add_messages' function ensures new messages are appended to the list.
    messages: Annotated[list, add_messages]


# Create an instance of the StateGraph, passing in the State class
graph_builder = StateGraph(State)
```

Initialize the LLM: Instantiate your chosen LLM model, providing any necessary API keys or configuration parameters. This LLM will be used to power the chatbot's responses.

```python
# pip install -U langchain_anthropic
from langchain_anthropic import ChatAnthropic

# ChatAnthropic reads your key from the ANTHROPIC_API_KEY environment variable.
llm = ChatAnthropic(model="claude-3-5-sonnet-20240620")
```

Create the Chatbot Node: Define a Python function that encapsulates the logic of the chatbot node. This function takes the current state as input, passes the conversation to the LLM, and returns a state update containing the LLM's reply.

```python
def chatbot(state: State):
    # Use the LLM to generate a response based on the current conversation history.
    response = llm.invoke(state["messages"])
    # Return a partial state update; add_messages appends the new message to the history.
    return {"messages": [response]}


# Add the 'chatbot' node to the graph
graph_builder.add_node("chatbot", chatbot)
```

Define Entry and Finish Points: Specify the starting and ending points of the workflow within the graph.

```python
# For this basic chatbot, the 'chatbot' node is both the entry and finish point
graph_builder.add_edge(START, "chatbot")
graph_builder.add_edge("chatbot", END)
```

Compile the Graph: Create a runnable instance of the graph by compiling it.

```python
graph = graph_builder.compile()
```

Visualize the Graph: A few lines of Python render the graph's nodes and edges as an image, for example in a Jupyter notebook.

```python
from IPython.display import Image, display

try:
    display(Image(graph.get_graph().draw_mermaid_png()))
except Exception:
    # This requires some extra dependencies and is optional
    pass
```

Run the Chatbot: Implement a loop to interact with the user, feeding their input to the graph and displaying the chatbot's response.

```python
while True:
    user_input = input("User: ")
    if user_input.lower() in ["quit", "exit", "q"]:
        print("Goodbye!")
        break
    # Process user input through the LangGraph
    for event in graph.stream({"messages": [("user", user_input)]}):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)
```

This code snippet provides a basic structure for a LangGraph chatbot. You can expand upon this by incorporating more sophisticated state management and different LLM models or by connecting to external tools and APIs. The key is to define clear nodes for different tasks and use edges to establish the desired flow of information and control within the chatbot.
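The loop above iterates over event.values() because, in the way it calls graph.stream(), each event is a dictionary keyed by the name of the node that just ran, and the value is that node's state update. LangGraph calls this the "updates" stream mode. The sketch below imitates that data shape with plain dictionaries so it runs without an API key. FakeMessage and fake_stream are hypothetical stand-ins and are not LangChain or LangGraph classes.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List


@dataclass
class FakeMessage:
    # Hypothetical stand-in for a LangChain message object (only .content is modelled).
    content: str


def fake_stream(user_input: str) -> Iterator[Dict[str, Dict[str, List[FakeMessage]]]]:
    # Imitates the assumed event shape: {node_name: node_update}, one event per step.
    yield {"chatbot": {"messages": [FakeMessage(f"You said: {user_input}")]}}


def print_replies(user_input: str) -> List[str]:
    printed = []
    for event in fake_stream(user_input):
        for value in event.values():  # value is the node's update, e.g. {"messages": [...]}
            reply = value["messages"][-1].content
            print("Assistant:", reply)
            printed.append(reply)
    return printed


print_replies("hello")  # prints: Assistant: You said: hello
```

Because each update holds only the node's new message, value["messages"][-1] is the reply the chatbot node just produced.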

Advanced LangGraph Techniques

Tool Integration

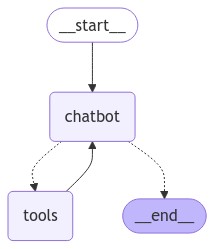

Integrating tools into your LangGraph chatbot can significantly enhance its capabilities. Tools let it fetch information and take actions beyond what the LLM knows on its own, such as searching the web for up-to-date results.

Enhancing our Basic Chatbot with Tool Integration

Let's modify the basic chatbot created in the previous section to include a tool that can search the web for information. We'll use the TavilySearchResults tool from langchain_community.tools.tavily_search. You will need a Tavily API key for this example, set as the TAVILY_API_KEY environment variable.

```python
# pip install -U tavily-python langchain_community
from typing import Annotated

from langchain_anthropic import ChatAnthropic
from langchain_community.tools.tavily_search import TavilySearchResults
from typing_extensions import TypedDict

from langgraph.graph import StateGraph
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition


class State(TypedDict):
    messages: Annotated[list, add_messages]


graph_builder = StateGraph(State)

# TavilySearchResults reads your key from the TAVILY_API_KEY environment variable.
tool = TavilySearchResults(max_results=2)
tools = [tool]

llm = ChatAnthropic(model="claude-3-5-sonnet-20240620")
# Tell the LLM which tools exist and how to call them
llm_with_tools = llm.bind_tools(tools)


def chatbot(state: State):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


graph_builder.add_node("chatbot", chatbot)

# ToolNode runs the tool calls found in the LLM's latest message
tool_node = ToolNode(tools=[tool])
graph_builder.add_node("tools", tool_node)

# tools_condition routes to "tools" if the LLM requested a tool call, otherwise to END
graph_builder.add_conditional_edges(
    "chatbot",
    tools_condition,
)
# Any time a tool is called, we return to the chatbot to decide the next step
graph_builder.add_edge("tools", "chatbot")
graph_builder.set_entry_point("chatbot")
graph = graph_builder.compile()
```

Explanation:

Import the Tool: Import the necessary tool class, in this case TavilySearchResults.

Define and Bind the Tool: Create an instance of the tool and bind it to the LLM using llm.bind_tools(). This informs the LLM about the available tools and how to call them.

Create a ToolNode: Instantiate a ToolNode, passing in the list of available tools.

Add the ToolNode to the Graph: Incorporate the ToolNode into the LangGraph using graph_builder.add_node().

Conditional Routing: Use graph_builder.add_conditional_edges() to set up routing logic based on whether the LLM decides to call a tool. The tools_condition function checks whether the LLM's latest message contains tool calls. If it does, the graph routes to the "tools" node; otherwise, it routes to END.

Loop Back: After executing the tool, use graph_builder.add_edge() to direct the flow back to the chatbot node, allowing the conversation to continue.

Now, when you run the chatbot and ask a question that requires external information, the LLM can choose to invoke the web search tool, retrieve relevant data, and incorporate it into its response.
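Conceptually, the routing step looks at the last message: if it carries tool calls, execution moves to the tools node, which runs each call and appends the results. The toy below models that behavior with plain dictionaries. The message format, fake_search, route, and run_tools are hypothetical simplifications and are not LangChain's message classes or LangGraph's implementation of tools_condition and ToolNode.

```python
from typing import Any, Callable, Dict, List

END = "__end__"  # toy sentinel meaning "finish"

Message = Dict[str, Any]


def fake_search(query: str) -> str:
    # Hypothetical tool standing in for TavilySearchResults.
    return f"top result for {query!r}"


TOOLS: Dict[str, Callable[..., str]] = {"search": fake_search}


def route(messages: List[Message]) -> str:
    # Toy version of the tools_condition idea: tool calls -> "tools", otherwise END.
    return "tools" if messages[-1].get("tool_calls") else END


def run_tools(messages: List[Message]) -> List[Message]:
    # Toy ToolNode: run each requested call and return one tool message per call.
    results = []
    for call in messages[-1]["tool_calls"]:
        output = TOOLS[call["name"]](**call["args"])
        results.append({"role": "tool", "tool_call_id": call["id"], "content": output})
    return results


history: List[Message] = [
    {"role": "user", "content": "What is LangGraph?"},
    {"role": "assistant", "content": "",
     "tool_calls": [{"id": "call_1", "name": "search", "args": {"query": "LangGraph"}}]},
]

if route(history) == "tools":
    history += run_tools(history)  # a real graph would now loop back to the chatbot node

print(history[-1]["content"])  # → top result for 'LangGraph'

final_answer = {"role": "assistant", "content": "LangGraph is a library for LLM workflows."}
print(route(history + [final_answer]))  # → __end__
```

The second call to route shows the exit path: once the LLM answers without requesting a tool, the loop ends.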

Adding Memory to the Chatbot

Memory is crucial for creating chatbots that can engage in meaningful conversations by remembering past interactions.

LangGraph's Checkpointing System

Checkpointer: When you compile your LangGraph, you can provide a checkpointer object. This object is responsible for saving the state of the graph at different points in time.

Thread ID: Each time you invoke your graph, you provide a thread_id. This ID is used by the checkpointer to keep track of different conversation threads.

Automatic Saving and Loading: LangGraph automatically saves the state after each step of the graph's execution for a given thread_id. When you invoke the graph again with the same thread_id, it automatically loads the saved state, enabling the chatbot to continue the conversation where it left off.
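As a mental model, a checkpointer can be pictured as a dictionary from thread_id to the latest saved state. This is not how MemorySaver is implemented. The hypothetical ToyCheckpointer and chat_turn below show why reusing a thread_id continues a conversation while a new thread_id starts fresh. The bot's "reply" is a placeholder that replaces a real LLM call.

```python
from typing import Dict, List


class ToyCheckpointer:
    """Hypothetical in-memory store: thread_id -> saved message history."""

    def __init__(self) -> None:
        self._saved: Dict[str, List[str]] = {}

    def load(self, thread_id: str) -> List[str]:
        # Return a copy so callers cannot mutate the saved checkpoint by accident.
        return list(self._saved.get(thread_id, []))

    def save(self, thread_id: str, messages: List[str]) -> None:
        self._saved[thread_id] = list(messages)


def chat_turn(store: ToyCheckpointer, thread_id: str, user_text: str) -> List[str]:
    messages = store.load(thread_id)  # resume where this thread left off
    messages.append(f"user: {user_text}")
    messages.append(f"bot: I have seen {len(messages)} message(s) so far")  # placeholder reply
    store.save(thread_id, messages)   # checkpoint after the step
    return messages


store = ToyCheckpointer()
chat_turn(store, "1", "Hi, I'm Ana")
print(chat_turn(store, "1", "What's my name?")[-1])  # → bot: I have seen 3 message(s) so far
print(chat_turn(store, "2", "What's my name?")[-1])  # → bot: I have seen 1 message(s) so far
```

Thread "1" sees its earlier messages, while thread "2" starts with an empty history.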

Implementing Memory with Checkpointing

Building upon the previous code, here's how to add memory using LangGraph's checkpointing:

```python
# ... (Previous code to define State, graph_builder, nodes, and edges)

from langgraph.checkpoint.memory import MemorySaver

# Create a MemorySaver object to act as the checkpointer
memory = MemorySaver()

# Compile the graph, passing in the 'memory' object as the checkpointer
graph = graph_builder.compile(checkpointer=memory)

# ... (Rest of the code to run the chatbot)
```

Explanation:

Import MemorySaver: Import the MemorySaver class from langgraph.checkpoint.memory.

Create a MemorySaver Object: Instantiate a MemorySaver object, which will handle saving and loading the graph's state. Because it keeps checkpoints in memory, saved conversations are lost when the program exits.

Pass to compile(): When compiling the graph, pass the memory object as the checkpointer argument.

When you run the chatbot, first choose a thread_id to serve as the key for this conversation:

```python
config = {"configurable": {"thread_id": "1"}}
```

Each thread_id has its own stored conversation history.

Now begin the conversation:

```python
while True:
    user_input = input("User: ")
    if user_input.lower() in ["quit", "exit", "q"]:
        print("Goodbye!")
        break
    # Process user input through the LangGraph
    for event in graph.stream({"messages": [("user", user_input)]}, config):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)
```

Note: The config was provided as the second positional argument when calling our graph.

Human in the Loop

Human-in-the-loop workflows are essential for situations where you want to incorporate human oversight, verification, or decision-making within your AI application.

Implementing Human-in-the-Loop with Interrupts

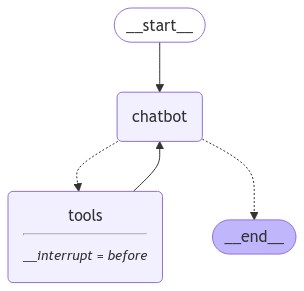

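The code below implements human-in-the-loop using LangGraph's interrupt_before parameter. interrupt_after works the same way but pauses after a node runs. Interrupts rely on the checkpointer, which saves the paused state so the graph can resume later.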

```python
from typing import Annotated

from langchain_anthropic import ChatAnthropic
from langchain_community.tools.tavily_search import TavilySearchResults
from typing_extensions import TypedDict

from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph
from langgraph.graph.message import add_messages
from langgraph.prebuilt import ToolNode, tools_condition


class State(TypedDict):
    messages: Annotated[list, add_messages]


graph_builder = StateGraph(State)

tool = TavilySearchResults(max_results=2)
tools = [tool]
llm = ChatAnthropic(model="claude-3-5-sonnet-20240620")
llm_with_tools = llm.bind_tools(tools)


def chatbot(state: State):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}


graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=[tool])
graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges(
    "chatbot",
    tools_condition,
)
graph_builder.add_edge("tools", "chatbot")
graph_builder.set_entry_point("chatbot")

# Interrupts need a checkpointer to save the paused state
memory = MemorySaver()
graph = graph_builder.compile(
    checkpointer=memory,
    # This is new!
    interrupt_before=["tools"],
    # Note: can also interrupt __after__ actions, if desired.
    # interrupt_after=["tools"]
)
```

Explanation:

In this example, the graph pauses just before executing the tools node, which runs any tools the LLM requested during its turn. Pausing at this point gives a human the chance to:

Approve the Tool Call: The human can review the tool call the LLM wants to make and its input. If they deem it appropriate, they can simply allow the graph to continue, and the tool will be executed.

Modify the Tool Call: If the human sees a need to adjust the LLM's tool call (e.g., refine the search query), they can modify the state of the graph and then resume execution.

Bypass the Tool Call: The human might decide the tool isn't necessary. Perhaps they have the answer the LLM was trying to look up. In this case, they can update the graph state with the appropriate information, and the LLM will receive it as if the tool had returned that information.
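The toy runner below models these three options in plain Python. It is not LangGraph code. The pause is represented by returning early with a "next" marker, and the names run_until_interrupt and resume, along with the dictionary-shaped tool call, are hypothetical. In LangGraph itself, you would inspect the paused graph with graph.get_state(config), optionally edit it with graph.update_state(config, ...), and resume by calling graph.stream(None, config) with the same thread_id.

```python
from typing import Any, Dict, Optional

State = Dict[str, Any]


def chatbot(state: State) -> State:
    # Hypothetical LLM turn: request a search tool call.
    return {"tool_call": {"name": "search", "query": state["question"]}}


def tools(state: State) -> State:
    # Hypothetical tool execution.
    return {"tool_result": f"results for {state['tool_call']['query']}"}


def run_until_interrupt(state: State) -> State:
    # Run the chatbot, then pause *before* the tools node (like interrupt_before=["tools"]).
    return {**state, **chatbot(state), "next": "tools"}


def resume(state: State, edit: Optional[State] = None,
           bypass_result: Optional[str] = None) -> State:
    state = {**state, **(edit or {})}              # "modify": human edits the pending call
    if bypass_result is not None:                   # "bypass": human supplies the answer
        return {**state, "tool_result": bypass_result, "next": None}
    # "approve": run the tool as planned (a real graph would then return to the chatbot)
    return {**state, **tools(state), "next": None}


paused = run_until_interrupt({"question": "LangGraph"})
print(paused["next"])  # → tools

approved = resume(paused)
modified = resume(paused, edit={"tool_call": {"name": "search", "query": "LangGraph interrupts"}})
bypassed = resume(paused, bypass_result="LangGraph is a library for stateful LLM graphs.")
print(approved["tool_result"])  # → results for LangGraph
print(modified["tool_result"])  # → results for LangGraph interrupts
print(bypassed["tool_result"])  # → LangGraph is a library for stateful LLM graphs.
```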

Resources: For a more detailed walkthrough of human-in-the-loop, see this notebook: https://github.com/langchain-ai/langgraph/blob/main/docs/docs/how-tos/human_in_the_loop/review-tool-calls.ipynb

Real-World Uses for LangGraph

LangGraph lets you build AI systems that are more complex and interactive than simple question-and-answer bots by managing state, coordinating multiple agents, and allowing for human feedback. Here are some of the ways LangGraph could be used:

Smarter Customer Service: Imagine a chatbot for online shopping that can remember your past orders and preferences. It could answer questions about products, track your shipments, and even connect you with a human representative if needed.

AI Research Assistant: Need help with a research project? A LangGraph-powered assistant could search through tons of academic papers and articles, summarize the key findings, and even help you organize your notes.

Personalized Learning: LangGraph could power the next generation of educational platforms. Imagine a system that adapts to your learning style, identifies areas where you need extra help, and recommends personalized resources.

Streamlined Business: Many business processes involve multiple steps and people. LangGraph could automate parts of these workflows, like routing documents for approval, analyzing data, or managing projects.

These examples highlight how LangGraph helps bridge the gap between AI capabilities and the complexities of real-world situations.

Conclusion

This concludes our LangGraph tutorial! As you've learned, LangGraph enables the creation of AI applications that go beyond simple input-output loops by offering a framework for building stateful, agent-driven systems. You've gained hands-on experience defining graphs, managing state, and incorporating tools.

If you found this guide helpful and want to learn more, don’t forget to follow us.

If you want to trace the LLM calls in your LangChain project, check out this blog: Guide to LangSmith

At FutureSmart AI, we specialize in helping companies build cutting-edge AI solutions similar to the ones discussed in this blog. To explore how we can assist your business, feel free to reach out to us at contact@futuresmart.ai.

For real-world examples of our work, take a look at our case studies, where we showcase the practical value of our expertise.
