☑️Day 30: Diving into Kubernetes (K8s)🚀

Kedar Pattanshetti

🔹Table of Contents:

Introduction to Kubernetes

Key Differences: Docker vs Kubernetes

Kubernetes Architecture Overview

Kubernetes Pods vs Docker Containers

Main Kubernetes Features

Real-Life Scenario Example

Key Takeaway

✅Introduction

Kubernetes (K8s) is an open-source container orchestration system that automates the deployment, scaling, and management of containerized applications across multiple hosts. Docker Engine on its own manages containers on a single host; Kubernetes builds on container runtimes to add multi-host scheduling, auto-scaling, and self-healing.

✅Key Differences: Docker vs Kubernetes

Docker (Without Orchestration):

Single Host: Docker manages containers on a single host, making it challenging to scale beyond one machine.

No Auto-Scaling: Docker does not have built-in mechanisms to automatically scale containers based on traffic or resource demands.

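Limited Auto-Healing: A Docker restart policy can restart a crashed container on the same host, but if the host itself fails, nothing reschedules the container onto another machine.

Networking & Firewall Limitations: Docker's default networking is scoped to a single host; connecting containers across hosts and controlling traffic between them takes extra tooling.

No API Gateway: Docker on its own provides no ingress routing or API gateway layer for securing and directing external traffic.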

Kubernetes:

Multi-Host Scaling: Easily manages containers across multiple hosts in a cluster.

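Auto-Scaling: Automatically adjusts the number of pods (the units that wrap one or more containers) based on demand, once an autoscaler such as the Horizontal Pod Autoscaler is configured.

Auto-Healing: Automatically restarts failed containers and replaces pods lost to node failures to maintain high availability.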

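Advanced Networking: Provides a cluster-wide pod network, Services for load balancing, Ingress for routing external traffic (API gateways can be layered on top), and network policies to restrict communication.

Control Plane and Worker Nodes: Decouples management components from the nodes that run workloads (older material calls this a master-slave architecture), making it well suited to enterprise-level container orchestration.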

✅Kubernetes Architecture Overview

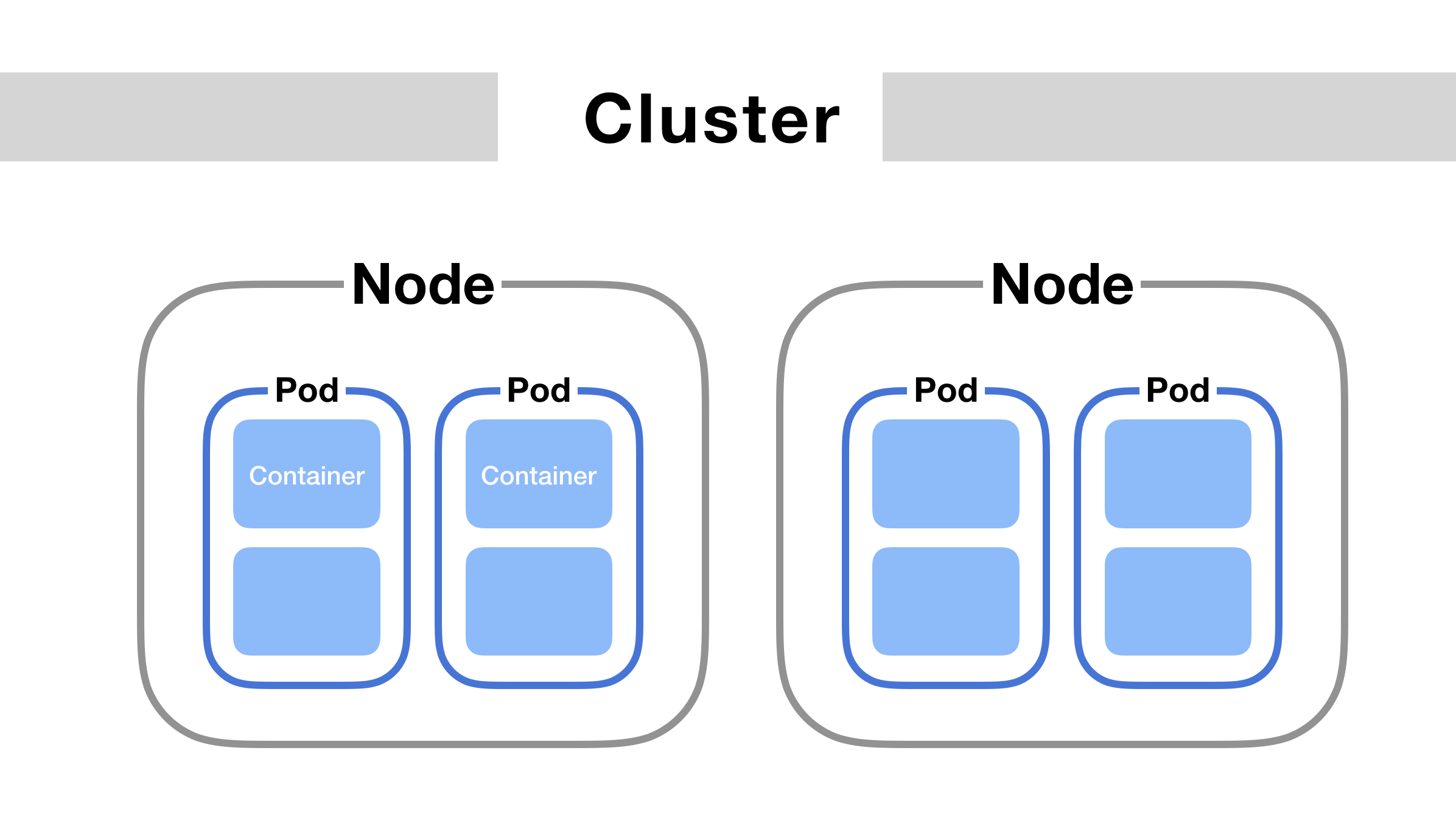

Kubernetes separates a cluster into a control plane and worker nodes (older material calls this a Master-Slave Architecture):

1. Control Plane (Master Components)

The control plane manages the overall cluster. It makes global decisions (e.g., scheduling) and manages the desired state of the cluster.

API Server: The entry point to the Kubernetes control plane. It exposes the Kubernetes API, which is used to communicate with the cluster.

etcd: A distributed key-value store that stores the entire state of the Kubernetes cluster, including configurations, secrets, and state information.

Scheduler: Responsible for scheduling pods on worker nodes based on available resources and constraints.

Controller Manager: Runs the controllers that continuously compare the cluster's actual state with the desired state and act to close the gap, for example keeping the requested number of replicas running and reacting to node failures.

Cloud Controller Manager (CCM): Manages interactions with the cloud infrastructure (if used), such as load balancing and storage.
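The "desired state" idea behind the control plane is easier to see in code. Below is a minimal sketch of a reconcile loop in plain Python. It is a toy model, not the Kubernetes API: `ToyCluster`, `reconcile`, and the `web-N` pod names are all made up for illustration.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """In-memory stand-in for cluster state (hypothetical, not a real API)."""
    desired_replicas: int
    running: set = field(default_factory=set)
    _ids: count = field(default_factory=lambda: count(1))

    def start_pod(self) -> str:
        # Give every new pod a fresh, never-reused name.
        name = f"web-{next(self._ids)}"
        self.running.add(name)
        return name

    def stop_pod(self) -> str:
        # Remove one running pod (the last one in sorted order).
        name = sorted(self.running)[-1]
        self.running.remove(name)
        return name


def reconcile(cluster: ToyCluster) -> list:
    """Compare desired and observed state, then act to close the gap."""
    actions = []
    while len(cluster.running) < cluster.desired_replicas:
        actions.append(("start", cluster.start_pod()))
    while len(cluster.running) > cluster.desired_replicas:
        actions.append(("stop", cluster.stop_pod()))
    return actions


cluster = ToyCluster(desired_replicas=3)
print(reconcile(cluster))          # starts web-1, web-2, web-3
cluster.running.discard("web-2")   # simulate a crashed pod
print(reconcile(cluster))          # [('start', 'web-4')]
```

Real controllers watch the API Server for changes instead of being called by hand, but the compare-and-correct pattern is the same one that gives Kubernetes its auto-healing behaviour.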

2. Data Plane (Node Components/Worker Components)

The data plane is where the actual containers (applications) are running.

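Kubelet: The primary node agent. It receives pod specifications from the control plane and ensures the containers described in each pod assigned to its node are running and healthy.

Kube Proxy: Maintains network rules on each node so that traffic sent to a Service is forwarded to one of the pods backing it, whether that pod runs on the same node or a different one.

Container Runtime: The underlying engine that runs the containers (e.g., containerd or CRI-O). Since Kubernetes 1.24, Docker Engine is no longer supported directly and needs an adapter such as cri-dockerd.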

✅Kubernetes Pods vs Docker Containers

Docker Container: A single isolated instance of an application, packaged with its dependencies and run by the Docker Engine.

Kubernetes Pod: A pod is the smallest deployable unit in Kubernetes. A pod can have one or more containers running together, sharing resources like storage and network. Pods provide an extra layer of abstraction for better scaling and management.
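To make the "one or more containers sharing storage" point concrete, here is a small sketch that builds a two-container Pod manifest as a Python dictionary and prints it as JSON (Kubernetes accepts manifests in JSON as well as YAML). The Pod name, container names, images, and paths are illustrative assumptions, and `shared_volumes` is a helper written just for this example.

```python
import json

# Hypothetical Pod: an app container plus a log-reading sidecar.
# Both mount the same emptyDir volume, so they see the same files.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-logger", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-tailer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}


def shared_volumes(manifest: dict) -> list:
    """Return the names of volumes mounted by more than one container."""
    mounts = {}
    for container in manifest["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            mounts.setdefault(mount["name"], []).append(container["name"])
    return sorted(name for name, users in mounts.items() if len(users) > 1)


print(shared_volumes(pod_manifest))      # ['logs']
print(json.dumps(pod_manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`. The two containers would also share one network namespace, so they could reach each other over `localhost`.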

✅Main Kubernetes Features

Auto-Scaling: With a Horizontal Pod Autoscaler configured, K8s scales your application's pods up or down based on metrics such as CPU or memory usage.

Auto-Healing: Automatically restarts containers or pods if they crash or encounter issues.

Load Balancing: Balances network traffic across multiple pods to ensure reliability and performance.

Service Discovery: Kubernetes automatically assigns IPs and DNS names to services so that they can easily communicate with each other.
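As a rough illustration of the auto-scaling feature, the Horizontal Pod Autoscaler documentation describes the core calculation as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that formula in Python. The function name, the min/max bounds, and the simplified tolerance check are assumptions made for this example; the real autoscaler also applies stabilization windows and other safeguards that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Toy version of the HPA replica calculation (illustrative only)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Never go below the minimum or above the maximum replica count.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, 90, 60))   # 6: CPU at 90% against a 60% target
print(desired_replicas(4, 30, 60))   # 2: load halved, so scale down
print(desired_replicas(4, 62, 60))   # 4: within tolerance, no change
```

In a real cluster you would not write this yourself. You would declare the target metric and the replica bounds in a HorizontalPodAutoscaler object and let the control plane do the arithmetic.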

✅Real-Life Scenario Example

You’re running an e-commerce application with highly variable traffic. Docker lets you run the containers, but scaling them across multiple machines by hand and making sure they stay running is difficult.

With Kubernetes:

You deploy your application as pods, which can be scaled up automatically when traffic increases.

If a pod crashes, K8s auto-heals it by restarting its containers or creating a replacement pod.

The Kubernetes Scheduler places each pod on a node with enough free resources, so your application is spread across multiple nodes rather than piled onto one.
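The scheduling step in this scenario can be sketched as a two-phase "filter, then score" decision. The Python below is a deliberately simplified model: the `Node` class, the node names, the resource numbers, and the "most free CPU wins" scoring rule are all assumptions for illustration. The real scheduler weighs many more factors.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free_m: int    # free CPU in millicores
    mem_free_mi: int   # free memory in MiB


def schedule(pod_cpu_m: int, pod_mem_mi: int, nodes: list) -> Optional[str]:
    """Pick a node for one pod, or return None if no node fits."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.cpu_free_m >= pod_cpu_m and n.mem_free_mi >= pod_mem_mi
    ]
    if not feasible:
        return None
    # Score: prefer the node with the most free CPU (first one wins ties).
    best = max(feasible, key=lambda n: n.cpu_free_m)
    # Bind: reserve the pod's resources on the chosen node.
    best.cpu_free_m -= pod_cpu_m
    best.mem_free_mi -= pod_mem_mi
    return best.name


nodes = [
    Node("node-a", cpu_free_m=1000, mem_free_mi=2048),
    Node("node-b", cpu_free_m=1000, mem_free_mi=2048),
    Node("node-c", cpu_free_m=300, mem_free_mi=4096),
]

# Try to place five pods that each request 500m CPU and 256Mi memory.
placements = [schedule(500, 256, nodes) for _ in range(5)]
print(placements)  # ['node-a', 'node-b', 'node-a', 'node-b', None]
```

Here node-c is filtered out because it never has enough CPU, and the fifth pod gets no node at all. In Kubernetes such a pod would stay in the Pending state until resources free up or a new node joins the cluster.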

✅Key Takeaway

Kubernetes is a powerful, scalable container orchestration system that addresses many of Docker’s limitations. With features like auto-scaling, auto-healing, load balancing, and more, Kubernetes has become the standard for running containerized applications at scale in production environments.

🚀Thanks for joining me on Day 30! Let’s keep learning and growing together!

Happy Learning! 😊

#90DaysOfDevOps

#DevOps #Kubernetes #K8s #AutoScaling #AutoHealing #EnterpriseLevelSupport #Master-Slave #LoadBalancing #Scaling #CloudComputing #TechLearning #ShubhamLonde #Day30
