sarvadnya.tech

Sarvadnya Jawle

Creating a portfolio isn’t just about showcasing your projects—it's about demonstrating your expertise in the tools and technologies that power modern web applications. As a DevOps engineer, I wanted to go beyond a simple HTML page and build something that truly reflects my technical skill set. This is how I transformed my personal portfolio into a fully automated, scalable, and production-grade application using AWS, Kubernetes, Terraform, Jenkins, Docker, and cert-manager for TLS certificates.

In this article, I’ll walk you through the journey of building and deploying my portfolio, sarvadnya.tech, and the technical decisions that made it possible.

Project Overview

The core of my portfolio is built with HTML, SCSS, and JavaScript, focusing on clean, responsive design and fast load times. However, the technology stack doesn’t end there. My goal was to demonstrate not just frontend design but also my proficiency in DevOps, cloud computing, and continuous delivery practices.

Key technologies used include:

AWS: Hosting the infrastructure using Elastic Kubernetes Service (EKS).

Terraform: Provisioning AWS resources in a modular, repeatable way.

Jenkins: Automating deployments and ensuring CI/CD best practices.

Docker: Containerizing the application for consistency across environments.

Kubernetes: Orchestrating containers and managing deployment, scaling, and availability.

cert-manager: Automating the issuance and renewal of TLS certificates to secure the site with HTTPS.

Prometheus & Grafana: Monitoring system performance and visualizing key metrics.

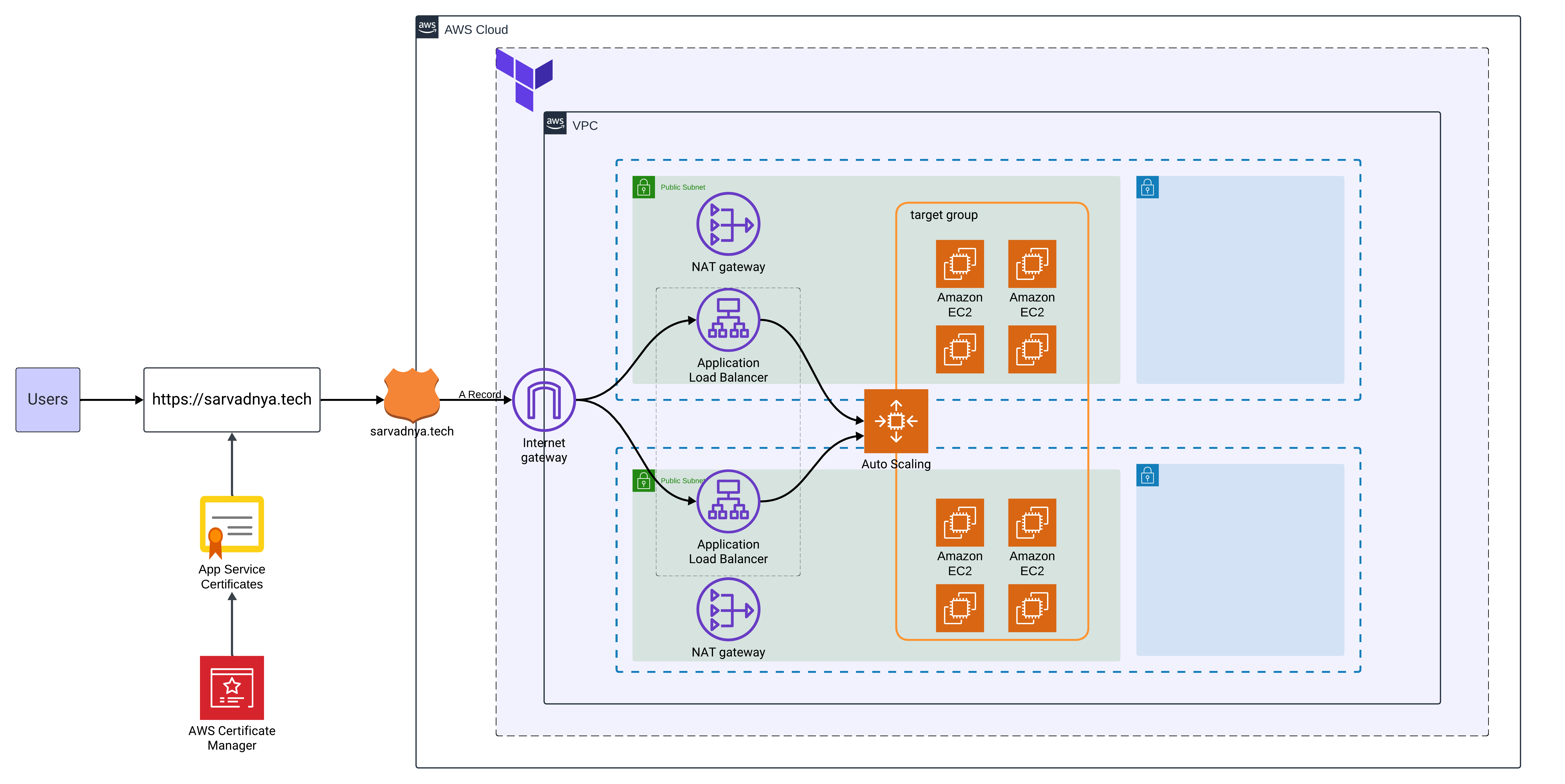

Infrastructure Setup

The Power of Terraform

Terraform is the backbone of this project’s infrastructure. All AWS resources—such as EC2 instances, VPCs, RDS, S3, and Load Balancers—are provisioned using Terraform, making the infrastructure declarative, repeatable, and version-controlled.

AWS Resources Managed by Terraform:

VPC (Virtual Private Cloud): Configures isolated networking, including public and private subnets, routing tables, and gateways.

Auto Scaling Group (ASG): Automatically scales EC2 instances based on traffic demands, ensuring high availability.

Security Groups: Implements fine-grained access controls, allowing specific traffic to pass while securing the application.

Route 53: Manages domain name routing to ensure that sarvadnya.tech resolves to the correct application endpoint.

S3 and ACM: Manages static asset hosting and SSL certificates, providing secure HTTPS communication.

Using Terraform’s modular design, I organized my code into various modules: VPC, EKS, Load Balancer, and more. This modularity allowed for reusability and easy maintenance.

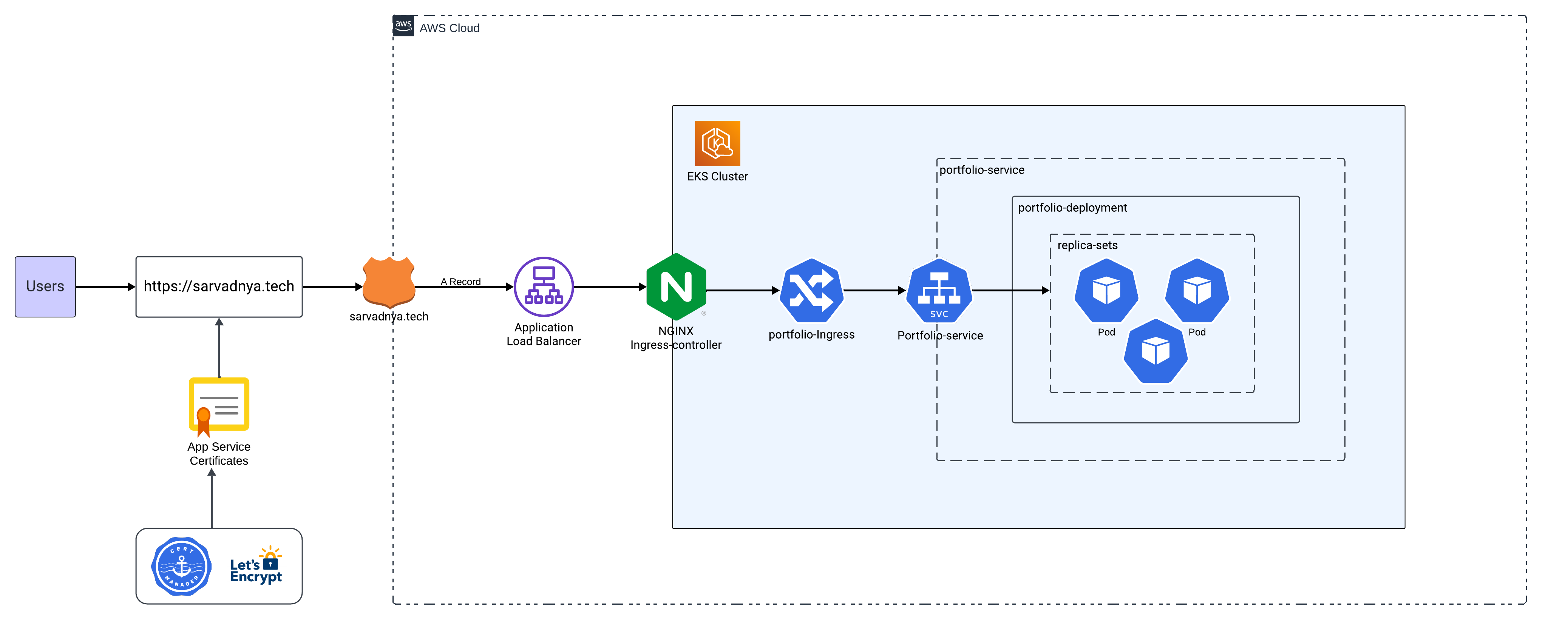

Kubernetes Ecosystem for Deployment

Once the infrastructure was set up, I deployed my portfolio on an AWS EKS cluster. The application is containerized using Docker and orchestrated through Kubernetes, which provides replicated, self-healing pods and rolling updates out of the box, while node capacity scales through the Auto Scaling Group.

I wrote Kubernetes manifest files for deploying, scaling, and exposing the application, including:

Deployment: Manages the application’s pods through a ReplicaSet, setting the replica count and handling rolling updates.

```yaml
# To apply every manifest in a directory, including subdirectories:
#   kubectl apply -f absolute/path/for/manifests -R
apiVersion: apps/v1
kind: Deployment
metadata:
  name: portfolio-deployment
  labels:
    app: portfolio
spec:
  replicas: 2
  selector:
    matchLabels:
      app: portfolio
  template:
    metadata:
      labels:
        app: portfolio
    spec:
      containers:
        - name: sarva-portfolio
          image: sarvadnya/portfolio:v1.0.5
          ports:
            - containerPort: 80
```

Service: Exposes the application within the cluster and routes traffic from the ingress.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: portfolio-service
spec:
  type: ClusterIP
  selector:
    app: portfolio
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

Ingress: Handles external access to the application, integrating with cert-manager so that all traffic is encrypted with TLS.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: portfolio-ingress
  annotations:
    cert-manager.io/cluster-issuer: portfolio-cert-issuer
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - sarvadnya.tech
      secretName: portfolio-tls-cert
  rules:
    - host: sarvadnya.tech
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: portfolio-service
                port:
                  number: 80
```

ClusterIssuer: A cluster-wide issuer that sends certificate requests to a Let’s Encrypt ACME server, either staging or production.

Certificate: Once Let’s Encrypt issues the certificate, cert-manager stores it in the Secret named in secretName.

```yaml
# clusterissuer-lets-encrypt-staging.yaml
# Note: the server URL below is Let's Encrypt's production endpoint.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: portfolio-cert-issuer
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: sarvadnyajawle@gmail.com
    privateKeySecretRef:
      name: portfolio-private-key  # Secret that stores the ACME account private key
    solvers:
      - http01:
          ingress:
            ingressClassName: nginx
---
# The cert-manager.io/cluster-issuer annotation on the Ingress also makes
# cert-manager create a Certificate for portfolio-tls-cert, so this explicit
# resource may be redundant.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: portfolio-tls-certificate
  namespace: default
spec:
  secretName: portfolio-tls-cert  # The certificate will be stored in this secret
  dnsNames:
    - sarvadnya.tech  # Domain name to secure
  issuerRef:
    name: portfolio-cert-issuer  # Reference to the ClusterIssuer created above
    kind: ClusterIssuer
```
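The ClusterIssuer file is named for staging, but its server URL is Let’s Encrypt’s production endpoint. While testing, a separate staging issuer keeps failed attempts away from production rate limits; its certificates are not trusted by browsers. A minimal sketch, where portfolio-cert-issuer-staging and portfolio-staging-private-key are hypothetical names, not part of the original setup:

```yaml
# Hypothetical staging issuer; the names here are illustrative assumptions.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: portfolio-cert-issuer-staging
spec:
  acme:
    # Let's Encrypt staging endpoint: relaxed rate limits, untrusted certificates
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    email: sarvadnyajawle@gmail.com
    privateKeySecretRef:
      name: portfolio-staging-private-key  # separate ACME account key for staging
    solvers:
      - http01:
          ingress:
            ingressClassName: nginx
```

Pointing the Ingress annotation cert-manager.io/cluster-issuer at this issuer while testing, then back at portfolio-cert-issuer once issuance works, is one way to iterate safely.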

Deployment on EKS: Security and Scalability

Security was a key focus for this project. Using cert-manager in conjunction with Let’s Encrypt, I automated the generation and renewal of TLS certificates, and the NGINX Ingress Controller uses them to terminate TLS so the site is served over HTTPS.

Key security configurations:

ClusterIssuer: Configured cert-manager to issue certificates from Let's Encrypt.

Ingress TLS: Configured Kubernetes Ingress to manage HTTPS traffic, ensuring encrypted connections.
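On the scalability side, the Deployment manifest shown earlier sets no CPU or memory requests or limits and relies on Kubernetes’ default rolling-update settings. A minimal sketch of making both explicit for the same container; the resource figures and surge settings are assumptions to tune against real usage, not measured values:

```yaml
# Sketch: portfolio-deployment with an explicit rollout strategy and resources.
# All numeric values below are illustrative assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: portfolio-deployment
  labels:
    app: portfolio
spec:
  replicas: 2
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0  # keep the full replica count available during a rollout
      maxSurge: 1        # create at most one extra pod at a time
  selector:
    matchLabels:
      app: portfolio
  template:
    metadata:
      labels:
        app: portfolio
    spec:
      containers:
        - name: sarva-portfolio
          image: sarvadnya/portfolio:v1.0.5
          ports:
            - containerPort: 80
          resources:
            requests:        # what the scheduler reserves for each pod
              cpu: 50m
              memory: 64Mi
            limits:          # ceiling enforced at runtime
              cpu: 200m
              memory: 128Mi
```

Requests also matter for the HPA plan described later in the post, because CPU and memory utilization targets are measured relative to them.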

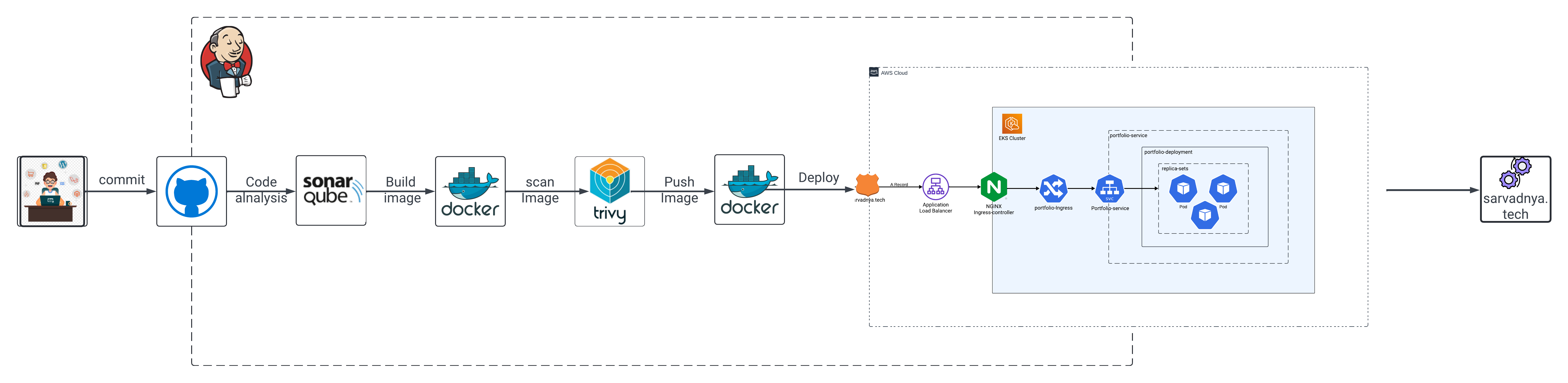

CI/CD Pipeline

A crucial part of this project’s success was its Jenkins-based CI/CD pipeline. Jenkins automated the entire deployment process, from code commits to infrastructure provisioning and container deployment.

Here’s a breakdown of how the pipeline is structured:

Code Checkout: Jenkins pulls the latest code from my GitHub repository.

Trivy Scans: Scans both the repository filesystem and the built Docker image for known vulnerabilities.

SonarQube Analysis: Enforces coding standards and prevents deployments if code quality gates are not met.

Docker Build & Push: Builds the Docker image for my portfolio and pushes it to a private Docker registry.

Kubernetes Deployment: Deploys the Docker container on the EKS cluster using the manifest files.

Monitoring Setup: Prometheus and Grafana are integrated to monitor performance and resource utilization.

This CI/CD pipeline ensures a smooth, automated deployment process, allowing me to focus on improving the application rather than manually managing the infrastructure.

Project Timeline

Here’s a timeline of how the project evolved:

September 10, 2024: Initial setup of Terraform modules and AWS provisioning.

September 20, 2024: EKS cluster setup and Kubernetes manifest files created.

September 24, 2024: CI/CD pipeline integration with Jenkins and Docker.

September 28, 2024: TLS certificates automated with cert-manager, and application deployed live on sarvadnya.tech.

October 1, 2024: Monitoring and logging integrated with Prometheus and Grafana.

Challenges and Solutions

One of the early challenges I faced was managing AWS billing issues, which temporarily halted progress. To overcome this, I optimized resource usage by switching to on-demand EC2 instances and setting up autoscaling to ensure we only used the resources we needed at any given time.

Another challenge was managing certificate renewals for HTTPS. By automating this with cert-manager and Let's Encrypt, I eliminated the need for manual certificate management and removed the risk of an outage caused by a forgotten renewal.

Optimizations and Future Plans

While the project is already production-ready, there are several optimizations and future improvements I’m planning to implement:

GitOps with Argo CD: This will allow me to manage Kubernetes deployments directly from Git, ensuring continuous delivery and deployment based on Git commits.
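For the Argo CD plan, a minimal Application could look like the following. The repository URL and path are hypothetical placeholders, and the manifest assumes Argo CD is installed in the argocd namespace:

```yaml
# Hypothetical Argo CD Application; repoURL and path are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: portfolio
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example/portfolio-manifests.git  # placeholder
    targetRevision: main
    path: k8s  # directory holding the Deployment, Service, and Ingress manifests
  destination:
    server: https://kubernetes.default.svc  # the cluster Argo CD runs in
    namespace: default
  syncPolicy:
    automated:
      prune: true     # delete resources that were removed from Git
      selfHeal: true  # revert manual changes made directly in the cluster
```

With automated sync, a merged commit to the manifests directory becomes a deployment, so Argo CD could take over the Kubernetes Deployment stage of the Jenkins pipeline.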

Horizontal Pod Autoscaling (HPA): Implement HPA to dynamically scale the number of pods based on real-time CPU and memory usage, ensuring that the application can handle traffic spikes efficiently.
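A minimal HorizontalPodAutoscaler for the existing portfolio-deployment, using the autoscaling/v2 API. It assumes metrics-server is installed and that the container declares CPU and memory requests, since utilization targets are computed against requests; the replica bounds and thresholds are assumptions:

```yaml
# Sketch: scale portfolio-deployment between 2 and 5 replicas (illustrative bounds).
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: portfolio-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: portfolio-deployment
  minReplicas: 2  # matches the current replica count
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70  # percent of the CPU request
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80  # percent of the memory request
```

When several metrics are listed, the HPA computes a desired replica count for each and uses the largest. Once the HPA owns scaling, dropping the fixed replicas field from the Deployment manifest keeps a later kubectl apply from resetting the count.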

Service Mesh: Integrate a service mesh like Istio to manage microservices communication, security, and observability across the cluster.
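If Istio is adopted, a small first step could be enabling automatic sidecar injection for the namespace and then requiring mutual TLS between workloads. A sketch, assuming Istio is already installed and the application stays in the default namespace:

```yaml
# Label the namespace so Istio injects its sidecar proxy into new pods.
apiVersion: v1
kind: Namespace
metadata:
  name: default
  labels:
    istio-injection: enabled
---
# Require mutual TLS for all workloads in this namespace.
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: default
spec:
  mtls:
    mode: STRICT
```

Existing pods only receive the sidecar after a restart (for example, kubectl rollout restart deployment/portfolio-deployment). STRICT mode also rejects plaintext traffic, so the NGINX ingress controller would need to join the mesh, or the mode would stay PERMISSIVE, before enforcing it.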

Gateway API: For more advanced traffic routing and load balancing, I plan to switch to the Gateway API, which provides better control and flexibility than traditional ingress controllers.
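A sketch of the current Ingress routing expressed with Gateway API resources. The gatewayClassName depends on which controller is installed, so nginx is an assumption here, as is the portfolio-gateway name; the TLS Secret is the one cert-manager already maintains:

```yaml
# Hypothetical Gateway terminating HTTPS for sarvadnya.tech.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: portfolio-gateway
  namespace: default
spec:
  gatewayClassName: nginx  # assumption: depends on the installed controller
  listeners:
    - name: https
      protocol: HTTPS
      port: 443
      hostname: sarvadnya.tech
      tls:
        mode: Terminate
        certificateRefs:
          - kind: Secret
            name: portfolio-tls-cert  # existing cert-manager Secret
---
# Route requests for sarvadnya.tech to the existing ClusterIP Service.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: portfolio-route
  namespace: default
spec:
  parentRefs:
    - name: portfolio-gateway
  hostnames:
    - sarvadnya.tech
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: portfolio-service
          port: 80
```

Splitting the listener (Gateway) from the routing rules (HTTPRoute) is the main design difference from Ingress: platform settings and application routes can be owned and changed separately.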

Final Thoughts

This portfolio project isn’t just a website—it's a demonstration of my DevOps expertise. From infrastructure provisioning with Terraform to container orchestration with Kubernetes, and CI/CD pipelines with Jenkins, this project reflects production-grade readiness and scalability.

By integrating security (cert-manager, TLS), monitoring (Prometheus, Grafana), and automation (Jenkins, Terraform), this project serves as a best-practice guide for deploying modern cloud applications.

I look forward to continuing to optimize and scale this project, making it even more robust and future-proof.

Written by

Sarvadnya Jawle

I am a DevOps enthusiast. I work with Docker, Kubernetes, and open source, and I talk about cloud-native tools.