Learning RAG: A Practical Guide for Developers Starting Out

Chai-dev682

Introduction

I started working with LLMs two years ago and have learned a lot along the way. I have also been working with and mentoring other enthusiasts in this field, and one of the first things that seems to trip people up is how to use RAG.

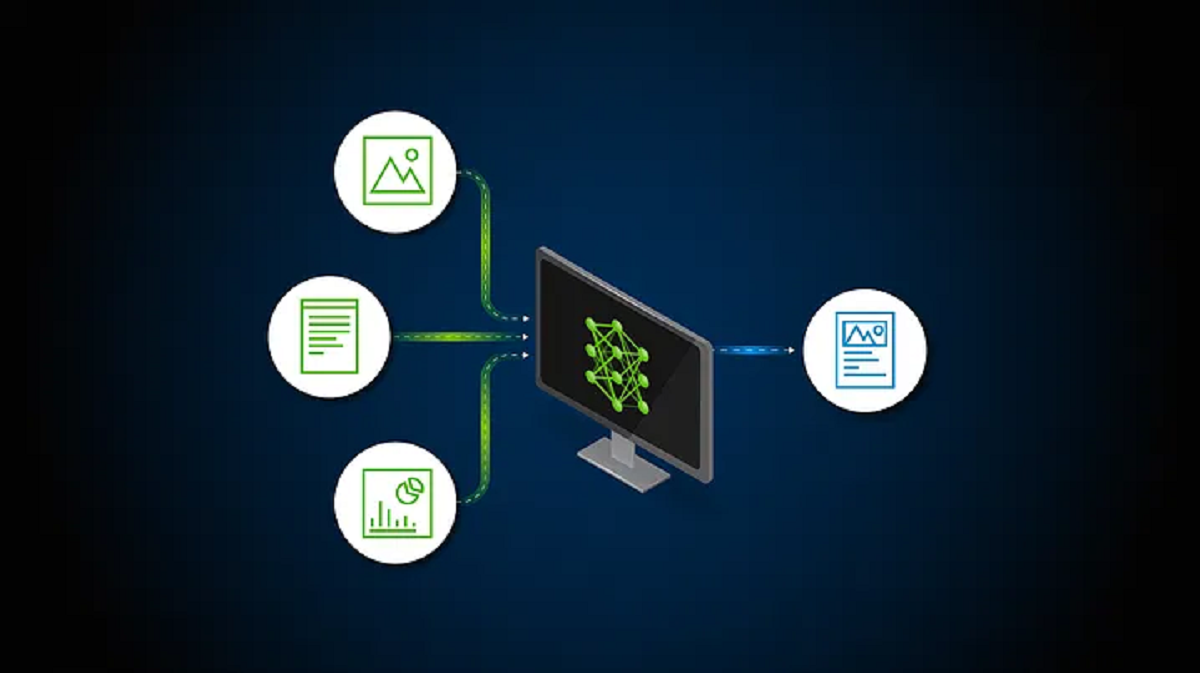

In my opinion, Retrieval-Augmented Generation (RAG) is a cornerstone of intelligent applications, because it lets models draw on large amounts of data efficiently and accurately. RAG gives AI tools access to whatever information is relevant to your particular use case without the need for fine-tuning or retraining. I have seen rough figures for this on a number of sites, and they mostly agree: RAG gets you about 70–80% of the way to a finished AI tool, prompt optimization is the next biggest contributor, and fine-tuning or training can get you the last 10%.

With that in mind, RAG is a good place to start.

For developers venturing into RAG, understanding the key components is essential for building robust systems. In this article, I’ll walk you through the critical steps of document loading, text splitting, chunking, embedding, and retrievers, concluding with the final LLM calls. My goal is to help you navigate these components to find the best solutions for your specific use case while sharing insights from my own experiences.

One final note: I have spent a lot of time working with LangChain, so my examples below use that framework. With minor alterations, you can adapt the same code to your own use case.

Document Loading: More Than Just Content

Document loading is the first step in the RAG process, where you retrieve the necessary metadata and content from your documents. While it’s tempting to focus solely on the content, doing so limits your system’s capabilities. Metadata — such as headers, bullets, and page numbers — provides valuable context that can significantly enhance the accuracy of your retrieval and generation processes.

For instance, when working with Word documents, the headers can be used to categorize content, bullets to understand structure, and page numbers to keep track of document sections. To properly leverage this, I recommend exploring document loaders in LangChain. These loaders can extract both content and metadata efficiently, giving you a richer dataset to work with from the start. Here’s a snippet of code that demonstrates this process:

from langchain_community.document_loaders import UnstructuredWordDocumentLoader

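# Load the document and its metadata.
# Note: the loader defaults to mode="single" (one Document per file);
# passing mode="elements" returns one Document per element with richer metadata.
loader = UnstructuredWordDocumentLoader('uploads/policy.docx')
documents = loader.load()

# Each document now contains both content and metadata
for doc in documents:
    print(doc.metadata)
    print(doc.page_content)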

In my particular use case, when information is found I want to return additional data on where that information is located in a document. Without the metadata this would be severely limited.
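Here is a minimal sketch of that idea: turning whatever metadata travels with a chunk into a readable "where this came from" label. The `Chunk` class is a hypothetical stand-in for a loaded document, and the metadata keys (`source`, `heading`, `page_number`) and sample text are assumptions for illustration; the keys you actually get depend on the loader and its mode.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    """Hypothetical stand-in for a loaded document element."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def describe_location(chunk: Chunk) -> str:
    """Build a human-readable location from whatever metadata is present."""
    parts = [chunk.metadata.get("source", "unknown source")]
    heading = chunk.metadata.get("heading")        # assumed key
    page = chunk.metadata.get("page_number")       # assumed key
    if heading:
        parts.append(f'section "{heading}"')
    if page is not None:
        parts.append(f"page {page}")
    return ", ".join(parts)


# Made-up sample data
chunks = [
    Chunk("Employees accrue 1.5 vacation days per month.",
          {"source": "uploads/policy.docx", "heading": "Leave", "page_number": 4}),
    Chunk("Text with no metadata at all."),
]

for chunk in chunks:
    print(f"{chunk.page_content}  [{describe_location(chunk)}]")
```

The second chunk shows the limitation mentioned above: with no metadata, the best the system can say is "unknown source".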

Text Splitting & Chunking: Precision in Fragmentation

Once your documents are loaded, the next step is text splitting. This is where you divide the content into smaller, manageable pieces. The way you split the text can have a profound impact on your system’s performance. I personally favor recursive character splitting for this task, which tries a list of separator characters in order (like double newlines, then single newlines) to find the most natural splitting points.
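To make the recursive idea concrete, here is a simplified sketch in plain Python. It is not LangChain's implementation, just an illustration under my own assumptions: the function name `recursive_split`, the separator order, and the sample text are all made up for the example.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ")):
    """Split text into pieces of at most chunk_size characters,
    preferring the coarsest separator that works."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators:
        # No separators left: fall back to a hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, rest = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate          # keep merging small pieces together
            continue
        if current:
            chunks.append(current)       # flush what has been merged so far
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # Piece is still too big: retry with the next, finer separator.
            chunks.extend(recursive_split(piece, chunk_size, rest))
    if current:
        chunks.append(current)
    return chunks


sample = (
    "Intro paragraph.\n\n"
    "Line one of section.\nLine two of section is a bit longer.\n\n"
    "End."
)
pieces = recursive_split(sample, chunk_size=30)
for piece in pieces:
    print(repr(piece))
```

Paragraph breaks are tried first, then line breaks, then spaces, so a piece is only cut mid-sentence when nothing coarser fits within the size limit.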

Chunking is closely related to text splitting, but it involves grouping the split text into chunks that your model can process effectively. Getting the chunk size and overlap ratio right is crucial. Too large a chunk, and you risk losing the specificity of the content; too small, and you might miss out on important context.

Text splitting and chunking are two sides of the same coin — where splitting breaks down the text, and chunking organizes these fragments for optimal processing.

While I haven’t conducted extensive testing on chunking, the settings I’ve found effective so far are a chunk size of 1000 characters with a 30-character overlap. This balance allows for sufficient context retention while keeping the chunks manageable. As you experiment, you might find different settings that better suit your needs.

Here is an example using LangChain’s “RecursiveCharacterTextSplitter” class, which falls back to progressively finer separators based on the document’s structure, with the chunking settings above:

from langchain.text_splitter import RecursiveCharacterTextSplitter

splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=30,
)

split_docs = splitter.split_documents(documents)
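If it helps to see what chunk size and overlap do mechanically, here is a fixed-width sketch in plain Python. It is a simplification I am assuming for illustration (the hypothetical `sliding_chunks` cuts at exact character offsets, whereas the LangChain splitter works at separator boundaries), but the arithmetic is the same: each new chunk starts `chunk_size - chunk_overlap` characters after the previous one.

```python
def sliding_chunks(text, chunk_size, chunk_overlap):
    """Cut text into fixed-width windows that share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


alphabet_chunks = sliding_chunks("abcdefghijklmnopqrstuvwxyz", chunk_size=10, chunk_overlap=3)
for chunk in alphabet_chunks:
    print(chunk)

# With the settings above, each chunk advances 970 characters
print(len(sliding_chunks("x" * 5000, chunk_size=1000, chunk_overlap=30)))
```

The last three characters of each chunk reappear at the start of the next one, which is what keeps a sentence that straddles a boundary from losing its context entirely.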

Embedding: Converting Text to Vectors

Once your text is chunked, the next step is embedding — converting the chunks into vectors that your model can understand. Embeddings are the backbone of RAG systems, transforming words into numerical representations that capture semantic meaning. There are numerous embedding options available, depending on whether you’re working locally or using cloud services.
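As a rough illustration of what "capturing semantic meaning" buys you, the sketch below compares a few vectors with cosine similarity. The three-dimensional vectors are made up by hand for the example (a real embedding model outputs hundreds of dimensions), and the helper names are my own.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


# Hand-made toy "embeddings", not output from any real model
vacation = [0.9, 0.1, 0.0]
holiday = [0.8, 0.2, 0.1]
invoice = [0.0, 0.2, 0.9]

print(cosine_similarity(vacation, holiday))   # related concepts score high
print(cosine_similarity(vacation, invoice))   # unrelated concepts score low

# After normalization, a plain dot product gives the same number as cosine similarity
dot = sum(x * y for x, y in zip(normalize(vacation), normalize(holiday)))
print(dot)
```

That last point is why many embedding setups normalize their vectors: similarity search can then use a cheap dot product.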

For local embeddings, I use a model called “BAAI/bge-small-en”, which excels at generating high-quality embeddings.

from langchain_community.embeddings import HuggingFaceBgeEmbeddings

model_name = "BAAI/bge-small-en"

model_kwargs = {"device": "cpu"}

encode_kwargs = {"normalize_embeddings": True}

embedder = HuggingFaceBgeEmbeddings(
    model_name=model_name,
    model_kwargs=model_kwargs,
    encode_kwargs=encode_kwargs,
)

When working with OpenAI’s services, I typically use their “text-embedding-ada-002” embedding model for seamless integration. Here’s how you can implement this:

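import os
from langchain_openai import OpenAIEmbeddings

# Embedding models take no temperature; the API key is read from the environment rather than hard-coded
embedder = OpenAIEmbeddings(model="text-embedding-ada-002", openai_api_key=os.environ["OPENAI_API_KEY"])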

Retrievers: Smart Context Finders

Retrievers are the components that search for relevant chunks based on a query, leveraging the embeddings you’ve generated. Think of them as smart context finders that can operate on a vector database or directly on in-memory vector embeddings. The choice of retriever depends on your specific use case — whether you need rapid in-memory retrieval or the scalability of a vector database.

In many cases, a simple in-memory retriever is sufficient, especially for smaller datasets. For larger applications, consider using a vector database like Facebook AI Similarity Search (FAISS), Qdrant, or Pinecone to store and retrieve embeddings efficiently.
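To show what a retriever does under the hood, here is a small in-memory sketch in plain Python. Everything in it is an assumption for illustration: `toy_embed` is a word-count stand-in for a real embedding model, `InMemoryRetriever` is a hypothetical class, and the sample chunks are made up.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a sparse word-count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryRetriever:
    """Embeds every chunk once, then ranks chunks against each query."""

    def __init__(self, chunks):
        self.index = [(chunk, toy_embed(chunk)) for chunk in chunks]

    def retrieve(self, query, k=2):
        q = toy_embed(query)
        ranked = sorted(self.index, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


toy_retriever = InMemoryRetriever([
    "vacation days accrue monthly",
    "invoices are paid within 30 days",
    "remote work requires manager approval",
])
top = toy_retriever.retrieve("how many vacation days do I get", k=1)
print(top)
```

A vector database does the same ranking, but with persistent storage and approximate search structures, so it stays fast when a linear scan over every chunk no longer is.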

Typically, if I do not plan on retrieving information from the document source long term, I simply work with it in memory. If that isn’t the case for you, then saving it to a vector database is probably what you want. The code below is an example of working with the document in memory. As you can see, we pass the split_docs variable that resulted from our splitting step, along with the embedder variable we defined one step earlier, to the FAISS vector store and then expose it as a retriever.

from langchain.vectorstores import FAISS

retriever = FAISS.from_documents(split_docs, embedder).as_retriever()

I should note that while FAISS is easy to use, I ultimately found Qdrant to be faster and just as accurate, if not a tiny bit more so. I’ll provide an example of that as a retriever as well. I suggest you try other alternatives and find out which ones work best for you.

from langchain_community.vectorstores import Qdrant

qdrant = Qdrant.from_documents(split_docs, embedder, location=":memory:")

retriever = qdrant.as_retriever()

LLM Calls: Bringing It All Together

Finally, you’ll make calls to your Large Language Model (LLM) to generate responses based on the retrieved information. I personally use LangChain’s “RetrievalQAWithSourcesChain” class, which not only generates responses but also provides source attribution. This transparency is invaluable for many applications, ensuring that users can trust the information provided.

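import os

from langchain.chains import RetrievalQAWithSourcesChain
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(temperature=0.3, openai_api_key=os.environ["OPENAI_API_KEY"])

# Build the chain with the from_chain_type helper rather than the bare constructor
qa_chain = RetrievalQAWithSourcesChain.from_chain_type(llm=llm, retriever=retriever)

# The chain expects a "question" key and returns "answer" and "sources"
result = qa_chain.invoke({"question": "Your query here"})
print(result["answer"], result["sources"])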

However, you don’t have to use this specific class. Depending on your needs, you might opt for a simpler setup or a more complex one with additional features. The key is to choose a solution that balances efficiency with accuracy for your use case. Here is an example using LangChain Expression Language (LCEL):

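import os

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(temperature=0.3, openai_api_key=os.environ["OPENAI_API_KEY"])

# Placeholder prompt (the original prompt is not shown); it must expose the {topic} variable
prompt = ChatPromptTemplate.from_template("Answer the following question: {topic}")

chain = prompt | llm | StrOutputParser()

query = "Your query here"
result = chain.invoke({"topic": query})
print(result)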
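Whichever chain style you pick, the step underneath is the same: the retrieved chunks get formatted into the prompt next to the question, ideally with a label the model can cite. Here is a minimal plain-Python sketch of that step. The `build_prompt` helper, the prompt wording, and the sample data are my own assumptions, not what any LangChain chain uses internally.

```python
def build_prompt(question, retrieved):
    """Format retrieved (text, source) pairs and the question into one prompt."""
    context_lines = [
        f"[{i}] {text} (source: {source})"
        for i, (text, source) in enumerate(retrieved, start=1)
    ]
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "Cite the bracketed numbers you relied on.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up retrieval results for illustration
retrieved = [
    ("Employees accrue 1.5 vacation days per month.", "uploads/policy.docx"),
    ("Unused vacation days expire after 12 months.", "uploads/policy.docx"),
]
prompt_text = build_prompt("How many vacation days do I earn per month?", retrieved)
print(prompt_text)
```

Numbering the chunks is a small design choice that pays off later: it gives the model something short to cite and gives you a way to map an answer back to its source.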

Bonus Tip

You may know the temperature setting for a large language model controls the randomness of the generated output. It determines how creative or conservative the model’s responses should be.

Low Temperature (e.g., 0.2): When you set a low temperature, the model’s output becomes more focused and deterministic. It tends to choose the most probable next word or phrase, leading to more predictable and coherent responses. This setting is useful when you need precise, accurate answers or when you’re looking to minimize the chances of generating unexpected or unconventional outputs.

High Temperature (e.g., 0.8 or 1.0): A higher temperature setting makes the model’s output more random and creative. The model is more likely to choose less probable words or phrases, which can lead to more diverse and imaginative responses. However, this also increases the likelihood of generating responses that might be less coherent or off-topic. This setting is useful for creative writing, brainstorming, or when you want the model to explore a broader range of possibilities.
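For the curious, here is a small sketch of the usual mechanism behind this setting: the model's raw scores (logits) are divided by the temperature before being turned into probabilities with a softmax. The logits below are made-up numbers for three candidate tokens, and the function name is my own.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; 0 is usually handled as greedy argmax")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.2, 0.8, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the top-scoring token, while at higher temperatures the lower-scoring tokens get a real chance of being sampled.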

What you probably didn’t know was that it also has an effect on the accuracy and completeness of your RAG queries. Most of my use cases have required the highest level of accuracy possible, and one way that I set out to accomplish this was to typically use a temperature setting of 0. However, during some intense RAG testing I found that a setting of 0.3–0.4 ultimately resulted in more complete answers.

Conclusion

Building a RAG system requires careful consideration of each component, from document loading to the final LLM calls. By understanding and optimizing these elements, you can create a system that not only retrieves and generates accurate information but also does so efficiently. As you experiment and refine your approach, you’ll find the settings and methods that work best for your specific applications.

I hope this guide has provided a solid foundation for your journey into RAG development. Remember, the key to success lies in continuous experimentation and adaptation — there’s always something new to learn and apply in this rapidly evolving field. Happy coding!
