Kubernetes Cluster Autoscaler on AWS

mehdi pasha

Introduction

On AWS, Cluster Autoscaler uses Amazon EC2 Auto Scaling groups to manage node groups. It typically runs as a Deployment in your cluster.

Prerequisites

Cluster Autoscaler requires Kubernetes v1.3.0 or greater.

Enable IAM roles for service accounts

IAM Policy

IAM OIDC provider

Instructions to configure the Autoscaler

First, I will create an EKS cluster using the eksctl utility, as shown below. If you already have a cluster, skip these steps.

ubuntu@ip-172-31-38-159:~$ sudo snap install aws-cli --classic

aws-cli (v2/stable) 2.17.63 from Amazon Web Services (aws✓) installed

ubuntu@ip-172-31-38-159:~$ sudo snap install kubectl --classic

kubectl 1.31.1 from Canonical✓ installed

ubuntu@ip-172-31-38-159:~$ # for ARM systems, set ARCH to: `arm64`, `armv6` or `armv7`

ARCH=amd64

PLATFORM=$(uname -s)_$ARCH

curl -sLO "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_$PLATFORM.tar.gz"

# (Optional) Verify checksum

curl -sL "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_checksums.txt" | grep $PLATFORM | sha256sum --check

tar -xzf eksctl_$PLATFORM.tar.gz -C /tmp && rm eksctl_$PLATFORM.tar.gz

sudo mv /tmp/eksctl /usr/local/bin

eksctl_Linux_amd64.tar.gz: OK

ubuntu@ip-172-31-38-159:~$

ubuntu@ip-172-31-38-159:~$ eksctl create cluster \

--name demo-autoscaling \

--region us-east-1 \

--nodegroup-name standard-workers \

--node-type t3.small \

--nodes 2 \

--nodes-min 1 \

--nodes-max 3 \

--managed

2024-10-02 16:11:13 [✔] EKS cluster "demo-autoscaling" in "us-east-1" region is ready

ubuntu@ip-172-31-38-159:~$ aws eks --region us-east-1 update-kubeconfig --name demo-autoscaling

Added new context arn:aws:eks:us-east-1:123456:cluster/demo-autoscaling to /home/ubuntu/.kube/config

Let's start with the autoscaling configuration steps.

Create an IAM OIDC provider for your cluster

ubuntu@ip-172-31-38-159:~$ cluster_name=demo-autoscaling

oidc_id=$(aws eks describe-cluster --name $cluster_name --query "cluster.identity.oidc.issuer" --output text | cut -d '/' -f 5)

echo $oidc_id

eksctl utils associate-iam-oidc-provider --cluster $cluster_name --approve

4A5EA796644FF266A4E6C2AC9F0182F7

2024-10-02 16:13:51 [ℹ] will create IAM Open ID Connect provider for cluster "demo-autoscaling" in "us-east-1"

2024-10-02 16:13:51 [✔] created IAM Open ID Connect provider for cluster "demo-autoscaling" in "us-east-1"
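The `cut -d '/' -f 5` step above works because the cluster's OIDC ID is the fifth slash-separated field of the issuer URL. A minimal sketch of that extraction, assuming an issuer URL of the usual EKS form (the URL below is reconstructed from the region and ID printed above, not copied from the original session):

```shell
# Assumed issuer URL; on a real cluster it comes from
# aws eks describe-cluster --query "cluster.identity.oidc.issuer"
issuer="https://oidc.eks.us-east-1.amazonaws.com/id/4A5EA796644FF266A4E6C2AC9F0182F7"

# Splitting on "/" yields: "https:", "", the host, "id", and the OIDC ID.
oidc_id=$(printf '%s\n' "$issuer" | cut -d '/' -f 5)
echo "$oidc_id"
```

The extracted ID is handy for checking whether a provider is already registered, for example by searching the output of `aws iam list-open-id-connect-providers` for it.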

Create the IAM policy. Any name will do; this walkthrough uses AmazonEKSClusterAutoscalerPolicy.
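The contents of my-policy.json are not shown in this walkthrough. The sketch below writes one possible version of it, based on the permissions described in the Cluster Autoscaler AWS README (see Reference). Treat the exact action list as an assumption and compare it with the README for your Cluster Autoscaler version before use:

```shell
# Write an assumed policy document to my-policy.json in the current directory.
# The action list is illustrative, not taken from the original session.
cat > my-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeAutoScalingInstances",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeScalingActivities",
        "autoscaling:DescribeTags",
        "ec2:DescribeInstanceTypes",
        "ec2:DescribeLaunchTemplateVersions"
      ],
      "Resource": ["*"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:SetDesiredCapacity",
        "autoscaling:TerminateInstanceInAutoScalingGroup",
        "ec2:DescribeImages",
        "ec2:GetInstanceTypesFromInstanceRequirements",
        "eks:DescribeNodegroup"
      ],
      "Resource": ["*"]
    }
  ]
}
EOF
```

The first statement covers the read-only calls the autoscaler makes to discover Auto Scaling groups; the second covers the calls that change capacity. `autoscaling:DescribeAutoScalingGroups` is the action that is denied in the Troubleshooting logs.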

ubuntu@ip-172-31-38-159:~$ aws iam create-policy --policy-name AmazonEKSClusterAutoscalerPolicy --policy-document file://my-policy.json

{

"Policy": {

"PolicyName": "AmazonEKSClusterAutoscalerPolicy",

"PolicyId": "ANPttttttQSKQAW4EWG7H",

"Arn": "arn:aws:iam::12345:policy/AmazonEKSClusterAutoscalerPolicy",

"Path": "/",

"DefaultVersionId": "v1",

"AttachmentCount": 0,

"PermissionsBoundaryUsageCount": 0,

"IsAttachable": true,

"CreateDate": "2024-10-02T16:19:50+00:00",

"UpdateDate": "2024-10-02T16:19:50+00:00"

}

}

IAM Service Account

ubuntu@ip-172-31-38-159:~$ eksctl create iamserviceaccount --name my-service-account --namespace kube-system --cluster demo-autoscaling --role-name my-role \

--attach-policy-arn arn:aws:iam::***:policy/AmazonEKSClusterAutoscalerPolicy --approve

2024-10-02 16:24:18 [ℹ] 1 iamserviceaccount (kube-system/my-service-account) was included (based on the include/exclude rules)

2024-10-02 16:24:18 [!] serviceaccounts that exist in Kubernetes will be excluded, use --override-existing-serviceaccounts to override

2024-10-02 16:24:18 [ℹ] 1 task: {

2 sequential sub-tasks: {

create IAM role for serviceaccount "kube-system/my-service-account",

create serviceaccount "kube-system/my-service-account",

} }

2024-10-02 16:24:18 [ℹ] building iamserviceaccount stack "eksctl-demo-autoscaling-addon-iamserviceaccount-kube-system-my-service-account"

2024-10-02 16:24:18 [ℹ] deploying stack "eksctl-demo-autoscaling-addon-iamserviceaccount-kube-system-my-service-account"

2024-10-02 16:24:18 [ℹ] waiting for CloudFormation stack "eksctl-demo-autoscaling-addon-iamserviceaccount-kube-system-my-service-account"

2024-10-02 16:24:48 [ℹ] waiting for CloudFormation stack "eksctl-demo-autoscaling-addon-iamserviceaccount-kube-system-my-service-account"

2024-10-02 16:24:48 [ℹ] created serviceaccount "kube-system/my-service-account"
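Note that the manifest applied in the next step creates its own service account named cluster-autoscaler, while the command above binds the IAM role to my-service-account. That mismatch may be why the pod later falls back to the node instance role (see Troubleshooting). A sketch of binding the role to the service account name the manifest uses instead; `<ACCOUNT_ID>` is a placeholder, and the `run` helper is a hypothetical dry-run wrapper that only prints the command unless `DRY_RUN=0` is set:

```shell
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

cluster_name=demo-autoscaling
# <ACCOUNT_ID> is a placeholder for your AWS account ID.
policy_arn="arn:aws:iam::<ACCOUNT_ID>:policy/AmazonEKSClusterAutoscalerPolicy"

# Bind the policy to the service account name used by the manifest.
run eksctl create iamserviceaccount \
  --cluster "$cluster_name" \
  --namespace kube-system \
  --name cluster-autoscaler \
  --attach-policy-arn "$policy_arn" \
  --override-existing-serviceaccounts \
  --approve
```

`--override-existing-serviceaccounts` matters if the manifest has already been applied, because eksctl otherwise skips service accounts that exist in the cluster, as the warning in the output above says.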

Download cluster-autoscaler-autodiscover.yaml from the Cluster Autoscaler GitHub repository (see Reference), then apply it to the cluster.
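The upstream example manifest contains a `<YOUR CLUSTER NAME>` placeholder in its auto-discovery flag, which has to be replaced before the manifest is applied. A sketch of that substitution on a stand-in line (an assumption: the line mirrors the flag in the upstream manifest; the tag keys match the ones printed in the Troubleshooting logs):

```shell
cluster_name=demo-autoscaling

# Stand-in for the single manifest line that needs editing.
line='- --node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/<YOUR CLUSTER NAME>'

# Replace the placeholder with the real cluster name.
patched=$(printf '%s\n' "$line" | sed "s/<YOUR CLUSTER NAME>/$cluster_name/")
printf '%s\n' "$patched"
```

Against the downloaded file, the same expression can be applied in place with `sed -i` before running `kubectl apply`.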

ubuntu@ip-172-31-38-159:~$ kubectl apply -f cluster-autoscaler-autodiscover.yaml

serviceaccount/cluster-autoscaler created

clusterrole.rbac.authorization.k8s.io/cluster-autoscaler created

role.rbac.authorization.k8s.io/cluster-autoscaler created

clusterrolebinding.rbac.authorization.k8s.io/cluster-autoscaler created

rolebinding.rbac.authorization.k8s.io/cluster-autoscaler created

deployment.apps/cluster-autoscaler created

ubuntu@ip-172-31-38-159:~$ kubectl -n kube-system get pods

NAME READY STATUS RESTARTS AGE

aws-node-8fhmv 2/2 Running 0 27m

aws-node-jpzkf 2/2 Running 0 27m

cluster-autoscaler-5b987f8b78-9f7zp 1/1 Running 0 9m47s

coredns-586b798467-5glbk 1/1 Running 0 30m

coredns-586b798467-qngdz 1/1 Running 0 30m

kube-proxy-k9pcc 1/1 Running 0 27m

kube-proxy-xqkc2 1/1 Running 0 27m

ubuntu@ip-172-31-38-159:~$ kubectl -n kube-system get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

cluster-autoscaler 1/1 1 1 9m56s

coredns 2/2 2 2 30m

ubuntu@ip-172-31-38-159:~$ kubectl -n kube-system logs -f deployment.apps/cluster-autoscaler

I1002 16:44:02.336072 1 static_autoscaler.go:631] Scale down status: lastScaleUpTime=2024-10-02 15:34:39.834184668 +0000 UTC m=-3594.715082169 lastScaleDownDeleteTime=2024-10-02 15:34:39.834184668 +0000 UTC m=-3594.715082169 lastScaleDownFailTime=2024-10-02 15:34:39.834184668 +0000 UTC m=-3594.715082169 scaleDownForbidden=false scaleDownInCooldown=false

I1002 16:44:02.336096 1 static_autoscaler.go:652] Starting scale down

I1002 16:44:02.336118 1 nodes.go:134] ip-192-168-24-44.ec2.internal was unneeded for 9m12.486754847s
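The last log line reports how long the node has been unneeded. Cluster Autoscaler removes a node only after it has stayed unneeded for `--scale-down-unneeded-time`, which defaults to 10 minutes. A small sketch of the arithmetic, assuming that default has not been changed in the manifest (the manifest itself is not shown here):

```shell
# Duration copied from the log line above.
unneeded="9m12.486754847s"
# Assumed threshold: the default --scale-down-unneeded-time of 10 minutes.
threshold=600

minutes=${unneeded%%m*}        # text before the first "m"
rest=${unneeded#*m}            # text after the first "m"
seconds=${rest%%.*}            # whole seconds, fraction dropped
seconds=$(( ${seconds#0} + 0 ))  # strip one leading zero to avoid octal parsing

elapsed=$((minutes * 60 + seconds))
remaining=$((threshold - elapsed))
echo "unneeded for ${elapsed}s; about ${remaining}s until eligible for removal"
```

The parsing only handles durations of the `<minutes>m<seconds>s` form shown in this log line.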

ubuntu@ip-172-31-38-159:~$ kubectl -n kube-system get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

cluster-autoscaler 1/1 1 1 18m

coredns 2/2 2 2 39m

ubuntu@ip-172-31-38-159:~$ kubectl scale -n kube-system --current-replicas=1 --replicas=10 deployment/cluster-autoscaler

deployment.apps/cluster-autoscaler scaled

ubuntu@ip-172-31-38-159:~$ kubectl -n kube-system get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

cluster-autoscaler 2/10 10 2 21m

coredns 2/2 2 2 41m

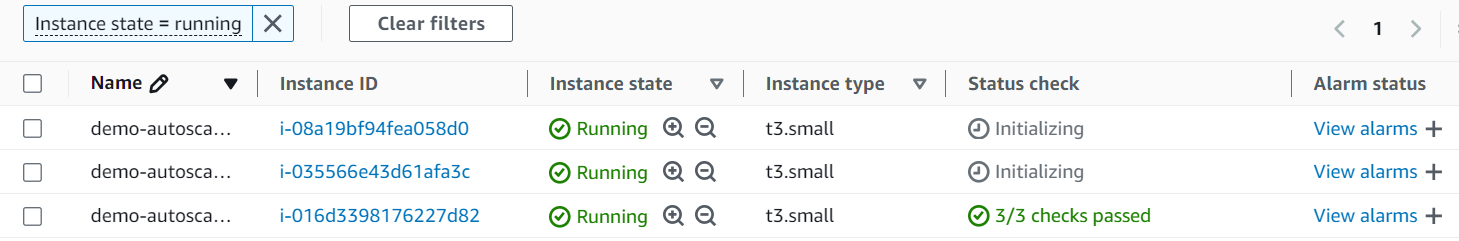

As the image below shows, a node was added to the cluster.

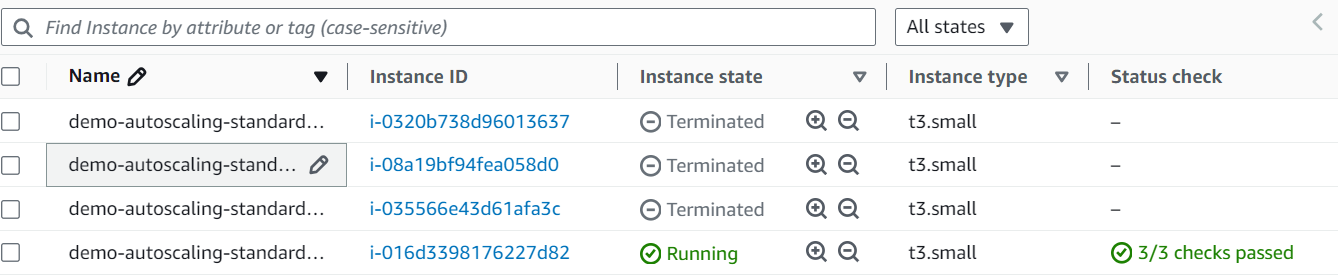

Testing

After the scale-up test, I reduced the pods back to a single replica:

kubectl scale -n kube-system --current-replicas=10 --replicas=1 deployment/cluster-autoscaler

Once the extra pods were gone, the cluster scaled back down and only one node is running, as required.
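An alternative way to exercise scaling is a throwaway workload rather than the autoscaler's own Deployment. The sketch below is an assumption, not part of the original test: the deployment name `scale-test`, the pause image, and the CPU request are illustrative, and the `run` helper is a hypothetical dry-run wrapper that only prints each command unless `DRY_RUN=0` is set:

```shell
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Create pods that do nothing but reserve CPU, so that some stay Pending.
run kubectl create deployment scale-test --image=registry.k8s.io/pause:3.9 --replicas=10
run kubectl set resources deployment scale-test --requests=cpu=500m

# Watch for Pending pods and for new nodes joining.
run kubectl get pods -l app=scale-test
run kubectl get nodes

# Clean up; the extra nodes should be removed after the scale-down delay.
run kubectl delete deployment scale-test
```

Requesting CPU explicitly is what makes the pods unschedulable on small nodes; pods without resource requests can pack onto existing nodes without triggering a scale-up.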

Troubleshooting

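One issue I faced involved the role created by eksctl: the Cluster Autoscaler pod was running under the node instance role, which lacked the autoscaling permissions, so I had to attach the AmazonEKSClusterAutoscalerPolicy policy to that role. A likely cause is that the Deployment runs as the cluster-autoscaler service account from the manifest, while the IAM role was bound to my-service-account. The logs before the fix: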

I1002 16:31:45.149925 1 aws_manager.go:79] AWS SDK Version: 1.48.7

I1002 16:31:45.150034 1 auto_scaling_groups.go:396] Regenerating instance to ASG map for ASG names: []

I1002 16:31:45.150048 1 auto_scaling_groups.go:403] Regenerating instance to ASG map for ASG tags: map[k8s.io/cluster-autoscaler/demo-autoscaling: k8s.io/cluster-autoscaler/enabled:]

E1002 16:31:45.177839 1 aws_manager.go:128] Failed to regenerate ASG cache: AccessDenied: User: arn:aws:sts::****:assumed-role/eksctl-demo-autoscaling-nodegroup--NodeInstanceRole-lP1xaXWG65uO/i-0320b738d96013637 is not authorized to perform: autoscaling:DescribeAutoScalingGroups because no identity-based policy allows the autoscaling:DescribeAutoScalingGroups action

status code: 403, request id: 75e6adb1-bc02-424c-9a55-5ad99a928a8e

F1002 16:31:45.177883 1 aws_cloud_provider.go:460] Failed to create AWS Manager: AccessDenied: User: arn:aws:sts::****:assumed-role/eksctl-demo-autoscaling-nodegroup--NodeInstanceRole-lP1xaXWG65uO/i-0320b738d96013637 is not authorized to perform: autoscaling:DescribeAutoScalingGroups because no identity-based policy allows the autoscaling:DescribeAutoScalingGroups action

status code: 403, request id: 75e6adb1-bc02-424c-9a55-5ad99a928a8e
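The `assumed-role` ARN in the error shows which role the pod is actually using. A sketch that pulls the role name out of the message; the check for `NodeInstanceRole` is only a heuristic based on the role name seen in this log, not a general rule:

```shell
# Error text copied from the log above (the account ID is masked in the original).
err='AccessDenied: User: arn:aws:sts::****:assumed-role/eksctl-demo-autoscaling-nodegroup--NodeInstanceRole-lP1xaXWG65uO/i-0320b738d96013637 is not authorized to perform: autoscaling:DescribeAutoScalingGroups'

# An assumed-role ARN has the form assumed-role/<role name>/<session name>.
role=$(printf '%s\n' "$err" | sed -n 's|.*assumed-role/\([^/]*\)/.*|\1|p')
echo "role in use: $role"

case "$role" in
  *NodeInstanceRole*) echo "pod is using the node instance role, not a service account role" ;;
  *)                  echo "pod is using some other role" ;;
esac
```

If the node instance role shows up here, `kubectl -n kube-system describe serviceaccount cluster-autoscaler` will show whether that service account carries an `eks.amazonaws.com/role-arn` annotation.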

Conclusion

Leveraging AWS Auto Scaling through Cluster Autoscaler helps an organization optimize resource management, maintain application performance, and reduce operational costs in a cloud environment. In this walkthrough, the node group grew when pods could not be scheduled and shrank back to a single node once they were removed. By automating the scaling process, you can focus more on developing and improving your applications while keeping them resilient and cost-effective.

Reference

https://docs.aws.amazon.com/eks/latest/userguide/autoscaling.html

https://github.com/kubernetes/autoscaler/blob/master/cluster-autoscaler/cloudprovider/aws/README.md
