Implementing NFS Persistent Volumes in Kubernetes for MySQL and the Spring PetClinic Application: A Complete Guide

Subbu Tech Tutorials


Before diving into NFS volumes, here is a brief overview of the different types of volumes available in Kubernetes and their use cases:

Temporary Storage: emptyDir

- Use Case: Temporary data storage that lasts only as long as the pod exists (it survives container restarts but is deleted when the pod is removed).
- Example: Scratch space for processing tasks that don't need to persist data.

Host Access: hostPath

- Use Case: Mounts files or directories from the host machine (node) into the pod.
- Example: Accessing host logs or the Docker socket.

Persistent Storage: PVC, NFS, cloud provider disks

- Use Case: Stores data persistently across pod restarts.
- Example: Databases or file storage.
- Volume Types: persistentVolumeClaim, nfs, awsElasticBlockStore, gcePersistentDisk.

Configuration Management: configMap, secret

- Use Case: Injects configuration or sensitive data into pods.
- Example: Config files, or secrets such as passwords and API keys.

Combined Volumes: projected

- Use Case: Combines data from multiple sources, such as configMap and secret, into a single volume.
- Example: Unified configuration and credential management.

Now let's dive deeper into NFS volumes, using the Spring PetClinic application and a MySQL database as a worked example.

NFS (Network File System): (Persistent)

Purpose: Allows multiple Pods running on different nodes to share storage via a network-mounted filesystem.

Usage: The NFS server shares a directory that can be mounted on Pods across multiple nodes.

Use Case: Shared file storage between applications, backup solutions, or any multi-Pod data-sharing requirement.

=> Set up an NFS server using an AWS t2.micro EC2 instance for testing purposes.

=> Open the following ports in the inbound rules of your NFS server's security group:

NFS (2049/TCP and UDP):

- Port: 2049 (TCP and UDP)
- Purpose: This is the primary port used by the NFS protocol for file sharing. It is mandatory for NFS communication.

RPCBind (111/TCP and UDP):

- Port: 111 (TCP and UDP)
- Purpose: rpcbind maps RPC services to the ports they listen on. NFSv3 clients depend on it to locate the NFS-related services, so it is mandatory for NFSv3. NFSv4 clients (like the vers=4 mounts used later in this guide) only need 2049/TCP, but opening 111 keeps NFSv3 clients working too.

Where possible, restrict the source of these rules to your Kubernetes nodes (their security group or subnet) instead of 0.0.0.0/0.
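Before mounting anything, it can help to confirm from a Kubernetes node that the security group actually lets NFS traffic through. The sketch below does this in plain bash. It assumes bash was built with `/dev/tcp` support (the default on Ubuntu) and that coreutils' `timeout` is installed. It only probes TCP, because UDP ports can't be checked this way. The function names are illustrative, not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: probe the NFS server's TCP ports from a Kubernetes node.
# Assumes bash has /dev/tcp support and coreutils' `timeout` is installed.
# Only TCP is tested; UDP cannot be probed with /dev/tcp.

# port_open HOST PORT [TIMEOUT_SECONDS]
# Returns 0 if a TCP connection to HOST:PORT succeeds before the timeout.
port_open() {
  local host="$1" port="$2" secs="${3:-3}"
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# check_nfs_ports HOST
# Prints one line per port and returns non-zero if any port is unreachable.
check_nfs_ports() {
  local host="$1" port status=0
  for port in 2049 111; do   # 2049: NFS, 111: rpcbind
    if port_open "$host" "$port"; then
      echo "OK      ${host}:${port}/tcp"
    else
      echo "BLOCKED ${host}:${port}/tcp"
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_nfs_ports <NFS_SERVER_IP>` prints one OK/BLOCKED line per port. If only NFSv4 clients are involved, a BLOCKED result on 111 is not fatal by itself.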

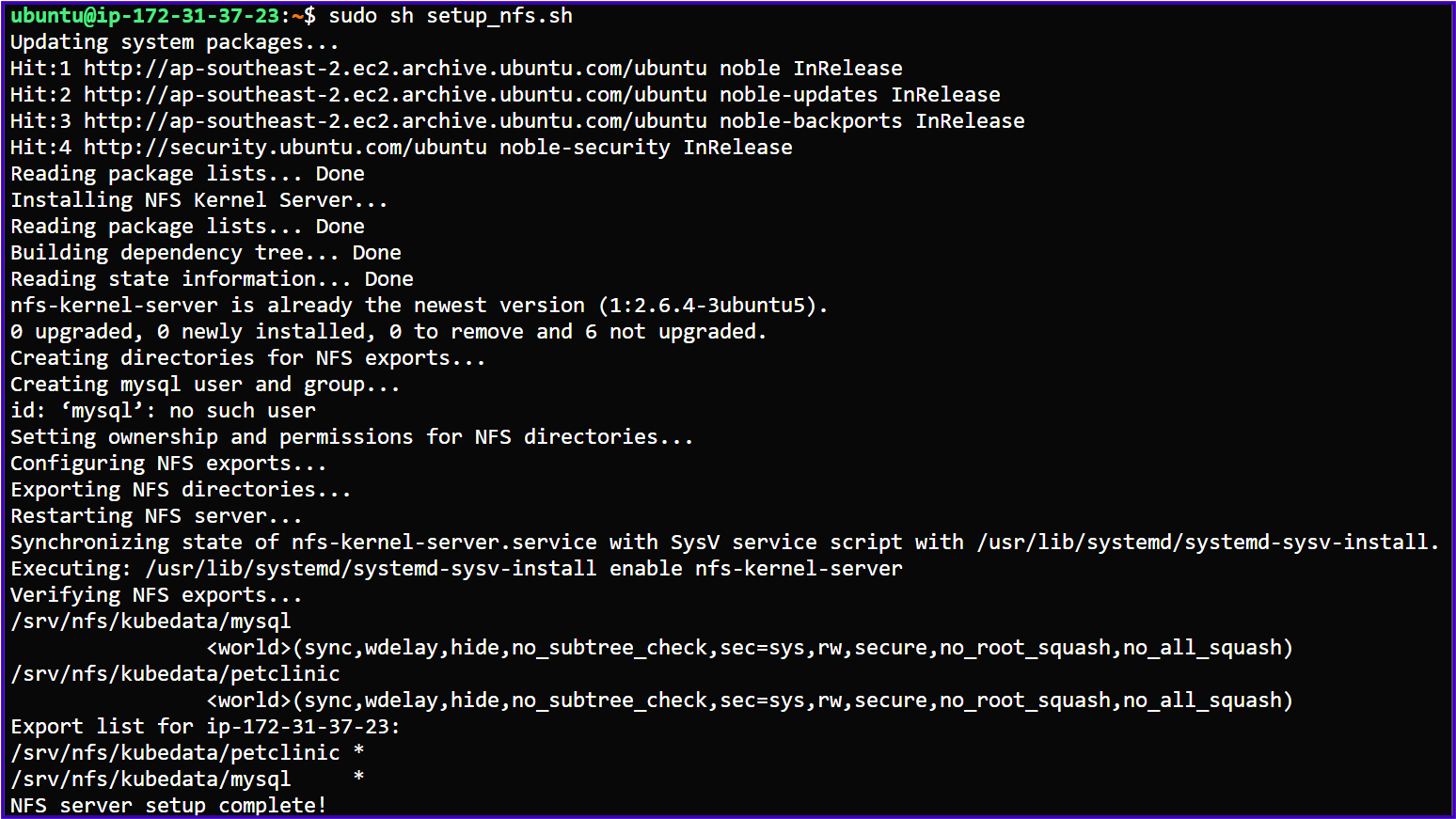

=> Save the following shell script as setup_nfs.sh. It automates the setup of an NFS server on an EC2 instance for the Spring PetClinic application and MySQL database:

```shell
#!/bin/bash
# Exit on any error
set -e

# Step 1: Update system packages
echo "Updating system packages..."
sudo apt-get update -y

# Step 2: Install NFS server
echo "Installing NFS Kernel Server..."
sudo apt-get install -y nfs-kernel-server

# Step 3: Create directories for NFS exports
echo "Creating directories for NFS exports..."
sudo mkdir -p /srv/nfs/kubedata/mysql
sudo mkdir -p /srv/nfs/kubedata/petclinic

# Step 3.1: Create the mysql group and user if they do not exist
# (checked separately, so an existing group alone does not make groupadd fail)
echo "Creating mysql user and group..."
getent group mysql > /dev/null || sudo groupadd mysql
id -u mysql &> /dev/null || sudo useradd -r -g mysql mysql

# Step 4: Set permissions and ownership for directories
echo "Setting ownership and permissions for NFS directories..."
sudo chown -R mysql:mysql /srv/nfs/kubedata/mysql
sudo chown -R nobody:nogroup /srv/nfs/kubedata/petclinic
sudo chmod -R 755 /srv/nfs/kubedata/

# Step 5: Configure NFS exports (overwrites /etc/exports)
echo "Configuring NFS exports..."
NFS_EXPORTS_FILE="/etc/exports"
sudo tee "$NFS_EXPORTS_FILE" > /dev/null <<EOF
/srv/nfs/kubedata/mysql *(rw,sync,no_subtree_check,no_root_squash)
/srv/nfs/kubedata/petclinic *(rw,sync,no_subtree_check,no_root_squash)
EOF

# Step 6: Export NFS directories
echo "Exporting NFS directories..."
sudo exportfs -r

# Step 7: Restart the NFS server and enable it at boot
echo "Restarting NFS server..."
sudo systemctl restart nfs-kernel-server
sudo systemctl enable nfs-kernel-server

# Step 8: Verify NFS exports
echo "Verifying NFS exports..."
sudo exportfs -v
sudo showmount -e

echo "NFS server setup complete!"
```

Make the script executable:

```shell
chmod +x setup_nfs.sh
```

Run the script on your NFS EC2 server:

```shell
sudo ./setup_nfs.sh
```

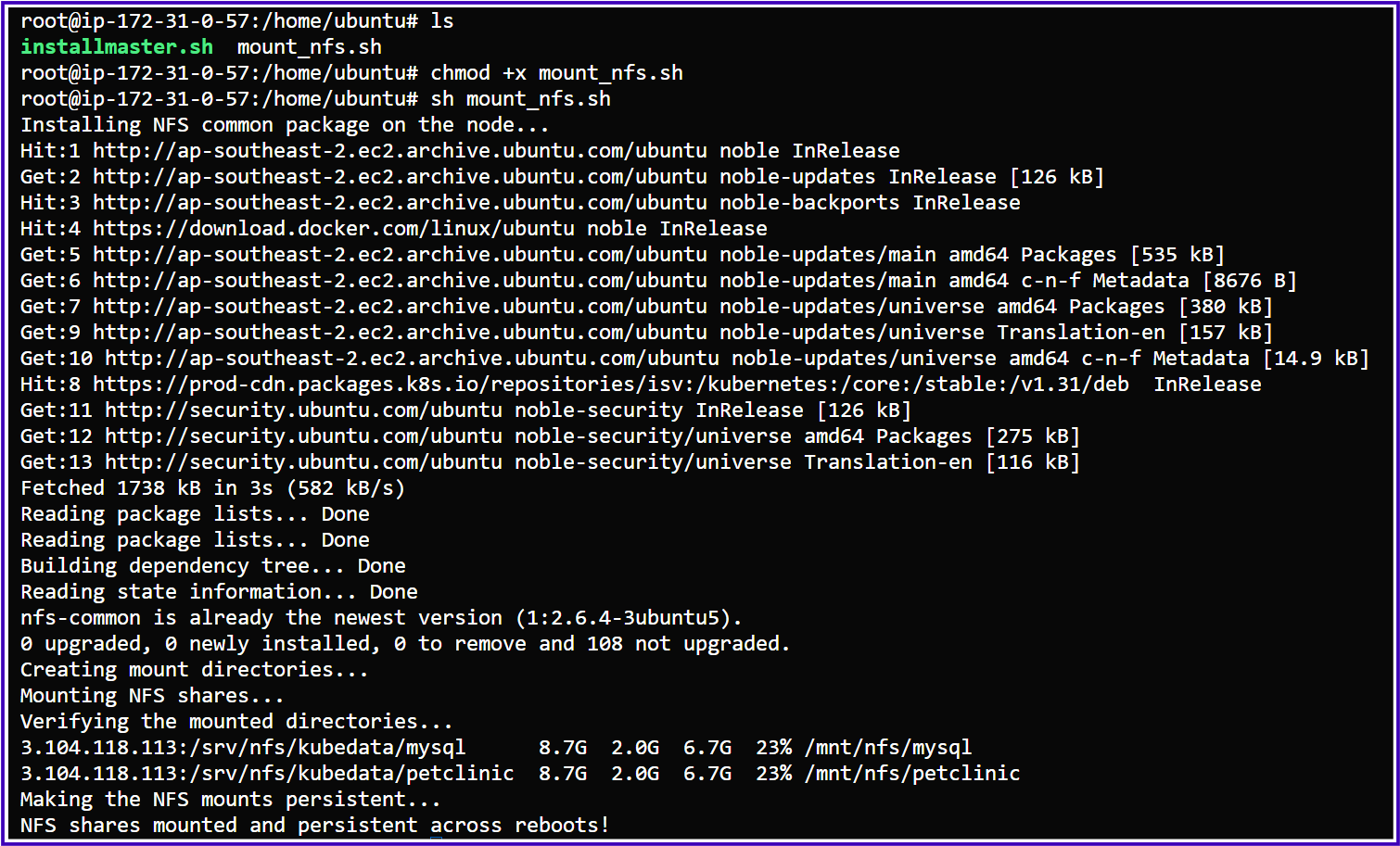

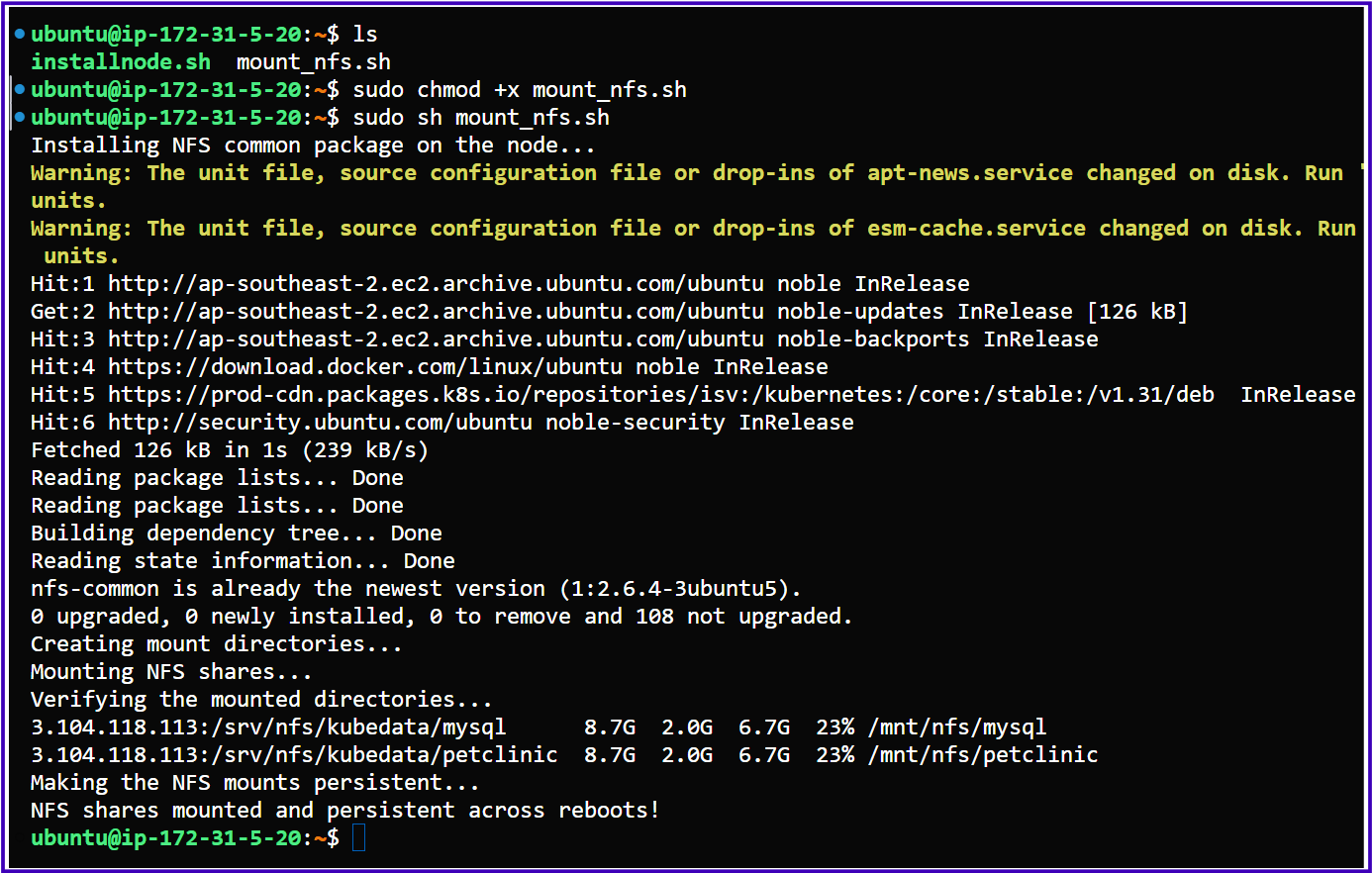

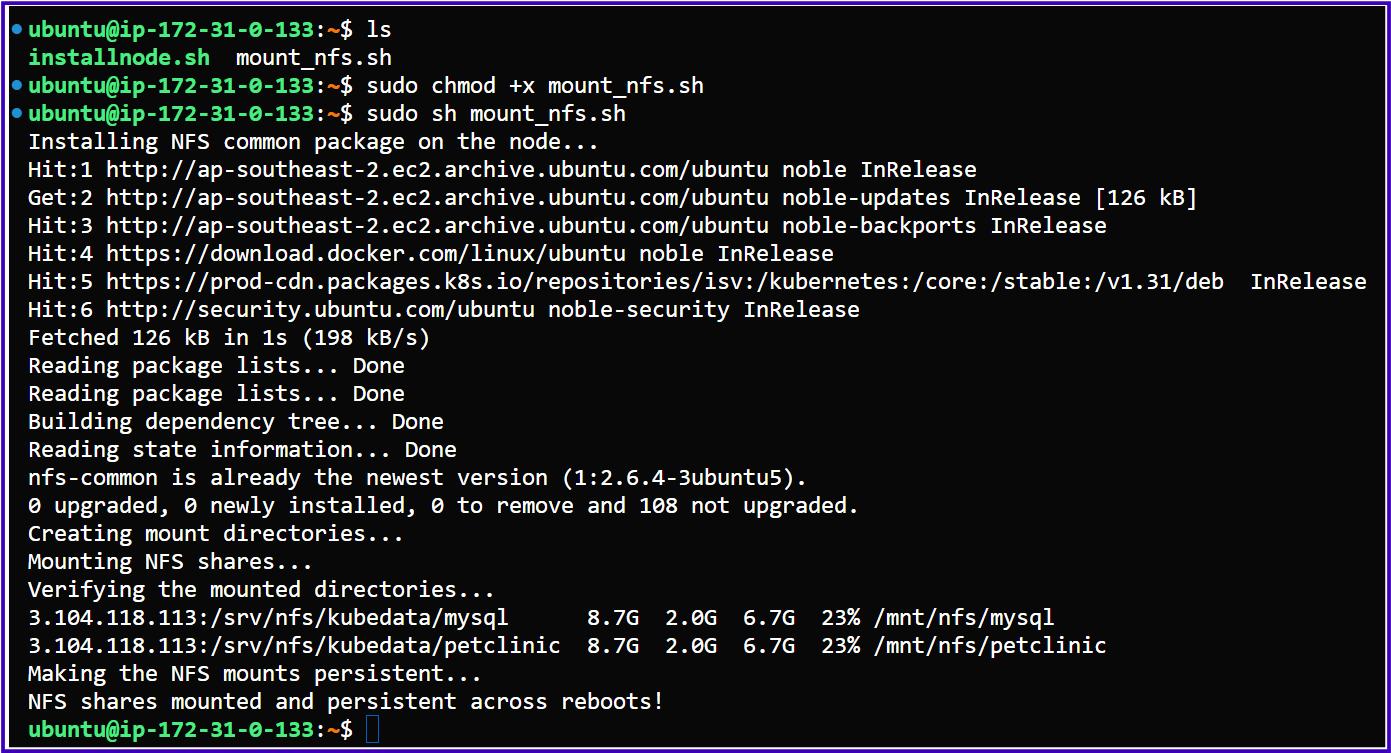
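The setup script exports both directories to `*`, so any host that can reach port 2049 may mount them. In addition, `no_root_squash` lets root inside a pod act as root on the share. A tighter variant exports only to the network your nodes live in. Below is a minimal sketch of a helper that renders such /etc/exports lines with the same options as the script. The CIDR `172.31.0.0/16` is a hypothetical value; replace it with your nodes' subnet or VPC range.

```shell
#!/usr/bin/env bash
# Sketch: render /etc/exports lines that allow only one client network
# instead of "*". The CIDR used below is a hypothetical example value.

# render_exports CIDR DIR...
# Prints one export line per directory, using the same options as the setup script.
render_exports() {
  local cidr="$1" dir
  shift
  for dir in "$@"; do
    printf '%s %s(rw,sync,no_subtree_check,no_root_squash)\n' "$dir" "$cidr"
  done
}

CLIENT_CIDR="172.31.0.0/16"   # hypothetical: replace with your nodes' subnet/VPC range
render_exports "$CLIENT_CIDR" /srv/nfs/kubedata/mysql /srv/nfs/kubedata/petclinic
```

With the values above, this prints `/srv/nfs/kubedata/mysql 172.31.0.0/16(rw,sync,no_subtree_check,no_root_squash)` followed by the matching line for the petclinic directory. To apply the result, you could pipe it into `sudo tee /etc/exports > /dev/null` and then run `sudo exportfs -ra` to re-export everything.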

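=> To integrate the NFS shares with your Kubernetes nodes, follow these steps.

Here’s a shell script (save it as mount_nfs.sh) that automates mounting the NFS shares on your Kubernetes nodes. The script will:

- Install the nfs-common package on each node.
- Create the necessary mount directories.
- Mount the NFS shares for both MySQL and the PetClinic application.
- Add /etc/fstab entries so the mounts persist across reboots (optional; you can drop Step 5 if you don't need that).

Strictly speaking, Kubernetes does not use these node-level mounts. When a pod with an nfs volume starts, the kubelet mounts the export for that pod itself. The hard requirement on every node that may run such pods is therefore the nfs-common package, which provides the mount.nfs helper. The /mnt/nfs mounts are useful for verifying connectivity and inspecting the shared data from the node.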

```shell
#!/bin/bash
# Exit on any error
set -e

# Variables
NFS_SERVER_IP="3.106.135.251"   # Replace with your NFS server IP
MYSQL_NFS_PATH="/srv/nfs/kubedata/mysql"
PETCLINIC_NFS_PATH="/srv/nfs/kubedata/petclinic"
MYSQL_MOUNT_DIR="/mnt/nfs/mysql"
PETCLINIC_MOUNT_DIR="/mnt/nfs/petclinic"

# Step 1: Install NFS common package
echo "Installing NFS common package on the node..."
sudo apt-get update -y
sudo apt-get install -y nfs-common

# Step 2: Create mount directories
echo "Creating mount directories..."
sudo mkdir -p "$MYSQL_MOUNT_DIR" "$PETCLINIC_MOUNT_DIR"

# Step 3: Mount NFS shares (skipped if already mounted, so the script can be re-run)
echo "Mounting NFS shares..."
mountpoint -q "$MYSQL_MOUNT_DIR" ||
  sudo mount -t nfs -o vers=4 "$NFS_SERVER_IP:$MYSQL_NFS_PATH" "$MYSQL_MOUNT_DIR"
mountpoint -q "$PETCLINIC_MOUNT_DIR" ||
  sudo mount -t nfs -o vers=4 "$NFS_SERVER_IP:$PETCLINIC_NFS_PATH" "$PETCLINIC_MOUNT_DIR"

# Step 4: Verify mounting
echo "Verifying the mounted directories..."
df -h | grep nfs

# Step 5: Make NFS mounts persistent in /etc/fstab (only entries not already present)
echo "Making the NFS mounts persistent..."
for entry in \
  "$NFS_SERVER_IP:$MYSQL_NFS_PATH $MYSQL_MOUNT_DIR nfs defaults 0 0" \
  "$NFS_SERVER_IP:$PETCLINIC_NFS_PATH $PETCLINIC_MOUNT_DIR nfs defaults 0 0"; do
  grep -qxF "$entry" /etc/fstab || echo "$entry" | sudo tee -a /etc/fstab > /dev/null
done

echo "NFS shares mounted and persistent across reboots!"
```

Make the script executable:

```shell
chmod +x mount_nfs.sh
```

Run the script on each node of your Kubernetes cluster, including the control-plane (master) node:

```shell
sudo ./mount_nfs.sh
```
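To double-check a node after running the mount script, you can read the kernel's mount table and confirm that /mnt/nfs/mysql and /mnt/nfs/petclinic are really NFS mounts, not just empty directories. The sketch below parses /proc/mounts with awk. The optional second argument is a hypothetical addition that exists only so the function can be tested against a sample file.

```shell
#!/usr/bin/env bash
# Sketch: confirm that a directory is an active NFS mount by reading the kernel's
# mount table. The optional MOUNTS_FILE argument (default /proc/mounts) exists
# only to make the function testable.

# is_nfs_mounted MOUNT_POINT [MOUNTS_FILE]
is_nfs_mounted() {
  local mount_point="$1" mounts_file="${2:-/proc/mounts}"
  # Fields in /proc/mounts: device mount_point fs_type options dump pass
  awk -v mp="$mount_point" '$2 == mp && ($3 == "nfs" || $3 == "nfs4") { found = 1 }
       END { exit !found }' "$mounts_file"
}

for dir in /mnt/nfs/mysql /mnt/nfs/petclinic; do
  if is_nfs_mounted "$dir"; then
    echo "$dir: NFS mount present"
  else
    echo "$dir: not mounted over NFS"
  fi
done
```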

What happens in Step 3 of the script above?

You are mounting two NFS shares from the NFS server:

- MySQL data share: /srv/nfs/kubedata/mysql on the NFS server is mounted to /mnt/nfs/mysql on the local node.
- Spring Boot PetClinic data share: /srv/nfs/kubedata/petclinic on the NFS server is mounted to /mnt/nfs/petclinic on the local node.

What happens in Step 5 of the script above?

In Step 5, the mount configuration is added to the /etc/fstab file. As a result, the NFS shares for MySQL and the Spring Boot PetClinic app are mounted automatically whenever the node boots.

Effect: The NFS shares (/srv/nfs/kubedata/mysql and /srv/nfs/kubedata/petclinic) are mounted to the local directories (/mnt/nfs/mysql and /mnt/nfs/petclinic) at system startup.

Note:

A Persistent Volume is generally required for MySQL, because the database must keep its data on persistent storage.

For the PetClinic app, you only need a Persistent Volume if it has data that needs to persist, such as logs, user uploads, or runtime-generated data that needs to survive pod restarts. If it doesn’t handle such data, you can avoid the persistent volume and rely solely on emptyDir.

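We will create YAML files for the Persistent Volumes (PVs) and Persistent Volume Claims (PVCs), along with mysql-deployment.yaml and petclinic-deployment.yaml, to use NFS volumes for data persistence. The namespaced objects go into the dev namespace, so create that namespace first if it doesn't exist yet (kubectl create namespace dev).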

1. Persistent Volume (PV) and Persistent Volume Claim (PVC) for MySQL:

mysql-pv.yaml:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv   # PVs are cluster-scoped, so no namespace is set
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /srv/nfs/kubedata/mysql
    server: <NFS_SERVER_IP>   # Replace with your NFS server IP
```

mysql-pvc.yaml:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
  namespace: dev
spec:
  storageClassName: ""   # Bind to the pre-created PV instead of a default StorageClass
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi   # Matching the PV size
```
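Once both manifests are applied, the claim should move from Pending to Bound. The helper below polls the claim's `status.phase` with `kubectl`. The namespace and claim names come from this guide. The function name and the retry count and delay defaults are arbitrary choices, not requirements.

```shell
#!/usr/bin/env bash
# Sketch: poll a PersistentVolumeClaim until its status.phase is "Bound".
# Attempt count and delay defaults are arbitrary choices, not requirements.

# wait_pvc_bound NAMESPACE PVC [ATTEMPTS] [DELAY_SECONDS]
wait_pvc_bound() {
  local ns="$1" pvc="$2" attempts="${3:-30}" delay="${4:-2}" phase="" i
  for ((i = 1; i <= attempts; i++)); do
    phase="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')"
    if [[ "$phase" == "Bound" ]]; then
      echo "${ns}/${pvc} is Bound"
      return 0
    fi
    # Sleep between attempts, but not after the last one.
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  echo "${ns}/${pvc} is not Bound (last phase: ${phase:-unknown})" >&2
  return 1
}

# Usage against a real cluster:
#   kubectl apply -f mysql-pv.yaml -f mysql-pvc.yaml
#   wait_pvc_bound dev mysql-pvc
```

Recent kubectl versions can do the same with a single `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/mysql-pvc -n dev --timeout=60s`; the loop just makes the polling explicit. If the claim stays Pending, `kubectl describe pvc mysql-pvc -n dev` usually shows why.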

Why use the ReadWriteOnce access mode for MySQL:

For a database like MySQL, ReadWriteOnce (RWO) is commonly used because it allows the volume to be mounted read-write by a single node at a time. This matters for data consistency and integrity, because multiple MySQL processes writing to the same data directory could corrupt the database. There are two caveats. First, RWO is scoped to a node, not a pod, so several pods on the same node can still share the volume. Second, Kubernetes uses access modes mainly to match claims to volumes; they do not enforce write protection once the storage is mounted.

If you're using ReadWriteOnce for a database, make sure that:

- Only one pod is handling the database at a time (even if you have multiple replicas, only one pod should be active for the database). For a single-replica Deployment, the Recreate update strategy prevents the old and new pods from overlapping during an update.
- You use mechanisms like leader election or failover systems if you want to run multiple database replicas.

In a high-availability setup, you might use something like MySQL clustering, but each instance would still require its own persistent volume to avoid data corruption.

For a clearer picture, here are the other access modes for Kubernetes Persistent Volumes (PVs):

ReadWriteMany (RWX):

- Meaning: The volume can be mounted as read-write by multiple nodes simultaneously.
- Use Case: Applications whose instances all need to write to the same volume, such as file servers or shared logs in a multi-replica setup.

ReadOnlyMany (ROX):

- Meaning: The volume can be mounted as read-only by multiple nodes.
- Use Case: Pods across different nodes that read a shared dataset (e.g., shared configuration files or a content delivery system) without needing to write to it.

ReadWriteOncePod (RWOP):

- Meaning: The volume can be mounted as read-write by a single pod only.
- Note: It is supported only for CSI volumes, so it isn't available for the in-tree nfs volume type used in this guide.

2. Persistent Volume (PV) and Persistent Volume Claim (PVC) for PetClinic:

petclinic-pv.yaml:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: petclinic-pv   # PVs are cluster-scoped, so no namespace is set
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteMany   # In case multiple pods need to write to it
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /srv/nfs/kubedata/petclinic
    server: <NFS_SERVER_IP>   # Replace with your NFS server IP
```

petclinic-pvc.yaml:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: petclinic-pvc
  namespace: dev
spec:
  storageClassName: ""   # Bind to the pre-created PV instead of a default StorageClass
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi   # Matching the PV size
```
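These PVs are created by hand, so it's worth checking that each claim bound to the intended volume and not to a dynamically provisioned one. Dynamic provisioning can happen when the cluster has a default StorageClass and the claim doesn't set `storageClassName`. The sketch below compares the claim's `spec.volumeName` with the PV name you expect. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify that a PVC is bound to a specific, pre-created PV.
# spec.volumeName on a PVC holds the name of the PV it is bound to.

# check_pvc_binding NAMESPACE PVC EXPECTED_PV
check_pvc_binding() {
  local ns="$1" pvc="$2" want="$3" got
  got="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')"
  if [[ "$got" == "$want" ]]; then
    echo "${ns}/${pvc} -> ${got}"
  else
    echo "${ns}/${pvc} is bound to '${got:-nothing}', expected '${want}'" >&2
    return 1
  fi
}

# Usage:
#   check_pvc_binding dev mysql-pvc mysql-pv
#   check_pvc_binding dev petclinic-pvc petclinic-pv
```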

3. MySQL Deployment:

mysql-deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-db
  namespace: dev
spec:
  replicas: 1
  strategy:
    type: Recreate   # Stop the old pod before starting a new one, so two MySQL processes never share the data directory
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:8.4
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_USER
              value: petclinic
            - name: MYSQL_PASSWORD
              value: petclinic
            - name: MYSQL_ROOT_PASSWORD
              value: root
            - name: MYSQL_DATABASE
              value: petclinic
          volumeMounts:
            - mountPath: /var/lib/mysql
              name: mysql-persistent-storage
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-service
  namespace: dev
spec:
  type: ClusterIP
  ports:
    - port: 3306
      targetPort: 3306
  selector:
    app: mysql
```

Note:

```yaml
volumeMounts:
  - mountPath: /var/lib/mysql
    name: mysql-persistent-storage
```

The directory /var/lib/mysql is the default location where MySQL stores its database files (data, tables, logs) inside the container.

Mounting a volume at this directory ensures that MySQL's data persists across container restarts and pod re-creation.

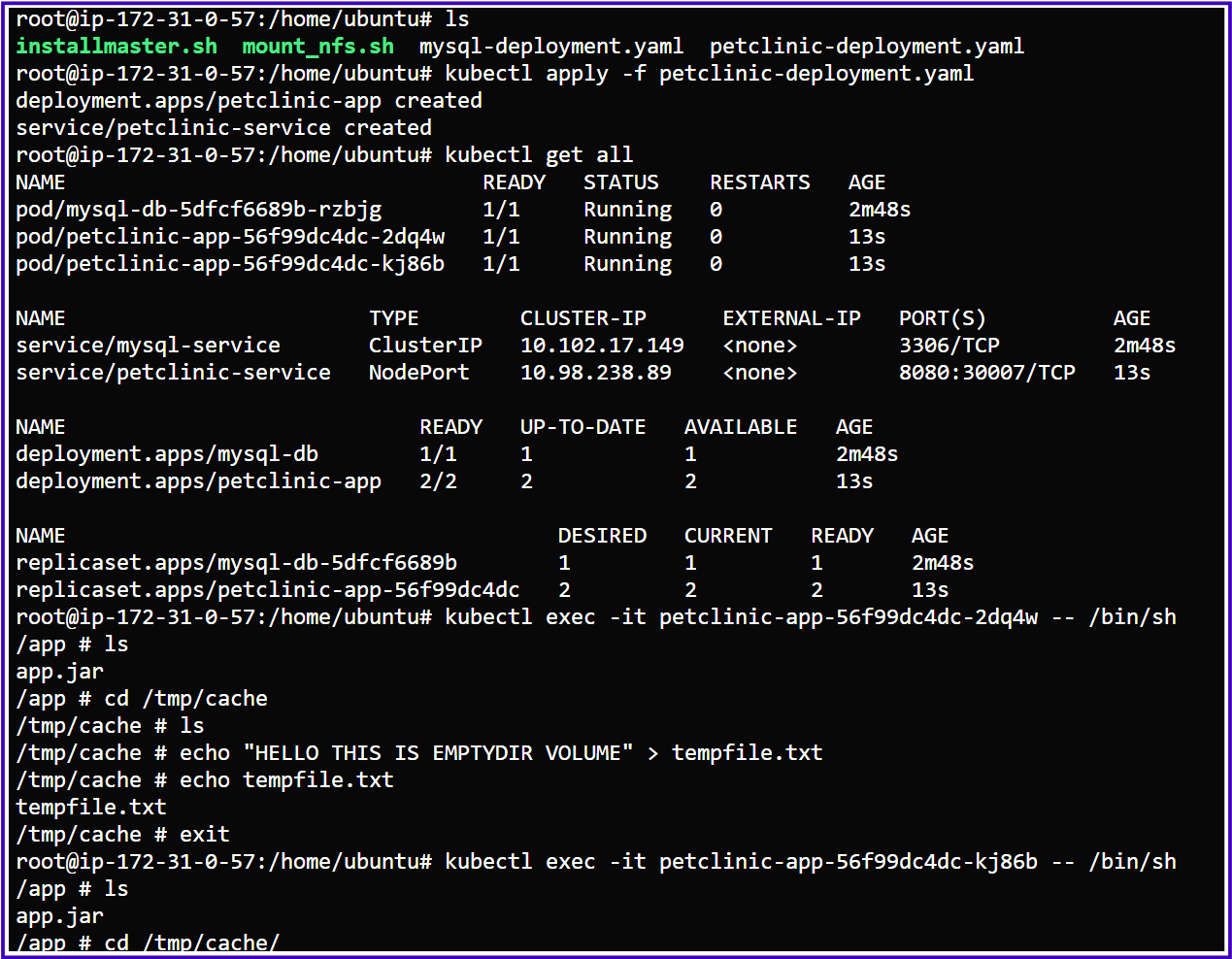
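One way to confirm that /var/lib/mysql inside the running MySQL pod really sits on the NFS share is to read the container's own /proc/mounts through `kubectl exec`. The sketch below does that. It assumes the container image provides `cat` (true for typical base images). The deployment and namespace names match the manifests in this guide, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: report which filesystem type is mounted at a path inside a pod,
# by reading the container's /proc/mounts through `kubectl exec`.
# Assumes the container image provides `cat`.

# pod_path_fstype NAMESPACE DEPLOYMENT PATH
# Prints the filesystem type mounted at PATH, or nothing if PATH is not a mount point.
pod_path_fstype() {
  local ns="$1" deploy="$2" path="$3"
  kubectl exec -n "$ns" "deploy/${deploy}" -- cat /proc/mounts |
    awk -v p="$path" '$2 == p { fstype = $3 } END { print fstype }'
}

# Usage (expect "nfs" or "nfs4" when the NFS-backed PVC is in use):
#   pod_path_fstype dev mysql-db /var/lib/mysql
```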

4. PetClinic Deployment:

petclinic-deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: petclinic-app
  namespace: dev
spec:
  replicas: 2
  selector:
    matchLabels:
      app: petclinic
  template:
    metadata:
      labels:
        app: petclinic
    spec:
      containers:
        - name: petclinic
          image: subbu7677/petclinic-spring-app:v1
          ports:
            - containerPort: 8080
          env:
            - name: MYSQL_URL
              value: jdbc:mysql://mysql-service:3306/petclinic
            - name: MYSQL_USER
              value: petclinic
            - name: MYSQL_PASSWORD
              value: petclinic
            - name: MYSQL_ROOT_PASSWORD
              value: root
            - name: MYSQL_DATABASE
              value: petclinic
          volumeMounts:
            - mountPath: /tmp/cache
              name: cache-volume
            - mountPath: /app/data
              name: petclinic-persistent-storage
      volumes:
        - name: cache-volume
          emptyDir: {}
        - name: petclinic-persistent-storage
          persistentVolumeClaim:
            claimName: petclinic-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: petclinic-service
  namespace: dev
spec:
  type: NodePort
  ports:
    - port: 8080
      targetPort: 8080
      nodePort: 30007
  selector:
    app: petclinic
```

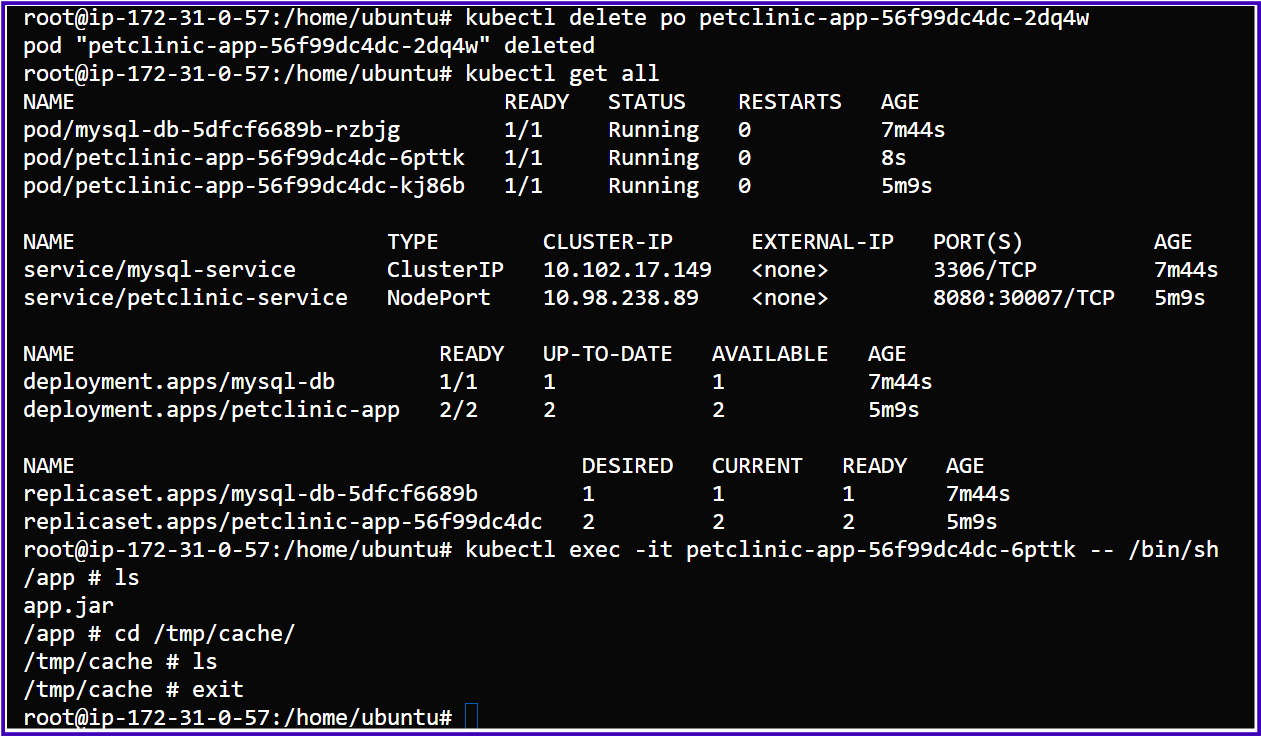
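To reach the application from a browser, you need a node's IP plus the Service's nodePort (30007 in the manifest). On EC2, the nodes' security group must also allow inbound TCP on that port. The sketch below reads the nodePort from the Service and builds the URL. The node IP is whatever address you use to reach a node (a placeholder here), and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build the browser URL for a NodePort Service from a node IP.
# NODE_IP is a placeholder for the address you use to reach any cluster node.

# nodeport_url NAMESPACE SERVICE NODE_IP
nodeport_url() {
  local ns="$1" svc="$2" node_ip="$3" node_port
  node_port="$(kubectl get svc "$svc" -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}')"
  [[ -n "$node_port" ]] || return 1
  echo "http://${node_ip}:${node_port}"
}

# Usage:
#   url="$(nodeport_url dev petclinic-service <NODE_IP>)"
#   curl -fsS -o /dev/null -w '%{http_code}\n' "$url"   # expect 200 once the app is serving
```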

Why two volumes are created for the PetClinic application deployment:

- Temporary storage (emptyDir): Some data, like caches or temporary files, doesn't need to persist after the pod is stopped. The emptyDir volume is useful for these scenarios.
- Persistent storage (PersistentVolumeClaim): Critical data that must be preserved between restarts, such as user-uploaded files or application logs, is stored in the persistent volume backed by NFS.

Note:

```yaml
volumeMounts:
  - mountPath: /tmp/cache
    name: cache-volume
  - mountPath: /app/data
    name: petclinic-persistent-storage
```

- /tmp/cache: This directory inside the container is mapped to cache-volume (emptyDir), which is temporary storage. The application uses it for cache or temporary files, and the data is deleted when the pod is removed.
- /app/data: This directory inside the container is mapped to petclinic-persistent-storage (the NFS-backed PersistentVolumeClaim). The application uses it for data that must persist across pod restarts, such as important files or logs.

Because the claim is ReadWriteMany and the Deployment runs two replicas, both pods see the same /app/data directory, while each pod gets its own private /tmp/cache.
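The difference between the shared NFS-backed /app/data and the per-pod emptyDir can be checked directly. Write a marker file through one replica, then try to read it through every replica. The sketch below does this with `kubectl exec`. It assumes the PetClinic image has `sh`, `cat` and `rm`, and that the container user may write to the directory. With the server-side directory owned by nobody:nogroup and mode 755, a non-root container user may be denied. The function name and the marker file name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that every replica of a Deployment sees the same files in a directory.
# Assumes the image has `sh`, `cat` and `rm`, and the container user may write to DIR.

# shared_dir_check NAMESPACE LABEL_SELECTOR DIR
shared_dir_check() {
  local ns="$1" selector="$2" dir="$3" pod
  local -a pods
  read -r -a pods <<< "$(kubectl get pods -n "$ns" -l "$selector" \
    -o jsonpath='{.items[*].metadata.name}')"
  if (( ${#pods[@]} < 2 )); then
    echo "need at least two pods, found ${#pods[@]}" >&2
    return 1
  fi
  local file="${dir}/shared-volume-probe"   # hypothetical marker file name
  # Write the marker through the first replica only.
  kubectl exec -n "$ns" "${pods[0]}" -- sh -c "echo probe > '${file}'" || return 1
  # Read it back through every replica; this only succeeds if they share DIR.
  for pod in "${pods[@]}"; do
    if ! kubectl exec -n "$ns" "$pod" -- cat "$file" > /dev/null 2>&1; then
      echo "${pod}: cannot see ${file}" >&2
      kubectl exec -n "$ns" "${pods[0]}" -- rm -f "$file"
      return 1
    fi
    echo "${pod}: sees ${file}"
  done
  kubectl exec -n "$ns" "${pods[0]}" -- rm -f "$file"
}

# Usage:
#   shared_dir_check dev app=petclinic /app/data    # RWX NFS claim: every pod sees the file
#   shared_dir_check dev app=petclinic /tmp/cache   # emptyDir: the second pod should fail
```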

Practical Example:

- NFS Server Set Up:

- Integration with K8s Cluster Nodes:

a) Master Node:

b) Worker Node1:

c) Worker Node2:

First, we will deploy our MySQL database and Petclinic application without using any persistent volumes.

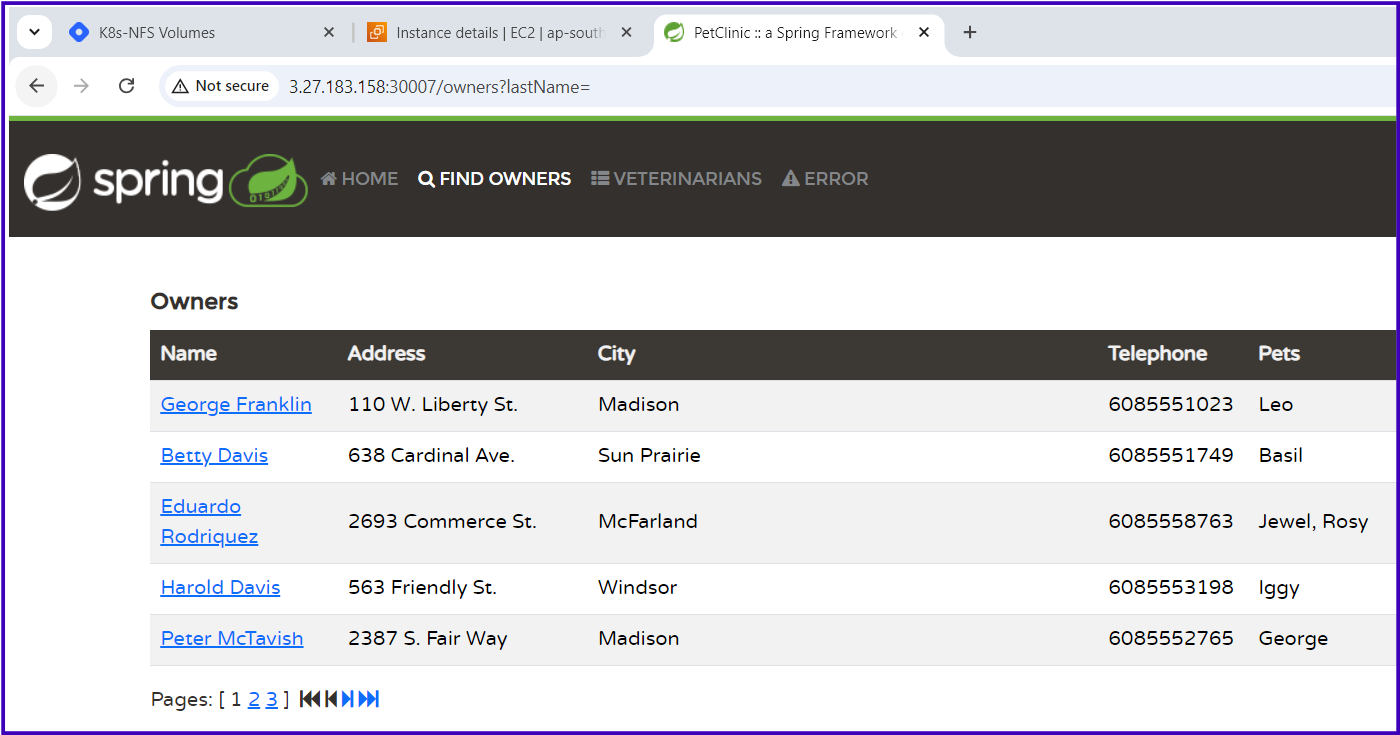

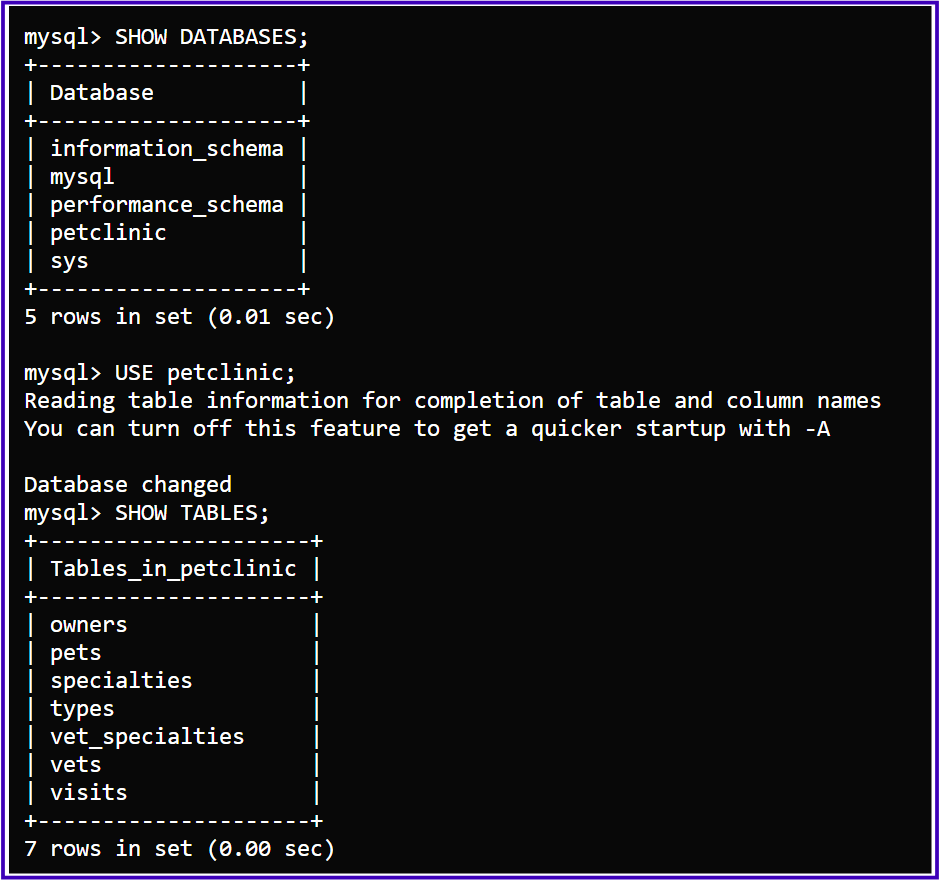

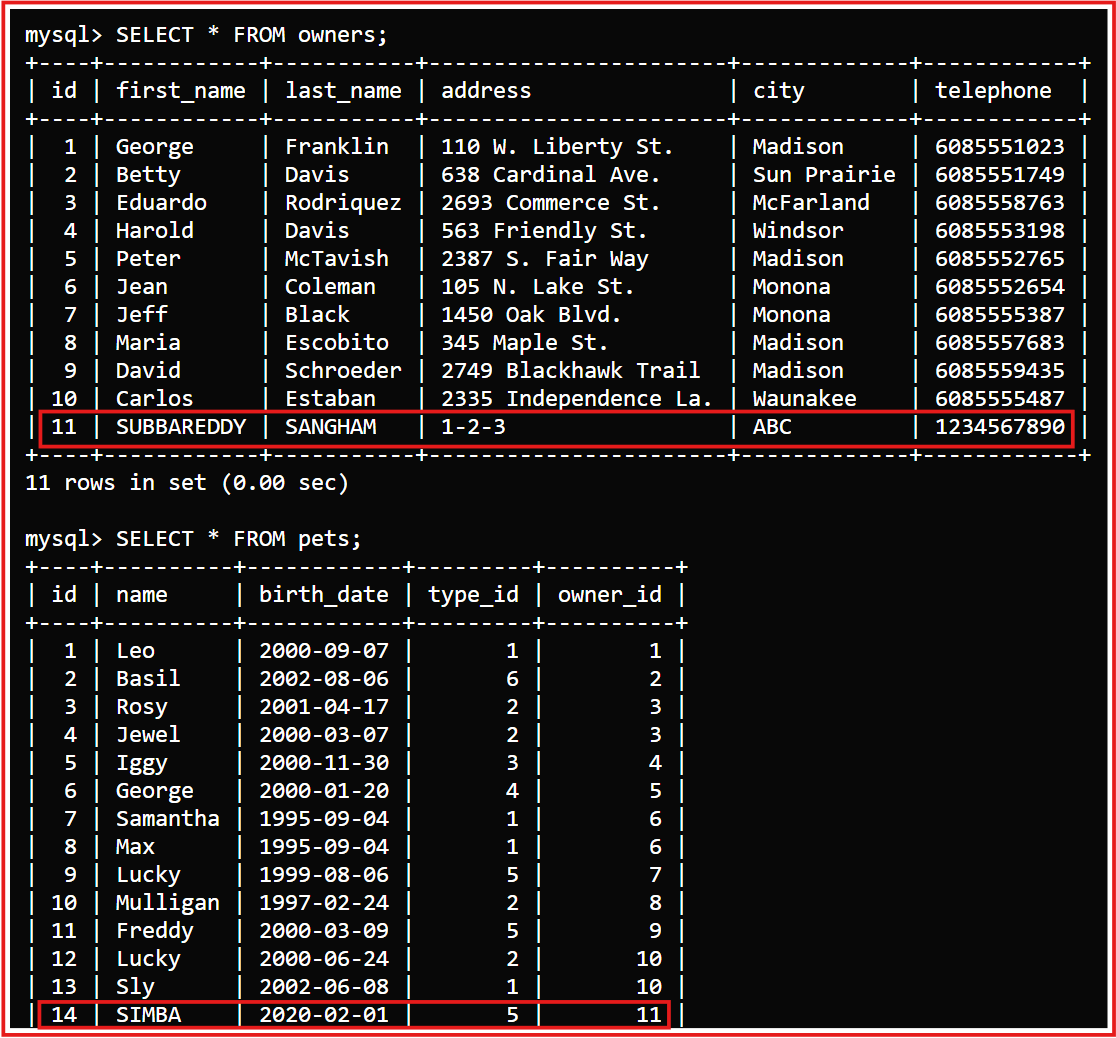

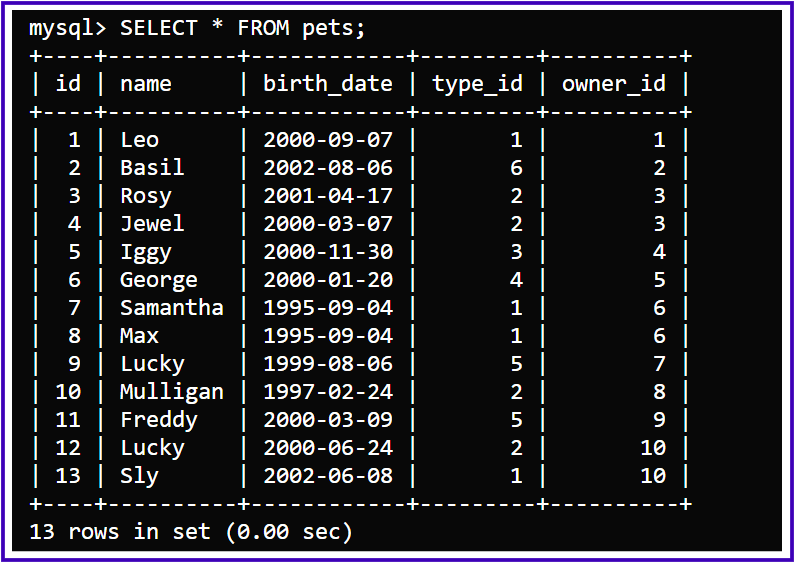

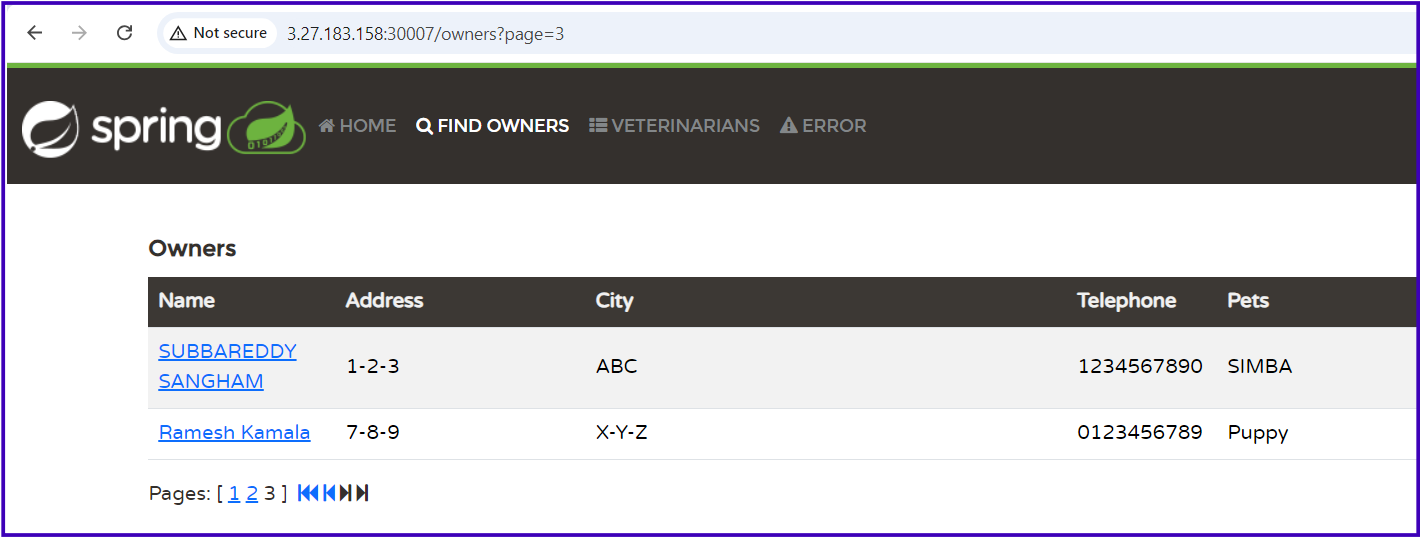

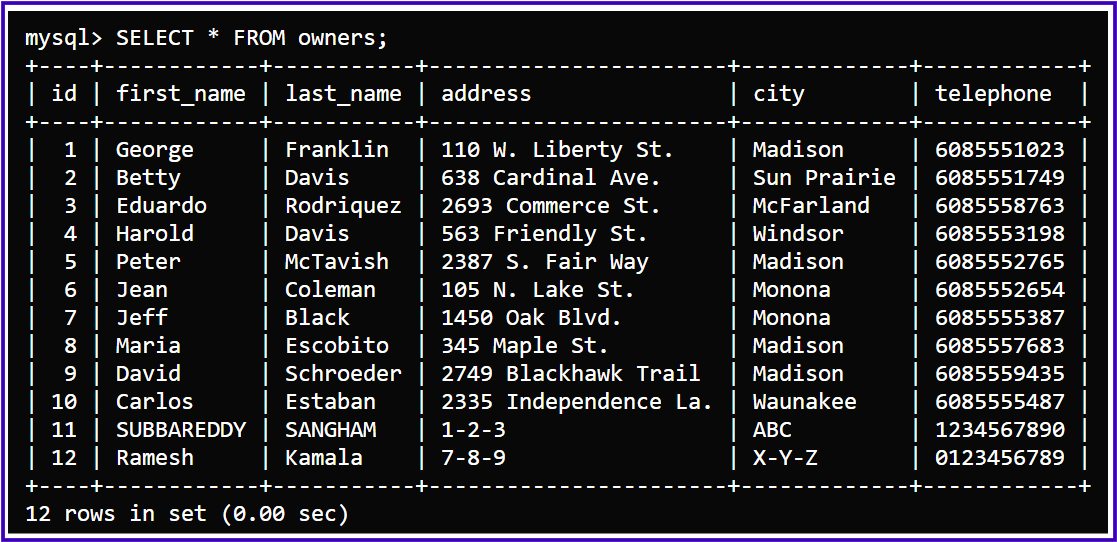

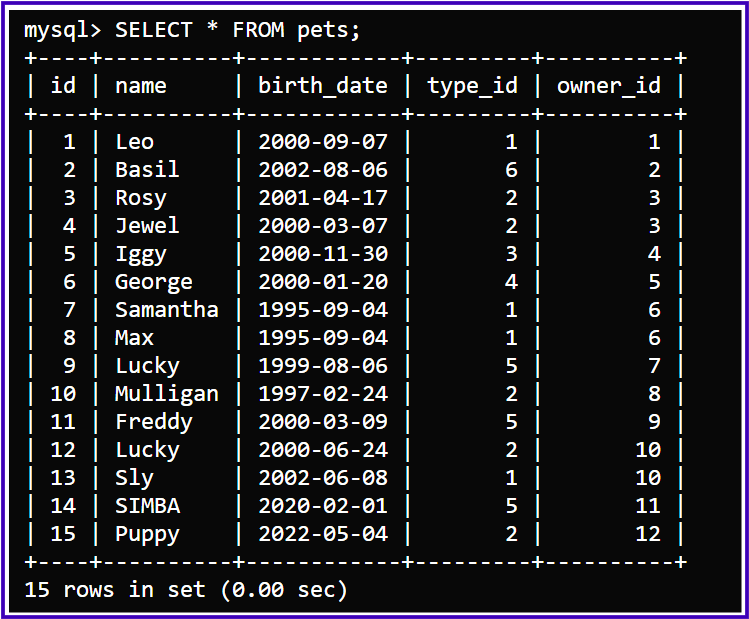

I have recently added the following owner with their pet details, which you can also see in the MySQL database table.

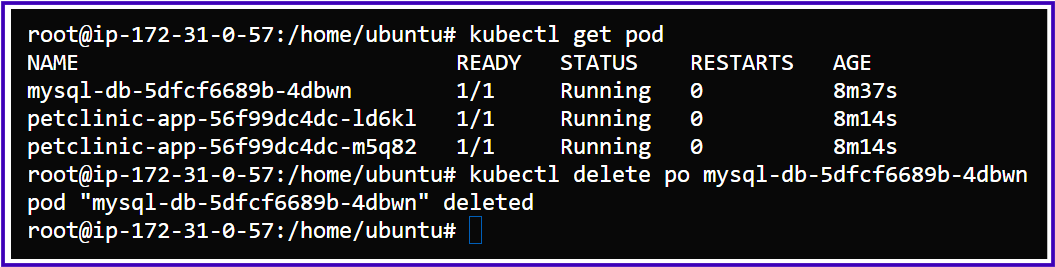

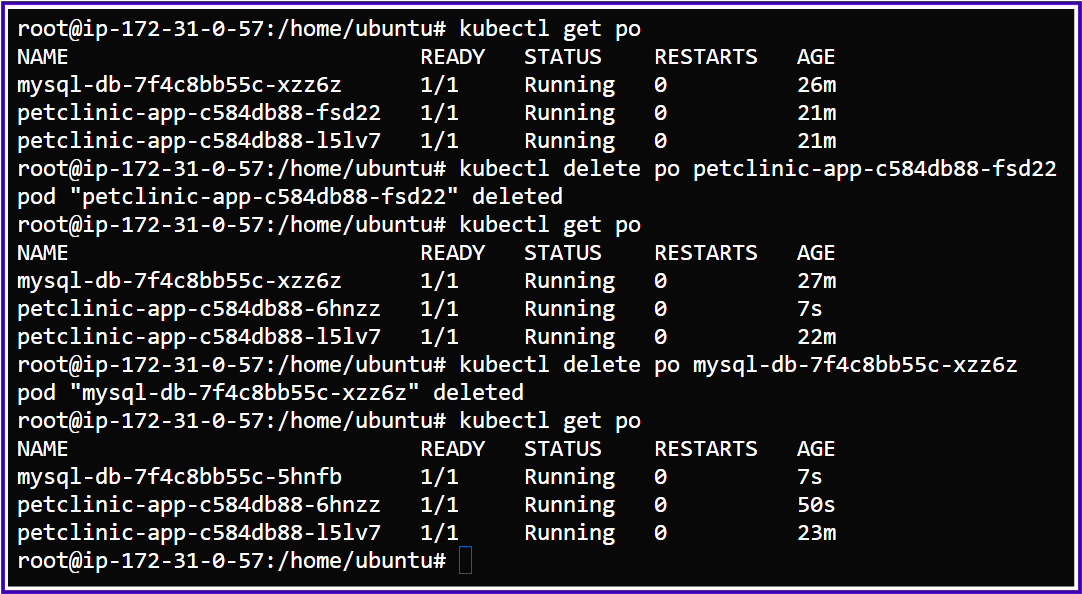

We will check whether the data persists when the MySQL pod is restarted or deleted.

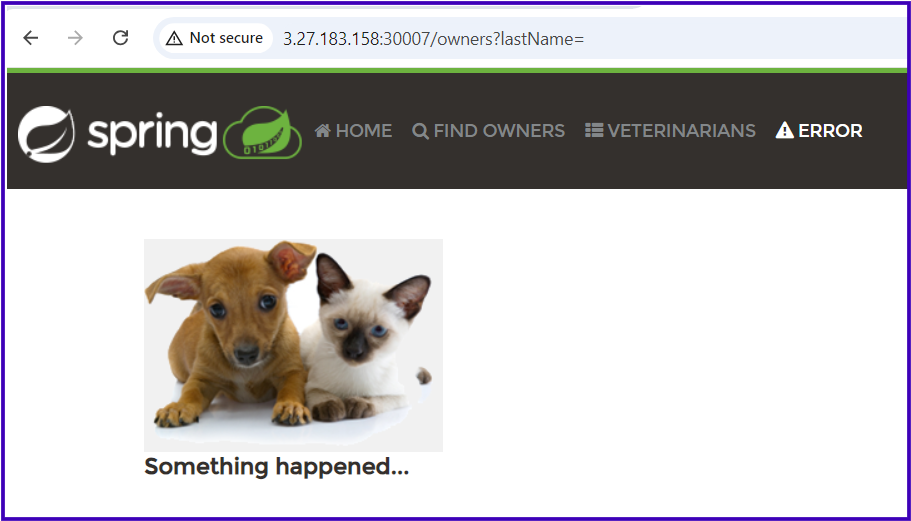

In the following screenshots, you can see that after the MySQL pod is deleted, the newly added owner information is no longer available.

This shows that without a persistent volume, our MySQL data doesn't survive the pod being deleted. To address this, we will now use NFS volumes and observe the difference.

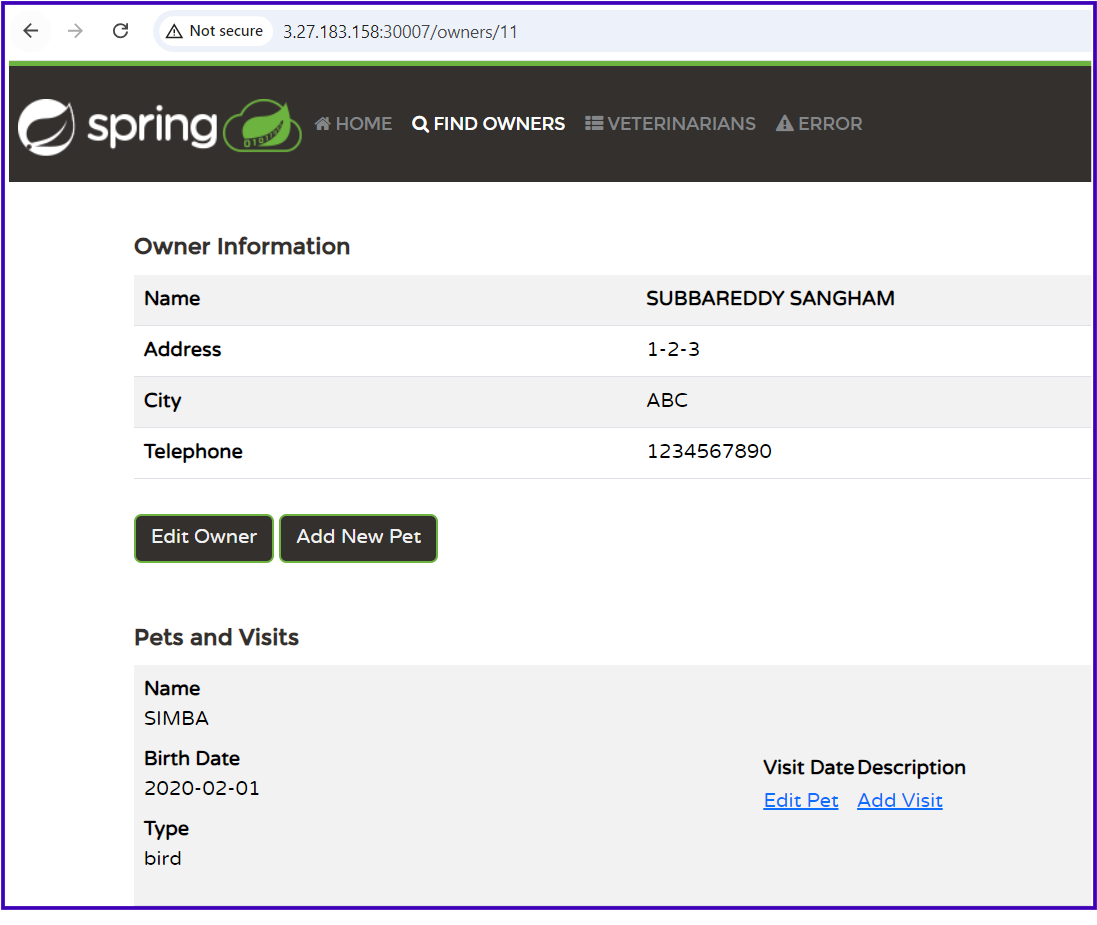

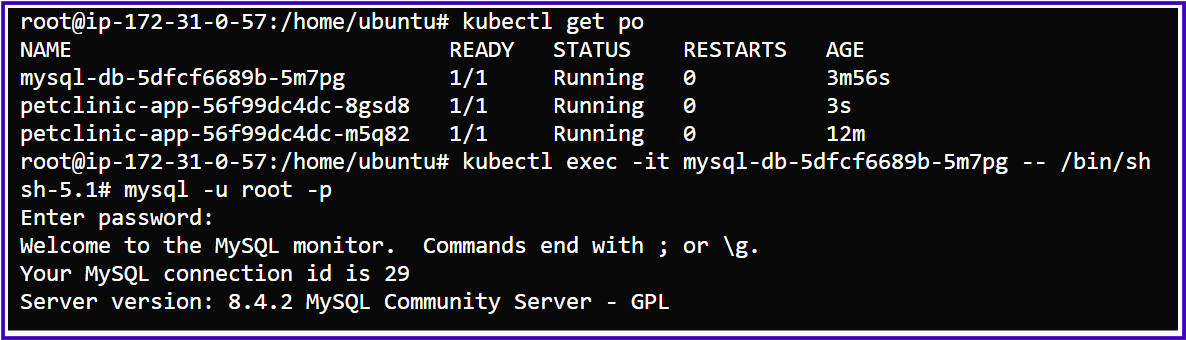

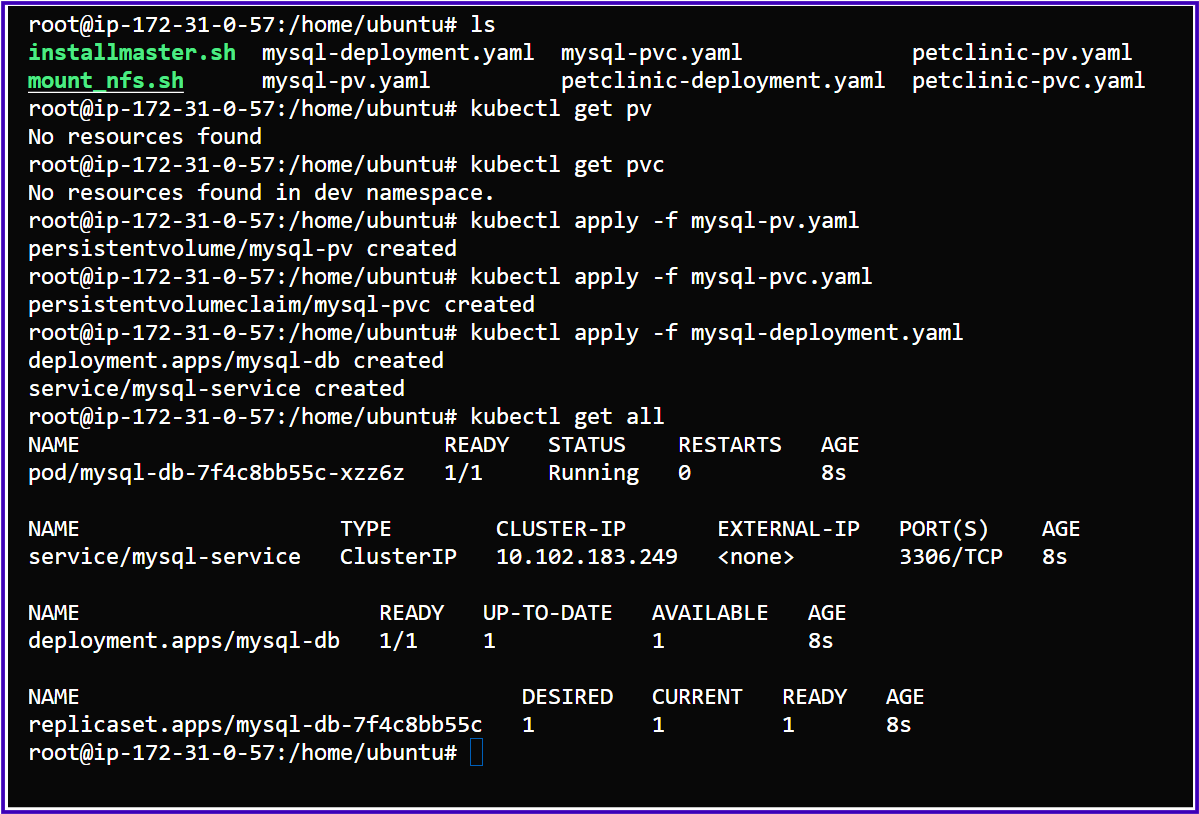

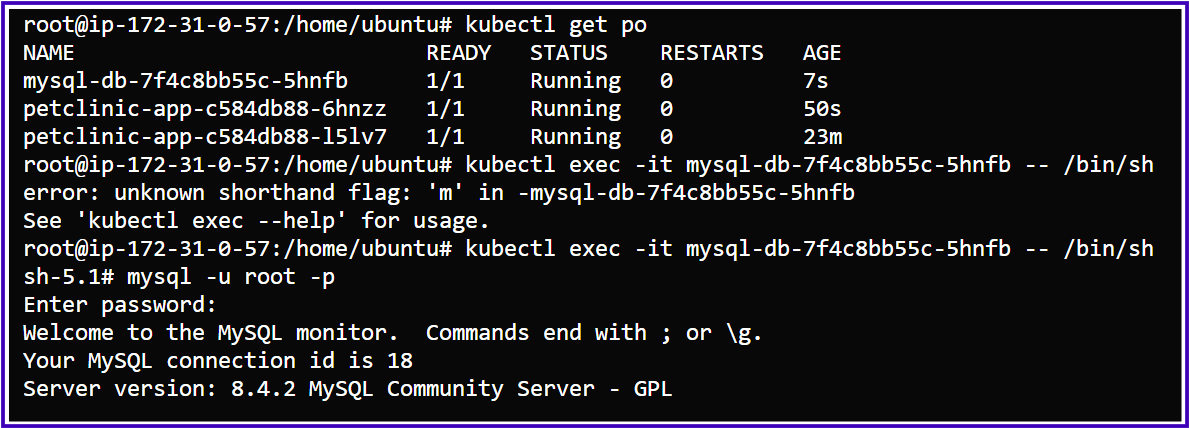

Step-1: I have created the Persistent Volume (PV), Persistent Volume Claim (PVC), and deployments for the MySQL database.

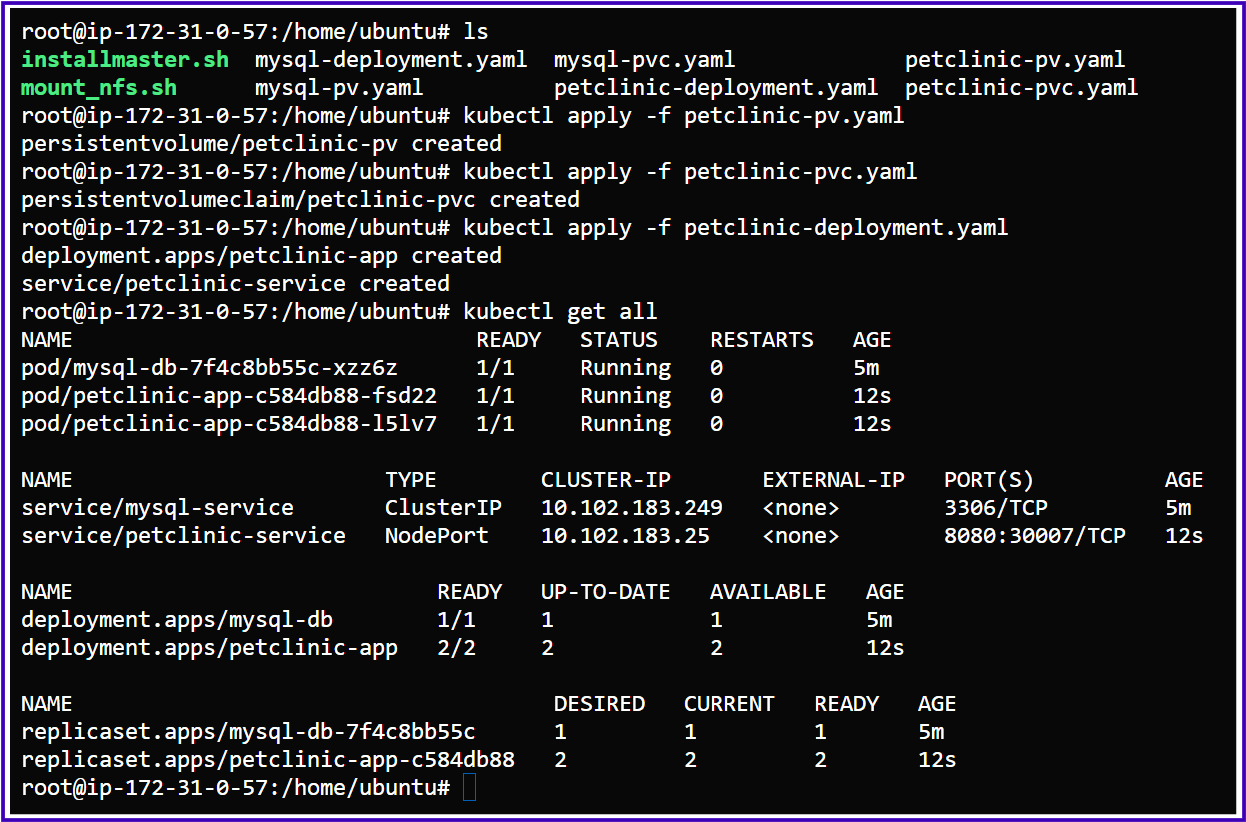

Step-2: I have created the Persistent Volume (PV), Persistent Volume Claim (PVC), and deployments for the Petclinic application.

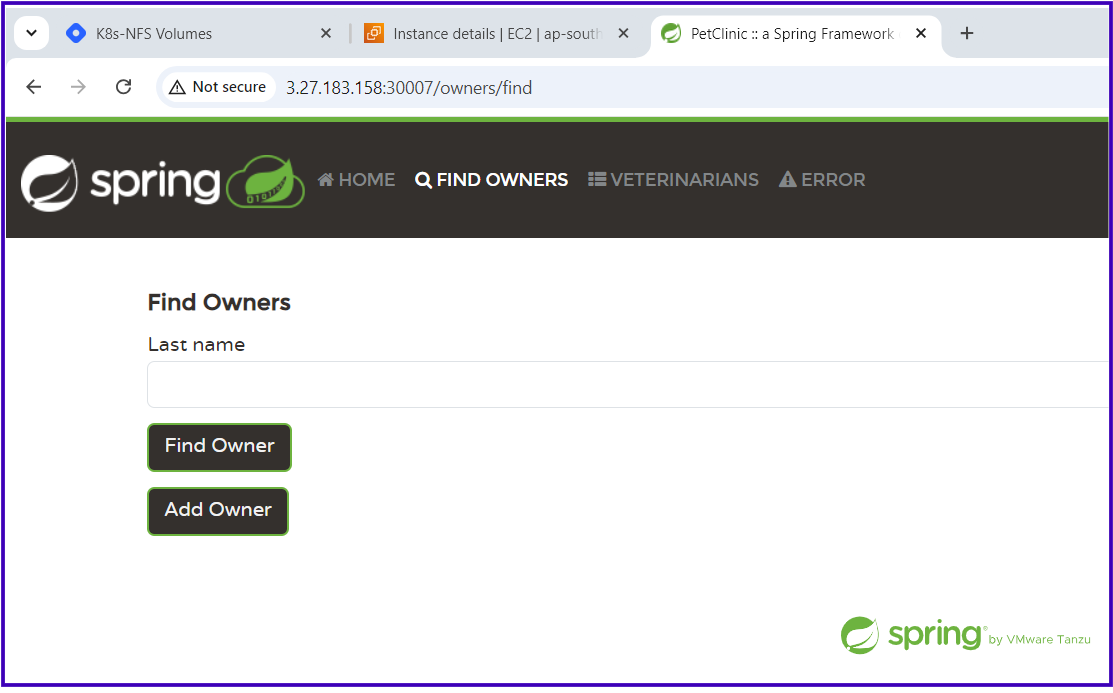

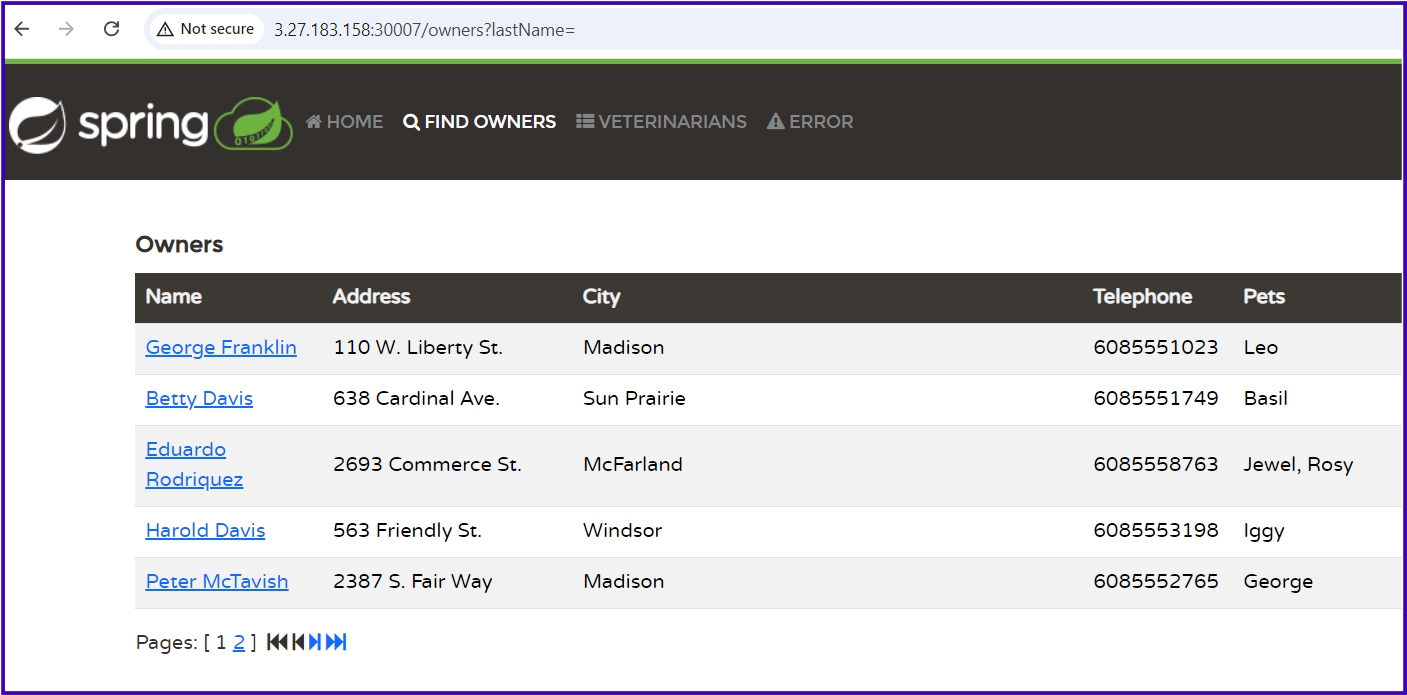

Step-3: Next, we will access our application in the browser.

We have successfully accessed the application.

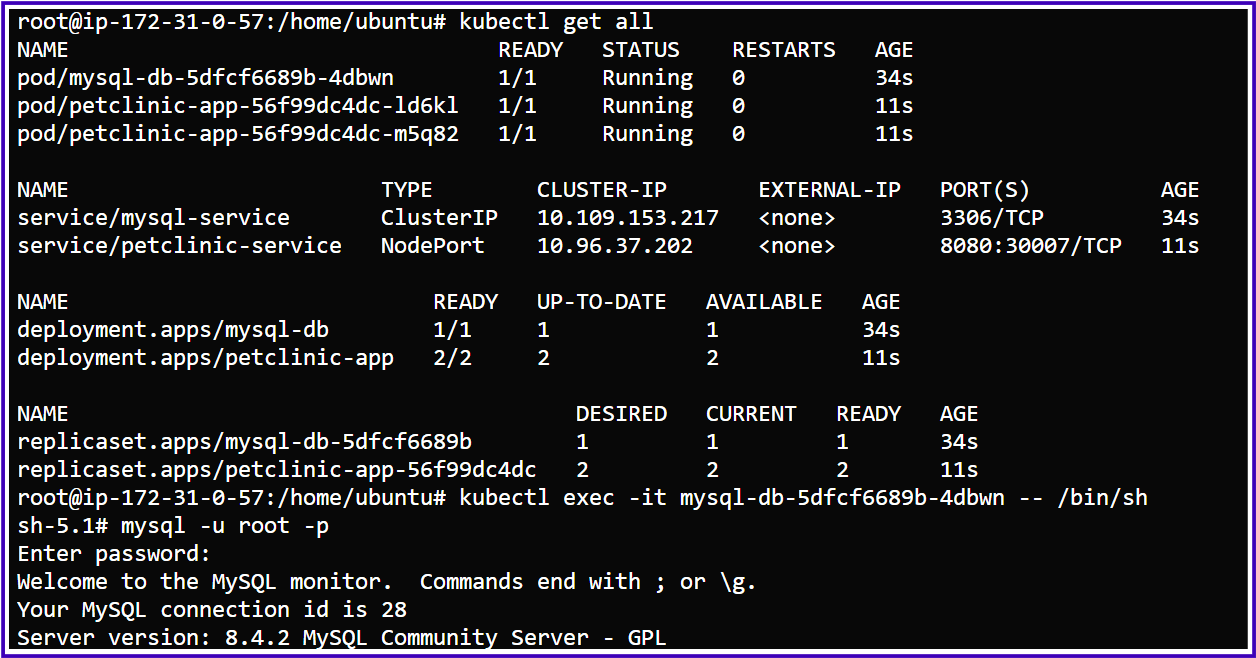

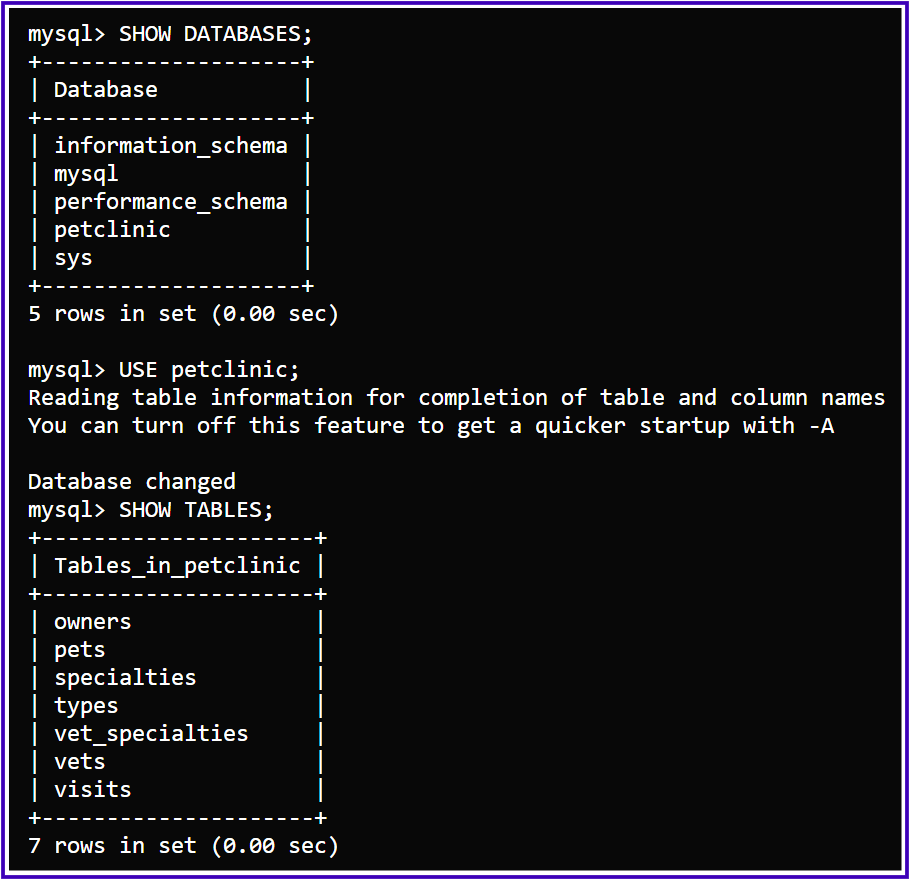

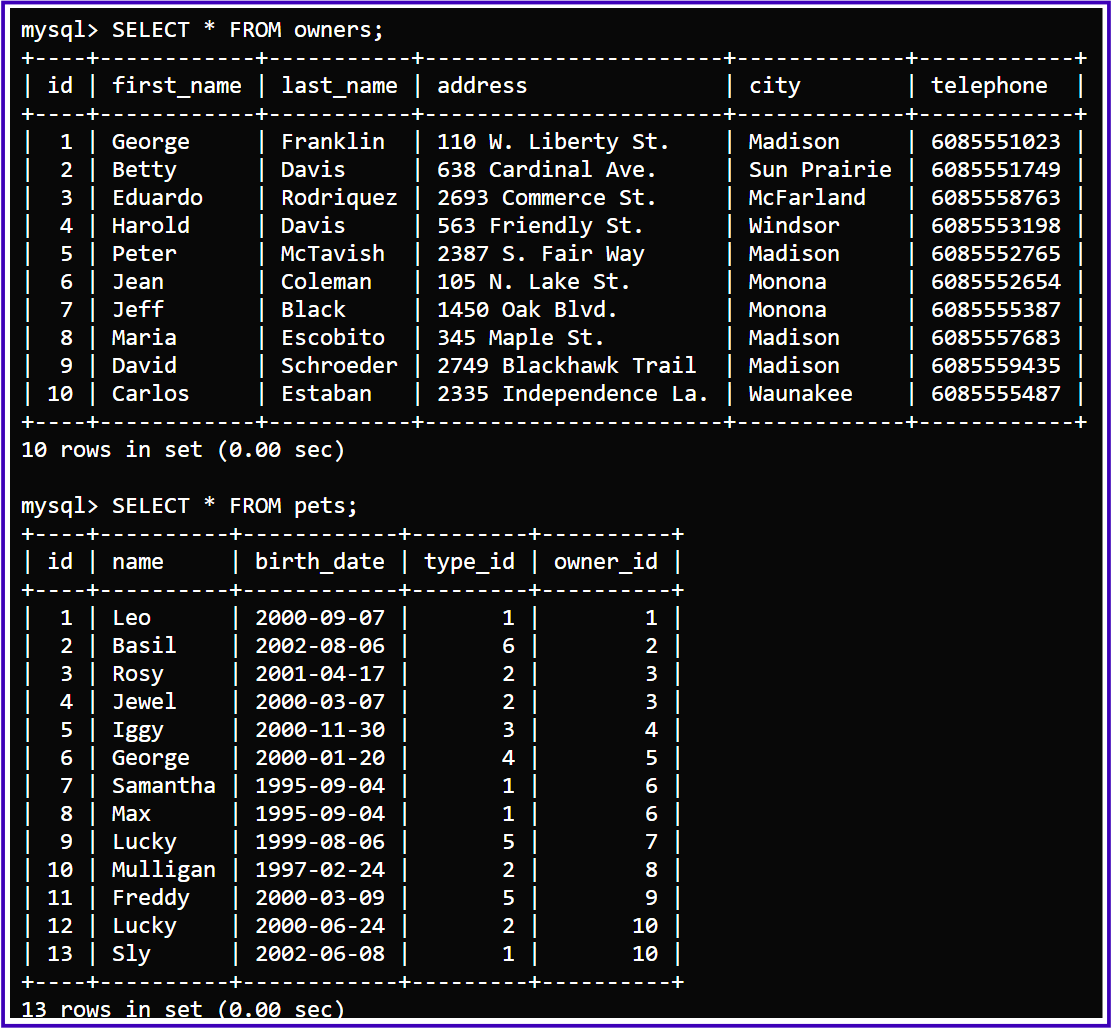

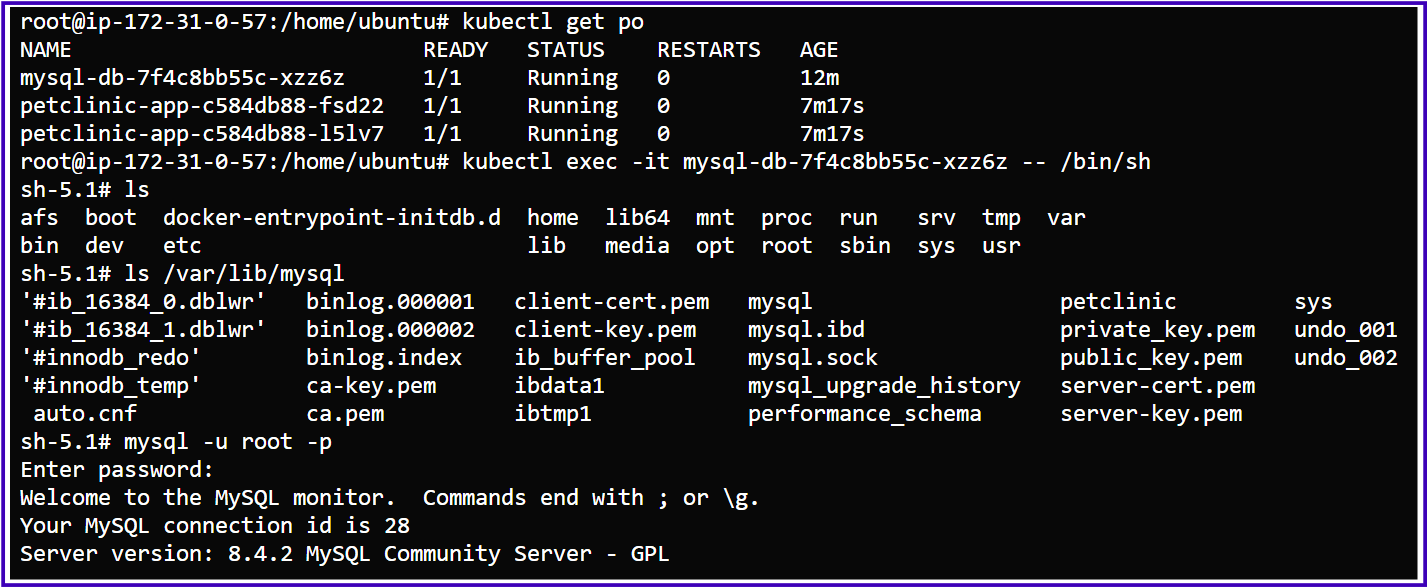

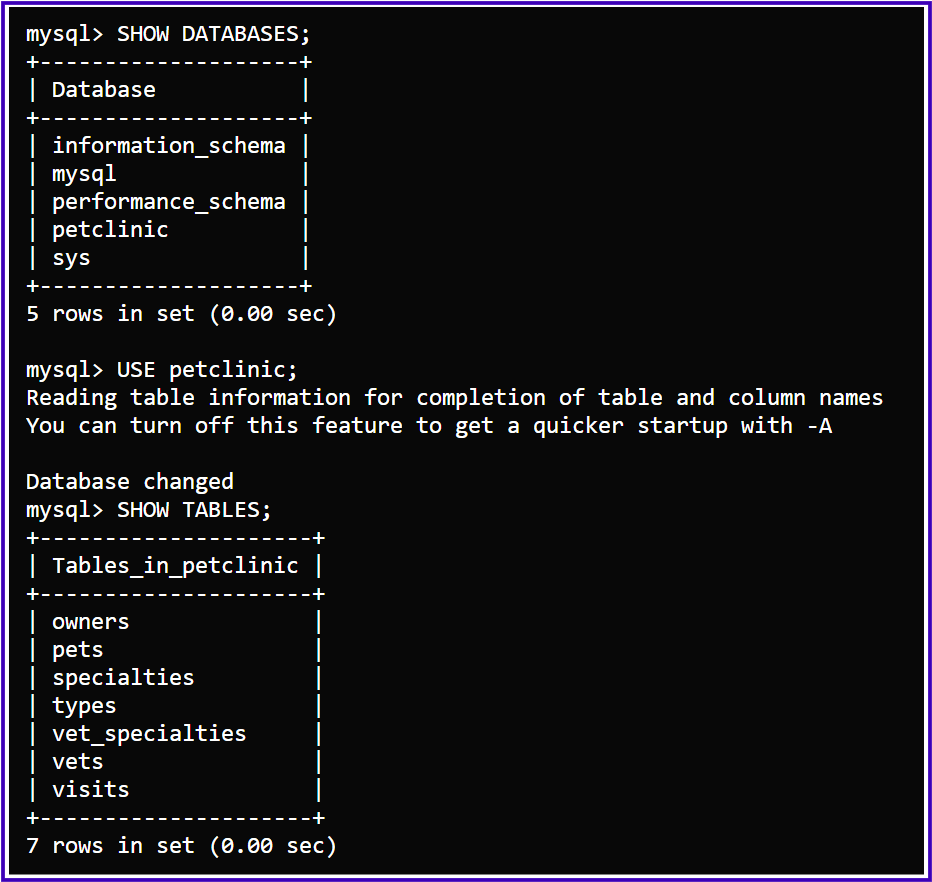

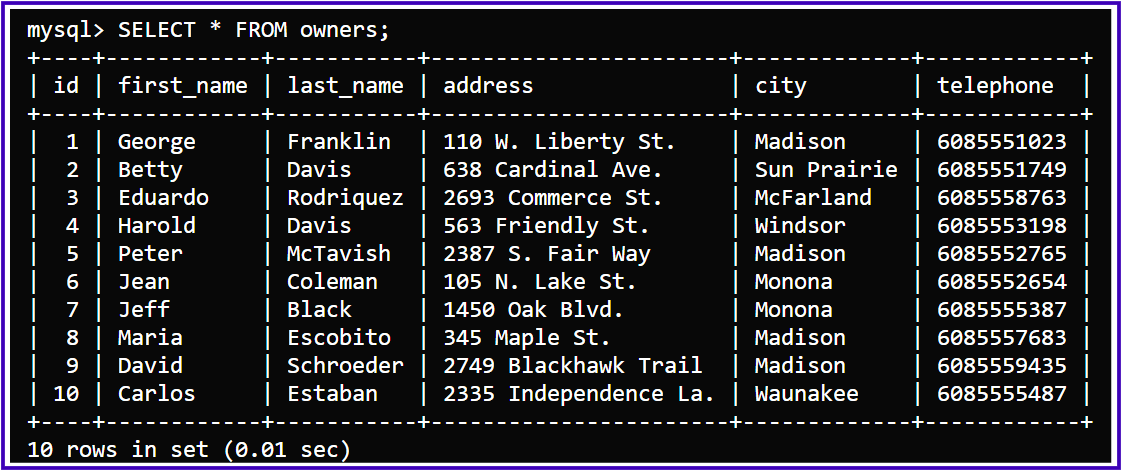

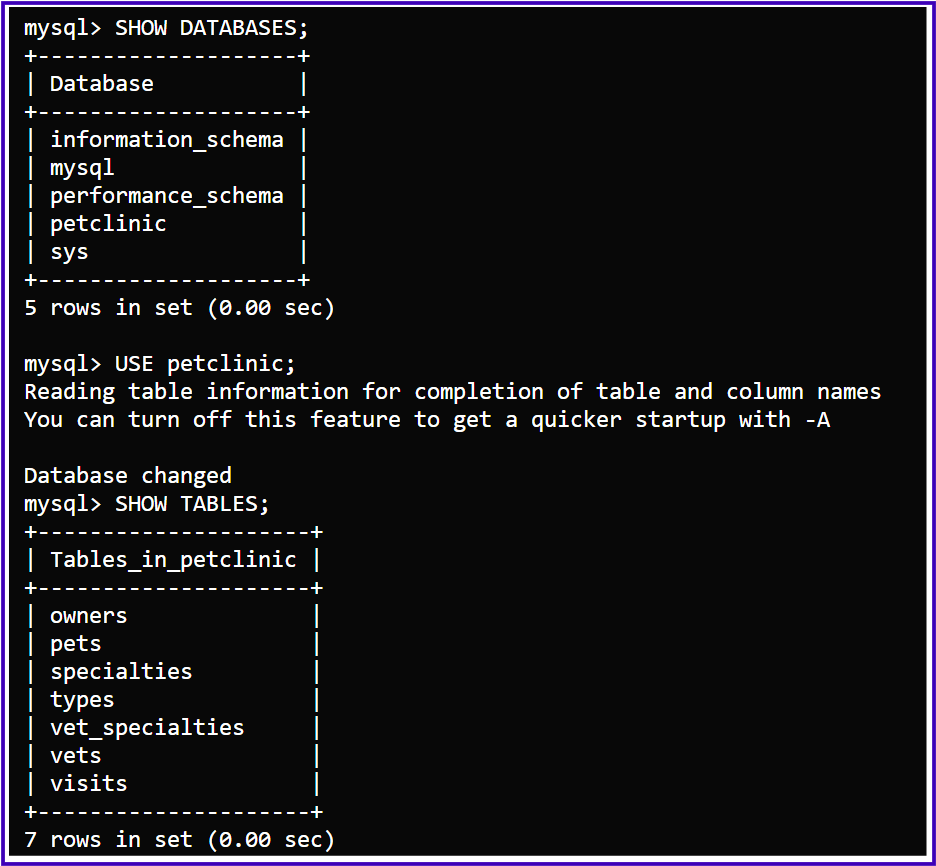

First, we will check the MySQL database and tables before adding new owners and pets:

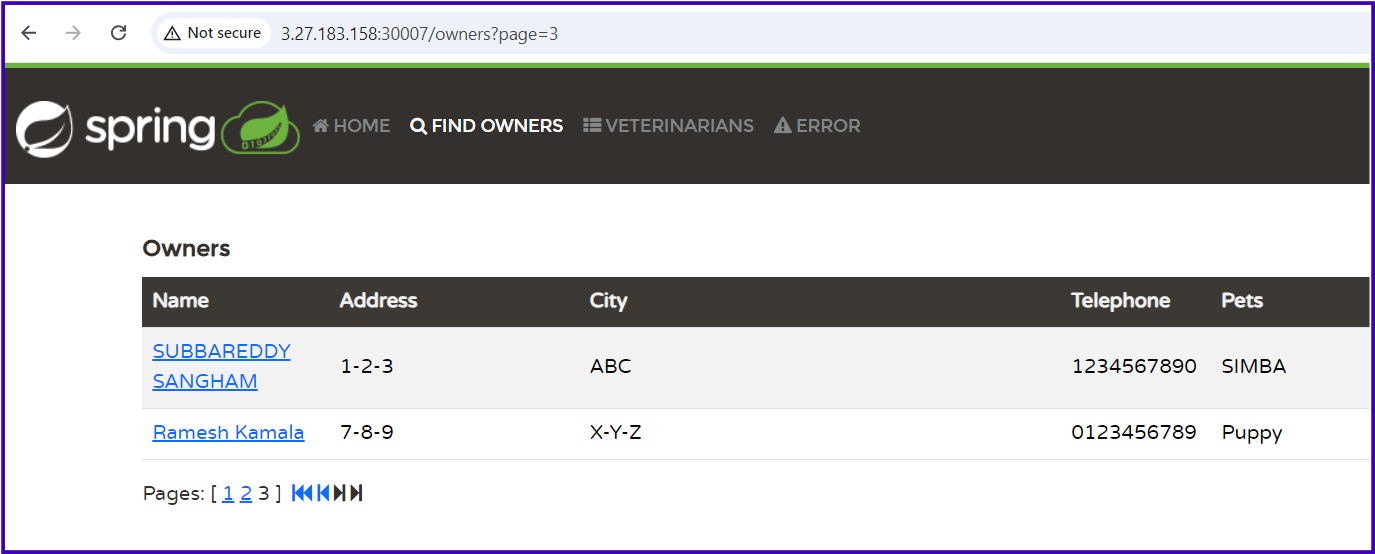

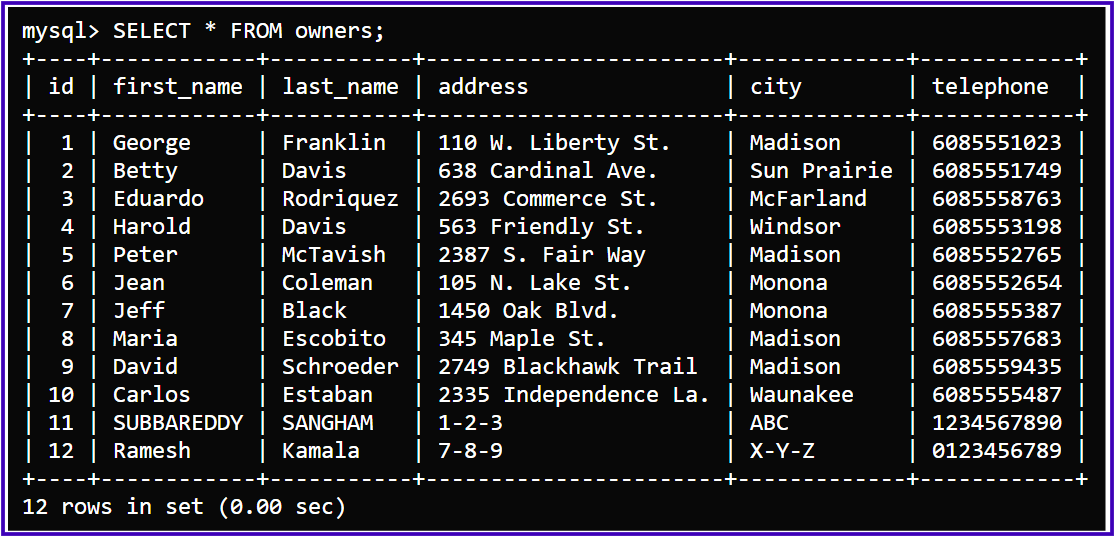

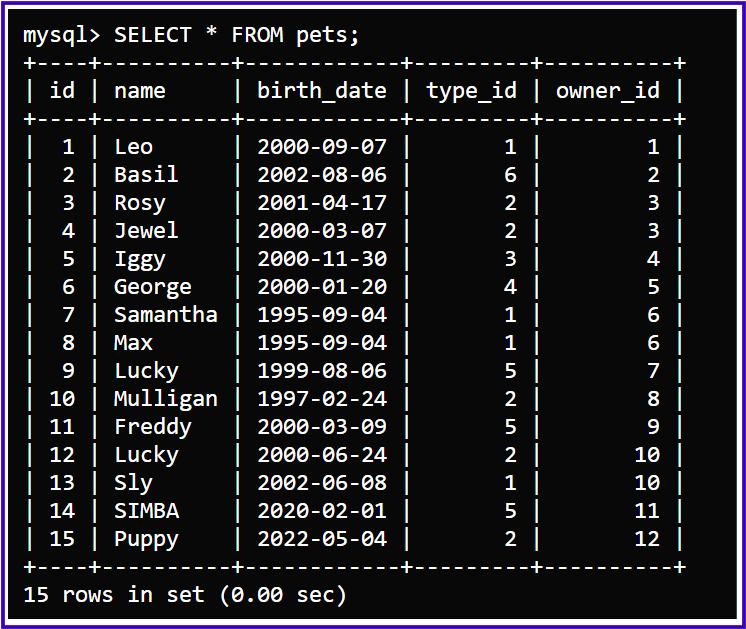

I have now added two new owners and their pets in the browser using my Petclinic application:

The same changes have been reflected in the backend as well:

Now, we will delete the MySQL and PetClinic pods and check whether the data persists after the pods are deleted or restarted while using NFS volumes.

Even after deleting the Petclinic and MySQL pods, the information for our newly added owners and pets is still saved, as we can see in the following images:

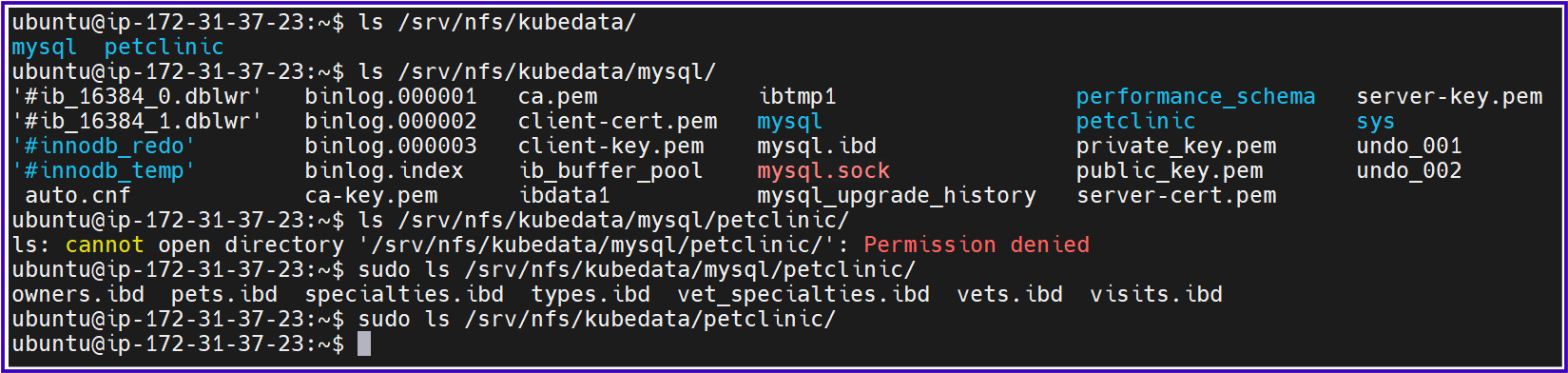
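The same before/after comparison can be scripted. The sketch below counts the rows in `petclinic.owners`, deletes the MySQL pod, waits for the replacement to answer queries, and compares the counts. It assumes the deployment name, `app=mysql` label and credentials from the manifests above, that the `mysql` client is available inside the MySQL image, and that the PetClinic schema has an `owners` table (as the owners.ibd file on the NFS server suggests). The function names and the 30 × 5 s retry budget are arbitrary choices.

```shell
#!/usr/bin/env bash
# Sketch: delete the MySQL pod and compare the number of owners before and after.
# Assumes the deployment name, label, credentials and petclinic.owners table from
# this guide, and that the mysql client exists in the MySQL image.

# owner_count NAMESPACE : print the row count of petclinic.owners
owner_count() {
  kubectl exec -n "$1" deploy/mysql-db -- \
    mysql -upetclinic -ppetclinic -N -B -e 'SELECT COUNT(*) FROM petclinic.owners' 2>/dev/null
}

# persistence_check NAMESPACE : succeed only if the count is unchanged after pod re-creation
persistence_check() {
  local ns="$1" before after=""
  before="$(owner_count "$ns")" || return 1
  kubectl delete pod -n "$ns" -l app=mysql > /dev/null || return 1
  # The replacement pod needs time to start and for mysqld to accept connections.
  for _ in {1..30}; do
    after="$(owner_count "$ns")" && break
    sleep 5
  done
  echo "owners before: ${before}, after: ${after:-unavailable}"
  [[ -n "$after" && "$before" == "$after" ]]
}

# Usage:
#   persistence_check dev
```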

Now, we can check the MySQL and Petclinic mount directories on our NFS EC2 server to see if the data is saved.

From the output of the commands we ran on the NFS server, we can see the following:

MySQL data (/srv/nfs/kubedata/mysql):

- The MySQL database files are visible in /srv/nfs/kubedata/mysql.
- The petclinic directory under /srv/nfs/kubedata/mysql/ contains files like owners.ibd, pets.ibd, specialties.ibd, etc. These are the InnoDB table files of the PetClinic database.
- This confirms that MySQL data is being saved on the NFS server.

PetClinic app data (/srv/nfs/kubedata/petclinic):

- The directory /srv/nfs/kubedata/petclinic/ is empty.
- This is expected, because the PetClinic app may not be configured to generate any persistent data, which is why the /app/data directory (backed by the NFS persistent volume) remains empty. Meanwhile, the emptyDir volume is used for temporary cache and does not persist beyond the lifecycle of the pod.
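The check on the NFS server can also be done with a small helper that lists the tables for which an InnoDB `.ibd` file exists in a schema directory. The helper below is a hypothetical sketch. Running it as root on the NFS server may be necessary, because MySQL's data directories are typically not world-readable.

```shell
#!/usr/bin/env bash
# Sketch: list the InnoDB tablespace files (*.ibd) in a schema directory.
# May need to run as root on the NFS server, since MySQL data directories
# are typically not world-readable.

# list_innodb_tables DIR : print one table name per *.ibd file; return 1 if there are none
list_innodb_tables() {
  local dir="$1" f found=1
  for f in "$dir"/*.ibd; do
    [[ -e "$f" ]] || continue   # no match: the glob stays literal
    basename "$f" .ibd
    found=0
  done
  return "$found"
}

# Usage on the NFS server:
#   list_innodb_tables /srv/nfs/kubedata/mysql/petclinic
```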

Final Conclusion for NFS Volumes in the Kubernetes-based Petclinic Project:

In this project, we successfully set up an NFS (Network File System) server and integrated it into a Kubernetes environment to provide persistent storage for the MySQL database and PetClinic application. The key achievements and observations are as follows:

NFS Server Setup:

A dedicated NFS server was set up on an AWS EC2 instance.

NFS exports were configured to share specific directories for both the MySQL database and the PetClinic application.

The NFS server was successfully connected to the Kubernetes cluster.

Persistent Volume Configuration:

We created PersistentVolumes (PVs) and PersistentVolumeClaims (PVCs) in the Kubernetes cluster to provide persistent storage for the MySQL database and the PetClinic application.

These volumes were mounted on the cluster nodes, enabling both applications to store data on the shared NFS server.

MySQL Database:

The MySQL database used a PersistentVolumeClaim backed by the NFS server for its data directory (/var/lib/mysql).

Data generated by MySQL, including database tables, was successfully persisted on the NFS server under the /srv/nfs/kubedata/mysql directory, ensuring that the database state is maintained across pod restarts and redeployments.

PetClinic Application:

The PetClinic application was configured with both emptyDir and a PersistentVolumeClaim.

emptyDir was used for temporary cache storage, which is ephemeral and is removed together with the pod.

The PersistentVolumeClaim was mounted to store any potential persistent data.

However, the Petclinic application does not generate or require persistent data in its current configuration, so the NFS-backed volume for Petclinic remains unused.

Key Takeaways:

NFS volumes were successfully implemented in the Kubernetes cluster, providing reliable persistent storage for the MySQL database.

While the NFS volume for the Petclinic application was set up and functional, the application does not require persistent storage based on its current usage, meaning the NFS volume remains empty for PetClinic.

This setup ensures that MySQL data is preserved across pod lifecycle events, while the PetClinic app continues to operate with ephemeral storage for non-persistent tasks.

Overall:

The project demonstrated the successful integration of NFS volumes in a Kubernetes environment, ensuring data persistence for critical components like MySQL while providing flexibility for applications that may or may not require persistent storage. The setup is ready for future scalability, and if the PetClinic application evolves to require persistent storage, the NFS-backed volume is available for use.

"You are never too old to set another goal or to dream a new dream."

— C.S. Lewis

Thank you, and happy learning!
