Pre-signed URLs and the Chamber of S3-Crets!

Akhand Patel

Table of contents

- The Problem

- Should You Make Your S3 Bucket Public?

- The Spell That Saves: Pre-signed URLs

- How Does It Solve Our Problem?

- Wands at the Ready: Writing the Code for Pre-signed URLs

- What’s the Magic behind them?

- The Cross-Origin Curse: CORS for browser

- The Two Faces of Pre-signed URLs: Pros and Cons

- Bonus: Magical Mimicry: Simulating S3 with LocalStack

- Final Words

"Welcome to Hogwarts," a voice echoes through the towering castle walls. "In just a moment, you will pass through these doors and join your classmates. But before you settle down at the Great Hall..." — WAIT, WAIT, WAIT, wrong script!

This isn’t Hogwarts. I can’t teach you magic (that would be so cool, though), because, you know, Ministry rules. But I have the second-best thing for you: pre-signed URLs in object storage like S3! In this blog post, we will dive into the world of pre-signed URLs, explore the problems they aim to solve, weigh their pros and cons, and learn how to implement them in a Node.js API.

The Problem

Imagine you’ve built a shiny new video hosting service. The architecture is simple: users upload video files to your app’s server, which then pipes them to an S3 bucket for storage. When a user logs in and uploads a 5 GB video, your server handles it without breaking a sweat (Lightweight, baby!). Everything seems fine. Until it isn't.

Next thing you know, you get featured on Product Hunt. Suddenly, 10,000 users come swinging their large, sharp video files, and your server? It’s terrified. The fans scream, the CPU spikes, memory fills up. Your server is bleeding, barely holding on. Everything else is abandoned while it tries to survive the monsters.

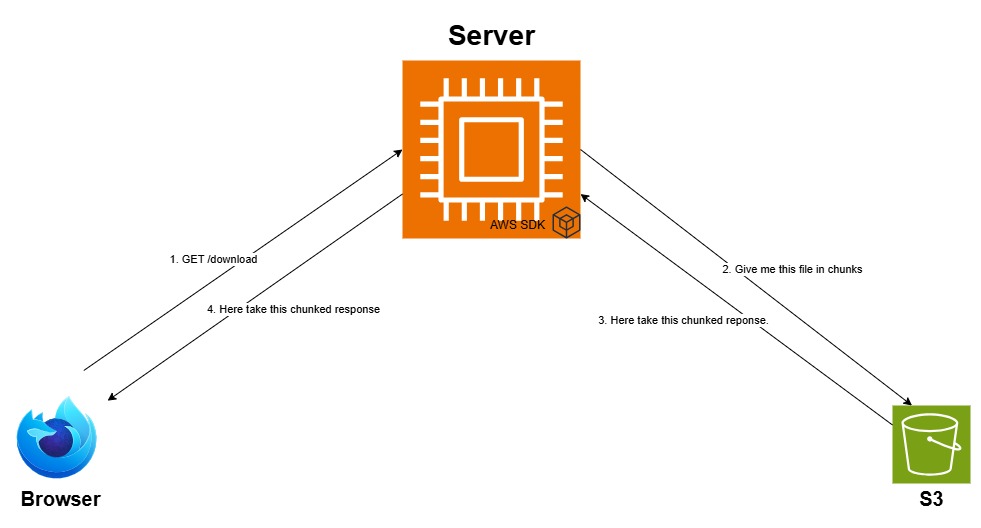

Current Architecture

```javascript
// server
const busboy = require("busboy");
const { Upload } = require("@aws-sdk/lib-storage");
// `app` (Express), `s3Client` (an S3Client) and `bucketName` are configured elsewhere

app.post("/upload", (req, res, next) => {
  // Initialize busboy with the request headers
  const bb = busboy({ headers: req.headers });

  bb.on("file", (name, file, info) => {
    const { filename, mimeType } = info;

    // Generate a unique key for the file
    const key = `uploads/${Date.now()}-${filename}`;

    // Stream the file to S3; lib-storage splits large bodies into a multipart upload
    const upload = new Upload({
      client: s3Client,
      params: {
        Bucket: bucketName,
        Key: key,
        Body: file,
        ContentType: mimeType,
      },
    });

    upload
      .done()
      .then(() => {
        res.json({ message: "File uploaded successfully", key: key });
      })
      .catch(next);
  });

  // Forward busboy errors to the error-handling middleware
  bb.on("error", next);

  req.pipe(bb);
});
```

As you can see, the upload streaming works as follows: Busboy parses the multipart/form-data request and exposes the file as a stream, which is handed to the Upload class from the AWS SDK (@aws-sdk/lib-storage); Upload then streams the file to Amazon S3 in chunks. For those unfamiliar with Busboy, it is a lightweight package that parses incoming file uploads as streams, so large files are handled without being loaded into memory all at once. Memory-friendly, yes, but every byte of every upload still passes through our server.

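```javascript
// server
const { GetObjectCommand } = require("@aws-sdk/client-s3");

app.get("/download", async (req, res, next) => {
  try {
    const command = new GetObjectCommand({
      Bucket: bucketName,
      Key: req.query.filename,
    });

    const { Body, ContentType, ContentLength } = await s3Client.send(command);

    res.setHeader("Content-Type", ContentType ?? "application/octet-stream");
    res.setHeader("Content-Length", ContentLength);
    res.setHeader(
      "Content-Disposition",
      `attachment; filename="${req.query.filename}"`
    );

    // In Node.js, Body is a readable stream, so it can be piped straight to the response
    Body.pipe(res);
  } catch (err) {
    // Express 4 does not forward rejected promises from async handlers on its own
    next(err);
  }
});
```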

Similarly, the download handler sends a GetObjectCommand to S3, which returns the object as a stream along with its metadata; the handler sets the matching response headers and pipes the stream to the user.

Where were we? Oh, how could we forget? Chaos takes over! Fans shriek in agony, and their desperate cries echo through the data centre. And the users… they just keep coming. In moments of panic, some might think, “Just make the bucket public! Problem solved.”

Should You Make Your S3 Bucket Public?

Sure, why not? And while you’re at it, could you also send me your credit card details? (Don’t worry, it’s for charity, I swear!) In other words, NO! Did you learn nothing in Defense Against the Dark Arts class?

Even AWS advises against making your buckets public. Think about it: your users might want some videos to remain private, like drafts or personal content, but in a public bucket, everything is accessible to anyone. Besides the security risk, a public bucket only solves the download problem; uploads would still go through your server, leaving you with the same headache.

The Spell That Saves: Pre-signed URLs

Just as your app’s about to crash, something miraculous happens, like a Patronus sweeping in to save the day. But this isn’t magic. Let’s look at what really helped: pre-signed URLs.

A pre-signed URL is a temporary link generated using AWS's SDK for S3 that provides limited access to a specific resource in your S3 bucket. This URL allows anyone with it to upload or download the specified file without needing their own AWS credentials, giving users controlled, temporary access to the resource.

That was a mouthful—I started sounding like Hermione by the end, didn't I? So, let’s break it down.

So, what exactly are these magical URLs? Your app generates a URL that works as a temporary key to one object in S3. The URL allows only the operation specified when it was created (for example, GET or PUT), and only for a short duration. This means you can grant access to specific files for a limited time. It's like writing a note that says, "Anyone who comes with this note within 2 minutes can access this file."

How Does It Solve Our Problem?

We create a pre-signed URL and give it to the user. The user then uses the URL to access S3. S3 checks the URL and grants temporary access to only that specific file.

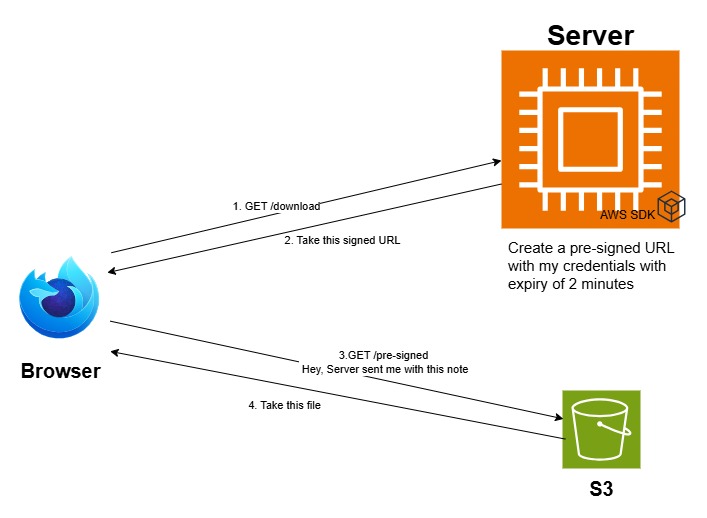

This solves both our babysitting and performance problems: we no longer spend server resources relaying uploads and downloads. Here’s how it works for a download.

As you can see from the diagram, the new flow works like this:

A user requests a hefty 5GB video file.

Our app easily creates a pre-signed URL just for that file.

We send this magical URL to the user.

The user follows the link and downloads the file directly from S3, without involving our server in the transfer.

By using pre-signed URLs, we’re offloading the heavy lifting to S3. No more babysitting uploads or downloads, saving valuable server resources for other tasks—like, you know, spying on users (just kidding!). Your server must be happy now.

Enough talk, let’s just code it.

Wands at the Ready: Writing the Code for Pre-signed URLs

Let’s look at how we can integrate pre-signed URLs into our API. We will add two new routes whose handlers give the client pre-signed URLs: one for uploads and one for downloads.

Let’s first take a look at the handler for downloadable pre-signed URLs.

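```javascript
// server
const { GetObjectCommand } = require("@aws-sdk/client-s3");
const { getSignedUrl } = require("@aws-sdk/s3-request-presigner");

app.get("/download-url", async (req, res, next) => {
  try {
    // Describe the operation we want to allow: reading this one object
    const command = new GetObjectCommand({
      Bucket: bucketName,
      Key: req.query.filename,
    });

    // Turn the command into a URL that stays valid for 120 seconds
    const signedUrl = await getSignedUrl(s3Client, command, { expiresIn: 120 });
    res.json({ downloadUrl: signedUrl });
  } catch (err) {
    next(err);
  }
});
```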

Let's understand this block of code. First, we create a GET command for the filename provided in the request's query parameters. This is us writing down what we plan to do. Then we turn the command into a signed URL with the AWS SDK's getSignedUrl, setting the URL's expiry to 120 seconds. The signing happens locally, without a request to S3. We send the signed URL back in the response. The client then makes a GET request to the signed URL, which goes straight to AWS. AWS treats this request as if it came from whoever signed the URL (here, our server's credentials) and checks whether those credentials are authorized for the action. If they are, the file is sent directly to the client. On the client side, the code might look like this.

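```javascript
// client
async function downloadFilePresigned(filename) {
  // Ask our server for a short-lived download URL
  const response = await fetch(`/download-url?filename=${encodeURIComponent(filename)}`);
  if (!response.ok) throw new Error(`Could not get a download URL (${response.status})`);
  const { downloadUrl } = await response.json();

  // The browser now talks to S3 directly; our server is out of the loop
  window.open(downloadUrl, "_blank");
}
```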

We first fetch the pre-signed download URL and then open it. It's as simple as that.
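One thing the original /download route did that this version drops is the `Content-Disposition: attachment` header, which tells the browser to save the file instead of playing it in the tab. Since S3 now sends the response, we can ask S3 to set that header: GetObjectCommand accepts a `ResponseContentDisposition` parameter, which ends up in the pre-signed URL as a `response-content-disposition` query parameter. Here's a minimal sketch of a helper that builds the command input; the name `downloadCommandInput` and the file-name sanitizing are my own assumptions, not part of the SDK.

```javascript
// Hypothetical helper: builds the input for GetObjectCommand (@aws-sdk/client-s3)
// so that S3 responds with a Content-Disposition header and the browser
// saves the file instead of opening it.
function downloadCommandInput(bucket, key) {
  // Suggest the last segment of the key as the file name, replacing
  // characters that would break the quoted header value.
  const fileName = key.split("/").pop().replace(/["\\\r\n]/g, "_");
  return {
    Bucket: bucket,
    Key: key,
    // S3 returns this value as the Content-Disposition response header
    ResponseContentDisposition: `attachment; filename="${fileName}"`,
  };
}

console.log(downloadCommandInput("my-bucket", "uploads/1700000000000-holiday.mp4"));
```

In the /download-url handler, this would replace the inline object: `new GetObjectCommand(downloadCommandInput(bucketName, req.query.filename))`.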

Now that the download side is sorted, let’s look at uploads. Yes, there are pre-signed URLs for those too. The best part? The upload handler is nearly identical to the download one.

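```javascript
// server
const { PutObjectCommand } = require("@aws-sdk/client-s3");
const { getSignedUrl } = require("@aws-sdk/s3-request-presigner");

app.get("/upload-url", async (req, res, next) => {
  try {
    // Generate a unique key, just like the original /upload route did
    const key = `uploads/${Date.now()}-${req.query.filename}`;
    const command = new PutObjectCommand({
      Bucket: bucketName,
      Key: key,
      ContentType: req.query.contentType,
    });

    const signedUrl = await getSignedUrl(s3Client, command, { expiresIn: 120 });
    // Also return the key so the client can refer to (or download) the file later
    res.json({ uploadUrl: signedUrl, key });
  } catch (err) {
    next(err);
  }
});
```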

Seems familiar, right? As you might have noticed, the main difference is that we create a PUT command (PutObjectCommand) instead of a GET command, and we also specify the file's expected ContentType. We create a signed URL and send it back to the user. On the client side, the logic is a bit different, so let's break that down too.

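```javascript
// client
async function uploadFilePresigned() {
  const file = document.getElementById("presignedFileInput").files[0];
  if (!file) throw new Error("No file selected");

  const filename = encodeURIComponent(file.name);
  const contentType = encodeURIComponent(file.type);

  // 1. Ask our server for a pre-signed PUT URL
  const response = await fetch(`/upload-url?filename=${filename}&contentType=${contentType}`);
  if (!response.ok) throw new Error(`Could not get an upload URL (${response.status})`);
  const { uploadUrl } = await response.json();

  // 2. Send the file straight to S3 with the same Content-Type the URL was requested for
  const uploadResponse = await fetch(uploadUrl, {
    method: "PUT",
    body: file,
    headers: {
      "Content-Type": file.type,
    },
  });
  // fetch only rejects on network errors, so check the status S3 returned
  if (!uploadResponse.ok) throw new Error(`Upload failed (${uploadResponse.status})`);
}
```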

As you can see, we first send the file's name and content type to our server, which responds with a pre-signed PUT URL. We then send a PUT request with the file to that signed URL, and the file goes straight to S3.
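One thing worth tightening: the /upload-url handler signs whatever filename and contentType the client sends. Passing ContentType to the command describes what we expect, but whether S3 actually enforces it depends on whether the Content-Type header ends up in the signature, so a check on the server before signing is a cheap extra guard. Below is a minimal sketch; the helper name `buildUploadKey` and the list of allowed video types are assumptions for our video-hosting example. S3 keys aren't file paths, so stripping slashes isn't about path traversal; it just stops user input from creating unexpected "folders" or odd characters in your keys.

```javascript
// Hypothetical guard for the /upload-url handler. The allowed types are an
// assumption for a video-hosting app; adjust them to what you accept.
const ALLOWED_CONTENT_TYPES = new Set(["video/mp4", "video/webm", "video/quicktime"]);

function buildUploadKey(filename, contentType, now = Date.now()) {
  if (!ALLOWED_CONTENT_TYPES.has(contentType)) {
    throw new Error(`Content type not allowed: ${contentType}`);
  }
  // Keep only the last path segment and replace anything outside a
  // conservative character set, capping the length
  const baseName = String(filename ?? "").split(/[\\/]/).pop();
  const safeName = baseName.replace(/[^A-Za-z0-9._-]/g, "_").slice(0, 100);
  if (safeName === "") {
    throw new Error("A file name is required");
  }
  return `uploads/${now}-${safeName}`;
}

console.log(buildUploadKey("My Holiday (final).mp4", "video/mp4"));
```

In the handler, you'd call `buildUploadKey(req.query.filename, req.query.contentType)` inside the try block and answer with a 400 when it throws, instead of building the key inline.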

What’s the Magic behind them?

So far, we've seen how to use them, but how does it all work? Let’s take a look at a sample pre-signed URL.

```
https://s3.amazonaws.com/my-bucket/my-file.txt?X-Amz-Algorithm=AWS4-HMAC-SHA256
&X-Amz-Credential=AKIAIOSFODNN7EXAMPLE%2F20231002%2Fus-east-1%2Fs3%2Faws4_request
&X-Amz-Date=20231002T123456Z
&X-Amz-Expires=3600
&X-Amz-SignedHeaders=host
&X-Amz-Signature=123456789abcdef123456789abcdef123456789abcdef123456789abcdef1234
```

What’s all this Parseltongue? Don’t worry, it will all make sense in a minute.

“Help will always be given at Hogwarts to those who ask for it.”

Let’s break down these URL fields one by one.

- https://s3.amazonaws.com/my-bucket/my-file.txt: The base URL for the S3 bucket and object.
- X-Amz-Date=20231002T123456Z: The date and time (in UTC) when the URL was signed.
- X-Amz-Expires=3600: How long the URL stays valid, in seconds, counted from X-Amz-Date (here, 3600 seconds, or 1 hour).
- X-Amz-Algorithm=AWS4-HMAC-SHA256: The signing algorithm (AWS Signature Version 4 with HMAC-SHA256).
- X-Amz-Credential=AKIAIOSFODNN7EXAMPLE/20231002/us-east-1/s3/aws4_request: The credential scope used for signing: the access key ID, followed by the date, region, and service.
- X-Amz-SignedHeaders=host: The request headers covered by the signature (here, only host).
- X-Amz-Signature=123456789abcdef123456789abcdef123456789abcdef123456789abcdef1234: The signature itself, computed from all of the above using the secret access key.
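To make these fields concrete, here's a small sketch that reads them out of the sample URL above with Node's built-in URL class and works out when the URL expires. The function name `describePresignedUrl` is hypothetical, and it assumes the SigV4 query parameters shown above are all present.

```javascript
// Hypothetical helper: reads the SigV4 query parameters of a pre-signed S3 URL
// and works out when it expires (X-Amz-Expires counts seconds from X-Amz-Date).
function describePresignedUrl(presignedUrl) {
  const params = new URL(presignedUrl).searchParams;

  // X-Amz-Date uses the compact ISO 8601 form, e.g. 20231002T123456Z (UTC)
  const amzDate = params.get("X-Amz-Date");
  const signedAt = new Date(
    amzDate.replace(/^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/, "$1-$2-$3T$4:$5:$6Z")
  );
  const expiresInSeconds = Number(params.get("X-Amz-Expires"));

  // searchParams decodes %2F back to "/", so the credential scope splits cleanly
  const [accessKeyId, date, region, service] = params.get("X-Amz-Credential").split("/");

  return {
    algorithm: params.get("X-Amz-Algorithm"),
    accessKeyId,
    scope: { date, region, service },
    signedHeaders: params.get("X-Amz-SignedHeaders").split(";"),
    signedAt,
    expiresAt: new Date(signedAt.getTime() + expiresInSeconds * 1000),
  };
}

const sample =
  "https://s3.amazonaws.com/my-bucket/my-file.txt?X-Amz-Algorithm=AWS4-HMAC-SHA256" +
  "&X-Amz-Credential=AKIAIOSFODNN7EXAMPLE%2F20231002%2Fus-east-1%2Fs3%2Faws4_request" +
  "&X-Amz-Date=20231002T123456Z&X-Amz-Expires=3600&X-Amz-SignedHeaders=host" +
  "&X-Amz-Signature=123456789abcdef123456789abcdef123456789abcdef123456789abcdef1234";

const info = describePresignedUrl(sample);
console.log(info.expiresAt.toISOString()); // 2023-10-02T13:34:56.000Z
// Comparing expiresAt with the current time mirrors the first check S3 performs
console.log(info.expiresAt <= new Date() ? "expired" : "still valid");
```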

So, the URL carries everything AWS needs to process your request. When S3 receives a request for this URL, it checks that:

The URL has not expired.

The credentials used to sign the URL have not expired.

The signature matches the rest of the URL.

The identity that signed the URL is allowed to perform the requested operation.

You (yes, you, Slytherin) might be thinking, "What if I change a field in the URL? Couldn't I access a different file, or extend the expiry time?" To that, I say: NO. Remember the signature value included in the URL? It is computed from the request path and all the other fields, using a secret key known only to the signer (and AWS). So if you change any field and try the URL, the signature AWS computes from the modified fields won't match the one in the URL, and the request is denied. And don’t worry about the access key ID being visible in the URL: on its own, without the secret access key, it can't be used to sign anything.
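To see why tampering doesn't work, here is a stripped-down sketch of Signature Version 4 query signing, using only Node's crypto module. It derives the signing key the way SigV4 does (a chain of HMAC-SHA256 steps over the date, region, service, and "aws4_request") and signs a canonical form of the request. However, it only covers GET URLs whose sole signed header is host, it skips details the real signer handles (session tokens, other headers, unusual characters in paths), it is not guaranteed to match AWS byte for byte, and the function names are my own. Treat it as an illustration of the idea; in real code, let getSignedUrl do this.

```javascript
const { createHash, createHmac, timingSafeEqual } = require("node:crypto");

const hmac = (key, data) => createHmac("sha256", key).update(data).digest();
const sha256Hex = (data) => createHash("sha256").update(data).digest("hex");

// SigV4 wants RFC 3986 encoding; encodeURIComponent leaves !'()* unescaped
const rfc3986 = (s) =>
  encodeURIComponent(s).replace(/[!'()*]/g, (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());

// SigV4 signing key: a chain of HMACs scoped to date, region and service
function signingKey(secretKey, date, region, service) {
  const kDate = hmac("AWS4" + secretKey, date);
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, service);
  return hmac(kService, "aws4_request");
}

// Signature for a pre-signed GET URL whose only signed header is "host"
function signatureFor(urlString, secretKey) {
  const url = new URL(urlString);
  // Every query parameter except the signature itself, encoded and sorted by name
  const canonicalQuery = [...url.searchParams]
    .filter(([name]) => name !== "X-Amz-Signature")
    .map(([name, value]) => [rfc3986(name), rfc3986(value)])
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0))
    .map(([name, value]) => `${name}=${value}`)
    .join("&");
  const canonicalRequest = [
    "GET",
    url.pathname, // assumed to be URI-encoded already
    canonicalQuery,
    `host:${url.host}\n`, // canonical headers end with a newline
    "host",
    "UNSIGNED-PAYLOAD",
  ].join("\n");

  const [, date, region, service] = url.searchParams.get("X-Amz-Credential").split("/");
  const stringToSign = [
    "AWS4-HMAC-SHA256",
    url.searchParams.get("X-Amz-Date"),
    `${date}/${region}/${service}/aws4_request`,
    sha256Hex(canonicalRequest),
  ].join("\n");
  return createHmac("sha256", signingKey(secretKey, date, region, service))
    .update(stringToSign)
    .digest("hex");
}

function presign(unsignedUrl, secretKey) {
  const url = new URL(unsignedUrl);
  url.searchParams.set("X-Amz-Signature", signatureFor(url.href, secretKey));
  return url.href;
}

// What the receiving side does: recompute the signature and compare
function verify(presignedUrl, secretKey) {
  const given = Buffer.from(new URL(presignedUrl).searchParams.get("X-Amz-Signature") ?? "");
  const expected = Buffer.from(signatureFor(presignedUrl, secretKey));
  return given.length === expected.length && timingSafeEqual(given, expected);
}

// AWS's documented example secret key, not a real one
const secret = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY";
const signed = presign(
  "https://my-bucket.s3.amazonaws.com/my-file.txt?X-Amz-Algorithm=AWS4-HMAC-SHA256" +
    "&X-Amz-Credential=AKIAIOSFODNN7EXAMPLE%2F20231002%2Fus-east-1%2Fs3%2Faws4_request" +
    "&X-Amz-Date=20231002T123456Z&X-Amz-Expires=120&X-Amz-SignedHeaders=host",
  secret
);
// A "Slytherin" edit: stretch the expiry to a week
const tampered = signed.replace("X-Amz-Expires=120", "X-Amz-Expires=604800");

console.log(verify(signed, secret)); // true
console.log(verify(tampered, secret)); // false
```

Changing any signed field changes the string that gets signed, so the recomputed signature no longer matches, and without the secret key there's no way to produce one that does.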

The Cross-Origin Curse: CORS for browser

Once you have set up your code to upload and download files using pre-signed URLs, one Horcrux will still stand between you and victory: CORS (Cross-Origin Resource Sharing). Here's the deal: browsers are like club bouncers. They won’t let JavaScript from your app's domain start grabbing data from another domain (like AWS) without permission. The browser checks with AWS first, and only if AWS says "this one's cool, let them in" does your request go through.

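If our app runs on localhost:3000 but our files are stored on S3, the browser will block our JavaScript requests to the bucket, like the fetch PUT in our upload code, unless the bucket's CORS configuration allows our app's origin. (Plain page navigations, like the window.open call in our download code, aren't subject to CORS.) To fix this, you need to adjust the CORS settings on your S3 bucket.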

Here’s how you handle the CORS curse:

Go to your S3 bucket in the AWS Console.

Navigate to the "Permissions" tab.

Scroll to "Cross-origin resource sharing (CORS)", click Edit, and add a rule that allows your app’s origin (like http://localhost:3000) to access the resources.

Here’s a super simple example of what that rule might look like in S3's XML format:

```xml
<CORSConfiguration>
  <CORSRule>
    <AllowedOrigin>http://localhost:3000</AllowedOrigin>
    <AllowedMethod>GET</AllowedMethod>
    <AllowedMethod>PUT</AllowedMethod>
    <MaxAgeSeconds>3000</MaxAgeSeconds>
    <AllowedHeader>*</AllowedHeader>
  </CORSRule>
</CORSConfiguration>
```

That should take care of things! But if you're still unsure about it, you can refer to these resources: CORS and CORS with S3.
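One catch: the current S3 console's CORS editor expects JSON rather than the XML shown above. Here is the same rule as a JavaScript value, printed as JSON so it can be pasted into the editor. The field names (AllowedOrigins, AllowedMethods, AllowedHeaders, MaxAgeSeconds) are the ones S3 uses; the same array can also be passed as CORSConfiguration.CORSRules to PutBucketCorsCommand from @aws-sdk/client-s3 if you'd rather apply it from code. In production, you'd swap localhost for your real origin.

```javascript
// The CORS rule from the XML example, in the JSON shape the S3 console expects
const corsRules = [
  {
    AllowedOrigins: ["http://localhost:3000"],
    AllowedMethods: ["GET", "PUT"],
    AllowedHeaders: ["*"],
    MaxAgeSeconds: 3000,
  },
];

// Print it so it can be pasted into the console's CORS editor
console.log(JSON.stringify(corsRules, null, 2));
```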

The Two Faces of Pre-signed URLs: Pros and Cons

Dark magic is powerful, but it always comes at a cost, and pre-signed URLs are no different. Let’s take a look at their pros and cons.

Pros

Server Efficiency: Offloading file handling to S3 means our server can handle more users than a pub on a Friday night.

Enhanced Security: We don't have to make our resources public. We grant temporary access instead, keeping our files safer than a Gringotts vault.

Cost-Effective: Since S3 handles the data transfer, our server bandwidth costs are reduced.

Simplified Experience: A small, and debatable, benefit: when client-side JavaScript has to download a file itself (for example, with fetch), saving it usually means holding the whole file in memory first, which isn't feasible for large files. A pre-signed URL is just a link, so the browser can download it natively.

Cons

Expiration Juggling: These URLs expire, and timing is everything. Set the expiry too short and it inconveniences users; set it too long and the door stays open longer than necessary.

Security Considerations: Sure, these links are temporary, but they can still be shared like the latest gossip.

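Limited Control: Once a pre-signed URL is out there, we can't revoke that individual URL; short of revoking the credentials that signed it (which invalidates every URL they signed), it stays valid until it expires. We also can't control how many times it gets used.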

Bonus: Magical Mimicry: Simulating S3 with LocalStack

If you don't want to experiment with a live S3 bucket, you can use LocalStack. It's a tool that can simulate AWS services on your machine. It offers both free and pro tiers, and for our use case, the free tier will be sufficient.

Getting Started

Run LocalStack: You need to have Docker installed on your system. Just run the following command to start LocalStack in a Docker container at http://localhost:4566.

```shell
docker run \
  --rm -it \
  -p 127.0.0.1:4566:4566 \
  -p 127.0.0.1:4510-4559:4510-4559 \
  -v /var/run/docker.sock:/var/run/docker.sock \
  localstack/localstack
```

You will be familiar with the following steps if you have used AWS services before. We will be using aws-cli to connect with our mocked AWS services.

Set up AWS credentials: Run the following commands in a new terminal.

```shell
export AWS_ACCESS_KEY_ID=test
export AWS_SECRET_ACCESS_KEY=test
export AWS_DEFAULT_REGION=us-east-1
```

Create an S3 bucket: We point the AWS CLI at our mocked instance and tell it to create a bucket named “my-test-bucket”.

```shell
aws --endpoint-url=http://localhost:4566 --region us-east-1 s3 mb s3://my-test-bucket
```

Now you can use your existing code to connect with this bucket by simply changing the values in your code config, as shown below:

```
PORT=3000
AWS_REGION=us-east-1
AWS_ENDPOINT=http://localhost.localstack.cloud:4566
AWS_ACCESS_KEY_ID=test
AWS_SECRET_ACCESS_KEY=test
BUCKET_NAME=my-test-bucket
```
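Those values only take effect if the app's S3 client reads them. The setup code isn't shown in this post, so here is a minimal sketch under that assumption: a hypothetical `s3ClientConfigFromEnv` helper that turns the variables above into options for `new S3Client(...)` from @aws-sdk/client-s3. `endpoint` and `forcePathStyle` are real S3Client options; enabling path-style addressing for LocalStack is a common choice rather than a hard requirement, and the us-east-1 fallback is my own assumption.

```javascript
// Hypothetical helper: maps environment variables to S3Client options.
// AWS_ENDPOINT is only expected to be set when running against LocalStack.
function s3ClientConfigFromEnv(env = process.env) {
  const config = {
    region: env.AWS_REGION ?? "us-east-1", // assumed fallback region
  };
  if (env.AWS_ENDPOINT) {
    // Send requests (and build pre-signed URLs) against LocalStack instead of AWS
    config.endpoint = env.AWS_ENDPOINT;
    // Path-style URLs (http://host/bucket/key) avoid needing per-bucket hostnames
    config.forcePathStyle = true;
  }
  if (env.AWS_ACCESS_KEY_ID && env.AWS_SECRET_ACCESS_KEY) {
    config.credentials = {
      accessKeyId: env.AWS_ACCESS_KEY_ID,
      secretAccessKey: env.AWS_SECRET_ACCESS_KEY,
    };
  }
  return config;
}

console.log(s3ClientConfigFromEnv());
```

Wiring it up would look like `const s3Client = new S3Client(s3ClientConfigFromEnv());` with `bucketName` read from `process.env.BUCKET_NAME`. Because getSignedUrl builds URLs from the client's configuration, the pre-signed URLs then point at LocalStack too.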

Final Words

As we reach the end of our magical journey through pre-signed URLs, it’s time to bid farewell, my fellow wizards, until we meet again. But before we part ways, let’s reflect on the spells we’ve learned today. Pre-signed URLs are great spells for creating a more scalable, secure, and efficient file-handling system. By letting S3 handle the heavy work, we can reduce server load by a lot. However, no solution is perfect. You'll need to manage expiration times, be cautious about sharing links, and always keep security in mind.

These pre-signed URLs are now part of your spell arsenal, ready for you to wield. As Uncle Ben said, "With great power comes great responsibility." Remember, the true magic lies not just in having these spells but in knowing when and how to use them. I'd suggest reading this amazing blog post by Luciano Mammino, which goes into much greater detail. You can also refer to the implementation I’ve used in this blog, available in this repo.

Farewell and happy learning! Feedback is appreciated, and criticism is invited.
