Enhance LLM Capabilities with Function Calling: A Practical Example

Fotie M. Constant

Function calling has become an essential feature for working with large language models (LLMs), allowing developers to extend the capabilities of LLMs by integrating external tools and services. Instead of being confined to generic answers, LLMs with function calling can fetch real-time data or perform specific tasks, making them far more useful in practical scenarios.

In this blog post, we will explore how function calling works and what you can do with it, then walk through a practical use case: checking the current weather in Istanbul, to show how this feature fits into everyday applications.

Understanding function calling in LLMs

By default, large language models like GPT can only work with the text in their prompt and the knowledge they acquired during training. They cannot call an API, query a database, or otherwise interact with the outside world on their own. For instance, if you ask an LLM about the current weather in a city, it won’t be able to give an accurate answer unless it has access to real-time weather data.

This is where function calling comes in. Function calling lets you describe external tools to an LLM, such as an API that fetches weather data or a function that queries a database. When the model needs one of them, it responds with a request to call that function; your application runs it and passes the result back, so the model can give a more accurate and useful response.

Practical example: Using function calling to get weather data for Istanbul

Since I learn best by doing, let’s dive into a practical example and see how this works in a real-world scenario. Say you are building a chatbot that tells users the current weather in any city in the world, and a user wants to know the current weather in Istanbul. Without function calling, the LLM would likely respond with a generic statement like, “I don’t have real-time data.” By adding a function that calls a weather API, the LLM can get real-time weather information and give a precise answer.

Here’s a basic function calling setup that can be used to fetch the weather in any city (in our case Istanbul).

Defining the function

We’ll start by defining a simple weather function that calls the OpenWeatherMap API to get real-time weather data for a given city:

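```javascript
// Fetch current weather for a city from the OpenWeatherMap API.
// Uses the global fetch available in browsers and Node.js 18+.
// Replace "your_api_key" with your own OpenWeatherMap API key.
const getWeather = async (city) => {
  // Encode the city name so names with spaces or special characters work.
  const url = `https://api.openweathermap.org/data/2.5/weather?q=${encodeURIComponent(city)}&appid=your_api_key`;
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Weather API request failed with status ${response.status}`);
  }
  return response.json();
};
```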

This function takes the name of a city as a parameter and calls the weather API to retrieve current weather data for that city. For the LLM to use it, we need to tell the model that this function is available.
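The raw JSON returned by getWeather contains many fields the model does not need. The sketch below shows one way to condense it into a short sentence before passing it back to the LLM. It assumes the default response shape of OpenWeatherMap's current-weather endpoint (name, weather[0].description, main.temp in Kelvin, main.humidity); the formatWeather helper and the sample object are illustrative, not real API output.

```javascript
// Hypothetical helper: turn an OpenWeatherMap "current weather" response into
// a short sentence. Assumes default units, where main.temp is in Kelvin.
function formatWeather(data) {
  const celsius = data.main.temp - 273.15;
  const description = data.weather[0].description;
  return `${data.name}: ${description}, ${celsius.toFixed(1)} °C, humidity ${data.main.humidity}%`;
}

// Illustrative sample shaped like an OpenWeatherMap response (not real data).
const sample = {
  name: "Istanbul",
  weather: [{ description: "clear sky" }],
  main: { temp: 293.15, humidity: 60 },
};

console.log(formatWeather(sample)); // Istanbul: clear sky, 20.0 °C, humidity 60%
```

Adding `units=metric` to the request URL makes the API report temperatures in Celsius directly, in which case the Kelvin conversion should be dropped.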

Connecting the LLM to the function

To connect the LLM with the weather function, you provide it with the function's specification: its name, a description, and the parameters it accepts. This lets the model know that the function exists and when it can be used.

```javascript
// This schema follows OpenAI's function-calling format for ChatGPT models;
// other models (e.g. Llama) may expect a different schema.
const functionsSpec = [
  {
    name: "getWeather",
    description: "Fetches the current weather for a specific city",
    parameters: {
      type: "object",
      properties: {
        city: {
          type: "string",
          description: "The city to retrieve weather data for",
        },
      },
      required: ["city"],
    },
  },
];

// Informing GPT that this function is available.
// askGPT is a placeholder for your own wrapper around the chat API
// that sends the question together with functionsSpec.
askGPT("What's the current weather in Istanbul?", functionsSpec);
```

Reference: https://platform.openai.com/docs/guides/function-calling

With this setup, when you ask GPT “What’s the current weather in Istanbul?”, it will recognize that the getWeather function is available and request a call to it to fetch real-time data.

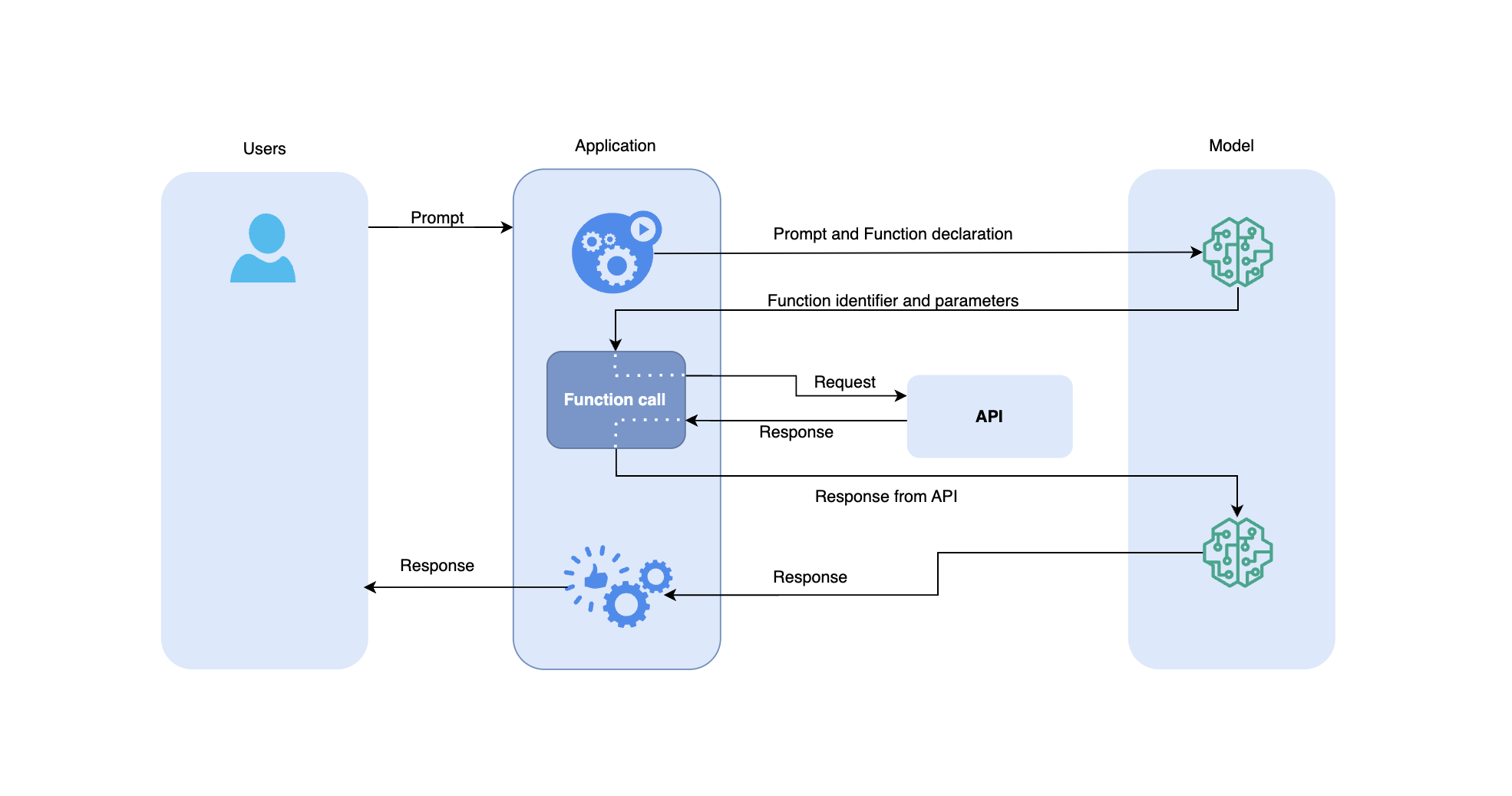
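When GPT asks to call getWeather, the arguments arrive as a JSON-encoded string (in OpenAI's API, the `arguments` field of the function call), and nothing guarantees that string is valid JSON or matches your schema. The hypothetical parseFunctionArguments helper below is a minimal sketch that parses the string and checks it against a spec in the same format as functionsSpec. It only handles required keys and "string" properties, so for more complex specs a full JSON Schema validator would be a better fit.

```javascript
// Hypothetical helper: parse the model's argument string and check it
// against a function spec before running any code.
function parseFunctionArguments(spec, argsJson) {
  let args;
  try {
    args = JSON.parse(argsJson);
  } catch {
    throw new Error(`Invalid JSON arguments for ${spec.name}`);
  }
  if (args === null || typeof args !== "object" || Array.isArray(args)) {
    throw new Error(`Arguments for ${spec.name} must be a JSON object`);
  }
  const { properties = {}, required = [] } = spec.parameters;
  for (const key of required) {
    if (!(key in args)) {
      throw new Error(`Missing required argument "${key}" for ${spec.name}`);
    }
  }
  // This sketch only checks properties declared with type "string".
  for (const [key, value] of Object.entries(args)) {
    if (properties[key]?.type === "string" && typeof value !== "string") {
      throw new Error(`Argument "${key}" must be a string`);
    }
  }
  return args;
}

// Same shape as the getWeather entry in functionsSpec.
const getWeatherSpec = {
  name: "getWeather",
  description: "Fetches the current weather for a specific city",
  parameters: {
    type: "object",
    properties: {
      city: { type: "string", description: "The city to retrieve weather data for" },
    },
    required: ["city"],
  },
};

console.log(parseFunctionArguments(getWeatherSpec, '{"city": "Istanbul"}')); // { city: 'Istanbul' }
```

If validation fails, you can send the error message back to the model instead of running the function, giving it a chance to correct its request.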

How it works - step by step

Here’s how function calling plays out in this example:

- You ask a question: In this case, “What’s the current weather in Istanbul?”

- GPT recognizes the function: The LLM understands that it can use the getWeather function because it has been given the function's specification.

- GPT requests the function call: Instead of answering directly, the LLM returns a request to call getWeather with the argument city set to "Istanbul".

- Your code executes the function: Your application runs getWeather, retrieves the data from the API, and sends the result back to the LLM.

- GPT delivers the answer: Finally, the LLM uses that result to respond with the real-time weather for Istanbul.
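The five steps above can be sketched as a small loop. This is a minimal, offline sketch rather than a production client: callModel is a hypothetical stand-in for a real chat API call, and the message shapes (an assistant message with a function_call field, followed by a message with role "function" carrying the result) are modeled on OpenAI's older functions API; the newer tools API uses tool_calls and role "tool" instead. fakeModel and fakeGetWeather are hypothetical stubs so the example runs without network access or an API key.

```javascript
// Minimal function-calling loop. `callModel` receives the message history and
// function specs and returns the assistant's next message. The application,
// not the model, runs the requested function.
async function runConversation(question, callModel, functionsSpec, implementations) {
  const messages = [{ role: "user", content: question }];

  // Cap the number of round trips so a misbehaving model cannot loop forever.
  for (let turn = 0; turn < 5; turn++) {
    const reply = await callModel(messages, functionsSpec);
    messages.push(reply);

    // No function requested: this is the final answer.
    if (!reply.function_call) {
      return reply.content;
    }

    // The model asked for a function: look it up, run it, send the result back.
    const { name, arguments: argsJson } = reply.function_call;
    const fn = implementations[name];
    if (!fn) {
      throw new Error(`Model requested unknown function: ${name}`);
    }
    const result = await fn(JSON.parse(argsJson));
    messages.push({ role: "function", name, content: JSON.stringify(result) });
  }
  throw new Error("Too many function-call rounds");
}

// Hypothetical stub standing in for getWeather.
const fakeGetWeather = async ({ city }) => ({ city, description: "clear sky", tempC: 20 });

// Hypothetical stub standing in for GPT; it ignores the function specs.
const fakeModel = async (messages) => {
  const last = messages[messages.length - 1];
  if (last.role === "user") {
    // First turn: ask the application to call getWeather.
    return {
      role: "assistant",
      content: null,
      function_call: { name: "getWeather", arguments: JSON.stringify({ city: "Istanbul" }) },
    };
  }
  // Second turn: answer using the function result.
  const data = JSON.parse(last.content);
  return { role: "assistant", content: `It is ${data.tempC} °C with ${data.description} in ${data.city}.` };
};

runConversation("What's the current weather in Istanbul?", fakeModel, [], { getWeather: fakeGetWeather })
  .then((answer) => console.log(answer)); // It is 20 °C with clear sky in Istanbul.
```

Keeping the function implementations in a plain object keyed by name means the model can only trigger functions you have explicitly registered.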

Extending functionality beyond weather data

The power of function calling doesn’t end with weather reports. You can extend this functionality to handle a wide variety of tasks, such as:

- Reading and sending emails: You can build a function that connects the LLM with an email service, allowing it to read, draft, or send emails on your behalf.

- Managing files: Define functions that let the LLM interact with the local file system, creating, reading, or modifying files as needed.

- Database interactions: Allow the LLM to query a database, providing real-time data retrieval or even writing data into the database.
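Because the model chooses the arguments, a file-managing function should not trust the filename it receives. One minimal approach, sketched below with a hypothetical resolveInsideBase helper, is to resolve every requested path against a fixed base directory and refuse anything that lands outside it. It does not resolve symbolic links, so treat it as a starting point rather than a complete safeguard.

```javascript
const path = require("path");

// Hypothetical helper: resolve a filename chosen by the model inside a fixed
// base directory and reject anything that would escape it, such as
// "../secrets.txt" or an absolute path like "/etc/passwd".
function resolveInsideBase(baseDir, requestedName) {
  const base = path.resolve(baseDir);
  const target = path.resolve(base, requestedName);
  if (!target.startsWith(base + path.sep)) {
    throw new Error(`Refusing to access a path outside ${base}: ${requestedName}`);
  }
  return target;
}

// "/tmp/llm-files" is an arbitrary example directory.
console.log(resolveInsideBase("/tmp/llm-files", "istanbul_weather.txt"));
// On Linux or macOS this prints /tmp/llm-files/istanbul_weather.txt
```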

For instance, if you want to save the weather data for Istanbul into a file, you can create another function like this:

```javascript
const fs = require("fs");

// Write text content to a file, replacing the file if it already exists.
const saveToFile = (filename, content) => {
  fs.writeFileSync(filename, content);
};

// Save Istanbul's weather to a file
saveToFile("istanbul_weather.txt", "The current weather in Istanbul is sunny.");
```

Once saveToFile is also described to the model with its own function specification, the LLM can not only fetch the weather but also request that the data be saved to a text file for future reference.
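A specification for saveToFile could look like the sketch below, written in the same format as the getWeather entry. The description texts are my own illustrative wording, not something required by any API.

```javascript
// Specification for saveToFile, in the same format as getWeather,
// so the model knows it may request a file write.
const saveToFileSpec = {
  name: "saveToFile",
  description: "Saves text content to a file with the given name",
  parameters: {
    type: "object",
    properties: {
      filename: {
        type: "string",
        description: "Name of the file to write, e.g. istanbul_weather.txt",
      },
      content: {
        type: "string",
        description: "The text to write into the file",
      },
    },
    required: ["filename", "content"],
  },
};

// The getWeather entry from earlier, repeated so this snippet stands alone.
const getWeatherSpec = {
  name: "getWeather",
  description: "Fetches the current weather for a specific city",
  parameters: {
    type: "object",
    properties: {
      city: { type: "string", description: "The city to retrieve weather data for" },
    },
    required: ["city"],
  },
};

// Offer both functions to the model in one list.
const functionsSpec = [getWeatherSpec, saveToFileSpec];
```

The model sends its arguments as a single JSON object, so when it requests saveToFile your code would call it as `saveToFile(args.filename, args.content)` after parsing.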

Why function calling enhances LLM capabilities

Function calling gives developers a flexible way to integrate LLMs with real-world applications. Instead of being limited to what they learned during training, LLMs can now take part in interactive, useful tasks. By leveraging APIs and other external tools, they can offer responses grounded in real-time data and actions, making them far more practical in real-world use cases.

For example, using a function to check the weather in Istanbul transforms the LLM from a static response generator into an interactive tool that provides real-world insights. This can be extended to tasks like monitoring stock prices, automating daily reports, or even managing complex workflows across multiple applications.

Conclusion

Function calling is a powerful feature that takes LLMs beyond their usual limitations, enabling them to interact with external systems in real time. By integrating functions such as API calls, database queries, or file operations, LLMs can fetch real-time data, automate tasks, and perform complex actions.

In our example of checking the weather in Istanbul, function calling shows just how flexible and useful LLMs can become when they are equipped with the right tools. Whether it’s retrieving real-time data or managing files, the potential applications of function calling are vast, making it an indispensable feature for developers looking to enhance their projects with large language models.

FAQs

1. What is function calling in LLMs?

Function calling allows LLMs to access external tools, like APIs, to retrieve real-time data or perform specific tasks.

2. Can LLMs access real-time data?

By default, these models cannot access real-time data. However, with function calling, they can call external APIs to fetch live information such as weather updates.

3. How does function calling work in LLMs?

Function calling works by describing external tools, such as API calls, to the LLM, which can then request a call to one of them when it needs data or needs to perform a task. Note that the model does not run the function itself: your application runs it and passes the output back, and the model then uses that output in its response.

4. What are some examples of function calling?

Function calling can be used to fetch weather data, manage files, send emails, or query databases, among other tasks.

5. Can function calling be used for automation?

Yes, function calling can automate tasks by allowing LLMs to perform functions like retrieving data, managing files, or even interacting with other software systems.

Written by

Fotie M. Constant

Widely known as fotiecodes, I'm an open source enthusiast, software developer, mentor and SaaS founder. I'm passionate about creating software solutions that are scalable and accessible to all, and I am dedicated to building innovative SaaS products that empower businesses to work smarter, not harder.