Understanding Amazon EC2: In-Depth Analysis

Hema Sundharam Kolla


Introduction

Amazon EC2 (Elastic Compute Cloud) is a foundational component of Amazon Web Services (AWS), providing scalable, resizable compute capacity in the cloud. It allows users to run virtual servers (instances) on-demand, which can be scaled up or down based on the workload. Whether you’re a small startup or a large enterprise, EC2 offers flexibility, cost efficiency, and performance without the need to manage physical infrastructure.

This article explores the key concepts behind EC2, how it differs from traditional physical servers, the various instance types, and real-world applications, along with step-by-step instructions for launching an instance. By the end, you'll understand how EC2 can simplify the way you manage infrastructure.

What is Amazon EC2?

Amazon EC2 enables users to create and manage virtual machines (VMs), called instances, in the AWS cloud. Like physical servers, these instances come with varying amounts of compute power (CPU), memory (RAM), and storage (disk). However, unlike traditional servers, EC2 instances are:

Elastic: You can scale the number of instances up or down based on demand.

On-Demand: You only pay for the compute resources when they are in use.

Customizable: EC2 offers different instance types to match specific workloads, such as compute-intensive tasks, memory-intensive tasks, or storage-heavy applications.

Think of EC2 as a virtual server that can be customized and adjusted as your business needs change.

EC2 vs. Physical Servers: Key Differences

The traditional model of computing relied on purchasing physical servers from vendors like IBM, Dell, or HP. Businesses would set up these servers in data centers, divide them into multiple VMs using hypervisor software, and manage them manually. Here’s why EC2 offers a more efficient alternative:

Physical Servers:

High Upfront Costs: When you buy a physical server, you’re locked into the cost of the hardware, regardless of how much you use it.

Maintenance Overhead: You must update and secure all VMs individually, and any hardware failures lead to downtime and expensive repairs.

Complex Scaling: Scaling a physical server infrastructure means purchasing and installing new hardware, which can take weeks or months.

Limited Flexibility: Once a server is set up, it’s difficult to change its configuration. For example, increasing memory or CPU requires downtime and hardware modifications.

Amazon EC2:

Cost-Efficiency: With EC2, you only pay for the compute resources when your instance is running. You can stop or terminate instances when they aren’t needed, avoiding unnecessary costs.

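Automated Management: AWS maintains the physical hardware, the hypervisor, and the data centers, so hardware failures and repairs are no longer your problem. Under the AWS shared responsibility model, patching the guest operating system is still your job, but services like AWS Systems Manager can automate it.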

Elastic Scaling: EC2 allows you to easily scale up by launching additional instances or down by terminating instances based on demand, without the need for manual hardware provisioning.

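On-Demand Flexibility: You can change an instance's CPU and memory by stopping it, switching it to a different instance type, and starting it again, with only brief downtime. EBS storage volumes can even be resized while they stay attached to a running instance.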
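The stop, change type, start sequence can be scripted with the AWS CLI. The sketch below is a hypothetical helper (`resize_instance` is not an AWS command) that assumes a configured AWS CLI, an EBS-backed instance, and a target type compatible with the instance's AMI and architecture. `AWS_CLI` is a variable invented for this sketch: setting it to `echo` prints the commands instead of calling AWS.

```bash
# Hypothetical helper: change the instance type (CPU/memory) of an EBS-backed instance.
# Set AWS_CLI=echo to print the commands instead of calling AWS.
resize_instance() {
  local instance_id=$1 new_type=$2
  local aws=${AWS_CLI:-aws}

  # 1. Stop the instance and wait until it is fully stopped.
  "$aws" ec2 stop-instances --instance-ids "$instance_id" || return 1
  "$aws" ec2 wait instance-stopped --instance-ids "$instance_id" || return 1

  # 2. Change the instance type while the instance is stopped.
  "$aws" ec2 modify-instance-attribute --instance-id "$instance_id" \
    --instance-type "{\"Value\": \"$new_type\"}" || return 1

  # 3. Start it again and wait until it is running.
  "$aws" ec2 start-instances --instance-ids "$instance_id" || return 1
  "$aws" ec2 wait instance-running --instance-ids "$instance_id"
}

# Example (placeholder ID): resize_instance i-0123456789abcdef0 m5.large
```

Keep in mind that a stop/start usually gives the instance a new public IPv4 address unless you attach an Elastic IP.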

Example: In a traditional data center, if you were running 1000 VMs on physical hardware and wanted to update the operating system across all instances, you’d have to manage each one individually. With EC2, you can automate this process using services like AWS Systems Manager and save significant time.
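As a rough sketch of that automation, Systems Manager Run Command can send one shell script to every instance that carries a given tag. The helper below is hypothetical; it assumes Ubuntu or Debian instances running the SSM Agent with an instance profile that permits Systems Manager, and the tag key and value are placeholders. `AWS_CLI` is a variable invented for this sketch (set it to `echo` to print the command instead of running it).

```bash
# Hypothetical helper: update the OS on every instance tagged <key>=<value>
# using the AWS-RunShellScript document (commands run as root on each instance).
# Set AWS_CLI=echo to print the command instead of calling AWS.
update_fleet() {
  local tag_key=$1 tag_value=$2
  "${AWS_CLI:-aws}" ssm send-command \
    --document-name "AWS-RunShellScript" \
    --targets "Key=tag:${tag_key},Values=${tag_value}" \
    --parameters '{"commands":["apt-get update","DEBIAN_FRONTEND=noninteractive apt-get -y upgrade"]}' \
    --max-concurrency "10%" \
    --max-errors "5%" \
    --comment "OS update for ${tag_key}=${tag_value}"
}

# Example: update_fleet Environment Production
```

Limiting concurrency to a percentage of the fleet keeps most instances serving traffic while the rest are being patched.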

How EC2 Works: Elasticity and Cost Management

One of the standout features of EC2 is its elasticity. Let’s consider a real-world scenario to understand its importance.

Scenario: Imagine you’re running an online store. Traffic to your website is fairly predictable most of the year, except for certain peak times like holidays or sales events when traffic might increase tenfold. With a traditional server setup, you’d have to buy enough physical servers to handle the maximum traffic, meaning most of your servers would remain underutilized throughout the year.

With EC2 and EC2 Auto Scaling, however, you can scale your infrastructure automatically:

During normal periods, you might only need 2 or 3 EC2 instances running to serve your customers.

During peak traffic, you can scale up to 20 or 30 instances, ensuring smooth operations during the rush.

After the peak, you scale back down to 2 or 3 instances to avoid paying for idle resources.

This pay-as-you-go model ensures that you only pay for what you use, resulting in significant cost savings.
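A simple way to see the saving is to count instance-hours, which keeps the comparison independent of any particular price. The sketch below uses the instance counts from this scenario (3 normally, 30 at peak) and assumes, purely for illustration, that the peak lasts 240 hours a year (about ten days); the function name is hypothetical.

```bash
# Compare instance-hours per year for two strategies (illustrative numbers only):
#   fixed   - keep enough servers for the peak running all year
#   elastic - run the baseline most of the year and scale out only during the peak
HOURS_PER_YEAR=8760

instance_hours() {
  local baseline=$1 peak=$2 peak_hours=$3
  local fixed=$(( peak * HOURS_PER_YEAR ))
  local elastic=$(( baseline * (HOURS_PER_YEAR - peak_hours) + peak * peak_hours ))
  echo "fixed=$fixed elastic=$elastic"
}

instance_hours 3 30 240   # prints: fixed=262800 elastic=32760
```

Under these assumptions the elastic setup needs about one eighth of the instance-hours; real savings depend on your traffic pattern and the instance prices in your region.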

Key Concepts in Amazon EC2

Elasticity: This is one of EC2's most powerful features. You can dynamically adjust the amount of compute power you’re using, whether that means launching more instances to handle sudden traffic spikes or stopping instances during quiet periods.

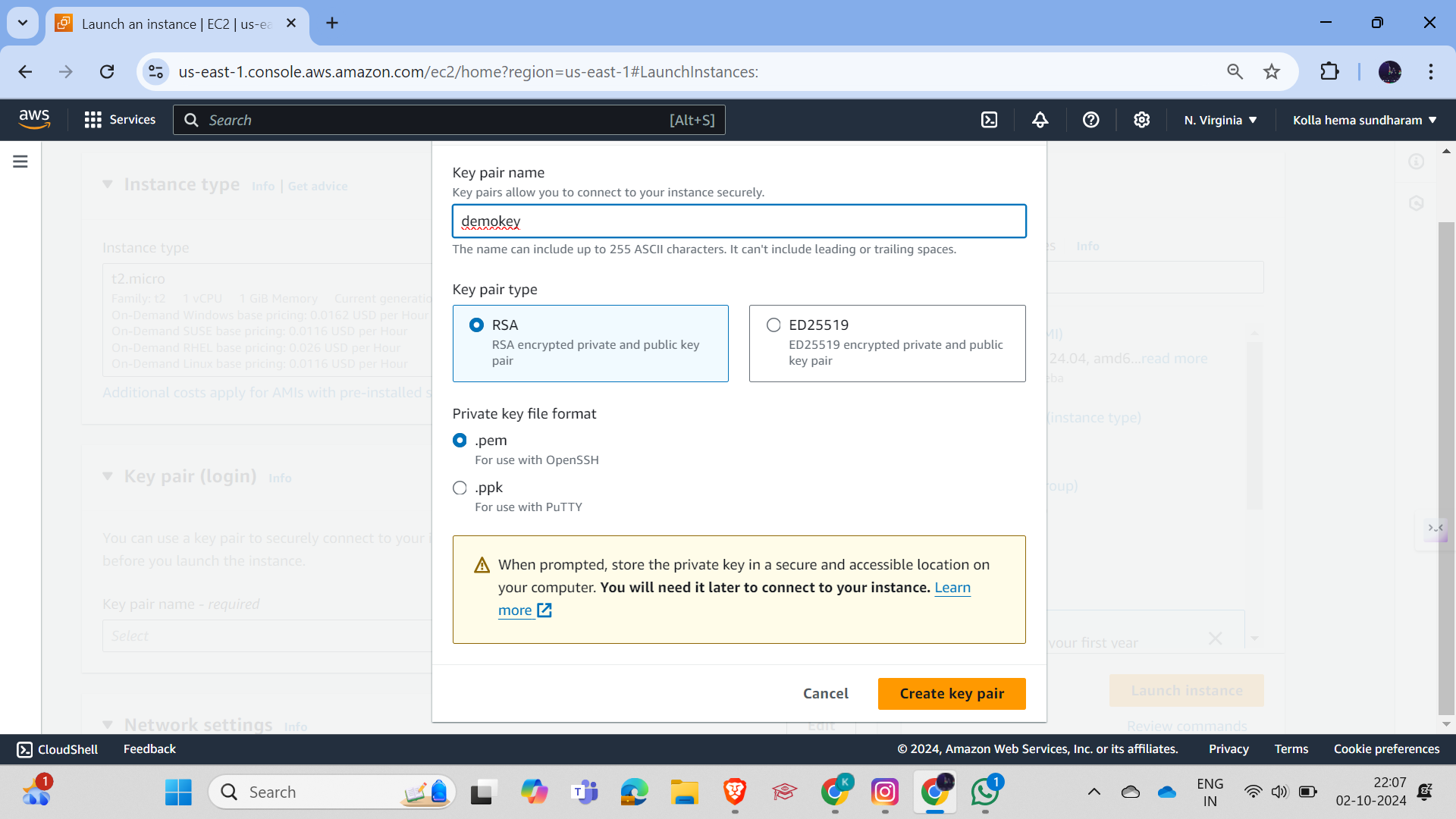
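Elasticity comes down to repeatedly answering "how many instances do I need right now?". EC2 Auto Scaling answers this for you through scaling policies; the hypothetical `desired_capacity` function below only illustrates the idea, assuming each instance handles a fixed number of requests per second (a figure you would measure for your own application) and clamping the result to the group's minimum and maximum size. `aws autoscaling set-desired-capacity` is a real AWS CLI command; the group name and the `AWS_CLI` variable are placeholders of this sketch.

```bash
# Hypothetical sizing rule: ceil(load / per_instance), clamped to [min, max].
desired_capacity() {
  local load=$1 per_instance=$2 min=$3 max=$4
  local n=$(( (load + per_instance - 1) / per_instance ))  # integer ceiling
  if [ "$n" -lt "$min" ]; then n=$min; fi
  if [ "$n" -gt "$max" ]; then n=$max; fi
  echo "$n"
}

# Apply the number to an Auto Scaling group.
# Set AWS_CLI=echo to print the command instead of calling AWS.
scale_to() {
  local group=$1 capacity=$2
  "${AWS_CLI:-aws}" autoscaling set-desired-capacity \
    --auto-scaling-group-name "$group" \
    --desired-capacity "$capacity"
}

# Example: 2,500 requests/s at an assumed 100 requests/s per instance -> 25 instances
#   scale_to my-store-asg "$(desired_capacity 2500 100 2 30)"
```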

Key Pair Security: EC2 uses key pairs (a public key and a matching private key) to secure SSH access to Linux instances and to decrypt the administrator password of Windows instances. When you create a key pair and launch an instance with it:

AWS stores the public key and places it on the instance (on Linux, in the default user's ~/.ssh/authorized_keys file).

You download the private key once and keep it secret; AWS does not keep a copy. When you connect, your SSH client uses the private key to prove that it holds the counterpart of the public key on the instance. Without the matching private key, access is denied.

Example: Think of the public key as the lock on the door of your house (the instance) and the private key as the only key that opens it. Anyone can see the lock, but only the holder of the private key can get in, so even if someone reaches the server remotely, they cannot log in without it.
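With the AWS CLI, you can create a key pair and save the private key in one step. The sketch below is a hypothetical helper that assumes a configured AWS CLI; the key name and host in the comments are placeholders, and the login user depends on the AMI (`ubuntu` for Ubuntu, `ec2-user` for Amazon Linux). `AWS_CLI` is a variable invented for this sketch.

```bash
# Hypothetical helper: create an EC2 key pair and save the private key as <name>.pem.
# Set AWS_CLI=echo to write the command into the file instead of calling AWS.
create_key_pair() {
  local name=$1
  local file="$name.pem"
  # Never overwrite an existing private key.
  if [ -e "$file" ]; then
    echo "refusing to overwrite $file" >&2
    return 1
  fi
  # AWS returns the private key only once, in the KeyMaterial field.
  "${AWS_CLI:-aws}" ec2 create-key-pair --key-name "$name" \
    --query 'KeyMaterial' --output text > "$file" || return 1
  # OpenSSH refuses private keys that other users can read.
  chmod 400 "$file"
}

# Example (placeholders):
#   create_key_pair my-key
#   ssh -i my-key.pem ubuntu@<public-ip>
```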

Types of EC2 Instances

Amazon EC2 offers a wide variety of instance types, each optimized for different workloads. This flexibility allows you to select the right instance for your specific application:

General Purpose Instances:

Use Case: Ideal for applications with balanced compute, memory, and networking needs.

Examples: t3, m5 instances.

Scenario: Running small web applications, development environments, and testing environments.

Compute Optimized Instances:

Use Case: Best for compute-bound applications that require high-performance processors.

Examples: c6g, c5 instances.

Scenario: Scientific modeling, data analytics, and gaming servers.

Memory Optimized Instances:

Use Case: Suited for memory-intensive applications such as large databases.

Examples: r5, x1 instances.

Scenario: In-memory databases like Redis or applications processing large datasets.

Storage Optimized Instances:

Use Case: For workloads that require high, sequential read and write access to large datasets on local storage.

Examples: i3, d2 instances.

Scenario: Data warehousing applications, distributed file systems, and Hadoop workloads.

Accelerated Computing Instances:

Use Case: Ideal for applications that require hardware accelerators like GPUs.

Examples: p4, g4 instances.

Scenario: Machine learning, video rendering, and 3D modeling.
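Before picking a type, you can compare exact vCPU and memory figures from the command line. This sketch wraps the real `aws ec2 describe-instance-types` command in a hypothetical `compare_instance_types` helper; the sizes such as `.large` in the example are assumptions, picked from the families listed above, and `AWS_CLI` is a variable invented for this sketch.

```bash
# Hypothetical helper: print vCPUs and memory (MiB) for the given instance types.
# Set AWS_CLI=echo to print the command instead of calling AWS.
compare_instance_types() {
  if [ "$#" -eq 0 ]; then
    echo "usage: compare_instance_types TYPE..." >&2
    return 1
  fi
  "${AWS_CLI:-aws}" ec2 describe-instance-types \
    --instance-types "$@" \
    --query 'InstanceTypes[].[InstanceType,VCpuInfo.DefaultVCpus,MemoryInfo.SizeInMiB]' \
    --output table
}

# Example: one type from several of the families above
#   compare_instance_types t3.micro m5.large c5.large r5.large i3.large
```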

Regions and Availability Zones

AWS operates data centers around the globe, organized into regions. Each region contains multiple Availability Zones (AZs): physically separated groups of one or more data centers within the region that provide redundancy and high availability.

Why this matters:

Latency: Deploying your instances in the region closest to your users reduces latency and enhances performance.

Redundancy: You can distribute your EC2 instances across multiple AZs to ensure that your application remains available even if one AZ goes down.

Example: If you're hosting a global web application, you might launch instances in regions in North America, Europe, and Asia (for example, us-east-1, eu-west-1, and ap-southeast-1) so that users experience low latency wherever they access your app from.
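Spreading instances across AZs usually means alternating between them. EC2 Auto Scaling does this automatically when a group spans subnets in several AZs; the hypothetical `az_for_index` function below only shows the round-robin idea. The AZ names are examples from the `us-east-1` region, `list_azs` wraps the real `aws ec2 describe-availability-zones` command, and `AWS_CLI` is a variable invented for this sketch.

```bash
# Round-robin placement: instance N (0-based) goes to AZ number N mod (number of AZs).
az_for_index() {
  local index=$1
  shift
  [ "$#" -gt 0 ] || return 1
  shift $(( index % $# ))   # drop the AZs before the chosen one
  echo "$1"
}

# List the AZ names available in a region.
# Set AWS_CLI=echo to print the command instead of calling AWS.
list_azs() {
  "${AWS_CLI:-aws}" ec2 describe-availability-zones --region "$1" \
    --query 'AvailabilityZones[].ZoneName' --output text
}

# Plan six instances across three example AZs: a, b, c, a, b, c
for i in 0 1 2 3 4 5; do
  echo "instance-$i -> $(az_for_index "$i" us-east-1a us-east-1b us-east-1c)"
done
```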

Security in EC2

Amazon EC2 provides several layers of security to protect your instances:

Security Groups: These act like virtual firewalls, allowing you to control inbound and outbound traffic to your instances.

IAM Roles: With Identity and Access Management (IAM), you can assign permissions to instances, controlling which AWS services they can access.

Virtual Private Cloud (VPC): This feature lets you launch EC2 instances in a logically isolated network, enhancing security by controlling the communication between instances.
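The first two layers can be configured from the AWS CLI. The sketch below uses the real `authorize-security-group-ingress` and `associate-iam-instance-profile` commands inside hypothetical helpers; the group ID, CIDR range, instance ID, profile name, and the `AWS_CLI` variable are placeholders of this sketch. As a design choice, `allow_ssh_from` refuses to open SSH to the whole internet (`0.0.0.0/0`).

```bash
# Set AWS_CLI=echo to print the commands instead of calling AWS.

# Security group: allow inbound SSH (TCP 22) only from a given CIDR range.
allow_ssh_from() {
  local group_id=$1 cidr=$2
  if [ "$cidr" = "0.0.0.0/0" ]; then
    echo "refusing to open SSH to the whole internet" >&2
    return 1
  fi
  "${AWS_CLI:-aws}" ec2 authorize-security-group-ingress \
    --group-id "$group_id" --protocol tcp --port 22 --cidr "$cidr"
}

# IAM: attach an existing instance profile (a container for an IAM role) to an
# instance, so software on it gets temporary credentials instead of stored keys.
attach_instance_profile() {
  local instance_id=$1 profile_name=$2
  "${AWS_CLI:-aws}" ec2 associate-iam-instance-profile \
    --instance-id "$instance_id" --iam-instance-profile "Name=$profile_name"
}

# Examples (placeholders):
#   allow_ssh_from sg-0123456789abcdef0 203.0.113.10/32
#   attach_instance_profile i-0123456789abcdef0 my-app-profile
```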

Real-World Use Case of EC2: Cost Management in Action

Imagine you're running an online video rendering service. Video rendering is compute-heavy and its demand arrives in bursts. Here's how EC2 can help:

During periods of low demand, you can reduce your compute resources, paying only for the minimal usage.

When users submit large rendering jobs, EC2 allows you to launch compute-optimized instances to handle the intensive workload.

Once the job is complete, you can shut down the instances, ensuring cost efficiency.

This elasticity ensures that you aren’t over-provisioning resources during idle periods, saving costs while maintaining performance when it’s needed most.
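The launch-then-terminate cycle can be sketched with the AWS CLI. The hypothetical helpers below launch tagged compute-optimized workers and later terminate every running instance with that tag; the AMI ID, the `c5.2xlarge` type, the `Role=render-worker` tag, and the `AWS_CLI` variable are assumptions for illustration. A real launch would usually also pass a subnet and security group instead of relying on the defaults.

```bash
# Set AWS_CLI=echo to print the commands instead of calling AWS.
WORKER_TAG_KEY=Role
WORKER_TAG_VALUE=render-worker

# Launch COUNT compute-optimized workers from AMI_ID and print their instance IDs.
launch_render_workers() {
  local ami_id=$1 count=$2
  "${AWS_CLI:-aws}" ec2 run-instances \
    --image-id "$ami_id" --instance-type c5.2xlarge --count "$count" \
    --tag-specifications "ResourceType=instance,Tags=[{Key=$WORKER_TAG_KEY,Value=$WORKER_TAG_VALUE}]" \
    --query 'Instances[].InstanceId' --output text
}

# Terminate every running worker once the rendering job is finished.
terminate_render_workers() {
  local aws=${AWS_CLI:-aws} ids
  ids=$("$aws" ec2 describe-instances \
    --filters "Name=tag:$WORKER_TAG_KEY,Values=$WORKER_TAG_VALUE" \
              "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].InstanceId' --output text) || return 1
  if [ -z "$ids" ]; then
    echo "no running render workers" >&2
    return 0
  fi
  # IDs are whitespace-separated, so word splitting is intended here.
  # shellcheck disable=SC2086
  "$aws" ec2 terminate-instances --instance-ids $ids
}
```

Tagging at launch lets the cleanup step find the workers without tracking instance IDs between the two calls.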

How to Launch an EC2 Instance: Step-by-Step Guide

Let’s go through the process of launching your first EC2 instance:

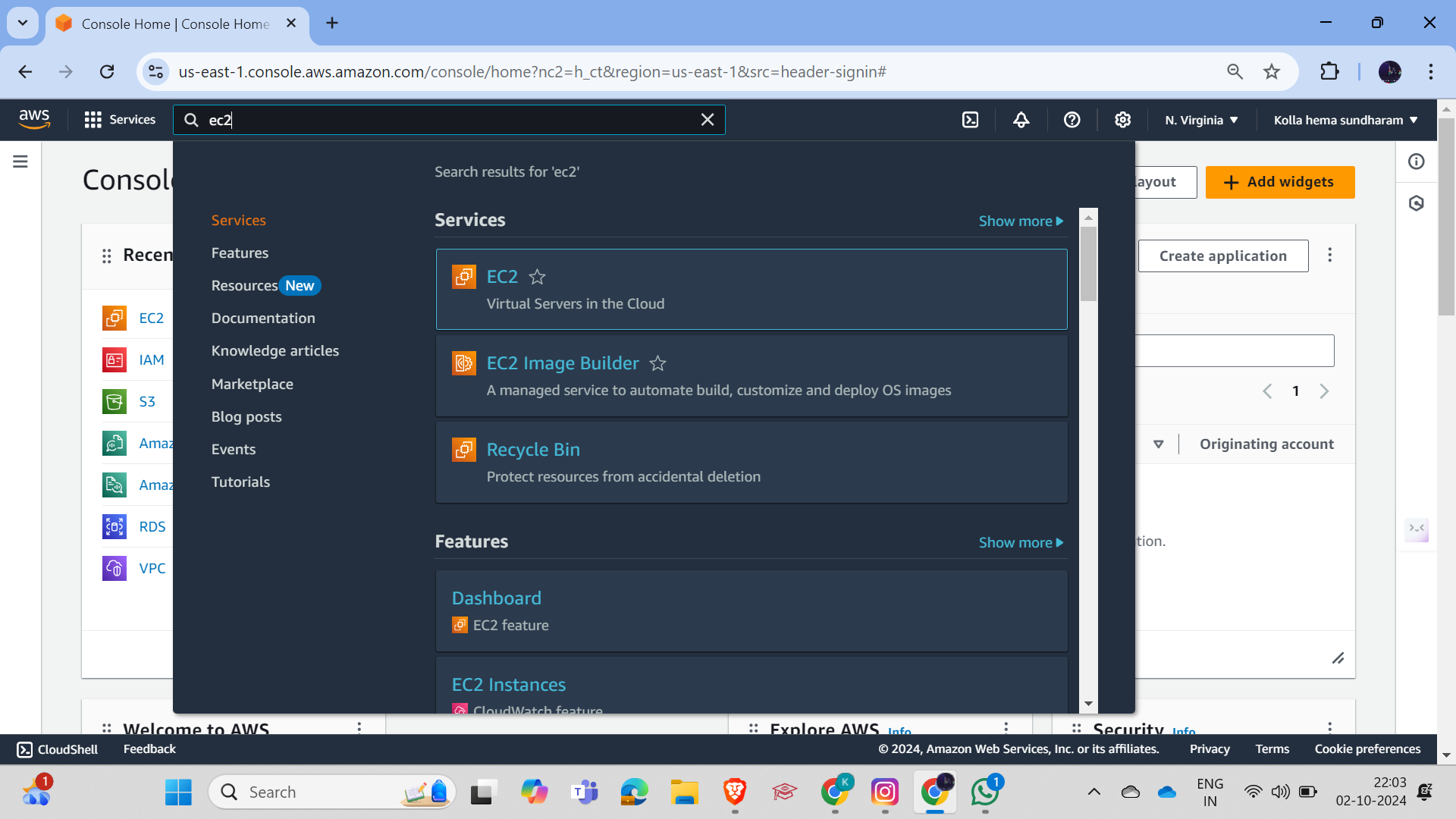

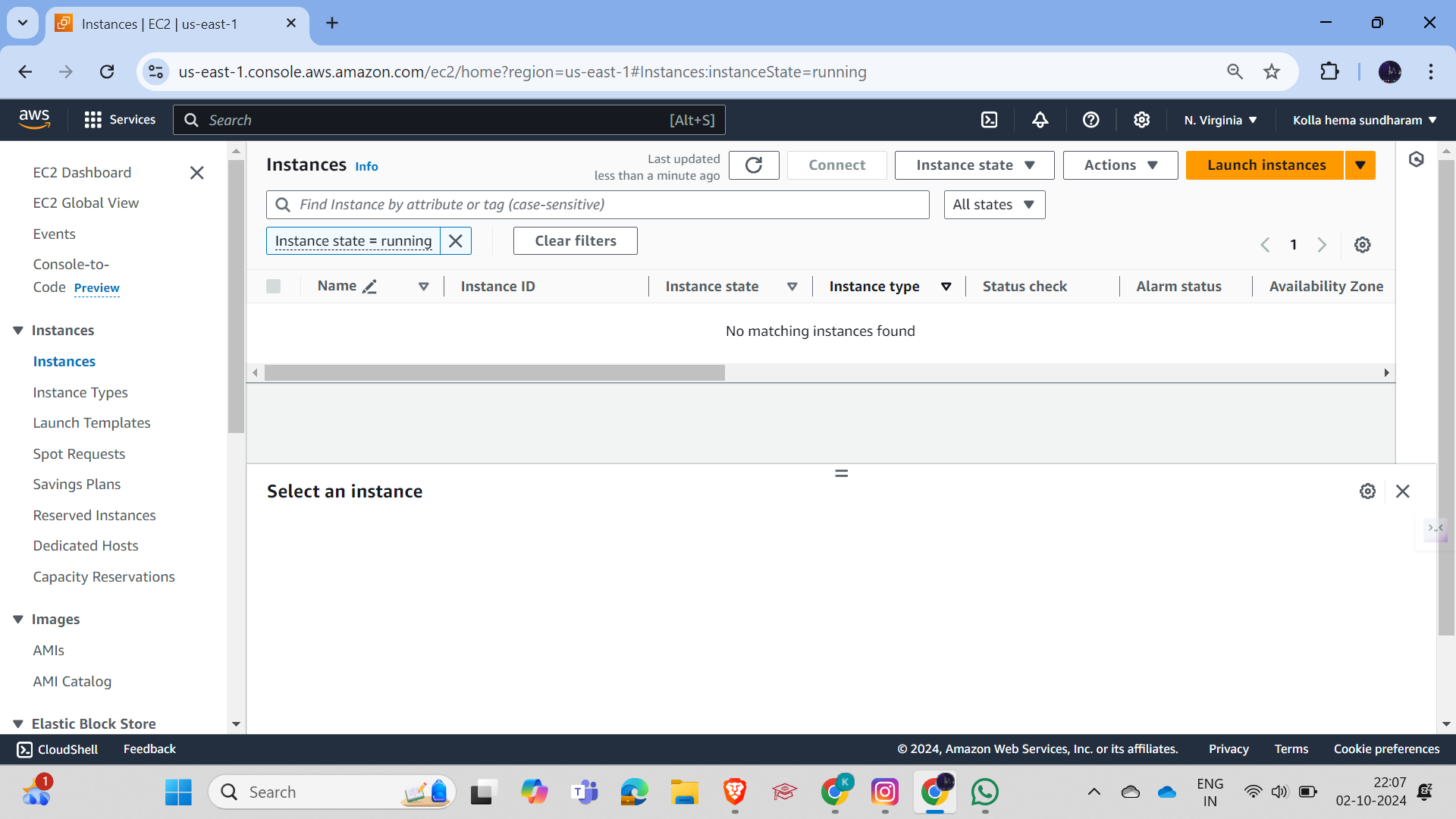

Log in to the AWS Management Console:

Navigate to the EC2 dashboard.


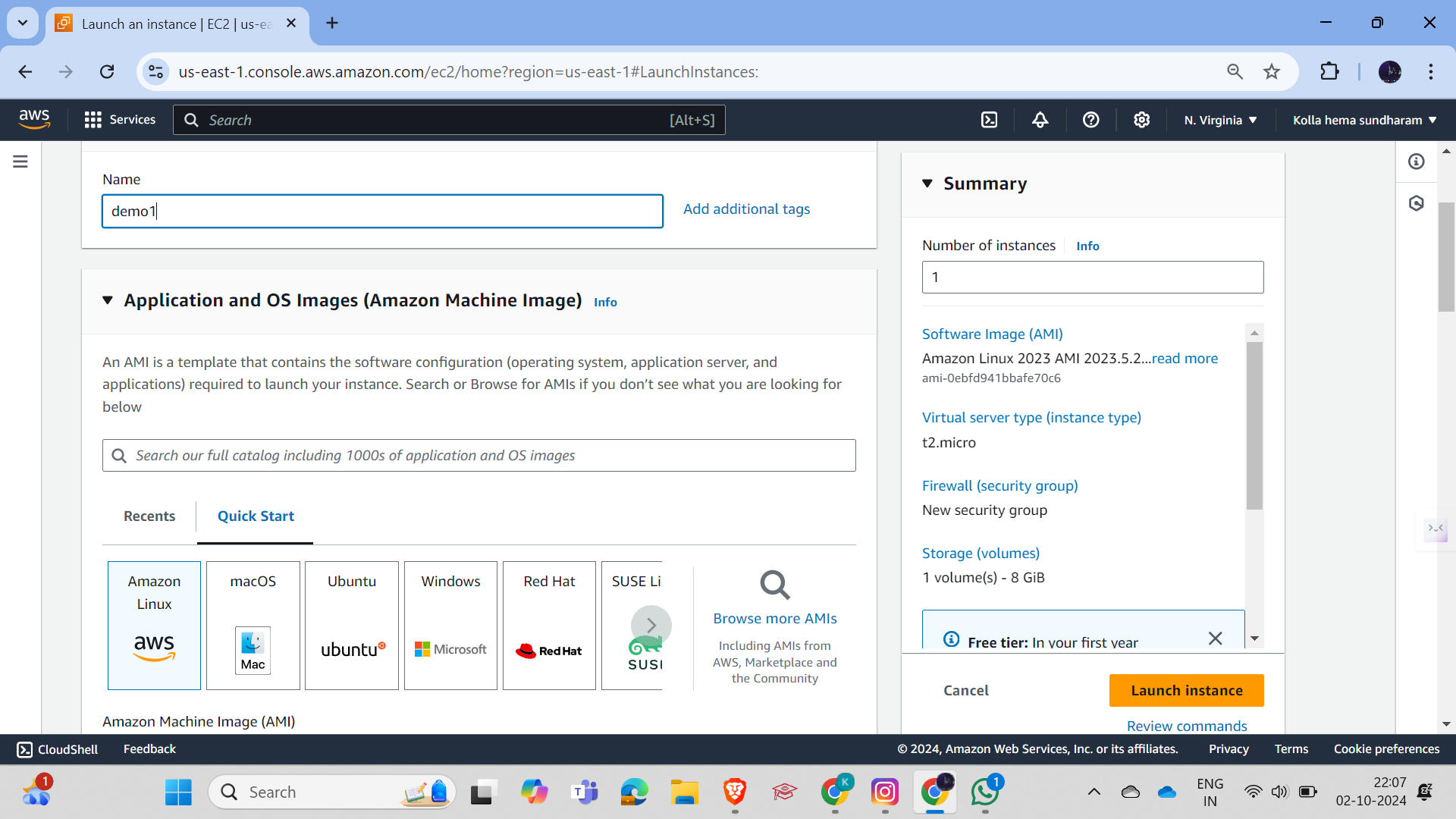

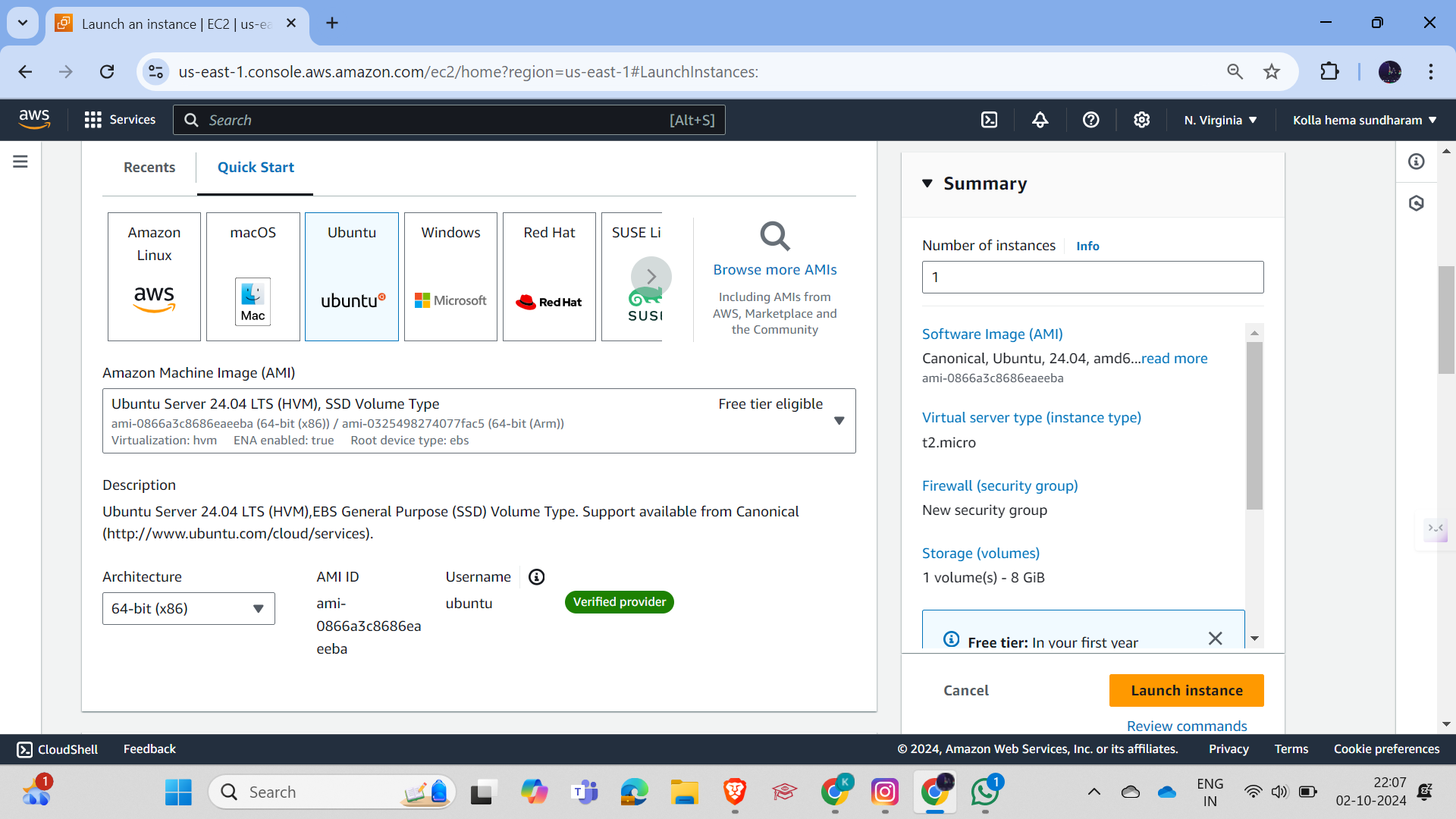

Choose an AMI (Amazon Machine Image):

Select an AMI, which is a template for your instance, including the OS and any pre-configured software.


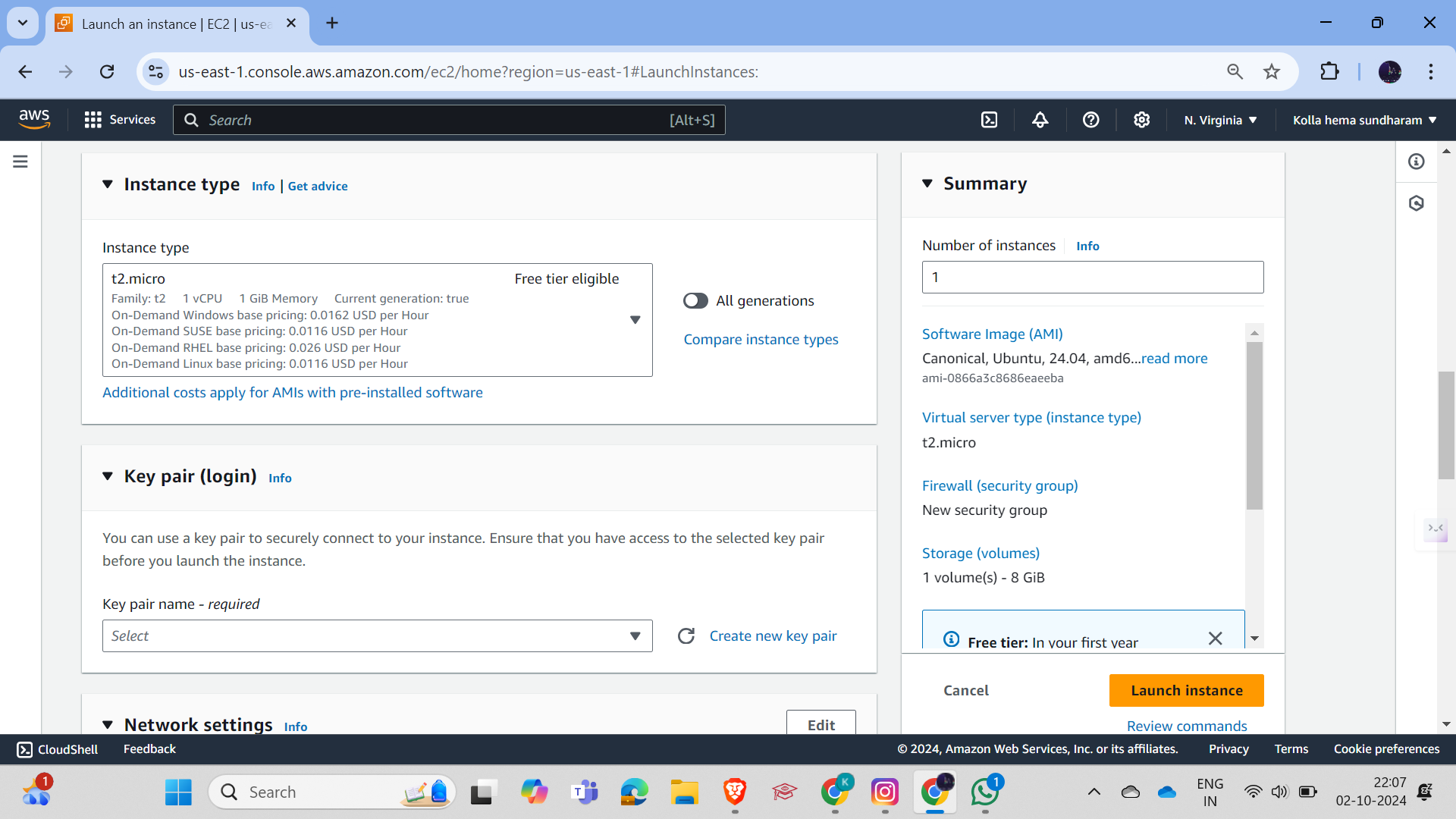

Choose an Instance Type:

Based on your needs, select an instance type. For example, for general-purpose use, you might choose a t2.micro instance.

Configure Instance Details:

Decide the number of instances, networking options (VPC, subnet), and IAM roles.


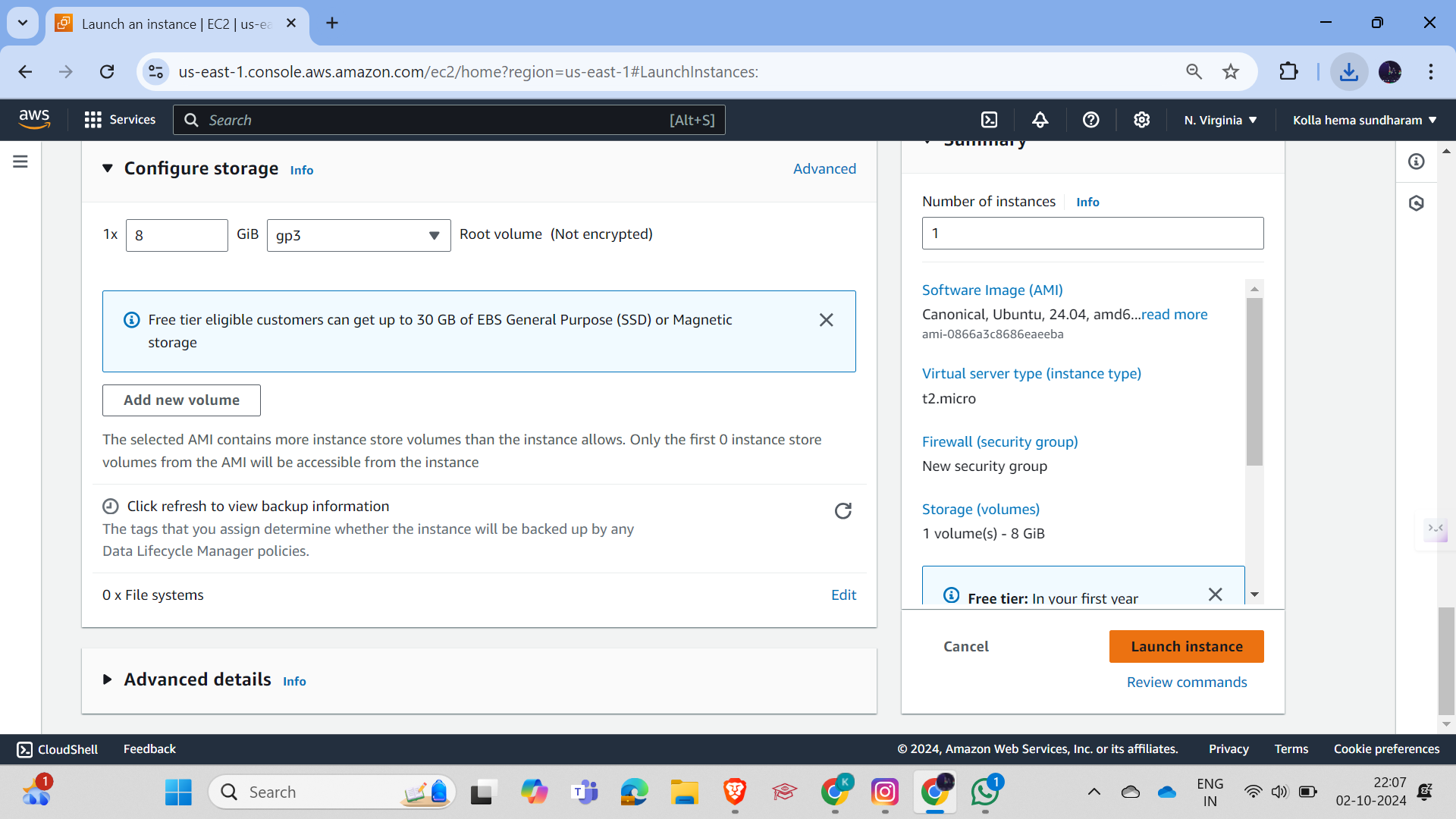

Add Storage:

Customize the storage options, such as the type and size of the Elastic Block Store (EBS) volumes attached to the instance.


Configure Security Group:

Define the inbound and outbound rules for the instance by setting up a security group.


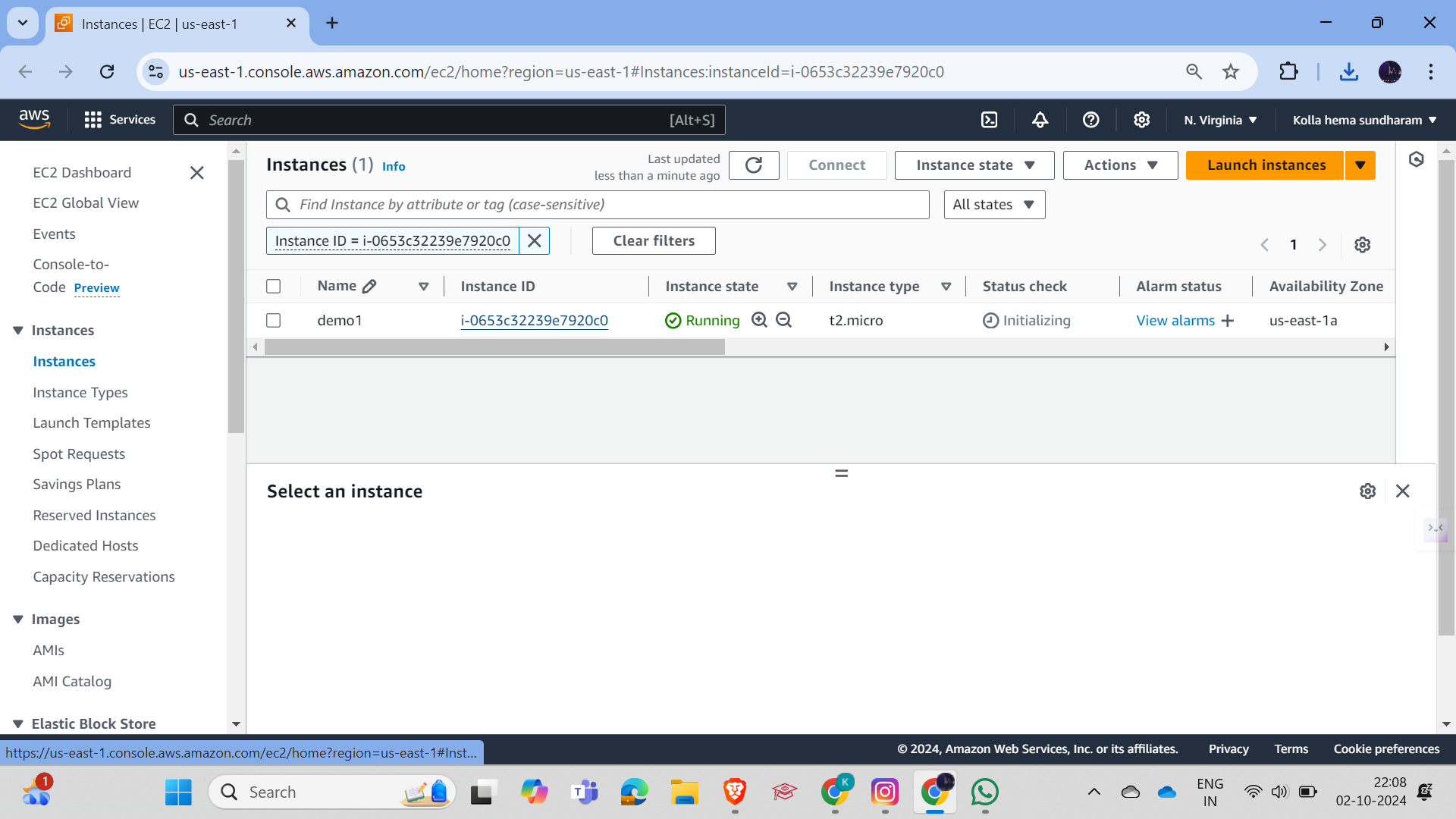

Launch the Instance:

After reviewing all settings, launch your instance.

You'll be prompted to download the private key (.pem file), which you need to access your instance securely later.

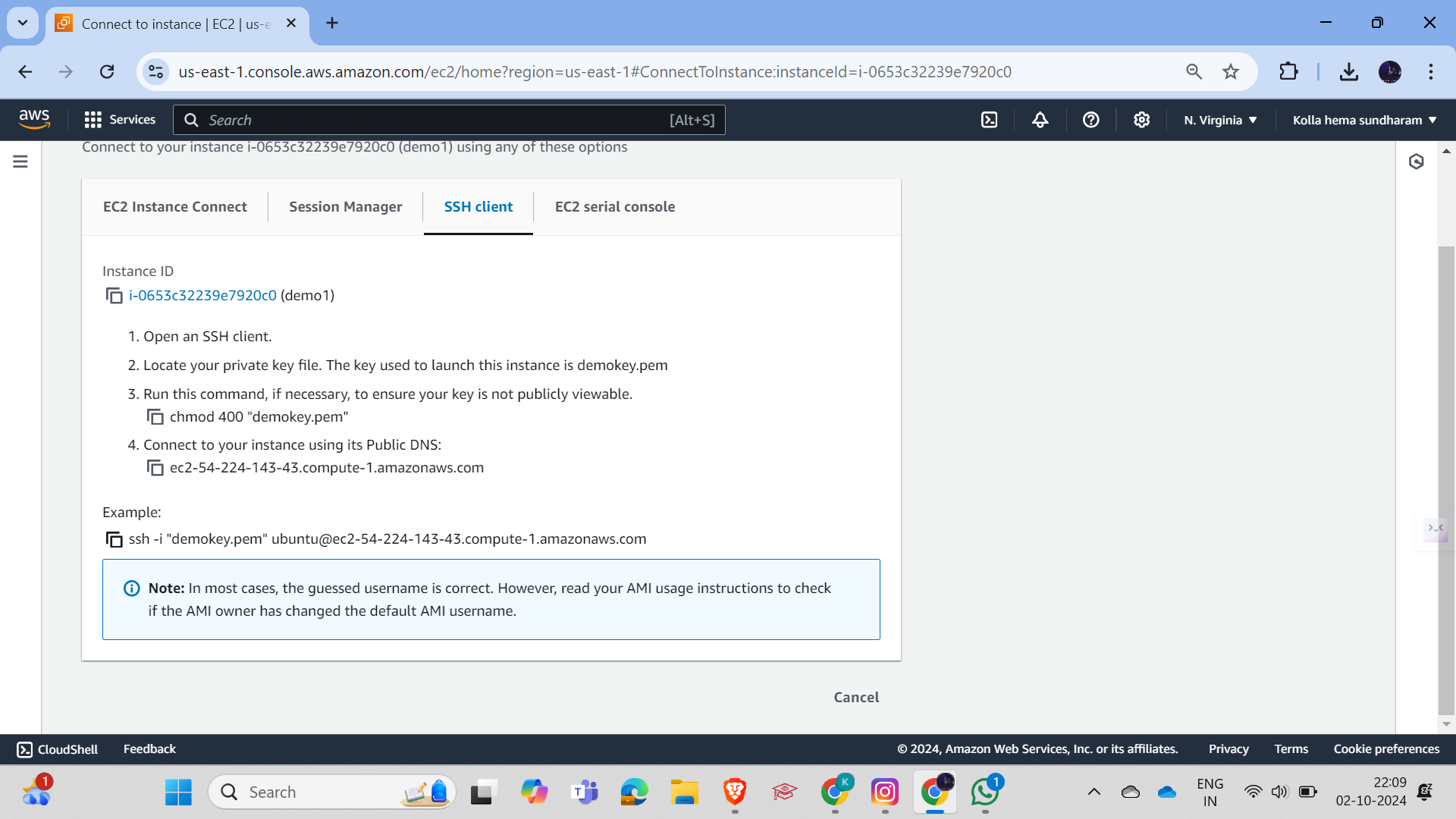

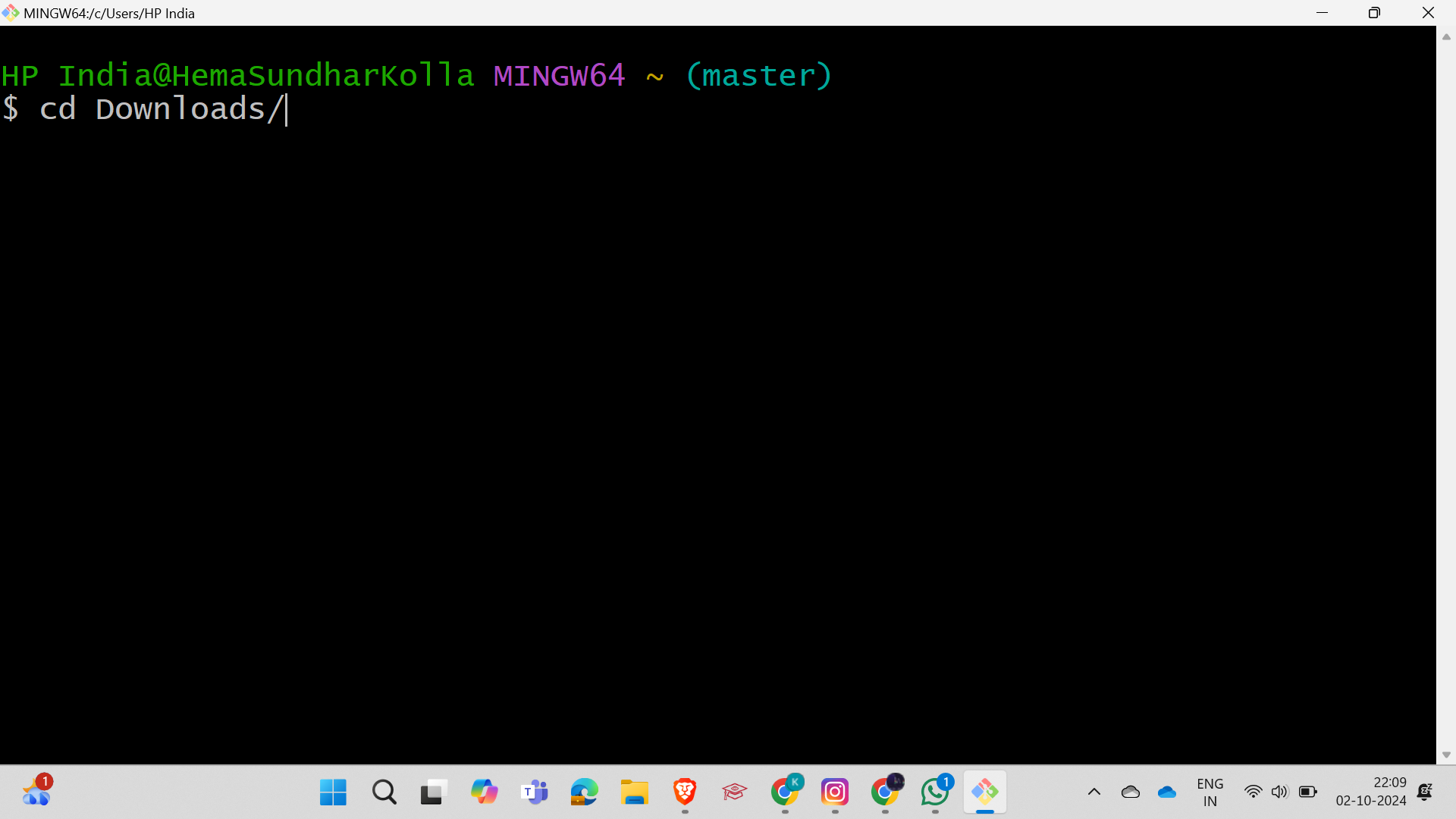

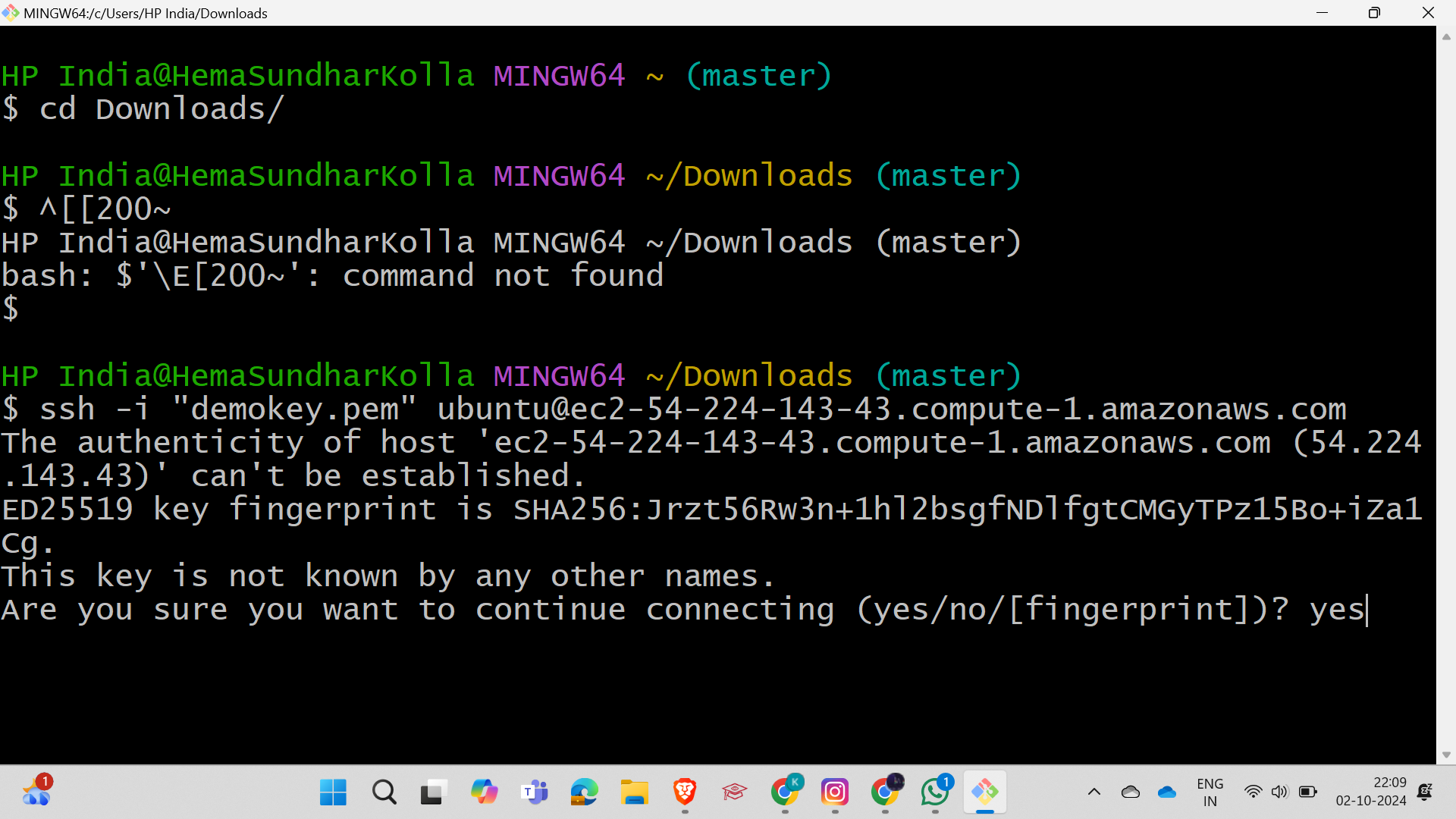

Connect to Your EC2 Instance:

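Use an SSH client (for Linux instances) or an RDP client (for Windows instances) to connect. For SSH, you provide the private key file and the instance's public IP address; for RDP, you first use the private key to decrypt the Windows administrator password in the EC2 console.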


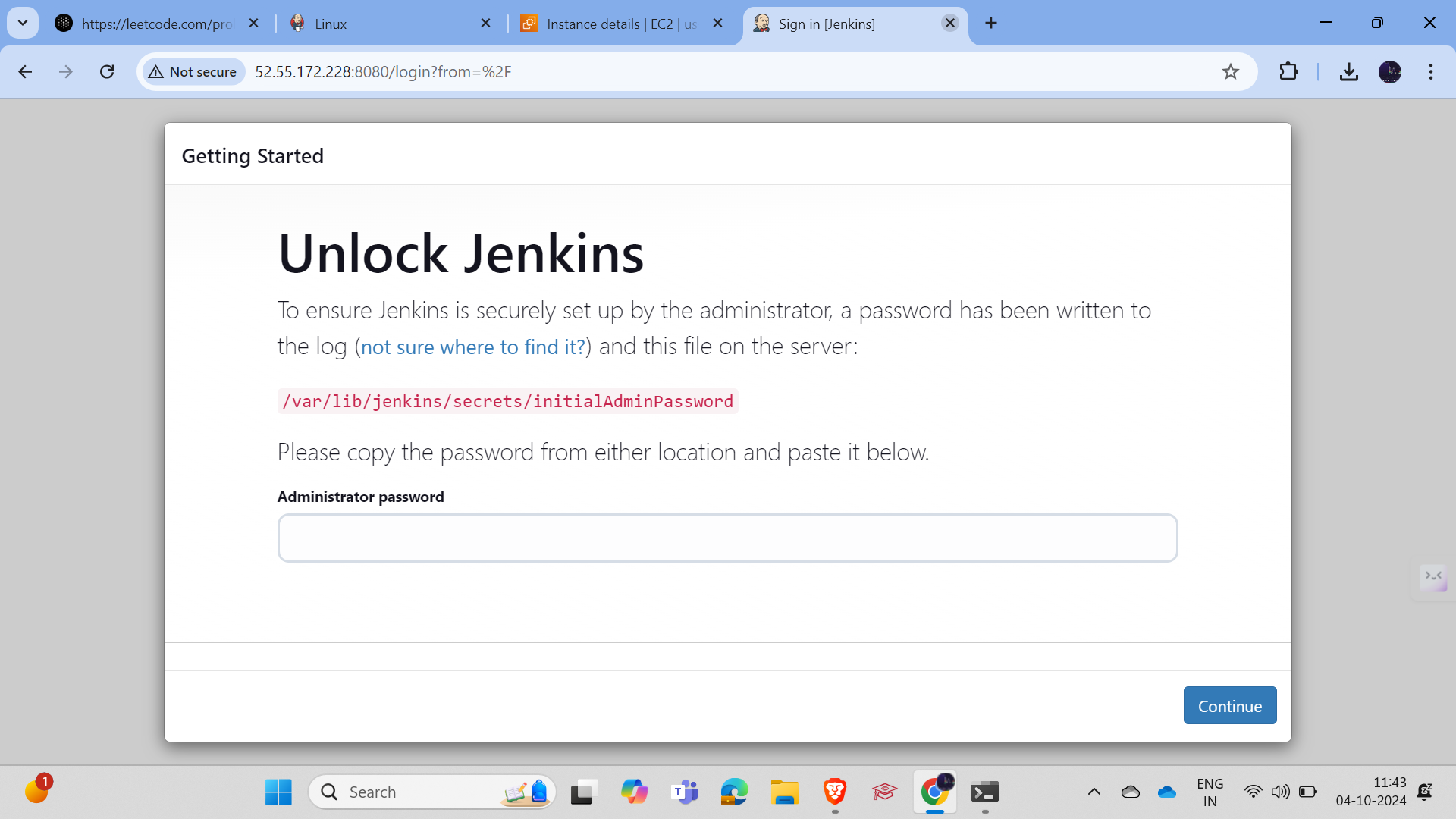
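The console steps can also be condensed into a single AWS CLI call, which helps once you launch instances often. The sketch below is a hypothetical `launch_instance` helper around the real `aws ec2 run-instances`, `aws ec2 wait instance-running`, and `aws ec2 describe-instances` commands; every ID and the key name are placeholders, the root volume keeps the AMI's default size, the `ubuntu` login user assumes an Ubuntu AMI, and `AWS_CLI` is a variable invented for this sketch.

```bash
# Hypothetical helper mirroring the console steps: AMI, instance type, network,
# key pair and security group. Prints the new instance ID.
# Set AWS_CLI=echo to print the command instead of calling AWS.
launch_instance() {
  local ami_id=$1 instance_type=$2 key_name=$3 sg_id=$4 subnet_id=$5
  "${AWS_CLI:-aws}" ec2 run-instances \
    --image-id "$ami_id" \
    --instance-type "$instance_type" \
    --key-name "$key_name" \
    --security-group-ids "$sg_id" \
    --subnet-id "$subnet_id" \
    --count 1 \
    --query 'Instances[0].InstanceId' --output text
}

# Wait until the instance is running, then print its public IPv4 address.
public_ip_of() {
  local aws=${AWS_CLI:-aws} instance_id=$1
  "$aws" ec2 wait instance-running --instance-ids "$instance_id" || return 1
  "$aws" ec2 describe-instances --instance-ids "$instance_id" \
    --query 'Reservations[0].Instances[0].PublicIpAddress' --output text
}

# Example (all values are placeholders):
#   id=$(launch_instance ami-0123456789abcdef0 t2.micro my-key sg-0123456789abcdef0 subnet-0123456789abcdef0)
#   ssh -i my-key.pem ubuntu@"$(public_ip_of "$id")"
```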

As an example, the following commands install Jenkins on an Ubuntu or Debian instance after you connect:

sudo apt update

sudo apt install openjdk-17-jdk -y

sudo wget -O /usr/share/keyrings/jenkins-keyring.asc https://pkg.jenkins.io/debian/jenkins.io-2023.key

echo "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian binary/" | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt update

sudo apt install jenkins -y

sudo systemctl start jenkins

sudo systemctl enable jenkins

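Once you've run these commands on the EC2 instance, Jenkins listens on port 8080 by default. After the instance's security group allows inbound traffic on that port, open http://<public-ip>:8080 in a browser to see the Jenkins setup page.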
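Two small follow-up steps are needed before the Jenkins setup page is usable: opening Jenkins's default port 8080 in the security group, and reading the one-time administrator password that the Debian/Ubuntu package writes to `/var/lib/jenkins/secrets/initialAdminPassword`. The helpers below are a hypothetical sketch; the group ID, CIDR range, and the `AWS_CLI` variable are placeholders.

```bash
# Run on your workstation: allow the Jenkins web UI (TCP 8080) from one CIDR range.
# Set AWS_CLI=echo to print the command instead of calling AWS.
open_jenkins_port() {
  local group_id=$1 cidr=$2
  "${AWS_CLI:-aws}" ec2 authorize-security-group-ingress \
    --group-id "$group_id" --protocol tcp --port 8080 --cidr "$cidr"
}

# Run on the instance: check that the service is active, then print the
# one-time password the setup page asks for.
jenkins_initial_password() {
  systemctl is-active --quiet jenkins || { echo "jenkins is not running" >&2; return 1; }
  sudo cat /var/lib/jenkins/secrets/initialAdminPassword
}

# Examples (placeholders):
#   open_jenkins_port sg-0123456789abcdef0 203.0.113.10/32
#   jenkins_initial_password
```

Restricting port 8080 to your own address keeps the unconfigured Jenkins instance off the public internet until setup is complete.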

Conclusion

Amazon EC2 offers a versatile, scalable, and cost-effective solution for hosting applications and handling variable workloads. By providing an easy-to-use interface and powerful features like auto-scaling, on-demand pricing, and flexible configuration, EC2 empowers businesses to focus on innovation rather than infrastructure management. Whether you're running a small blog, a global e-commerce platform, or a high-performance computing cluster, EC2’s elasticity and flexibility ensure your infrastructure grows with your needs.

As you experiment with EC2, you’ll discover how it fits into your broader cloud strategy, allowing you to optimize costs, increase performance, and scale effortlessly.


Written by

Hema Sundharam Kolla


I'm a passionate Computer Science student specializing in DevOps and cloud technologies. I've completed several certifications, including AWS Cloud Practitioner and Google’s Generative AI badge, and I'm currently exploring both AWS and Azure to build scalable, efficient CI/CD pipelines. Through my blog posts, I share insights on cloud computing, DevOps best practices, and my learning journey in the tech space. I enjoy solving real-world problems with emerging technologies and am developing a platform to offer career advice to students. Outside of tech, I'm a competitive powerlifter, constantly striving to improve and inspire others in fitness. I'm always eager to connect with like-minded individuals and collaborate on projects that bridge technology and personal growth.