Guide to Building a Vector Search UI Using ReactiveSearch and OpenSearch 2.17

Siddharth Kothari

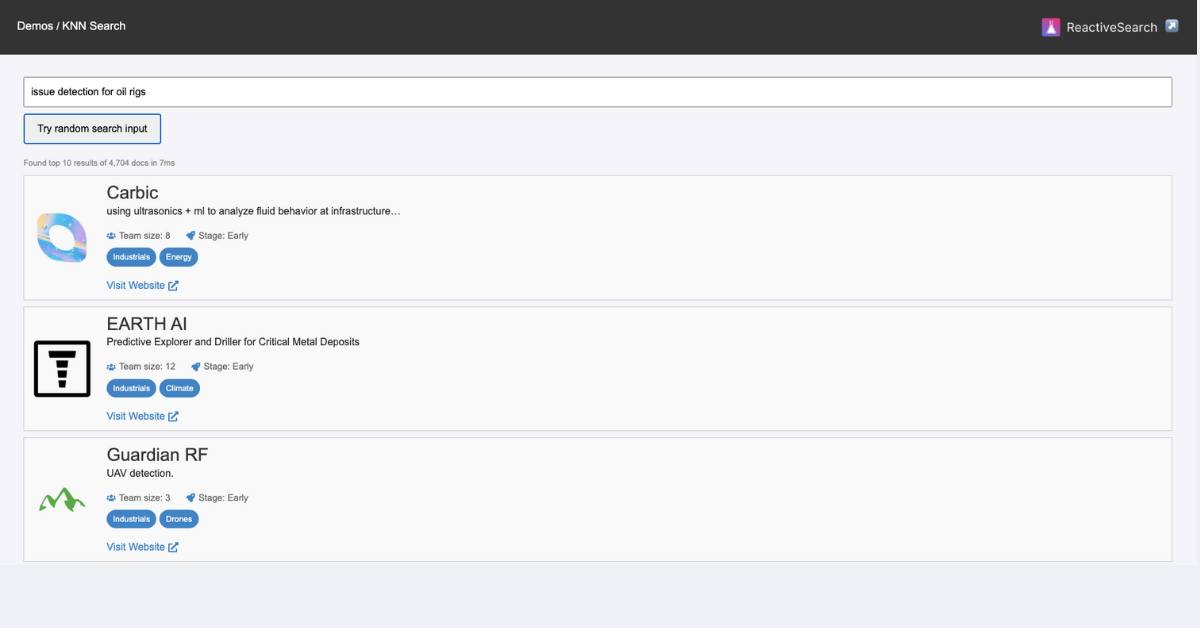

How often have you looked for something without knowing what it's called? Vector search (also known as KNN search) solves this problem by letting you search for what you mean.

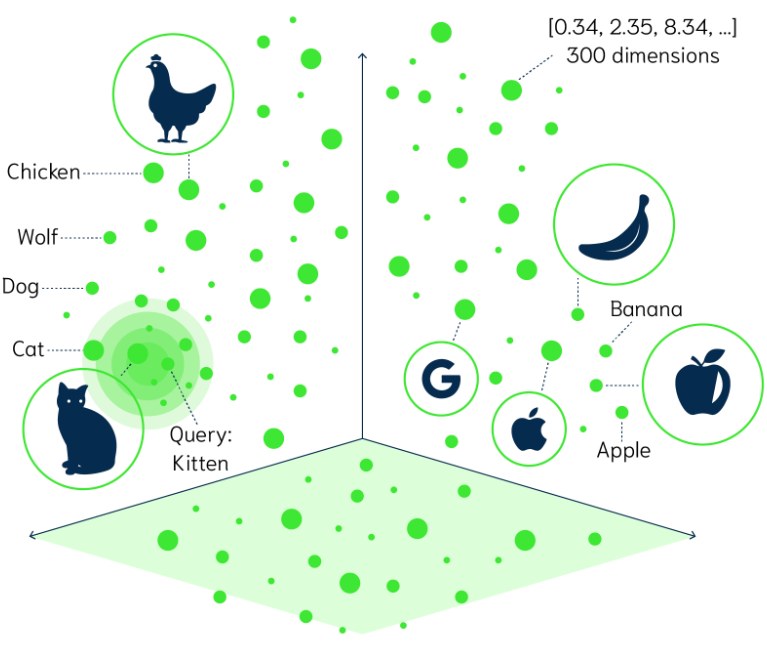

Internally, a vector search engine stores a numerical representation, called an embedding, of each item of data, whether it is text, an image, or another type of media. These embeddings capture the underlying meaning and context of the data rather than just the exact words or pixels. When you run a vector search, the engine compares the embedding of your query to the embeddings of the stored items and returns the results that are most semantically similar, even if they don't contain the exact words or elements you used in your search.
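To make the comparison step concrete, here is a minimal sketch of ranking items by cosine similarity. It is an illustration only: the three-dimensional vectors, the item ids, and the function names are made up for this example, and a real engine such as OpenSearch typically uses approximate nearest-neighbor indexes rather than the brute-force scan shown here.

```javascript
// Cosine similarity between two equal-length embedding vectors.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same length');
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force k-nearest-neighbor ranking (illustrative, not how an engine indexes).
function nearestNeighbors(queryVector, items, k) {
  return items
    .map((item) => ({ id: item.id, score: cosineSimilarity(queryVector, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Toy 3-dimensional "embeddings"; real embeddings have far more dimensions.
const items = [
  { id: 'search-api', vector: [0.9, 0.1, 0.0] },
  { id: 'food-delivery', vector: [0.0, 0.2, 0.9] },
  { id: 'vector-db', vector: [0.8, 0.3, 0.1] },
];

const top = nearestNeighbors([1.0, 0.2, 0.0], items, 2);
console.log(top.map((t) => t.id)); // [ 'search-api', 'vector-db' ]
```

The two items whose vectors point in nearly the same direction as the query rank first, regardless of any shared keywords.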

This means you can search with vague descriptions, synonyms, or even related concepts, and still find the right match, because vector search matches on what you're looking for, not just the exact terms you use. This makes it an ideal choice for product discovery, recommendation systems, and natural-language and voice search use cases.

The Ingredients

In this post, I will show how to build a vector search UI using ReactiveSearch for the search UI, OpenSearch as the vector search engine, and OpenAI embeddings API for creating the numerical vector representation.

ReactiveSearch offers an API for authoring search endpoints as well as an open-source UI kit for building search UIs with React, Vue, and Flutter.

OpenSearch is a versatile open-source keyword and vector search engine. The latest OpenSearch 2.17.0 release introduces several vector search enhancements.

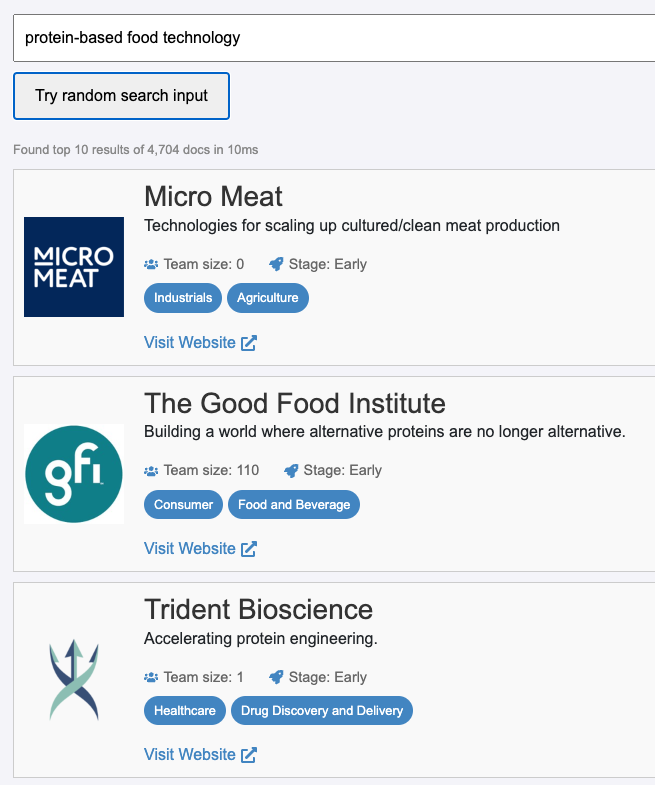

The vector search UI allows searching from over 5,000 Y-Combinator companies. You can try out the live demo at: https://oss-demos.reactivesearch.io/knn.

Create Search Cluster

We start by creating a search cluster with ReactiveSearch Cloud at https://reactivesearch.io. This will create a deployment of the OpenSearch engine and the ReactiveSearch API service.

If you already have an OpenSearch cluster hosted, you can deploy the ReactiveSearch API service using one of these docker-compose files.

Indexing Data

Next, we index the dataset of over 5,000 Y-Combinator companies into the ReactiveSearch cluster (with the OpenSearch engine configured).

If you already have an OpenSearch index, you can use the KNN re-index utility https://github.com/appbaseio/ai-scripts/tree/master/knn_reindex to generate embeddings based on your existing data and mappings, and then re-index the data. It uses the text-embedding-3-small model from OpenAI to create the embeddings; you can change the model by forking the utility.
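For readers indexing from scratch, the sketch below shows roughly what the two building blocks of that process look like: an OpenSearch index body with a `knn_vector` field, and a request to the OpenAI embeddings endpoint. It is not the re-index utility's actual code. The function names are hypothetical, the `name` and `one_liner` text mappings are assumptions, and the dimension of 1536 assumes the default output size of text-embedding-3-small.

```javascript
// Assumption: text-embedding-3-small returns 1536-dimensional vectors by default.
const EMBEDDING_DIMENSION = 1536;

// Index body that enables k-NN and declares the vector field used in this post.
// Send it as the body of `PUT /yc-companies-dataset` when creating the index.
function buildKnnIndexBody(dimension = EMBEDDING_DIMENSION) {
  return {
    settings: { index: { knn: true } },
    mappings: {
      properties: {
        name: { type: 'text' }, // assumed mapping
        one_liner: { type: 'text' }, // assumed mapping
        vector_data: { type: 'knn_vector', dimension },
      },
    },
  };
}

// Request description for the OpenAI embeddings endpoint.
// Pass the result to fetch(url, options).
function buildEmbeddingRequest(text, apiKey) {
  return {
    url: 'https://api.openai.com/v1/embeddings',
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model: 'text-embedding-3-small', input: text }),
    },
  };
}

const indexBody = buildKnnIndexBody();
console.log(JSON.stringify(indexBody.mappings.properties.vector_data));
```

The embedding for a single input is returned in the response JSON under `data[0].embedding`; that array is what gets stored in the `vector_data` field of each document.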

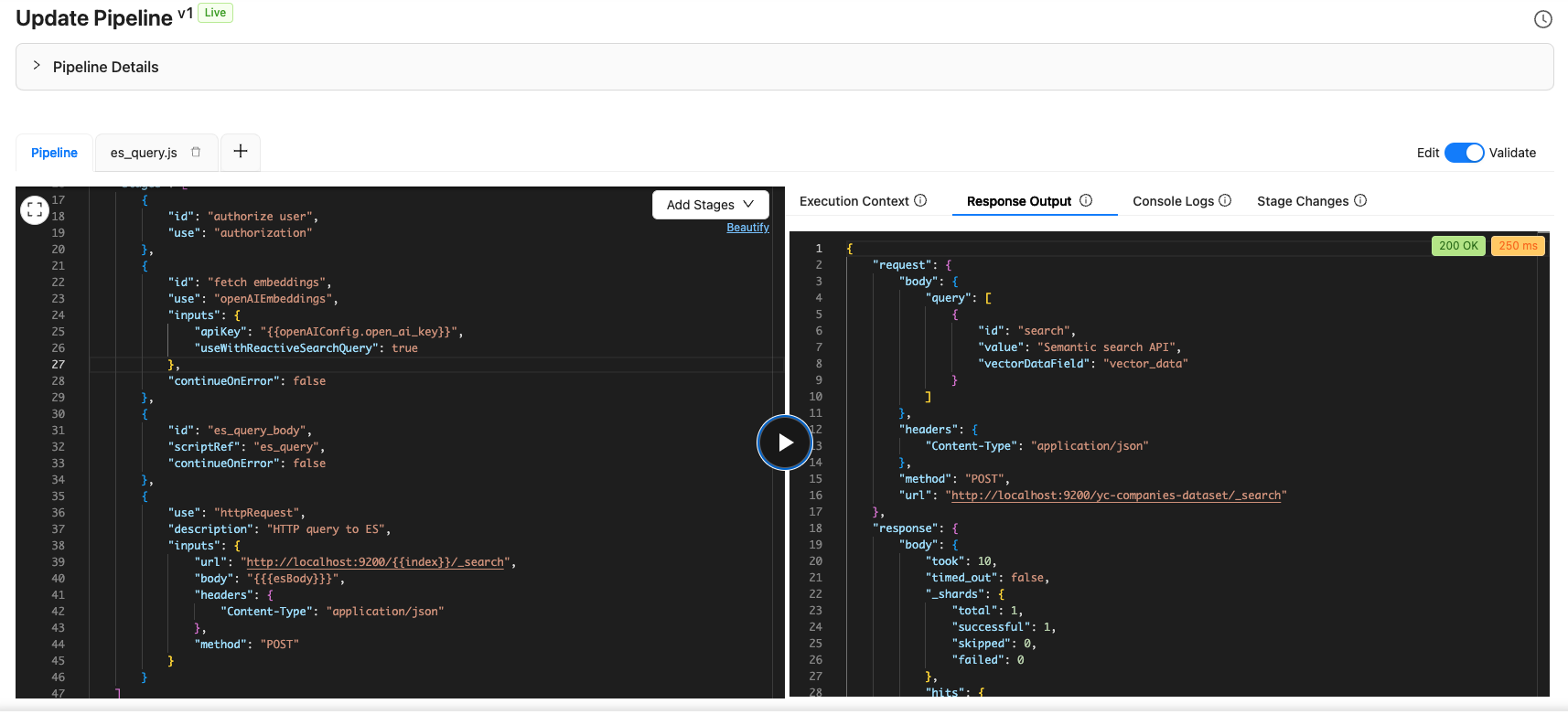

Authoring Search Pipeline

Next, I will author a search pipeline with the ReactiveSearch dashboard. A ReactiveSearch pipeline allows stitching the search engine (here, OpenSearch) with additional connectors to enrich search queries, add transformation steps, or enrich search engine results. The pipeline definition is based on a JSON format and these steps can be written in JavaScript. Check out these how-to guides to see what you can build with pipelines.

    {
      "enabled": true,
      "description": "KNN query pipeline",
      "routes": [
        {
          "path": "/_knn_search/_reactivesearch",
          "method": "POST",
          "classify": {
            "category": "reactivesearch"
          }
        }
      ],
      "envs": {
        "index": "yc-companies-dataset"
      },
      "stages": [
        {
          "id": "authorize user",
          "use": "authorization"
        },
        {
          "id": "fetch embeddings",
          "use": "openAIEmbeddings",
          "inputs": {
            "apiKey": "{{openAIConfig.open_ai_key}}",
            "useWithReactiveSearchQuery": true
          },
          "continueOnError": false
        },
        {
          "id": "os_query_body",
          "scriptRef": "os_query",
          "continueOnError": false
        },
        {
          "use": "httpRequest",
          "description": "HTTP query to OS",
          "inputs": {
            "url": "http://localhost:9200/{{index}}/_search",
            "body": "{{{osBody}}}",
            "headers": {
              "Content-Type": "application/json"
            },
            "method": "POST"
          }
        }
      ]
    }

Here, we first get the OpenAI embedding for the incoming search query, then generate the query DSL body using the os_query script (below) and then send this to the OpenSearch engine to return search results.

    function handleRequest() {
      // Parse the request body. By this stage it carries the query vector
      // added by the "fetch embeddings" stage.
      console.log('request body is: ', context.request.body);
      const reqBody = JSON.parse(context.request.body);

      // Construct the OpenSearch query DSL for a k-NN search
      const osBody = JSON.stringify({
        query: {
          knn: {
            vector_data: {
              vector: reqBody.query[0].queryVector,
              k: 10,
            },
          },
        },
        // Return only the fields the search UI displays
        _source: [
          'name',
          'one_liner',
          'website',
          'small_logo_thumb_url',
          'team_size',
          'industries',
          'regions',
          'stage',
        ],
        size: 10,
      });

      // Return the updated context with the new osBody
      return { ...context, osBody };
    }

The ReactiveSearch dashboard provides an execution environment where I can set the REST API request body and see the live response from the pipeline. It also provides console logs and individual stage changes that can be helpful while developing.
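Outside the dashboard, the query-building logic can also be smoke-tested locally. The sketch below is a hypothetical pure-function variant of the script, not part of the pipeline itself: `buildOsBody` is a made-up name, the mock request body only approximates what the embeddings stage produces, and reading the field name from `vectorDataField` is an illustrative variation on the hard-coded `vector_data`.

```javascript
// Hypothetical pure version of the os_query logic, for local testing only.
function buildOsBody(requestBody, k = 10) {
  const parsed = typeof requestBody === 'string' ? JSON.parse(requestBody) : requestBody;
  const first = Array.isArray(parsed.query) ? parsed.query[0] : undefined;
  if (!first || !Array.isArray(first.queryVector)) {
    throw new Error('Expected query[0].queryVector to be set by the embeddings stage');
  }
  // Variation on the original script: fall back to "vector_data" when no field is given.
  const field = first.vectorDataField || 'vector_data';
  return JSON.stringify({
    query: { knn: { [field]: { vector: first.queryVector, k } } },
    size: k,
  });
}

// Simulated request body as it might look after the "fetch embeddings" stage.
// The 3-element vector is a stand-in for a real embedding.
const mockBody = JSON.stringify({
  query: [
    {
      id: 'search',
      value: 'Semantic search API',
      vectorDataField: 'vector_data',
      queryVector: [0.1, 0.2, 0.3],
    },
  ],
});

const osBody = JSON.parse(buildOsBody(mockBody));
console.log(JSON.stringify(osBody.query.knn.vector_data));
```

Failing fast when `queryVector` is missing makes a misconfigured embeddings stage easier to spot than a malformed query sent to the engine.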

We just configured the pipeline to respond to this API endpoint:

    curl -XPOST https://search-server.com/_knn_search/_reactivesearch \
      -H 'Content-Type: application/json' \
      -d '{
        "query": [
          {
            "id": "search",
            "value": "Semantic search API",
            "vectorDataField": "vector_data"
          }
        ]
      }'

The pipeline first fetches the OpenAI embedding for the search input, and then creates the query DSL to run a KNN search against the OpenSearch engine. The results are returned without any modification.
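From JavaScript, the same call might look like the following sketch. The helper names are hypothetical, the base URL is whatever your cluster exposes, and Basic Auth is assumed because the pipeline includes an authorization stage and the UI prompt later in this post uses Basic Auth credentials.

```javascript
// Base64 helper that works in browsers and in Node.js.
function toBase64(text) {
  return typeof btoa === 'function'
    ? btoa(text)
    : Buffer.from(text, 'utf8').toString('base64');
}

// Build the URL and fetch options for the KNN pipeline endpoint.
function buildKnnSearchRequest(baseUrl, searchText, username, password) {
  return {
    url: `${baseUrl}/_knn_search/_reactivesearch`,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Basic ${toBase64(`${username}:${password}`)}`,
      },
      body: JSON.stringify({
        query: [{ id: 'search', value: searchText, vectorDataField: 'vector_data' }],
      }),
    },
  };
}

// Send the request; fetchImpl can be swapped for a stub in tests.
async function knnSearch(baseUrl, searchText, username, password, fetchImpl = fetch) {
  const { url, options } = buildKnnSearchRequest(baseUrl, searchText, username, password);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Search failed with status ${response.status}`);
  }
  return response.json();
}

const request = buildKnnSearchRequest('https://search-server.com', 'Semantic search API', 'user', 'pass');
console.log(request.url);
```

Separating request construction from the network call keeps the payload easy to inspect and test without hitting the cluster.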

Building The Search UI

Now that the REST API is configured, the next and final step is to build a search UI. Here, I'm choosing to generate a basic search UI with ChatGPT, using the following prompt:

### Task

Write a React component named `KNN` that implements a K-Nearest Neighbors (KNN) based search UI.

### Server Connection Info

- **POST method**: `https://appbase-demo-ansible-abxiydt-arc.searchbase.io/_knn_search/_reactivesearch`

- **API Payload**:

    {
      "query": [
        {
          "id": "search",
          "value": "Semantic search API",
          "vectorDataField": "vector_data"
        }
      ]
    }

Response is returned in Elasticsearch hits format.

### Component Requirements

React with styled-components based on @emotion

### Search Functionality

- Implement an input field for search queries.

- Include a button labeled **"Try random search input"** to autofill a random query from a predefined list.

- Use **Basic Auth** with credentials:
  - Username: `a03a1cb71321`
  - Password: `75b6603d-9456-4a5a-af6b-a487b309eb61`

### State Management

- Use `useState` to manage:
  - `searchInput`
  - `results`
  - `loading`
  - `noResults`
  - `searchTime`

- Use `useEffect` and `useRef` to handle side effects and input debouncing.

### Result Display

- Display search results in a styled list with:
  - **Company logo** `small_logo_thumb_url`
  - **Company name** `name`
  - **One-liner description** `one_liner`
  - **Team size and stage** (with icons from `react-icons/fa`) `team_size`, `stage`
  - **Industries** as tags `industries`
  - **Link** to the company's website `website`

### Loading and No Results States

- Show a loading message during search.

- Display a message if no results are found.

Please provide the complete code.

This should generate close-to-functional code. Here is a version with some tweaks to the ChatGPT output: KNN/index.jsx.
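Independent of what ChatGPT generates, the component needs to turn the response into display data. Since the response is in Elasticsearch hits format, a minimal sketch of that mapping could look like this. The function name and the output property names are hypothetical, the sample response is fabricated for illustration, and this is not taken from KNN/index.jsx.

```javascript
// Map an Elasticsearch-style hits response to the fields the UI displays.
function hitsToResults(response) {
  const hits = (response && response.hits && response.hits.hits) || [];
  return hits.map((hit) => {
    const source = hit._source || {};
    return {
      id: hit._id,
      score: hit._score,
      name: source.name || '',
      oneLiner: source.one_liner || '',
      website: source.website || '',
      logoUrl: source.small_logo_thumb_url || '',
      teamSize: source.team_size ?? null,
      stage: source.stage || '',
      industries: Array.isArray(source.industries) ? source.industries : [],
    };
  });
}

// Made-up sample response for illustration only.
const sampleResponse = {
  hits: {
    hits: [
      {
        _id: '1',
        _score: 0.87,
        _source: {
          name: 'Example Co',
          one_liner: 'Search APIs for developers',
          website: 'https://example.com',
          team_size: 12,
          stage: 'Early',
          industries: ['B2B', 'Developer Tools'],
        },
      },
    ],
  },
};

const results = hitsToResults(sampleResponse);
console.log(results[0].name, results[0].industries.length);
```

An empty array from this function corresponds to the `noResults` state in the prompt, and missing fields fall back to empty values so the list renders without extra null checks.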

You can try out the live demo at: https://oss-demos.reactivesearch.io/knn.

Summary

In this guide, we explored how to build a vector search UI using ReactiveSearch and OpenSearch 2.17. I started by creating a search cluster with ReactiveSearch Cloud, then indexed a dataset of over 5,000 Y-Combinator companies. Next, I authored a search pipeline to handle the search queries and fetch results using OpenAI embeddings. Finally, I built a simple search UI to interact with the search API.

In the next post, we will see how to build a hybrid search with KNN-search along with faceting, where we will use the ReactiveSearch UI kit for building the search UI.

Written by

Siddharth Kothari

CEO @reactivesearch, search engine dx