Concurrency in Go: Part I

Jeje Oluwayanfunmi

Concurrency! The term comes from the Latin word "concurrentia," which translates to "running together." When I first read about concurrency, it reminded me of a concept I was taught in one of my early CS classes: parallelism. People often mix up these two concepts, so I would like to start by explaining what they are and what distinguishes them.

Concurrency vs Parallelism

Concurrency is the property of a program or system that lets it deal with more than one task at a time. The tasks make progress over overlapping time periods, whether they are interleaved on one processor or run in parallel on several.

Parallelism is the execution of more than one task at literally the same instant, on different processors or cores.

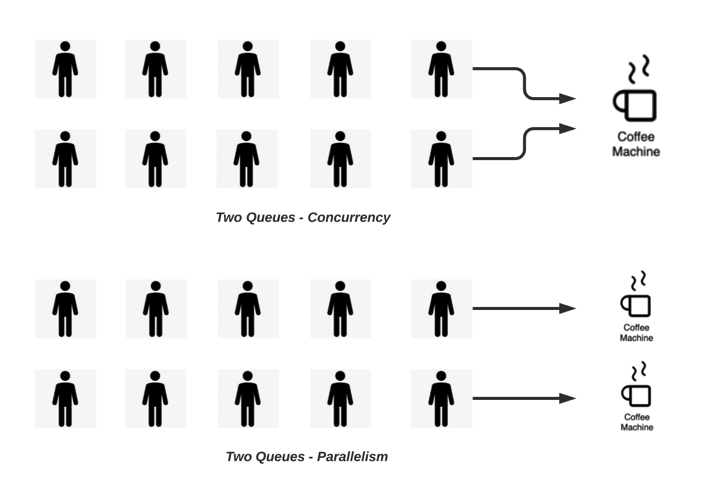

Above is an illustration of Concurrency and Parallelism explained with the two queues analogy:

Concurrency (Top Image):

You have two queues of customers waiting to use a single coffee machine. Several people are in line, but they share one machine and use it one at a time.

The coffee machine works like a single barista: it takes one customer's request, waits for the coffee to brew, then moves on to the next request, context switching between tasks as required.

That's concurrency: multiple tasks are in progress, but they are not actually running in parallel. The system context-switches between them to make progress on each.

Parallelism (Bottom Image):

In this case there are again two queues of customers, but this time there are two coffee machines. Each machine processes one customer's order independently of, and simultaneously with, the other.

Because both machines operate in parallel, two customers can be served at once without either machine having to context switch. That is parallelism: independent resources (the coffee machines in this example) performing tasks (coffee orders) simultaneously.
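The coffee machine analogy can be loosely sketched in Go. In this minimal sketch, each machine is a goroutine pulling orders from a shared queue; `serveOrders` and the order names are hypothetical and exist only for illustration. Note that starting two goroutines only expresses that the work may overlap. Whether the two machines actually brew at the same instant depends on how many cores the Go runtime can schedule them on.

```go
package main

import (
	"fmt"
	"sync"
)

// serveOrders hands every order to one of `machines` coffee machines
// (worker goroutines) and returns how many orders each machine brewed.
// The function and its names are hypothetical and only mirror the analogy.
func serveOrders(orders []string, machines int) []int {
	queue := make(chan string)
	served := make([]int, machines)

	var wg sync.WaitGroup
	for m := 0; m < machines; m++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			// Take orders until the queue is closed.
			for range queue {
				served[id]++ // each machine only writes its own slot
			}
		}(m)
	}

	for _, o := range orders {
		queue <- o
	}
	close(queue)
	wg.Wait()
	return served
}

func main() {
	orders := []string{"latte", "espresso", "mocha", "flat white"}
	fmt.Println("one machine: ", serveOrders(orders, 1))
	fmt.Println("two machines:", serveOrders(orders, 2))
}
```

With one machine, every order goes through the same worker one at a time. With two machines, the split between them can differ from run to run, but the total number of orders served stays the same.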

Concurrency in Software Development

Concurrency is not a new concept, but it has become increasingly important as chip makers run into the physical limits behind Moore's Law, the observation that the number of transistors on a chip doubles roughly every two years. As single-core performance began to plateau, developers turned to concurrency and parallelism to make better use of multi-core processors and distributed systems.

Herb Sutter famously coined the phrase “the free lunch is over” in his 2005 article, arguing that developers could no longer rely on hardware to automatically make their code faster. Instead, they must embrace concurrency to fully utilize modern hardware. Concurrency allows programs to handle larger workloads by breaking down tasks into smaller, more manageable chunks that can be processed independently.

Why Is Concurrency Hard?

Concurrency brings great benefits, but complexity follows. A few of the key challenges are highlighted below.

Race Conditions: A race condition occurs when two or more operations must execute in a certain order, but the program does not enforce that order, so the result is unpredictable.

Deadlocks: A deadlock occurs when two or more processes each hold a resource while waiting for another to release one, so none of them can proceed. Without outside intervention, a deadlocked program hangs forever.

Livelocks: Similar to a deadlock, except that the processes keep changing state without making any progress.

Starvation: Starvation occurs when certain processes never get the resources they need because other processes keep taking them.

These problems occur because, in a concurrent program, you cannot reliably predict the order in which different tasks execute. No amount of planning removes the minor timing differences between runs, and those differences lead to bugs that are painful to reproduce and find.
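To make the race condition case concrete, here is a minimal sketch of a shared counter incremented by many goroutines. The function name `safeCount` is hypothetical. The `sync.Mutex` is one common way to enforce ordering on the read-modify-write step; it is shown here as an illustration, not as the only fix.

```go
package main

import (
	"fmt"
	"sync"
)

// safeCount starts n goroutines that each add 1 to a shared counter.
// The mutex forces the read-modify-write steps to happen one at a time.
func safeCount(n int) int {
	var (
		mu      sync.Mutex
		wg      sync.WaitGroup
		counter int
	)
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			counter++ // without the lock, this line would be a data race
			mu.Unlock()
		}()
	}
	wg.Wait() // block until every goroutine has finished
	return counter
}

func main() {
	fmt.Println(safeCount(1000)) // prints 1000
}
```

If the `Lock` and `Unlock` calls are removed, increments can be lost and the final count may vary between runs. Running the program with Go's race detector (`go run -race`) is a practical way to catch this kind of bug.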

Go’s Approach to Concurrency

Go was designed with concurrency in mind, which is considered one of the major reasons for its popularity. Go introduces a concept called goroutines: functions that run concurrently with other functions as lightweight threads managed by the Go runtime. Unlike traditional operating system threads, goroutines are cheap to create, so a developer can easily spawn thousands of concurrent tasks without overwhelming the system.
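As a rough illustration of how cheap goroutines are, the sketch below starts one goroutine per input value and waits for all of them with a `sync.WaitGroup`. The helper `squareAll` is a hypothetical name chosen for this example, and squaring a number is only a stand-in for real work.

```go
package main

import (
	"fmt"
	"sync"
)

// squareAll computes the square of each input in its own goroutine.
// Each goroutine writes to a distinct index, so no lock is needed.
func squareAll(nums []int) []int {
	out := make([]int, len(nums))
	var wg sync.WaitGroup
	for i, n := range nums {
		wg.Add(1)
		go func(i, n int) {
			defer wg.Done()
			out[i] = n * n
		}(i, n)
	}
	wg.Wait() // wait for every goroutine before reading the results
	return out
}

func main() {
	nums := make([]int, 10000)
	for i := range nums {
		nums[i] = i
	}
	squares := squareAll(nums)
	fmt.Println(len(squares), squares[3])
}
```

Here `main` launches ten thousand goroutines in a loop. The `go` keyword in front of a function call is all it takes to run that call concurrently.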

Go also provides channels, a powerful primitive for safely passing data between goroutines. Channels let Go developers use the Communicating Sequential Processes (CSP) model, which avoids many of the pitfalls of shared-memory concurrency.
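A minimal sketch of channels in action: one goroutine produces values and another part of the program consumes them, with no shared variable between the two. The names `produce` and `sum` are hypothetical and used only for this example.

```go
package main

import "fmt"

// produce sends the numbers 1..n on a channel and closes it when done.
func produce(n int) <-chan int {
	ch := make(chan int)
	go func() {
		defer close(ch) // closing tells the receiver no more values are coming
		for i := 1; i <= n; i++ {
			ch <- i
		}
	}()
	return ch
}

// sum receives values until the channel is closed and adds them up.
func sum(ch <-chan int) int {
	total := 0
	for v := range ch {
		total += v
	}
	return total
}

func main() {
	fmt.Println(sum(produce(100))) // prints 5050
}
```

The producer and the consumer never touch the same variable. Each value is handed over through the channel, which is the idea behind sharing data by communicating rather than communicating by sharing memory.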

Go's simple approach to concurrency makes it easier to write programs that work on many tasks at once. This can improve performance and makes programs easier to scale.

In Part 2, we'll take a closer look at some of Go's concurrency building blocks through practical examples, showing how you can take full advantage of Go's concurrency features in your own programs.
