Secure Design Principles

Pranav Bawgikar

Table of contents

- Preface

- What are Secure Design Principles?

- #1 Defense in Depth

- #2 Principle of Psychological Acceptability

- #3 Principle of Complete Mediation

- #4 Principle of Separation of Privileges

- #5 Principle of Open Design

- #6 Principle of Least Common Mechanism

- #7 The Principle of Least Privilege (POLP)

- #8 Fail-Safe Defaults

- #9 Principle of Economy of Mechanism

Preface

The prevalence of software vulnerabilities and the growing number of compromised enterprise systems underline the need for guidance on designing and implementing secure software. If software engineers and system developers apply the Secure Design Principles (SDPs), enterprise systems will be better protected against many types of attack.

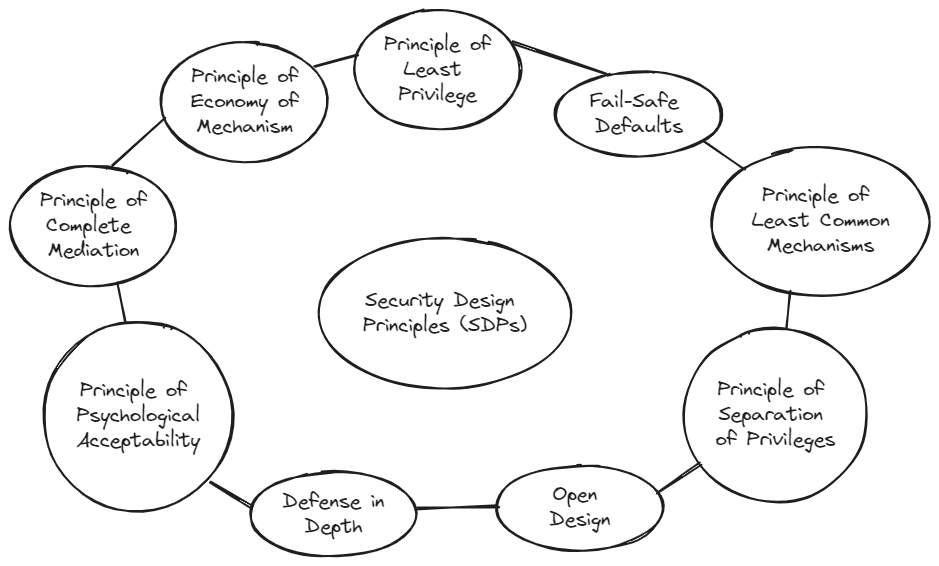

What are Secure Design Principles?

Secure design principles guide the development of systems that resist security vulnerabilities and unauthorized access. For example, a secure foundation for trustworthy mobile computing can be built on a secure integrated core: a security-aware processor, a compact security kernel, and a restricted set of essential secure communication protocols.

#1 Defense in Depth

Also known as layered defense, defense in depth is a security principle where single points of complete compromise are eliminated or mitigated by incorporating multiple layers of security safeguards and risk-mitigation countermeasures. By employing diverse defensive strategies, if one layer of defense proves inadequate, another layer can hopefully prevent a full breach.
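As a minimal sketch of the idea, the snippet below passes a request through three independent layers and allows it only if every layer accepts it. The class names, the blocked address range, and the validation rule are hypothetical and chosen only for illustration.

```java
// Hypothetical request type used only for this illustration.
final class DidRequest {
    final String sourceIp;
    final boolean authenticated;
    final String payload;

    DidRequest(String sourceIp, boolean authenticated, String payload) {
        this.sourceIp = sourceIp;
        this.authenticated = authenticated;
        this.payload = payload;
    }
}

final class LayeredDefense {
    // A request is allowed only if every layer accepts it.
    // If one check is bypassed or misconfigured, the others still apply.
    static boolean allow(DidRequest r) {
        // Layer 1: network filter (the blocked range is an assumed example).
        if (r.sourceIp.startsWith("203.0.113.")) {
            return false;
        }
        // Layer 2: authentication.
        if (!r.authenticated) {
            return false;
        }
        // Layer 3: input validation (a deliberately simplistic rule).
        if (r.payload.contains("<script")) {
            return false;
        }
        return true;
    }
}
```

Here an authenticated request from an allowed address is still rejected if its payload fails validation, which is the point of layering.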

#2 Principle of Psychological Acceptability

This principle recognizes the human element in computer security. The principle of psychological acceptability states that security mechanisms should not make resources more difficult to access than if the security mechanisms were not present. If security-related software is too complicated to configure, system administrators may unintentionally set it up insecurely. Additionally, security requires that messages impart no unnecessary information. For example, when a user supplies the wrong password during login, the system should simply state that the login failed, without saying whether the user name or the password was at fault.

In practice, the principle of psychological acceptability means that the security mechanism may add some extra burden, but that burden must be both minimal and reasonable. For example, accessing files might require the program to supply a password. Although this mechanism technically violates the principle, it is considered sufficiently minimal to be acceptable. However, in an interactive system where file accesses are more frequent and transient, this requirement would be too burdensome to be acceptable.
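The login-message point above can be sketched as follows. This is an illustration under stated assumptions, not a production login routine: the class name, the in-memory user table, and the plaintext password are hypothetical, and a real system would store salted password hashes.

```java
import java.util.Map;

final class LoginMessages {
    // Hypothetical in-memory credential store, for illustration only.
    private static final Map<String, String> USERS = Map.of("alice", "correct horse");

    // Returns the same message for an unknown user and for a wrong password,
    // so the response does not reveal which accounts exist.
    static String login(String user, String password) {
        String expected = USERS.get(user);
        boolean ok = expected != null && expected.equals(password);
        return ok ? "Login succeeded" : "Login failed";
    }
}
```

The message stays short and easy for the user to understand while giving an attacker nothing to work with.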

#3 Principle of Complete Mediation

This principle restricts the caching of information, which often leads to simpler implementations of mechanisms. It requires that all accesses to objects be checked to ensure they are allowed. Whenever a subject attempts to read an object, the operating system should mediate the action by first determining if the subject is allowed to read the object. If allowed, it provides the resources for the read to occur. If the subject tries to read the object again, the system should recheck if the subject is still allowed to read the object. Most systems, however, do not perform the second check; instead, they cache the results of the first check and base the subsequent access on the cached results.

For example, when a UNIX process tries to read a file, the OS determines whether the process is allowed to read it. If so, the process receives a file descriptor encoding the allowed access. Whenever the process wants to read the file, it presents the file descriptor to the kernel, which then allows the access. However, if the file's owner later revokes the process's permission to read the file, the kernel still allows access because it relies on the previously issued file descriptor. This scheme violates the principle of complete mediation: the second access is not rechecked, and the cached value is used, so the denial of access has no effect.
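For contrast, here is a minimal sketch of an object that does mediate every access. It is not how UNIX file descriptors work; the class and method names are hypothetical, and the access list is a plain in-memory set.

```java
import java.util.HashSet;
import java.util.Set;

final class MediatedFile {
    // Current access list: the subjects allowed to read right now.
    private final Set<String> readers = new HashSet<>();
    private final String contents;

    MediatedFile(String contents) {
        this.contents = contents;
    }

    void grant(String subject) {
        readers.add(subject);
    }

    void revoke(String subject) {
        readers.remove(subject);
    }

    // Complete mediation: the access list is consulted on every read,
    // not only on the first one, so a revocation takes effect immediately.
    String read(String subject) {
        if (!readers.contains(subject)) {
            throw new SecurityException("access denied");
        }
        return contents;
    }
}
```

A subject that could read the object a moment ago is refused as soon as revoke() has been called, because no earlier decision is cached.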

#4 Principle of Separation of Privileges

This principle is restrictive because it limits access to system entities. It states that a system should not grant permission based on a single condition. Systems and programs should grant access to resources only when more than one condition is met. This approach provides fine-grained control over the resource and additional assurance that the access is authorized.

For example, in Berkeley-based versions of the UNIX operating system, users cannot switch from their accounts to the root account unless two conditions are met. First, the user must know the root password. Second, the user must be in the wheel group (the group with GID 0). Meeting only one condition is not sufficient to acquire root access; both conditions must be met.
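The two-condition rule can be sketched as a single check. This illustrates the logic only and is not the actual su implementation; the class and method names are hypothetical.

```java
import java.util.Set;

final class SuCheck {
    // Both conditions must hold; neither one alone is sufficient.
    static boolean maySwitchToRoot(boolean knowsRootPassword, Set<String> userGroups) {
        return knowsRootPassword && userGroups.contains("wheel");
    }
}
```

A user who knows the password but is outside the wheel group is refused, and so is a wheel member who does not know the password.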

#5 Principle of Open Design

This principle suggests that complexity does not add security. The principle of open design states that the security of a mechanism should not depend on the secrecy of its design or implementation. The design can remain open as long as the secrets it relies on are kept separate from it. If security depended on the mechanism's details staying hidden, a leak of those details would be a catastrophic failure for all users at once. If the secret is instead abstracted out of the mechanism, for example into a key, then the leak of one key affects only one user. Keeping cryptographic keys and passwords secret does not violate this principle, because a key is not an algorithm. Conversely, relying on the secrecy of the enciphering and deciphering algorithms would violate it.
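A small sketch of the idea, using HMAC-SHA256 from the standard Java cryptography API: the algorithm is public and standardized, and the only secret is the key. The class and method names are hypothetical, and the choice of HMAC is an assumption made for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.MessageDigest;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

final class OpenDesignMac {
    // The algorithm (HMAC-SHA256) is public; only the key is secret.
    static byte[] tag(byte[] key, String message) {
        try {
            Mac mac = Mac.getInstance("HmacSHA256");
            mac.init(new SecretKeySpec(key, "HmacSHA256"));
            return mac.doFinal(message.getBytes(StandardCharsets.UTF_8));
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HMAC-SHA256 unavailable or key rejected", e);
        }
    }

    // Recomputes the tag and compares it with the presented one.
    static boolean verify(byte[] key, String message, byte[] presentedTag) {
        return MessageDigest.isEqual(tag(key, message), presentedTag);
    }
}
```

Publishing this code costs nothing in security terms. If one user's key leaks, only that user's messages can be forged, and the remedy is to replace that key rather than the mechanism.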

#6 Principle of Least Common Mechanism

The principle of least common mechanism restricts sharing by stating that mechanisms for accessing resources should not be shared across users or processes with different privilege levels. For instance, using the same function to calculate bonuses for exempt and non-exempt employees violates this principle, as the calculation is a shared mechanism.
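One way to read the bonus example is sketched below: each employee category gets its own calculation, with no shared code or state, so a flaw or change in one cannot affect the other. The class names and the bonus rules are hypothetical.

```java
final class ExemptBonus {
    // Hypothetical rule: 10% of annual salary, in cents.
    static long bonusCents(long annualSalaryCents) {
        return annualSalaryCents * 10 / 100;
    }
}

final class NonExemptBonus {
    // Hypothetical rule: a fixed 2,500 cents per overtime hour.
    static long bonusCents(long overtimeHours) {
        return overtimeHours * 2_500;
    }
}
```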

For example, a website provides electronic commerce services for a major company. Attackers want to deprive the company of the revenue from that website. They flood the site with messages and tie up the electronic commerce services. Legitimate customers cannot access the website and, as a result, take their business elsewhere.

Here, sharing the Internet with the attackers' sites causes the attack to succeed. The appropriate countermeasure would be to restrict the attackers' access to the segment of the Internet connected to the Web site. Techniques for doing this include proxy servers such as the Purdue SYN intermediary or traffic throttling. The former targets suspect connections; the latter reduces the load on the relevant segment of the network indiscriminately.

#7 The Principle of Least Privilege (POLP)

The principle of least privilege states that a user or program should be given only the privileges it needs to accomplish a task or function. Access rights should be time-bound, so that access to a resource lasts only as long as the task requires. Programs and processes should have the capabilities their code needs to work, but root access to the system should be limited. Secure coding must strictly adhere to POLP.

In the physical world, the valet key is an example of the principle of least privilege at work. The idea is to give the valet access only to those resources of the car that are needed to park it.

Why is the principle of least privilege important?

Reduces malware propagation. When users are granted too much access, malware can leverage the elevated privilege to move laterally across your network and potentially launch an attack against other networked computers.

Proper implementation of the principle of least privilege improves operational performance by boosting workforce productivity, system stability, and fault tolerance.

How to Implement the Principle of Least Privilege (POLP):

Conduct a Privilege Audit: Review all accounts, processes, and programs to ensure they have only the required permissions.

Start with Least Privilege: Set minimal default privileges for new accounts, adding higher-level permissions only as needed.

Enforce Separation of Privileges: Separate admin from standard accounts and high-level functions from lower ones.

Use Just-in-Time Privileges: Grant elevated privileges only when needed, using expiring privileges or one-time-use credentials.

Ensure Traceability: Use unique IDs, one-time passwords, monitoring, and audits to track user actions.

Audit Regularly: Regular audits prevent privilege accumulation over time for accounts that no longer need it.
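The just-in-time step above can be sketched as a grant that names exactly one permission and expires on its own. This is a minimal sketch under stated assumptions: the class name, the permission strings, and the explicit "now" parameter (passed in so the behavior is easy to test) are all hypothetical.

```java
import java.time.Duration;
import java.time.Instant;

final class TemporaryGrant {
    private final String permission;
    private final Instant expiresAt;

    TemporaryGrant(String permission, Instant issuedAt, Duration lifetime) {
        this.permission = permission;
        this.expiresAt = issuedAt.plus(lifetime);
    }

    // The grant covers exactly one permission, and only until it expires.
    boolean allows(String requested, Instant now) {
        return permission.equals(requested) && now.isBefore(expiresAt);
    }
}
```

For instance, a grant for "db:restart" issued with a 15-minute lifetime allows that one action during the window and nothing afterwards, so no standing privilege accumulates.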

As another example, the function checkPath() verifies that a given path is valid, ensuring that access is not granted to unauthorized parts of the filesystem.

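    import java.io.File;
    import java.nio.file.Path;

    // Returns the canonical form of pathname, or throws if it lies outside the working directory.
    String checkPath(String pathname) throws Exception {
        // Canonicalize both paths so that "..", "." and symbolic links are resolved before comparing.
        Path target = new File(pathname).getCanonicalFile().toPath();
        Path cwd = new File(System.getProperty("user.dir")).getCanonicalFile().toPath();

        // Path.startsWith compares whole path components, so "/srv/app-private" is not
        // mistaken for a location inside "/srv/app", as a plain string prefix test would be.
        if (!target.startsWith(cwd)) {
            throw new Exception("File Not Found");
        }
        return target.toString();
    }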

- checkPath() takes a pathname as an argument and creates a File object representing that path.

- It gets the current working directory (using System.getProperty("user.dir")) and obtains the canonical (normalized) paths for both the target file and the current working directory. Canonicalization removes ambiguities like ".." or "." in paths.

- The if statement checks whether the target path starts with the current working directory path. If it doesn't, an exception (File Not Found) is thrown to prevent unauthorized access.

- If the path is valid (within the server's working directory), it returns the canonical path.

Purpose of Canonicalization

Canonicalization prevents path traversal attacks, in which an attacker tries to access files outside the intended directory by using paths like "../..". By converting the pathname to canonical form first, the server resolves all such ambiguities and can then check that the path lies within the permitted boundaries. Canonicalizing and validating pathnames is therefore essential in any server script that accepts paths from users, so that the server never exposes directories or files that should not be accessed.

#8 Fail-Safe Defaults

A fail-safe stance means designing a system so that some level of security is preserved even if one or more components fail. The application must handle errors that occur during execution consistently: it should catch all errors and either fail safe or fail closed. Design and operate an IT system to limit vulnerability and to be resilient in response.
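Failing closed can be sketched as a wrapper around an access decision: if the check itself throws, the answer is "deny". The class name and the use of a BooleanSupplier to stand in for the policy check are assumptions made for illustration.

```java
import java.util.function.BooleanSupplier;

final class FailClosed {
    // Any error while evaluating the policy results in denial, never in access.
    static boolean isAllowed(BooleanSupplier policyCheck) {
        try {
            return policyCheck.getAsBoolean();
        } catch (RuntimeException e) {
            // Fail closed: an unavailable or broken policy source must not grant access.
            return false;
        }
    }
}
```

The default outcome is denial, and access is granted only when the check completes and explicitly says yes.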

#9 Principle of Economy of Mechanism

Keep the design simple. Critical software components should be small and understandable. Simple designs are easier to inspect and to trust, and they leave fewer opportunities for error. Focus on minimizing structural complexity.

Use a Modular Approach:

Clean Interfaces: Ensure well-defined interfaces within and between modules.

Encapsulation: Use abstraction to simplify concepts by hiding implementation details.

Low Coupling, High Cohesion: These principles make the system easier to maintain and improve.

Reduce Complexity:

High complexity increases defects, makes testing difficult, and leads to insecure code due to cognitive overload. Access to data must be carefully managed, both internally and externally. Avoid implementing highly complex algorithms such as O(n^m), O(2^n), or O(n!). Limit privileged code blocks to 10 lines and keep all methods under 60 lines for maintainability.
