How KNN Works: A Simple Explanation of Nearest Neighbors

Manyue javvadi

When to use the KNN algorithm, and what is KNN?

KNN is worth considering for multi-class classification when the classes are not linearly separable and a linear classifier no longer performs well.

KNN - What does 'K' stand for?

KNN stands for K Nearest Neighbors, where "K" is a hyperparameter. We'll discuss this in more detail shortly.

Intuition of KNN:

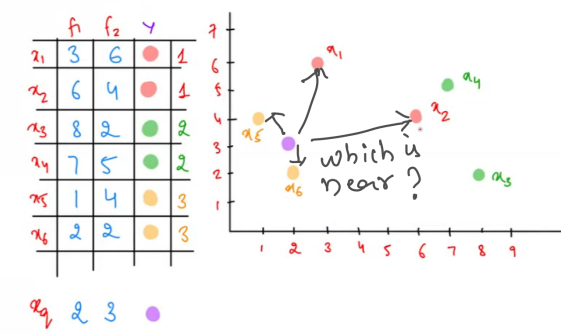

Let’s say we have two features and three classes: "Red," "Green," and "Yellow."

Problem: Which class does the new record/data point (xq) belong to?

Intuition: Looking at the image above, we naturally assume that the points nearest to xq indicate which class it belongs to.

Solution: The new record belongs to the "Yellow" class.

How do we know which points are nearest to the new record?

Distance:

We calculate the distance from all existing points to the new record/point and sort these distances in ascending order.
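A minimal sketch of this step in Python, assuming Euclidean distance (the choice of metric is left for the next post). The points, the query `xq`, and the helper name `sorted_by_distance` are hypothetical, made up for illustration rather than read off the image.

```python
import math

# Hypothetical training points: (feature_1, feature_2, label)
points = [
    (1.0, 1.0, "Red"),
    (1.5, 2.0, "Red"),
    (5.0, 5.0, "Green"),
    (6.0, 5.5, "Green"),
    (3.0, 3.2, "Yellow"),
    (3.4, 2.9, "Yellow"),
]

# Hypothetical new record whose class we want to predict
xq = (3.2, 3.0)


def sorted_by_distance(points, xq):
    """Return (distance, label) pairs in ascending order of distance to xq.

    Assumes Euclidean distance, computed with math.dist (Python 3.8+).
    """
    distances = [(math.dist((x, y), xq), label) for x, y, label in points]
    return sorted(distances, key=lambda pair: pair[0])


for d, label in sorted_by_distance(points, xq):
    print(f"{d:.3f}  {label}")
```

With these made-up points, the two Yellow points come first in the sorted list, which matches the intuition from the image.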

Assumption: When we say that a point belongs to the nearest neighbor’s class, we are assuming that points close to each other exhibit similar patterns.

Coming up with answers based on the distances we calculated:

Okay, so far, we understand that we calculate the distance between all points and the new point and sort those distances in ascending order. Then what?

Example of sorted distances: x6 < x5 < x1 < x2 < x4 < x3 (x6 is the point closest to xq, and x3 is the farthest).

Now, if we consider only the first two distances (x6 and x5), we can say the new point belongs to the “Yellow” class.

If we consider the first three distances, the voting will be:

"Yellow" - 2 votes

"Red" - 1 vote.

So, based on majority voting, we conclude it’s still the “Yellow” class.

If we consider the first four distances, the voting will be:

"Yellow" - 2 votes

"Red" - 2 votes.

So, the vote is tied, and the classification could be either "Yellow" or "Red."
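The voting above can be reproduced with `collections.Counter`. The label list is inferred from the vote counts in the walkthrough (x6 and x5 are Yellow, x1 and x2 are Red); the labels of x4 and x3 are not given, so they are left out. The helper name `vote` is hypothetical.

```python
from collections import Counter

# Labels of the nearest points in ascending order of distance: x6, x5, x1, x2.
# Inferred from the vote counts in the text; x4 and x3 are omitted.
sorted_labels = ["Yellow", "Yellow", "Red", "Red"]


def vote(sorted_labels, k):
    """Count votes among the k nearest labels and return every label tied for the top count."""
    counts = Counter(sorted_labels[:k])
    top_count = max(counts.values())
    winners = [label for label, count in counts.items() if count == top_count]
    return counts, winners


for k in (2, 3, 4):
    counts, winners = vote(sorted_labels, k)
    status = "tie" if len(winners) > 1 else "winner"
    print(k, dict(counts), status, winners)
```

For K = 2 and K = 3 there is a single winner (Yellow); for K = 4 both Yellow and Red are returned, which is the tie described above.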

This is exactly what the 'K' hyperparameter controls:

The value of "K" determines how many nearest neighbors (the K smallest distances) get a vote when classifying the new point.
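Written as a formula, the rule reads as follows. The notation is introduced here for illustration and is not from the original post: $N_K(x_q)$ is the set of the K training points closest to $x_q$, $y_i$ is the class of point $x_i$, and $\mathbb{1}[y_i = c]$ is 1 when $y_i = c$ and 0 otherwise.

$$
\hat{y}(x_q) = \arg\max_{c \in \{\text{Red}, \text{Green}, \text{Yellow}\}} \sum_{x_i \in N_K(x_q)} \mathbb{1}[y_i = c]
$$

When two classes reach the same maximum count, the arg max is not unique, which is exactly the tie that appears with K = 4 in the walkthrough.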

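As observed above, an even K can produce ties, so it is generally good to choose an odd K. With two classes, an odd K rules out ties entirely; with three or more classes (as in this example), ties are still possible, for instance K = 3 with one vote for each class.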
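One way to check the tie rule is to enumerate every possible set of K neighbor labels. The sketch below (the helper `tie_possible` is hypothetical) shows that with two classes an odd K always yields a unique winner, while with three classes, as in this example, K = 3 can still split one vote per class.

```python
from collections import Counter
from itertools import product


def tie_possible(k, classes):
    """Return True if some assignment of k neighbor labels ties for the most votes."""
    for labels in product(classes, repeat=k):
        counts = Counter(labels).most_common()
        # A tie means the second-ranked class has as many votes as the first
        if len(counts) > 1 and counts[0][1] == counts[1][1]:
            return True
    return False


two_classes = ["Red", "Yellow"]
three_classes = ["Red", "Green", "Yellow"]
for k in (3, 4, 5):
    print(k, tie_possible(k, two_classes), tie_possible(k, three_classes))
```

Brute-force enumeration grows as (number of classes)^K, so this is only meant for small illustrative values of K.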

Summary so far:

Calculate the distance between all the points and the new point.

Sort these distances in ascending order.

Pick the K smallest distances (K is usually an odd number).

Based on majority voting, decide which class the new point belongs to.
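The four summary steps fit into one short function. This is a minimal sketch, not the author's implementation: it assumes Euclidean distance, uses a hypothetical function name `knn_predict`, and runs on made-up two-feature data.

```python
import math
from collections import Counter


def knn_predict(train, xq, k=3):
    """Classify xq by majority vote among its k nearest training points.

    train: list of (features, label) pairs, where features is a tuple of numbers.
    Assumes Euclidean distance. Returns a list of labels: one label if the vote
    has a clear winner, several if the top vote is tied.
    """
    # Step 1: distance between every training point and the new point
    distances = [(math.dist(features, xq), label) for features, label in train]
    # Step 2: sort the distances in ascending order
    distances.sort(key=lambda pair: pair[0])
    # Step 3: keep the labels of the k nearest neighbors
    nearest_labels = [label for _, label in distances[:k]]
    # Step 4: majority vote; keep every label that shares the top count
    counts = Counter(nearest_labels)
    top = max(counts.values())
    return [label for label, count in counts.items() if count == top]


# Hypothetical training data: ((feature_1, feature_2), label)
train = [
    ((1.0, 1.0), "Red"),
    ((1.5, 2.0), "Red"),
    ((5.0, 5.0), "Green"),
    ((6.0, 5.5), "Green"),
    ((3.0, 3.2), "Yellow"),
    ((3.4, 2.9), "Yellow"),
]

print(knn_predict(train, (3.2, 3.0), k=3))  # ['Yellow']
```

Returning a list instead of a single label is a design choice for this sketch: it makes a tied vote visible rather than silently picking one class.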

Let's continue in KNN Blog 2, where we'll cover:

When not to use KNN

Which Distance Metric to Use (Euclidean or Manhattan distance)

Issues with KNN

Handling imbalanced data

Practical implementation of KNN


Written by

Manyue javvadi


Business Undergraduate | Ex-Software Engineer | Machine Learning Student | Interested in NLP | Creating New NLP Product for Retail and Hospitality Industry.