Load Balancers

Udai Chauhan

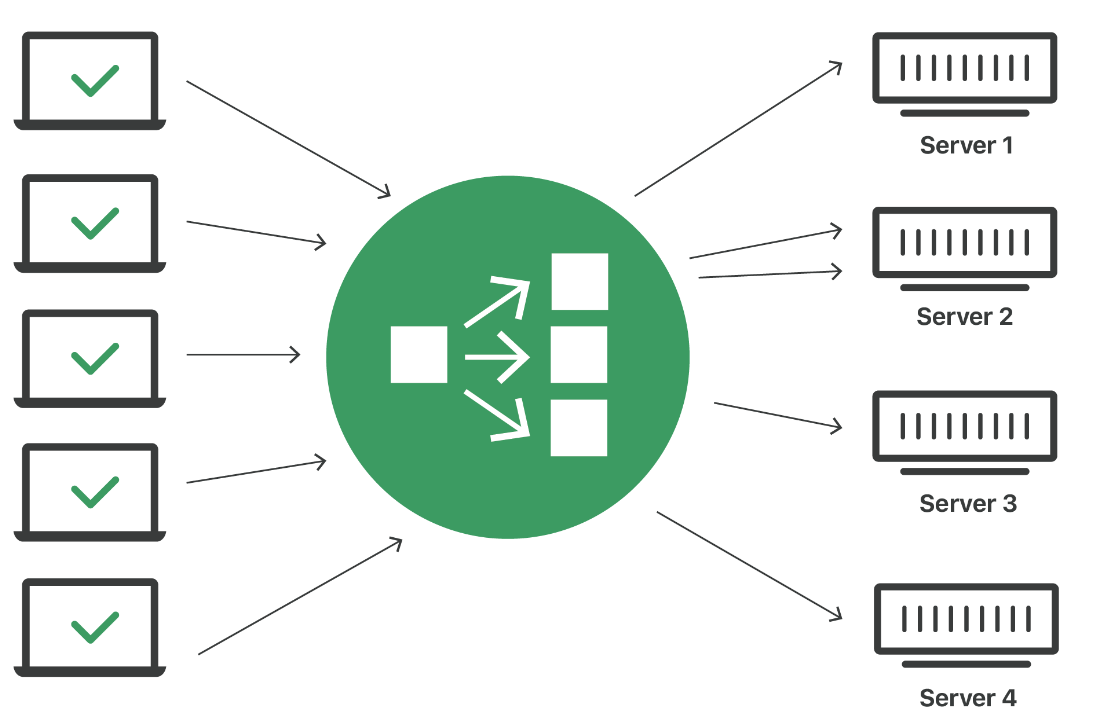

A load balancer is a networking device or application that distributes incoming traffic across a group of servers so that no single server is overloaded and the system performs efficiently.

In simple terms, think of a team leader whose job is to distribute the work among the team members so that no single person carries too much of the workload.

Load balancing is the process of efficient distribution of network traffic (requests by users) across all the nodes (servers) in a distributed system.

Roles of Load Balancers

Load distribution. Traffic is spread as evenly as possible across the nodes.

Health checks. If a node is not operational, the request is passed to another node that is up and running and ready to serve it.

Load balancers help achieve high scalability, high throughput (throughput means how much data flows per second, measured in bits/sec), and high availability.
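The health-check role described above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular load balancer implements it: the `Node` class, the `pick_healthy` function, and the node names are hypothetical, and the `healthy` flag is assumed to be updated elsewhere by a periodic probe.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True  # assumed to be set by a periodic probe (not shown here)


def pick_healthy(nodes: list[Node], start: int = 0) -> Node:
    """Return the first healthy node at or after index `start`, wrapping around."""
    for offset in range(len(nodes)):
        node = nodes[(start + offset) % len(nodes)]
        if node.healthy:
            return node
    raise RuntimeError("no healthy nodes available")


nodes = [Node("app-1"), Node("app-2"), Node("app-3")]
nodes[0].healthy = False      # simulate app-1 failing its health check
chosen = pick_healthy(nodes)  # the request skips app-1 and goes to app-2
print(chosen.name)
```

The request is simply passed along to the next node that is up, which is the behaviour the health-check role describes.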

When to use and when not to use it?

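In a monolithic or vertically scaled system, where all the resources of the application live on one machine, we don't need it, because there is only one server to send traffic to. But once the application runs on several servers, as in a Microservices Architecture, we need it.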

Challenges of Load Balancers

Single Point of Failure (SPoF): If the load balancer itself malfunctions, the communication between the client and the servers is broken. To solve this issue, we can use Redundancy.

Now you may have a question: what the hell is Redundancy? Chill, I will explain.

Redundancy is simply the duplication of nodes or components so that when a node or component fails, its duplicate is available to replace it and serve the incoming requests (traffic).

Redundancy is of 2 types:

Active Redundancy: Every unit is operating (active) and responding to requests. Multiple nodes are connected to the load balancer, and each unit receives an equal share of the load.

Passive Redundancy: One node is active (operational) while the other duplicate nodes stand by and do not serve traffic. When a failure occurs on the main node, a passive node takes over and maintains availability by becoming the active node.

The same idea applies to the load balancer itself: the system can have an active load balancer and one passive load balancer.
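Here is a rough sketch of the active/passive idea applied to a pair of load balancers. The class name `LoadBalancerPair`, the names `lb-primary` and `lb-standby`, and the `active_is_up` flag are all made up for illustration. In a real setup the "is the active one up?" signal would come from some heartbeat mechanism, which is not shown here.

```python
class LoadBalancerPair:
    """Hypothetical active/passive pair: only the active unit receives traffic."""

    def __init__(self, active: str, passive: str) -> None:
        self.active = active
        self.passive = passive

    def route(self, active_is_up: bool) -> str:
        """Return the name of the balancer that should receive the traffic."""
        if not active_is_up:
            # Failover: the standby becomes active, the failed unit becomes standby.
            self.active, self.passive = self.passive, self.active
        return self.active


pair = LoadBalancerPair("lb-primary", "lb-standby")
first = pair.route(active_is_up=True)    # normal operation, primary serves
second = pair.route(active_is_up=False)  # primary failed, standby is promoted
print(first, second)
```

After the failover the old primary sits in the passive slot, so it can take over again later if the new active unit fails.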

Advantages of Using Load Balancers

Optimization: Load balancers improve resource utilization and lower response time, thereby optimizing the system in a high-traffic environment.

Better User Experience: The load balancer helps reduce latency and increase availability, so the user's requests go through smoothly and with fewer errors.

Prevents Downtime: Load balancers keep a record of the servers that are non-operational and send traffic only to the healthy ones, which improves reliability and prevents downtime. This also helps increase profit and productivity.

Flexibility: Load balancers can re-route traffic in case of a breakdown, and can steer traffic away from a server while it is under maintenance. This helps in avoiding traffic bottlenecks.

Scalability: When the traffic of a web application increases suddenly, the load balancer can spread the extra requests across additional physical or virtual servers to deliver the responses without disruption.

Redundancy: Load balancing also provides built-in redundancy by re-routing the traffic in case of any failure.

Load Balancing Algorithms

Round Robin (Static): Requests are allocated to the servers in rotation, one after another, going back to the first server after the last one.
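A tiny sketch of Round Robin, using `itertools.cycle` from the Python standard library. The server names and the `next_server` helper are placeholders for illustration.

```python
from itertools import cycle

servers = ["app-1", "app-2", "app-3"]  # hypothetical server names
rotation = cycle(servers)              # endlessly repeats the list in order


def next_server() -> str:
    """Return the server that should handle the next request."""
    return next(rotation)


assigned = [next_server() for _ in range(5)]
print(assigned)  # ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

Notice that the choice never looks at how busy a server is. That is why this algorithm is labelled static.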

Weighted Round Robin (Static): It is similar to Round Robin, but it is used when the servers have different capacities (some nodes have the resources to handle more traffic, and some don't). So the algo assigns more requests to the higher-capacity servers.
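One simple way to sketch Weighted Round Robin is to repeat each server in the rotation as many times as its weight. The names `big` and `small` and the weights 3 and 1 are assumptions made up for this example.

```python
from itertools import cycle

# Hypothetical weights: "big" can handle three times the traffic of "small".
weights = {"big": 3, "small": 1}

# Expand each server into the schedule as many times as its weight.
schedule = [name for name, weight in weights.items() for _ in range(weight)]
rotation = cycle(schedule)

assigned = [next(rotation) for _ in range(8)]
print(assigned.count("big"), assigned.count("small"))  # 6 2
```

This naive version sends requests to the big server in bursts of three. Some real load balancers interleave the picks more smoothly, but the ratio of requests per server follows the weights in the same way.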

IP Hash Algorithm (Static): It is used when the servers have almost equal capacity. A hash function is applied to the client's source IP, which gives an unbiased distribution of requests across the nodes. (Basically, the algo looks at the IP to decide which particular server or node the request goes to.)

Source IP Hash (Static): Combines the client's source IP address and the server's destination IP address to produce a hash key. The key is then used to determine which server gets the request.
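Both hash-based algorithms above boil down to "hash the key, then take it modulo the number of servers". Below is a minimal sketch under that assumption. The function name `pick_server`, the server names, and the example IP addresses are hypothetical. It uses `hashlib` instead of Python's built-in `hash()`, because the built-in hash of a string changes between interpreter runs.

```python
import hashlib

servers = ["app-1", "app-2", "app-3"]  # hypothetical server names


def pick_server(servers: list[str], source_ip: str, destination_ip: str = "") -> str:
    """Map an IP (or a source/destination pair) to one server, deterministically."""
    key = f"{source_ip}|{destination_ip}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# IP Hash: only the client's source IP is used as the key.
a = pick_server(servers, "203.0.113.7")
b = pick_server(servers, "203.0.113.7")

# Source IP Hash: source and destination IP are combined into one key.
c = pick_server(servers, "203.0.113.7", "198.51.100.10")
print(a == b, c in servers)  # True True
```

The same key always lands on the same server as long as the server list does not change. If a server is added or removed, many keys will map to a different server with this simple modulo approach.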

Least Connection Algorithm (Dynamic): Client requests are sent to the application server with the least number of active connections at the time the request is received. (So in simple terms, when the user makes a request, the load balancer checks which server has the fewest connections right now and sends the request to that server.)
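A small sketch of Least Connection: keep a counter of active connections per server and always pick the smallest one. The counts, the server names, and the `acquire`/`release` helpers are invented for the example.

```python
# Hypothetical snapshot of active connections per server.
active = {"app-1": 4, "app-2": 1, "app-3": 2}


def acquire(active: dict[str, int]) -> str:
    """Pick the server with the fewest active connections and count the new one."""
    server = min(active, key=lambda name: active[name])
    active[server] += 1
    return server


def release(active: dict[str, int], server: str) -> None:
    """Call this when a connection to `server` is closed."""
    active[server] -= 1


first = acquire(active)  # app-2 has only 1 connection, so it is chosen
print(first, active)     # app-2 {'app-1': 4, 'app-2': 2, 'app-3': 2}
```

Unlike Round Robin, the result depends on the live counters, so the same request could go to a different server a moment later.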

Least Response Time (Dynamic): The request is sent to the server that currently has the lowest response time.
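Least Response Time can be sketched by remembering how long each server has been taking and choosing the fastest. The class `ResponseTimeTracker`, the smoothing factor `alpha`, and the millisecond values are all assumptions for illustration. A moving average is just one possible way to track "response time".

```python
class ResponseTimeTracker:
    """Hypothetical tracker that keeps a moving average of response times in ms."""

    def __init__(self, servers: list[str], alpha: float = 0.5) -> None:
        self.avg_ms = {name: 0.0 for name in servers}  # 0.0 means "not measured yet"
        self.alpha = alpha  # weight given to the newest measurement

    def record(self, server: str, elapsed_ms: float) -> None:
        """Fold a new measurement into the server's moving average."""
        old = self.avg_ms[server]
        if old == 0.0:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old

    def pick(self) -> str:
        """Return the server with the lowest average response time."""
        return min(self.avg_ms, key=lambda name: self.avg_ms[name])


tracker = ResponseTimeTracker(["app-1", "app-2", "app-3"])
tracker.record("app-1", 120.0)
tracker.record("app-2", 40.0)
tracker.record("app-3", 80.0)
fastest = tracker.pick()        # app-2, with 40 ms

tracker.record("app-2", 200.0)  # app-2 slows down: average becomes 120 ms
fastest_now = tracker.pick()    # app-3, with 80 ms
print(fastest, fastest_now)
```

One side effect of this sketch is that a server with no measurements yet (average 0.0) is picked first, so every server gets measured at least once.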

Static: The algos marked as static follow a predefined rule. They do exactly what is written in the algo and do not look at the current state of the servers.

Dynamic: These algorithms compute at runtime, checking things like which server has the fewest connections or the lowest response time.

I hope this article helps you to understand the load balancer and its application.

Thank You!!! See You Soon. Adios Amigo.

Written by

Udai Chauhan

I am a passionate software developer. Well-versed with Frontend tech stacks - HTML, CSS, JS, and many more.