Load Balancing

Prajakta Khatri


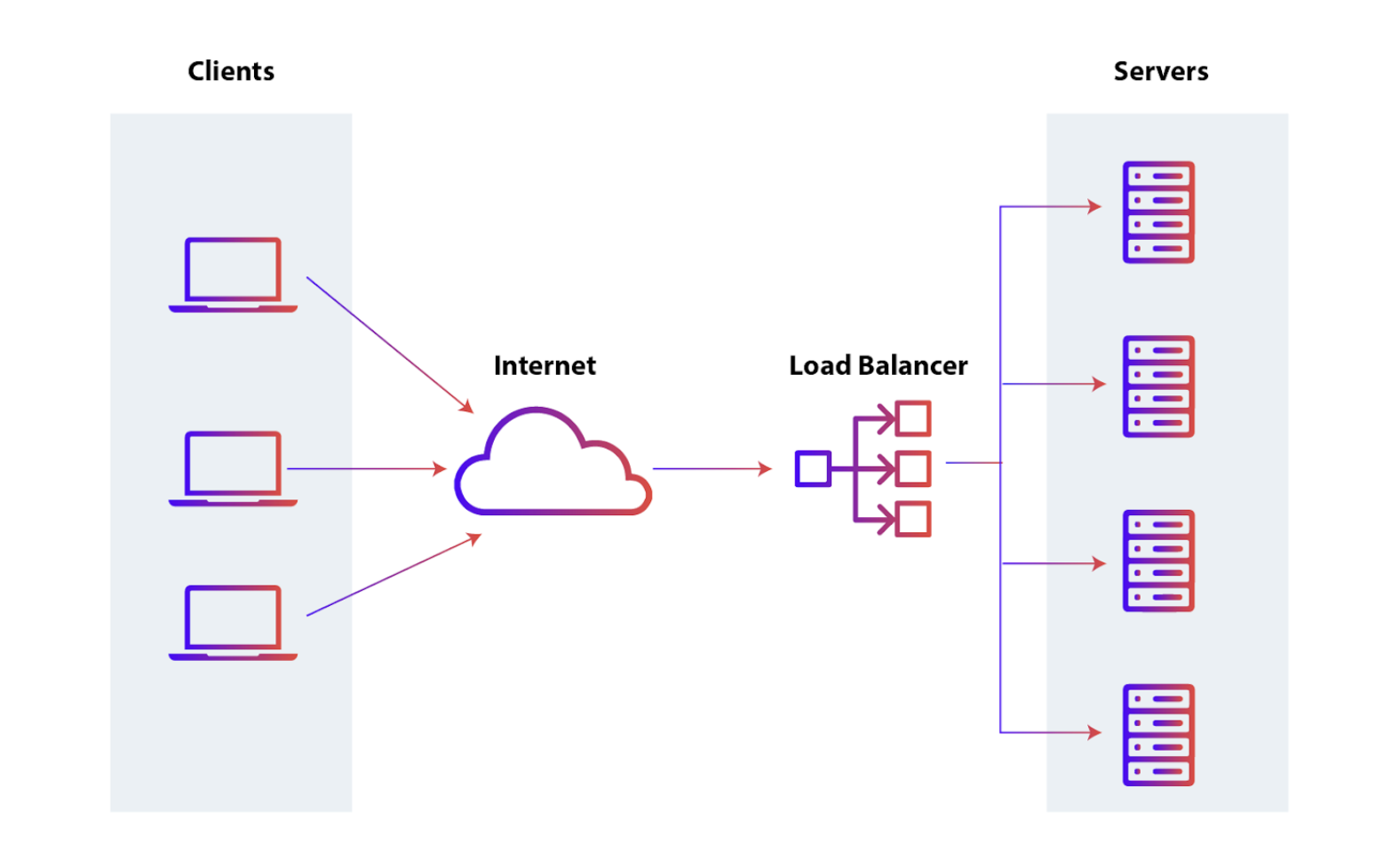

A load balancer is a critical component of a distributed system. It distributes the load (incoming requests or data traffic) across multiple servers, machines, or database servers. It tracks the status of each server in the system and, based on their responses, spreads the load evenly so that no single server is overwhelmed.

What will happen if there is NO Load Balancer?

If an application runs on a single server and clients connect to that server directly, without a load balancer, two key issues arise:

Single Point of Failure: If the server goes down or encounters any issue, the entire application goes down, making it unavailable to users until the server recovers. This leads to a poor user experience, which is unacceptable for service providers.

Overloaded Servers: A web server can handle only a limited number of requests. As the business grows and traffic increases, the server becomes overloaded. To address this, additional servers need to be introduced, and requests must be distributed across this cluster of servers.

Benefits of using a Load Balancer

Prevents Downtime: Distributes traffic efficiently so that users experience continuous service without downtime.

Scalability: With auto-scaling, more servers are added during high traffic, and the load balancer adjusts the distribution.

Manages Failures: Isolates unhealthy servers from the load distribution to prevent service disruption.

Avoids Traffic Bottlenecks: Monitors traffic, anticipates spikes, and warns when action (such as adding capacity) is needed.

Efficient Resource Utilisation: Distributes tasks across servers to balance the workload.

Session Persistence: Maintains user sessions across servers for applications needing stateful communication.

High Availability: Improves availability by redirecting traffic to healthy servers during failures.

Fault Tolerance: Ensures service continuity by rerouting traffic from failed servers.

SSL Termination: Handles SSL/TLS encryption and decryption on behalf of the backend servers, freeing their resources for application work.
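Several of the benefits above (Manages Failures, High Availability, Fault Tolerance) rest on the same mechanism: periodically checking each server and leaving failed ones out of the rotation. Below is a minimal Python sketch of that idea. The `probe` function is an assumption standing in for a real health check (for example, an HTTP request to a health endpoint), and `Backend` and `HealthAwarePool` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Backend:
    name: str
    healthy: bool = True  # updated by health checks


@dataclass
class HealthAwarePool:
    backends: list[Backend]
    cursor: int = 0  # round-robin position in the backend list

    def run_health_checks(self, probe: Callable[[Backend], bool]) -> None:
        # Mark each backend healthy or unhealthy according to the probe result.
        for backend in self.backends:
            backend.healthy = probe(backend)

    def pick(self) -> Backend:
        # Try each backend at most once per call, skipping unhealthy ones.
        n = len(self.backends)
        for _ in range(n):
            backend = self.backends[self.cursor % n]
            self.cursor += 1
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


pool = HealthAwarePool([Backend("a"), Backend("b"), Backend("c")])
pool.run_health_checks(lambda b: b.name != "b")  # pretend "b" failed its check
print([pool.pick().name for _ in range(4)])  # ['a', 'c', 'a', 'c']
```

Once "b" passes its checks again, the next `run_health_checks` call puts it back into rotation automatically.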

Workload distribution

A load balancer offers several key variations in how it distributes traffic:

Host-based: Distributes requests based on the requested hostname.

Path-based: Uses the entire URL, including the path, to distribute requests, rather than just the hostname.

Content-based: Inspects the message content of a request. This allows distribution based on content such as the value of a parameter.
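The three strategies can be sketched in Python as follows. The hostnames, pool names, and the `region` query parameter are hypothetical placeholders. A real load balancer would read such rules from its configuration, and content-based routing may also inspect headers or the request body rather than only the query string.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical routing rules; hostnames and pool names are placeholders.
HOST_RULES = {"api.example.com": "api-pool", "shop.example.com": "shop-pool"}
PATH_RULES = [("/images/", "static-pool"), ("/checkout/", "payments-pool")]


def route_by_host(url: str) -> str:
    # Host-based: only the hostname is considered.
    return HOST_RULES.get(urlsplit(url).hostname or "", "default-pool")


def route_by_path(url: str) -> str:
    # Path-based: the path part of the URL is matched against prefixes, in order.
    path = urlsplit(url).path
    for prefix, pool in PATH_RULES:
        if path.startswith(prefix):
            return pool
    return "default-pool"


def route_by_content(url: str) -> str:
    # Content-based: the value of a request parameter decides the pool.
    params = parse_qs(urlsplit(url).query)
    region = params.get("region", [""])[0]
    return "eu-pool" if region == "eu" else "default-pool"


print(route_by_host("https://api.example.com/v1/users"))          # api-pool
print(route_by_path("https://www.example.com/images/logo.png"))   # static-pool
print(route_by_content("https://www.example.com/search?region=eu"))  # eu-pool
```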

Layers

Layer 4 (Transport Layer)

This is the simplest form of load balancing, where decisions are made based on network information such as IP addresses and TCP/UDP port numbers.

The load balancer routes traffic based on the source and destination IP addresses and ports but does not inspect the actual content of the requests.

Layer 4 load balancers are often dedicated hardware devices that can operate at high speed.

Layer 7 (Application Layer)

At this layer, load balancing is done based on the content of the application-level protocols (e.g., HTTP, HTTPS).

The load balancer can inspect headers, cookies, paths, and the content of the requests to make more intelligent routing decisions. It can route requests based on URL patterns, HTTP methods, or specific application data.

Key Differences

Layer 4 load balancers are faster and more efficient as they work with less data, but they offer less fine-grained control.

Layer 7 load balancers are more flexible and capable of routing based on complex rules, but they may introduce more latency due to the additional processing required to inspect content.
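To make the difference concrete, here is a hypothetical Python sketch of the information each layer works with. The Layer 4 function sees only addresses and ports and hashes them; the Layer 7 function parses cookies, the HTTP method, and the path. The server addresses and routing rules are invented for illustration.

```python
import hashlib
from http.cookies import SimpleCookie

SERVERS = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]  # hypothetical backend addresses


def l4_pick(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> str:
    # Layer 4: only addresses and ports are available; the payload is never read.
    # Hashing the connection tuple keeps every packet of one connection on one server.
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return SERVERS[int.from_bytes(digest[:8], "big") % len(SERVERS)]


def l7_pick(method: str, path: str, headers: dict[str, str]) -> str:
    # Layer 7: the HTTP request has been parsed, so cookies, methods and paths can drive routing.
    cookies = SimpleCookie(headers.get("Cookie", ""))
    if "beta" in cookies and cookies["beta"].value == "1":
        return "10.0.0.3"  # hypothetical server running a beta release
    if method == "POST" and path.startswith("/upload"):
        return "10.0.0.2"  # hypothetical server sized for uploads
    return "10.0.0.1"
```

The extra work in `l7_pick` (parsing cookies and paths) is exactly the additional processing that makes Layer 7 balancing more flexible but slower.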

Types of Load Balancers

Software Load Balancers

They are software applications that can run on standard servers or virtual machines.

They are flexible, easy to scale, and cost-effective, but may have lower performance than hardware solutions.

Examples: HAProxy, NGINX, Apache Traffic Server.

Hardware Load Balancers

They are dedicated hardware devices that handle load balancing.

They provide high performance and low latency, making them suitable for high-traffic environments, but they are expensive, harder to scale, and less flexible.

Examples: F5 Networks, Citrix ADC.

Cloud-based Load Balancers

They are managed load balancing services provided by cloud providers.

They are highly scalable, fully managed, easy to integrate into cloud environments, and offer pay-as-you-go pricing, but they allow only limited customisation.

Examples: AWS Elastic Load Balancing (ELB), Azure Load Balancer, Google Cloud Load Balancing.

DNS-based Load Balancers

They use DNS resolution to distribute traffic across multiple servers.

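They are a simple, low-cost solution for basic traffic distribution. However, plain DNS-based balancing (such as basic DNS round robin) has no health checks, and because resolvers and clients cache DNS answers until their TTL expires, changes take effect slowly, which makes it unsuitable for real-time applications.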
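A minimal sketch of DNS round robin, assuming a toy authoritative server that rotates its list of A records on every query so that successive clients try a different address first. It also illustrates the missing health checks: a dead address keeps being handed out. `RoundRobinDNS` is a hypothetical name, and the IPs come from the 192.0.2.0/24 documentation range.

```python
from collections import deque


class RoundRobinDNS:
    """Toy DNS-style balancer that rotates the A-record list on each query."""

    def __init__(self, records: dict[str, list[str]]):
        self._records = {name: deque(ips) for name, ips in records.items()}

    def resolve(self, name: str) -> list[str]:
        ips = self._records[name]
        answer = list(ips)  # clients usually try the first address in the answer
        ips.rotate(-1)      # the next query sees a different first address
        return answer       # note: no check that any of these servers is alive


dns = RoundRobinDNS({"www.example.com": ["192.0.2.1", "192.0.2.2", "192.0.2.3"]})
print([dns.resolve("www.example.com")[0] for _ in range(4)])
# ['192.0.2.1', '192.0.2.2', '192.0.2.3', '192.0.2.1']
```

A client that caches the first answer keeps using `192.0.2.1` until the TTL expires, regardless of how the rotation continues.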

Examples: AWS Route 53, NS1.

Global Server Load Balancers (GSLB)

They distribute traffic across servers located in different geographical regions.

They provide high availability, low latency across regions, and disaster recovery, but they are complex to set up and manage.
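One simple GSLB policy can be sketched as: send the client to the healthy region with the lowest measured latency, and fall back to the next-best region when one fails. The region names and latency figures below are hypothetical; real GSLB services typically offer other policies as well, such as geolocation or weighted routing.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    latency_ms: float    # measured latency from the client's location (hypothetical)
    healthy: bool = True


def pick_region(regions: list[Region]) -> Region:
    # Lowest-latency healthy region wins; failed regions are skipped (disaster recovery).
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: r.latency_ms)


regions = [Region("eu-west", 20.0), Region("us-east", 90.0), Region("ap-south", 180.0)]
print(pick_region(regions).name)  # eu-west
regions[0].healthy = False        # simulate a regional outage
print(pick_region(regions).name)  # us-east
```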

Examples: Cloudflare Load Balancing, AWS Route 53.

Routing Algorithms

Least Connections

A new request is sent to the server that currently has the fewest active connections. The server's computing power is also considered when deciding which one has the lightest load.
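A minimal Python sketch of least connections, with a per-server `weight` standing in for the computing power mentioned above (equal weights reduce it to plain least connections). The caller is assumed to call `release` when a connection closes; `Server`, `least_connections`, and `release` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active: int = 0  # currently open connections
    weight: int = 1  # relative computing power; equal weights give plain least connections


def least_connections(servers: list[Server]) -> Server:
    # Choose the server with the fewest active connections per unit of capacity.
    chosen = min(servers, key=lambda s: s.active / s.weight)
    chosen.active += 1  # the new request opens a connection on the chosen server
    return chosen


def release(server: Server) -> None:
    # Call when a connection to `server` closes.
    server.active -= 1


servers = [Server("a", active=4), Server("b", active=6, weight=2), Server("c", active=5)]
print(least_connections(servers).name)  # b (6 / 2 = 3 is the lowest load per unit)
```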

Least Bandwidth

This method measures traffic in megabits per second (Mbps), sending client requests to the server with the least Mbps of traffic.
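Least bandwidth needs a running measurement of each server's traffic. The sketch below assumes a sliding window (10 seconds by default, an arbitrary choice) over the bytes sent to each server. `BandwidthTracker` and its methods are hypothetical, and the optional `now` argument exists only to make the example deterministic.

```python
import time
from collections import deque
from typing import Optional


class BandwidthTracker:
    """Tracks bytes sent per server over a sliding time window."""

    def __init__(self, servers: list[str], window_s: float = 10.0):
        self.window_s = window_s
        self.samples = {s: deque() for s in servers}  # (timestamp, bytes) pairs

    def record(self, server: str, nbytes: int, now: Optional[float] = None) -> None:
        ts = time.monotonic() if now is None else now
        self.samples[server].append((ts, nbytes))

    def mbps(self, server: str, now: Optional[float] = None) -> float:
        now = time.monotonic() if now is None else now
        q = self.samples[server]
        while q and q[0][0] < now - self.window_s:  # drop samples older than the window
            q.popleft()
        return sum(b for _, b in q) * 8 / 1_000_000 / self.window_s

    def pick(self, now: Optional[float] = None) -> str:
        # Least bandwidth: the server currently carrying the fewest Mbps gets the request.
        return min(self.samples, key=lambda s: self.mbps(s, now))
```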

Least Response Time

Issue the request to the machine taking the least amount of time to return a response.
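Individual response times are noisy, so a practical sketch of least response time smooths them. The Python example below assumes an exponentially weighted moving average (EWMA) with `alpha = 0.3`, an arbitrary smoothing factor, and tries servers with no measurements yet first. `LeastResponseTime` is a hypothetical name.

```python
from typing import Optional


class LeastResponseTime:
    def __init__(self, servers: list[str], alpha: float = 0.3):
        self.alpha = alpha  # weight given to the newest sample (assumed value)
        self.avg_ms: dict[str, Optional[float]] = {s: None for s in servers}

    def observe(self, server: str, elapsed_ms: float) -> None:
        # Blend the new measurement into the server's moving average.
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = self.alpha * elapsed_ms + (1 - self.alpha) * old

    def pick(self) -> str:
        # Servers without measurements count as 0 ms, so they are tried first.
        return min(self.avg_ms, key=lambda s: self.avg_ms[s] or 0.0)
```

With `alpha = 0.3`, one slow response raises a server's average without immediately wiping out its history.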

Round Robin

Distribute requests to the servers in turn, cycling back to the first server after the last, so that each server receives an equal share.
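Round robin needs nothing more than a cursor that wraps around the server list. A minimal Python sketch using `itertools.cycle`, with hypothetical server names:

```python
from itertools import cycle


class RoundRobin:
    def __init__(self, servers: list[str]):
        self._next_server = cycle(servers)  # yields servers in order, wrapping around forever

    def pick(self) -> str:
        return next(self._next_server)


rr = RoundRobin(["server-1", "server-2", "server-3"])
print([rr.pick() for _ in range(5)])
# ['server-1', 'server-2', 'server-3', 'server-1', 'server-2']
```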

Weighted Round Robin

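Distribute requests in turn as in round robin, but give each server a weight based on its resource capacity, so that servers with higher weights receive proportionally more requests.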
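Weighted round robin can be implemented in several ways. The sketch below uses the "smooth" variant, which interleaves picks instead of sending a burst of consecutive requests to the heaviest server: on each pick, every server adds its weight to a running score, the highest score wins, and the winner subtracts the total weight. The server names and weights are hypothetical capacity ratings.

```python
class SmoothWeightedRoundRobin:
    """A server with weight w is chosen w times in every sum(weights) picks."""

    def __init__(self, weights: dict[str, int]):
        self.weights = weights
        self.current = {s: 0 for s in weights}  # running score per server
        self.total = sum(weights.values())

    def pick(self) -> str:
        for server, weight in self.weights.items():
            self.current[server] += weight        # every server accumulates its weight
        chosen = max(self.current, key=self.current.__getitem__)
        self.current[chosen] -= self.total        # the winner pays back the total
        return chosen


wrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([wrr.pick() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

After one full cycle of seven picks, server "a" has received five requests and "b" and "c" one each, and all scores are back at zero.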

Hashing

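Distributes requests based on a key we define, such as the client IP address or the request URL. As long as the set of servers does not change, the same key always maps to the same server, which is useful for session persistence.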
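A minimal sketch of hash-based selection in Python. It uses `hashlib.sha256` rather than the built-in `hash()`, because Python randomises string hashes per process, which would break the "same key, same server" property across restarts or across several load balancer instances. The function name and server list are hypothetical.

```python
import hashlib


def pick_by_hash(key: str, servers: list[str]) -> str:
    # A stable digest of the key, reduced modulo the number of servers.
    digest = hashlib.sha256(key.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["s1", "s2", "s3"]
print(pick_by_hash("203.0.113.7", servers))  # same client IP, same server every time
```

Taking the hash modulo the number of servers remaps most keys when a server is added or removed; consistent hashing is the usual technique for reducing that churn.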
