Caching

Prajakta Khatri

Caching is a common technique for keeping data closer to where it is consumed, which reduces response times compared with fetching it from its original source. It involves storing frequently accessed data in a location that can be read quickly and easily.

The main purpose of caching is to enhance system performance by minimizing latency, reducing the load on the primary data store, and enabling quicker data retrieval.

Why use caching?

Improved Performance: Caching significantly reduces the time needed to access frequently used data, boosting overall system speed and responsiveness.

Reduced Load on Original Source: Because repeated requests are served from the cache, fewer requests reach the original source, which improves its scalability and reliability.

Cost Savings: By easing the load on backend infrastructure, caching helps lower operational costs.

Better User Experience: Faster response times from cached data lead to a better user experience, especially for web and mobile applications.

How much data should be stored in the cache?

While caching offers many advantages, it’s not practical to store all data in cache memory for faster access. There are several reasons for this:

Cache memory (typically RAM) is significantly more expensive per gigabyte than the disk storage used by databases.

Storing large amounts of data in the cache can slow down search times.

The cache should contain only the most relevant and frequently requested data, so that future accesses benefit the most.

80/20 rule

The 80/20 rule is a common rule of thumb for deciding what to cache: roughly 20% of objects receive 80% of the requests. Following this rule, caching about 20% of the data can reduce latency for about 80% of requests.
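As a rough illustration of the arithmetic, the sketch below estimates how much memory it takes to cache the hot 20% of one day's requests. The workload numbers (100 million reads per day, 500-byte objects) and the function name `estimate_cache_bytes` are hypothetical, and the estimate pessimistically treats every request as reading a distinct object.

```python
def estimate_cache_bytes(daily_requests: int, avg_object_bytes: int, hot_percent: int = 20) -> int:
    """Bytes needed to cache the 'hot' share of one day's requested data.

    Pessimistic assumption: every request reads a distinct object.
    Integer arithmetic keeps the result exact.
    """
    return daily_requests * avg_object_bytes * hot_percent // 100


size = estimate_cache_bytes(daily_requests=100_000_000, avg_object_bytes=500)
print(f"{size / 10**9:.1f} GB")  # 10.0 GB
```

Real workloads read many of the same objects repeatedly, so this figure is closer to an upper bound than a typical requirement.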

Where can a cache be added?

Caching is prevalent across nearly every layer of computing.

In hardware, for instance, there are multiple levels of cache memory: the CPU first checks its level 1 (L1) cache, then its level 2 (L2) cache (many processors also have an L3 cache), and finally main memory (RAM).

Operating systems also implement caching, such as for kernel extensions or application files.

Similarly, web browsers use caching to reduce website load times.

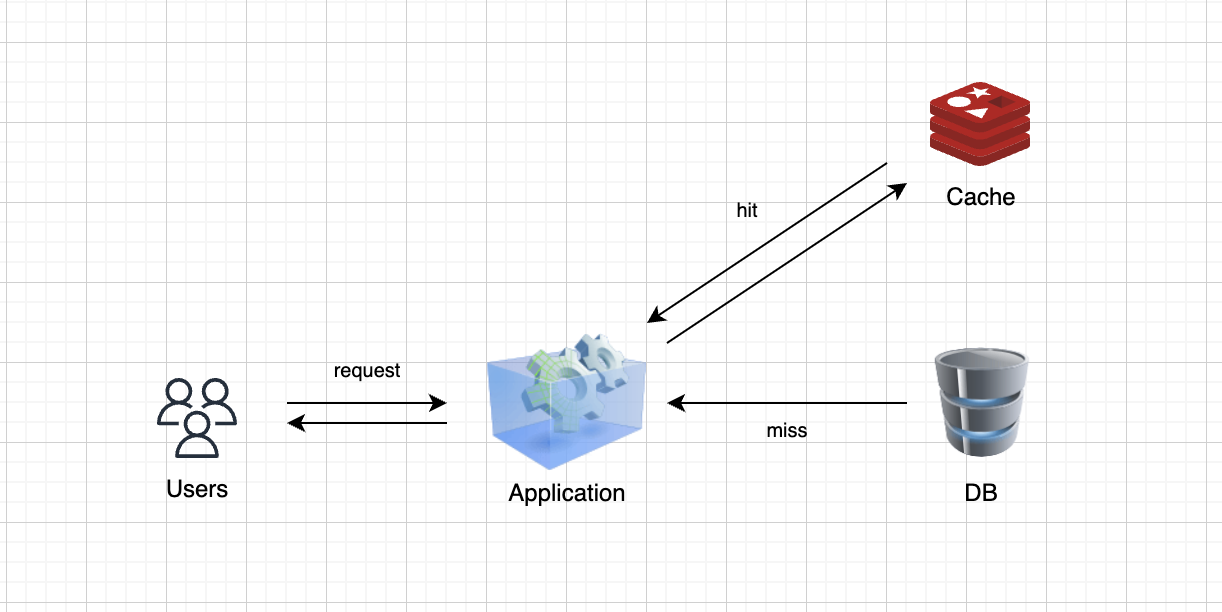

Cache Hit & Cache Miss

Cache Hit: The requested data is found in the cache and is served directly from it.

Cache Miss: The requested data is not in the cache, so the request goes to the database to fetch it.
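A minimal sketch of a read path that counts hits and misses, assuming plain Python dictionaries stand in for the cache and the database (the names `database`, `cache`, `stats`, and `read` are illustrative, not from any library). In this pattern, often called cache-aside, the application checks the cache first and loads from the database only on a miss.

```python
from typing import Dict, Optional

# Hypothetical in-process stand-ins for a database and a cache.
database: Dict[str, str] = {"user:1": "Alice", "user:2": "Bob"}
cache: Dict[str, str] = {}
stats = {"hits": 0, "misses": 0}


def read(key: str) -> Optional[str]:
    if key in cache:               # cache hit: serve directly from the cache
        stats["hits"] += 1
        return cache[key]
    stats["misses"] += 1           # cache miss: fall back to the database
    value = database.get(key)
    if value is not None:
        cache[key] = value         # populate the cache for later reads
    return value


read("user:1")  # miss: loaded from the database
read("user:1")  # hit: served from the cache
print(stats)    # {'hits': 1, 'misses': 1}
```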

Cache Invalidation

Cache invalidation is the process by which a computer system marks cache entries as invalid and either removes or replaces them. When data is modified, it must be invalidated in the cache; failure to do so may lead to inconsistent behavior in the application.

There are three common write strategies that determine how the cache and the database are kept in sync:

Write Around Cache

Writes go directly to the database, and the cache is populated only when the data is read from the database for the first time (on a cache miss).

Pros: Writes are faster than with a write-through cache but slower than with a write-back cache. This approach works well for write-intensive applications, especially when newly written data is not read back right away.

Cons: The cache may return stale data if a database update has not yet invalidated the corresponding cache entry. In addition, the first read of newly written data is always a cache miss.
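A minimal write-around sketch, assuming dictionaries for both stores (all names are illustrative). Writes skip the cache, and the cache is filled on the first read. This sketch also deletes any cached copy on write; if that invalidation is missing or delayed, the stale-data problem described in the Cons appears.

```python
from typing import Dict, Optional

database: Dict[str, str] = {}
cache: Dict[str, str] = {}


def write(key: str, value: str) -> None:
    # Write-around: the write goes only to the database, bypassing the cache.
    database[key] = value
    # Drop any cached copy so later reads do not see the old value.
    cache.pop(key, None)


def read(key: str) -> Optional[str]:
    if key in cache:
        return cache[key]
    value = database.get(key)  # miss: load from the database ...
    if value is not None:
        cache[key] = value     # ... and populate the cache on first read
    return value


write("price:42", "10.00")
print("price:42" in cache)     # False: the write did not touch the cache
print(read("price:42"))        # 10.00 (cache miss, now cached)
```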

Write Back Cache

During a write operation, the data is written to the cache and a success response is returned immediately. The database is updated asynchronously afterwards. Reads are served from the cache, and on a cache miss the request goes to the database.

Pros: Writes are the fastest of the three strategies, since they complete as soon as the cache is updated.

Cons: Data can be lost if the caching layer crashes before pending writes reach the database. This risk can be reduced by requiring more than one cache replica to acknowledge each write.
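A minimal write-back sketch, assuming dictionaries for both stores and a hypothetical `flush` function that a real system would run asynchronously (for example, on a timer or when entries are evicted). Until `flush` runs, the new value exists only in the cache, which is the data-loss window described in the Cons.

```python
from typing import Dict, Optional, Set

database: Dict[str, str] = {}
cache: Dict[str, str] = {}
dirty: Set[str] = set()  # keys written to the cache but not yet persisted


def write(key: str, value: str) -> None:
    # Write-back: update only the cache and acknowledge immediately.
    cache[key] = value
    dirty.add(key)


def flush() -> None:
    # Persist pending writes to the database (run in the background in practice).
    for key in list(dirty):
        database[key] = cache[key]
    dirty.clear()


def read(key: str) -> Optional[str]:
    if key in cache:
        return cache[key]
    value = database.get(key)
    if value is not None:
        cache[key] = value
    return value


write("order:7", "paid")
print(database.get("order:7"))  # None: not persisted yet, lost if the cache crashes now
flush()
print(database.get("order:7"))  # paid
```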

Write Through Cache

The write operation occurs in both the database and the cache before sending a success response to the user for the write request. Reads are performed from the cache, and if there is a cache miss, the call is directed to the database.

Pros: Recently written data is already in the cache, so reads of it are fast, and because the cache and the database are updated together, stale data is not returned.

Cons: Writes are slower, because every write must complete in both layers before it is acknowledged.
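A minimal write-through sketch, again with dictionaries standing in for the database and the cache (all names are illustrative). The write updates both stores before returning, which is why a subsequent read of the same key is a cache hit.

```python
from typing import Dict, Optional

database: Dict[str, str] = {}
cache: Dict[str, str] = {}


def write(key: str, value: str) -> None:
    # Write-through: update the database, then the cache, and only then
    # report success. Both steps add to write latency.
    database[key] = value
    cache[key] = value


def read(key: str) -> Optional[str]:
    if key in cache:
        return cache[key]
    value = database.get(key)
    if value is not None:
        cache[key] = value
    return value


write("session:9", "active")
print(read("session:9"))  # active, served from the cache without a database read
```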

Types of Caching

In-Memory Cache

In-memory caching is a technique where data is temporarily stored in the system's RAM (random-access memory) for faster access.

Instead of retrieving frequently accessed data from slower storage systems (like databases or disk-based storage), in-memory caching allows that data to be fetched directly from memory.

Since RAM is significantly faster than traditional storage, this results in improved application performance and reduced latency.
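Python's standard library ships a simple in-process, in-memory cache, `functools.lru_cache`. The sketch below assumes a hypothetical slow `get_product_name` lookup; the second call with the same argument is answered from RAM instead of repeating the slow work.

```python
import time
from functools import lru_cache


@lru_cache(maxsize=1024)
def get_product_name(product_id: int) -> str:
    # Hypothetical slow lookup standing in for a database or disk read.
    time.sleep(0.1)
    return f"product-{product_id}"


get_product_name(7)  # miss: runs the slow lookup and keeps the result in memory
get_product_name(7)  # hit: returned from the in-process cache
print(get_product_name.cache_info())
# CacheInfo(hits=1, misses=1, maxsize=1024, currsize=1)
```

Because this cache lives in the process's own memory, it is fast, but it is lost when the process restarts and is not shared between application instances.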

Distributed Cache

A distributed cache is a system that pools together the random-access memory (RAM) of multiple networked computers into a single in-memory data store used as a data cache to provide fast access to data.

While caches have traditionally resided on a single physical server or hardware component, a distributed cache can grow beyond the memory limits of a single computer by linking together multiple computers.
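A toy sketch of how a distributed cache can decide which machine holds a key, assuming each node is simulated by a dictionary (`ShardedCache` is a hypothetical name). The key is hashed with a stable hash and taken modulo the number of nodes, so each key lives on exactly one node and total capacity grows with the node count.

```python
import hashlib
from typing import Dict, List, Optional


class ShardedCache:
    """Toy distributed cache: each 'node' is a dict standing in for one server's RAM."""

    def __init__(self, node_count: int) -> None:
        self.nodes: List[Dict[str, str]] = [{} for _ in range(node_count)]

    def _node_for(self, key: str) -> Dict[str, str]:
        # Use a stable hash (not Python's per-process randomized hash()) so
        # every client maps the same key to the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.nodes)
        return self.nodes[index]

    def set(self, key: str, value: str) -> None:
        self._node_for(key)[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._node_for(key).get(key)


cache = ShardedCache(node_count=3)
for i in range(9):
    cache.set(f"user:{i}", f"name-{i}")
print(cache.get("user:4"))                  # name-4
print([len(node) for node in cache.nodes])  # keys spread across the 3 nodes
```

Simple modulo placement remaps most keys when a node is added or removed; production systems commonly use consistent hashing to limit that churn.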

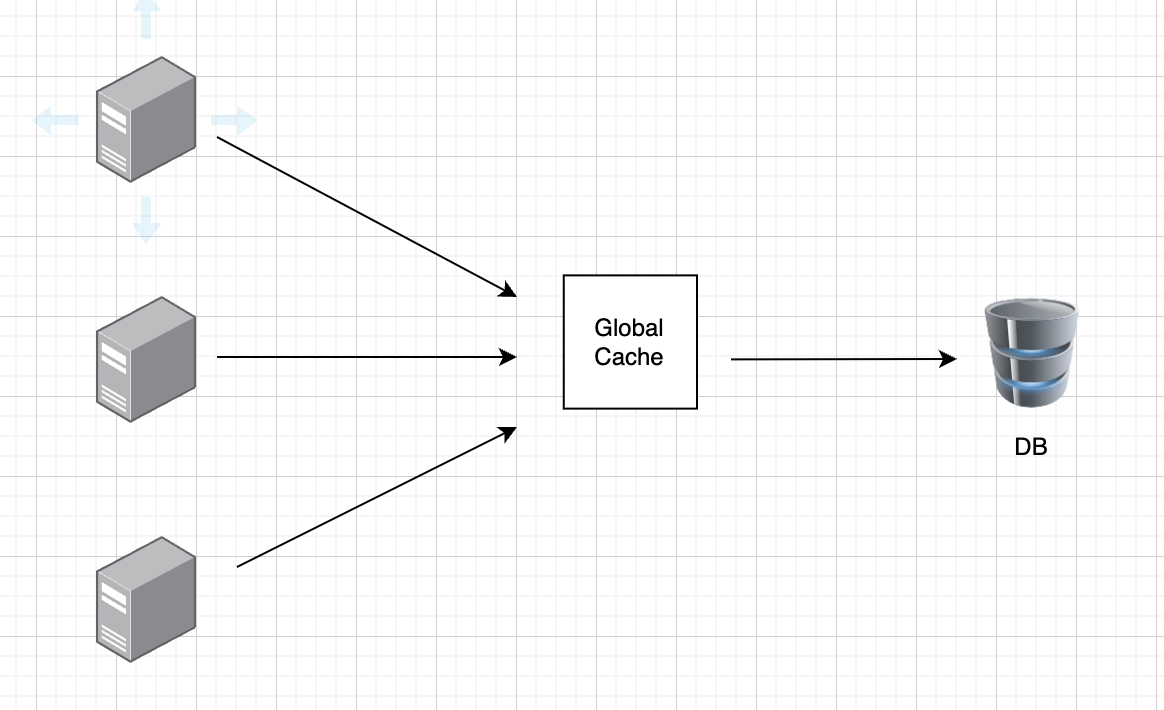

Global Cache

As the name suggests, a global cache is a single shared cache used by all application nodes.

When the requested data is not found in the global cache, the cache itself is responsible for fetching the missing data from the underlying data store.
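Having the cache, rather than the application, fetch missing data is often called read-through caching. A minimal sketch, assuming a hypothetical `loader` callback that queries the underlying data store and a dictionary standing in for the shared cache:

```python
from typing import Callable, Dict, List, Optional


class ReadThroughCache:
    """Shared cache that loads missing entries itself via a loader function."""

    def __init__(self, loader: Callable[[str], Optional[str]]) -> None:
        self._loader = loader  # hypothetical: fetches from the data store
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            value = self._loader(key)  # miss: the cache, not the caller, queries the store
            if value is None:
                return None
            self._data[key] = value
        return self._data[key]


data_store = {"config:theme": "dark"}
loads: List[str] = []


def load_from_store(key: str) -> Optional[str]:
    loads.append(key)  # record each trip to the data store
    return data_store.get(key)


global_cache = ReadThroughCache(load_from_store)  # shared by all application nodes
print(global_cache.get("config:theme"))  # dark (loaded from the store)
print(global_cache.get("config:theme"))  # dark (served from the cache)
print(loads)                             # ['config:theme']
```

A real global cache would run as a separate shared service and need to handle concurrent requests; here a single Python object plays that role.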

Eviction Strategies

An eviction strategy decides which data to remove from the cache when it is full.

Least Recently Used (LRU): LRU removes the least recently accessed data when the cache is full.

Least Frequently Used (LFU): LFU removes data that has been accessed the least number of times, under the assumption that rarely accessed data is less likely to be needed.

Most Recently Used (MRU): In contrast to LRU, MRU discards the most recently used items first.

Most Frequently Used (MFU): In contrast to LFU, MFU discards the most frequently used items first.

First In, First Out (FIFO): FIFO removes the oldest data in the cache first, regardless of how often or recently it has been accessed.

Time-to-Live (TTL): TTL is a time-based eviction policy where data is removed from the cache after a specified duration, regardless of usage.
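LRU is a common default among these policies. A minimal sketch using `collections.OrderedDict`, which keeps keys ordered by last use so the least recently used entry is always at the front (the class name `LRUCache` and the string keys and values are assumptions for illustration):

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[str, str]" = OrderedDict()  # least recent first

    def get(self, key: str) -> Optional[str]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: str) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", "1")
cache.put("b", "2")
cache.get("a")         # "a" is now the most recently used
cache.put("c", "3")    # cache is full, so "b" (least recently used) is evicted
print(cache.get("b"))  # None
print(cache.get("a"))  # 1
```

Other policies change only which entry is chosen for removal; for example, FIFO would evict from the front without reordering entries on `get`.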

When not to use caching?

Let's consider some situations where caching may not be effective:

Caching offers no benefit when accessing the cache takes as much time as retrieving data directly from the main data store.

Caching is ineffective when the data changes frequently, because cached entries become outdated almost immediately and requests must keep going back to the primary data store.
