Deadlock Prevention vs. Deadlock Avoidance: Explained with Examples and Analogies

Logeshwaran N

Logeshwaran N

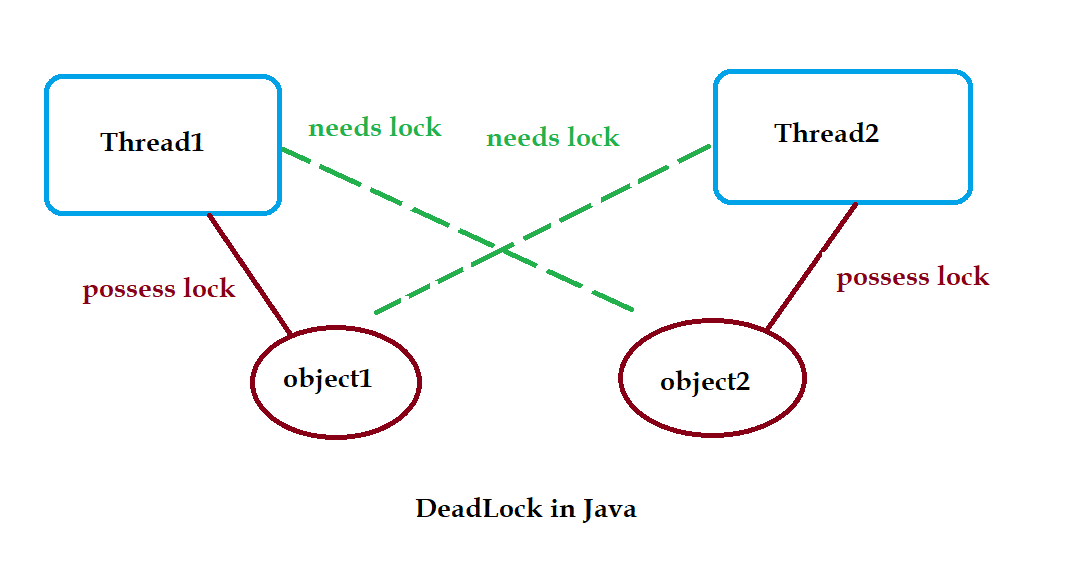

In the world of concurrent programming, deadlocks are a significant challenge. A deadlock occurs when two or more processes are unable to proceed because each is waiting for the other to release a resource. This can lead to system freezes, degraded performance, or complete application failure. Deadlock management techniques are crucial for ensuring that concurrent systems operate smoothly. Two commonly discussed strategies to deal with deadlocks are deadlock prevention and deadlock avoidance.

In this article, we will explore these two concepts, differentiate between them, and provide code examples and real-life analogies to better understand how these strategies work.

What is Deadlock?

Before diving into prevention and avoidance, let's briefly define a deadlock. Deadlock happens when:

Mutual Exclusion: At least one resource is held in a non-shareable mode.

Hold and Wait: A process is holding at least one resource and waiting for other resources that are being held by other processes.

No Preemption: Resources cannot be forcibly taken away from a process; the process must release them voluntarily.

Circular Wait: There is a cycle in the waiting graph where each process is waiting for a resource held by the next one in the cycle.

If all four conditions hold, a deadlock will occur, freezing the system.

Deadlock Prevention: Stopping Deadlock Before It Happens

Deadlock prevention is a proactive technique that aims to ensure that at least one of the four necessary conditions for deadlock is never met. If any one of the conditions is broken, a deadlock cannot occur. There are several strategies for preventing deadlocks:

1. Breaking Mutual Exclusion:

Ensure that resources can be shared among processes. For instance, read-only access to a database can be allowed to multiple processes. However, this isn’t always feasible, as many resources (like printers) cannot be shared.

2. Breaking Hold and Wait:

Require that a process requests all the resources it needs at once, rather than holding some and waiting for others. This ensures that no process holds onto resources while waiting.

3. Breaking No Preemption:

If a process is holding resources and requests another that cannot be immediately granted, all the resources held by the process are released. The process will then be restarted once it can obtain all the necessary resources.

4. Breaking Circular Wait:

Impose a total ordering on the resources and ensure that processes request resources in increasing order. This prevents the formation of cycles.

Example of Deadlock Prevention in Code

Let’s look at a simple deadlock prevention strategy using resource ordering. In this example, processes acquire resources in a defined sequence to avoid circular wait:

class Resource {

public synchronized void useResource() {

System.out.println(Thread.currentThread().getName() + " is using resource.");

}

}

public class DeadlockPrevention {

public static void main(String[] args) {

final Resource resource1 = new Resource();

final Resource resource2 = new Resource();

Thread t1 = new Thread(() -> {

synchronized (resource1) {

System.out.println("Thread 1: Holding resource 1");

try { Thread.sleep(50); } catch (InterruptedException e) {}

synchronized (resource2) {

System.out.println("Thread 1: Holding resource 1 & 2");

}

}

});

Thread t2 = new Thread(() -> {

synchronized (resource1) { // Request resources in the same order to avoid circular wait

System.out.println("Thread 2: Holding resource 1");

try { Thread.sleep(50); } catch (InterruptedException e) {}

synchronized (resource2) {

System.out.println("Thread 2: Holding resource 1 & 2");

}

}

});

t1.start();

t2.start();

}

}

In the above code, both threads are acquiring resources in the same order, which prevents deadlocks. If the resources were requested in a different order, we could potentially run into a deadlock situation.

Deadlock Avoidance: Carefully Steering Around the Problem

Deadlock avoidance is a more flexible approach where the system dynamically analyzes resource-allocation states to ensure that deadlocks are avoided. It’s like a careful driver steering around potholes. Unlike prevention, where we eliminate one of the necessary conditions for deadlock, avoidance allows for the possibility of a deadlock situation but ensures that the system never reaches a deadlock state.

One of the most popular deadlock avoidance algorithms is Banker’s Algorithm, which evaluates resource allocation requests to ensure they don’t push the system into an unsafe state. An unsafe state can lead to a deadlock, while a safe state guarantees no deadlocks.

Example of Deadlock Avoidance

In this simple example, we’ll simulate a system that checks if granting a resource request will push the system into an unsafe state:

class DeadlockAvoidance {

private final int[] availableResources = {3, 3}; // Example: 2 types of resources

private final int[][] max = {{7, 5}, {3, 2}}; // Max demand for 2 processes

private final int[][] allocation = {{0, 1}, {2, 0}}; // Currently allocated resources

private final int[][] need = {{7, 4}, {1, 2}}; // Remaining resources needed

public boolean isSafeState(int process, int[] request) {

// Simulate resource allocation to check safety

for (int i = 0; i < availableResources.length; i++) {

availableResources[i] -= request[i];

allocation[process][i] += request[i];

need[process][i] -= request[i];

}

// Check if system is still in a safe state

boolean safe = true; // (simplified check)

for (int i = 0; i < availableResources.length; i++) {

if (availableResources[i] < 0) {

safe = false;

break;

}

}

// Rollback if system is unsafe

if (!safe) {

for (int i = 0; i < availableResources.length; i++) {

availableResources[i] += request[i];

allocation[process][i] -= request[i];

need[process][i] += request[i];

}

}

return safe;

}

public static void main(String[] args) {

DeadlockAvoidance deadlockAvoidance = new DeadlockAvoidance();

int[] request = {1, 0}; // Process 0 requesting resources

if (deadlockAvoidance.isSafeState(0, request)) {

System.out.println("Safe to allocate resources.");

} else {

System.out.println("Not safe to allocate resources.");

}

}

}

In the above example, the system checks if it’s safe to allocate resources to the requesting process. If allocating the requested resources pushes the system into an unsafe state, the request is denied, and the resources are rolled back.

Real-Life Analogy

To better understand the difference between deadlock prevention and deadlock avoidance, let's consider a simple real-life analogy:

Deadlock Prevention: Imagine you’re driving through a one-lane tunnel. A rule is enforced that only one car can enter the tunnel at a time, ensuring no cars will meet head-on inside. This is akin to deadlock prevention, where strict rules are imposed to prevent conflicts before they arise.

Deadlock Avoidance: Now, imagine a scenario where cars can enter the tunnel freely, but there's a traffic officer at the entrance. The officer assesses the number of cars already inside and ensures that if another car enters, it won't cause a traffic jam. This resembles deadlock avoidance, where the system carefully analyzes whether it’s safe to proceed.

Conclusion

Deadlock prevention and deadlock avoidance are two different strategies used in concurrent programming to manage resources and avoid deadlocks. Prevention focuses on establishing rules that block deadlocks from happening, while avoidance allows the system to operate more freely, ensuring that resources are allocated only when safe.

By understanding these concepts and using the appropriate strategy for your system, you can significantly improve the reliability and performance of your applications.

Subscribe to my newsletter

Read articles from Logeshwaran N directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Logeshwaran N

Logeshwaran N

I’m a dedicated Cloud and Backend Developer with a strong passion for building scalable solutions in the cloud. With expertise in AWS, Docker, Terraform, and backend technologies, I focus on designing, deploying, and maintaining robust cloud infrastructure. I also enjoy developing backend systems, optimizing APIs, and ensuring high performance in distributed environments. I’m continuously expanding my knowledge in backend development and cloud security. Follow me for in-depth articles, hands-on tutorials, and tips on cloud architecture, backend development, and DevOps best practices.