Leveraging Caching, CDN, and Rate Limiting to Enhance Kubernetes Performance

Subhanshu Mohan Gupta


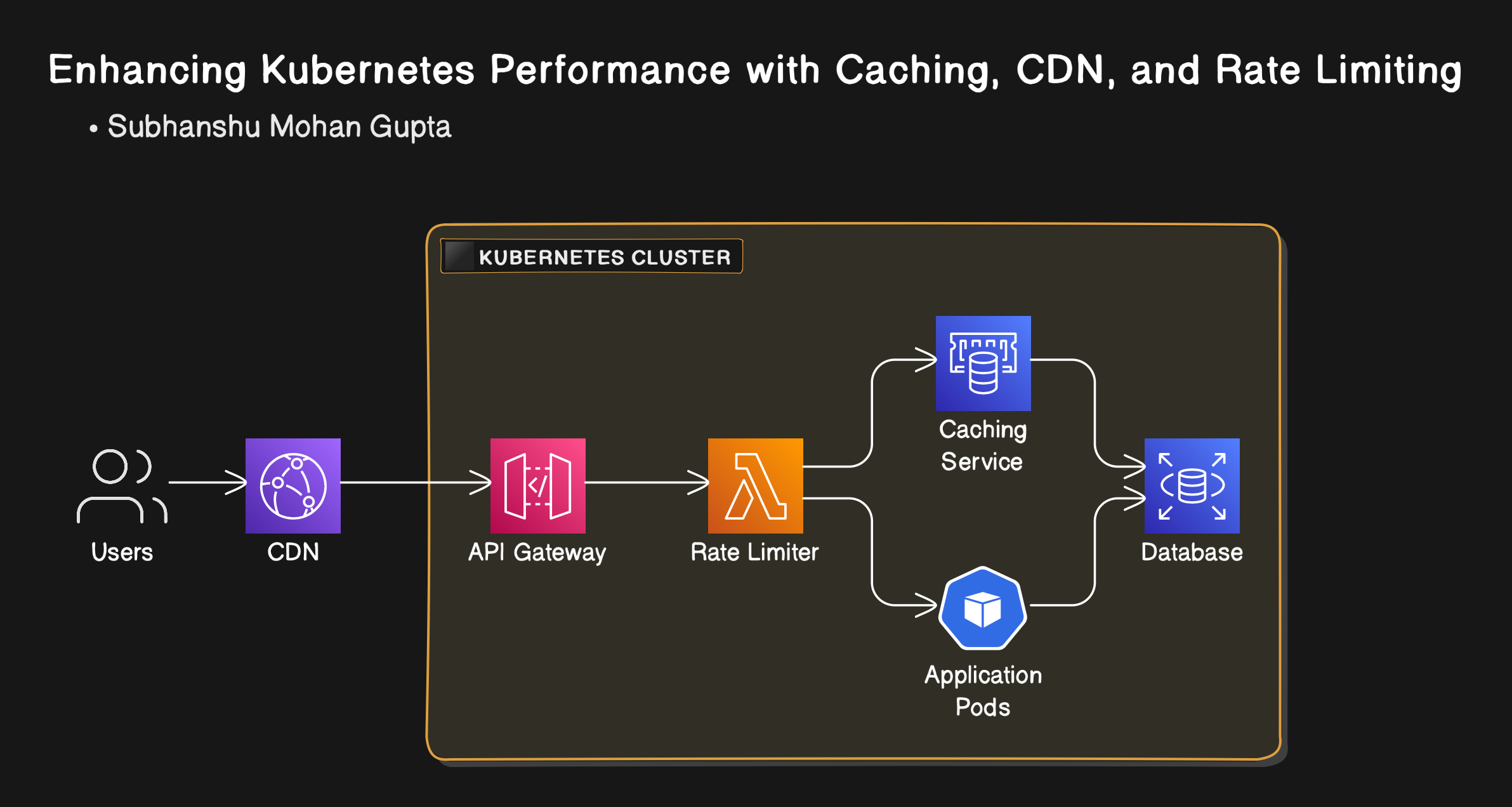

Welcome to Part VI of my Kubernetes series! In this post, we’re diving deep into three powerful techniques, caching, Content Delivery Networks (CDNs), and rate limiting, that can significantly boost the performance and resilience of your Kubernetes-based applications.

Introduction

In today's world of cloud-native applications, speed, scalability, and reliability are paramount. Kubernetes, while powerful, needs additional tools to reach optimal performance. Integrating caching mechanisms, content delivery networks (CDNs), and rate limiting can supercharge your Kubernetes environment, reducing latency, improving content delivery, and ensuring fairness in resource allocation.

Why Caching, CDNs, and Rate Limiting Matter in Kubernetes

Kubernetes is designed to scale applications dynamically, but scaling alone does not guarantee good performance. Let’s break down why these three components (caching, CDNs, and rate limiting) are critical:

Caching: By caching frequently accessed data, you minimize database queries and reduce latency for end-users, ensuring faster response times.

CDNs: CDNs distribute static assets like images, CSS, and JavaScript files across global edge locations, ensuring faster content delivery to users, regardless of their geographic location.

Rate Limiting: To prevent abuse and overload, rate limiting caps how many requests a client can send to a service in a given time window, helping to protect your infrastructure from abusive clients, unexpected traffic spikes, and some denial-of-service attempts.

Problem Statement: Slow Responses and Scalability Issues in Kubernetes

As Kubernetes scales applications, the increasing number of requests can lead to slower response times and degraded performance. Without caching, every request may hit the backend, straining resources. Additionally, delivering static content without a CDN results in higher latency, and without rate limiting, services may get overwhelmed by a sudden traffic surge, leading to downtime.

Real-World Example: E-Commerce Platform

Imagine an e-commerce platform running on Kubernetes. It needs to serve high volumes of traffic, deliver product images quickly, and protect its APIs from being overwhelmed by excessive requests. With thousands of users searching for products, viewing images, and making purchases, this setup would need efficient caching for fast responses, a CDN to serve static assets, and rate limiting to safeguard the backend.

Implementation

Step 1: Set Up a Kubernetes Cluster

If you don't already have a Kubernetes cluster, you can set one up using Minikube, GKE, or any cloud provider's Kubernetes service. Here's an example of setting it up with Minikube on Linux (amd64):

    # Install Minikube (if not installed)
    curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64
    sudo install minikube-linux-amd64 /usr/local/bin/minikube

    # Start Minikube
    minikube start --memory=4096 --cpus=2

Step 2: Deploy Redis as a Cache

First, we’ll deploy Redis into the Kubernetes cluster to handle caching.

Install Redis with Helm: Use Helm to install a Redis instance. If you don’t have Helm, install it first by following the official Helm installation instructions.

    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm install redis bitnami/redis

Create a Redis Kubernetes Service and Deployment:

Alternatively, here is a YAML file for deploying Redis without Helm:

    apiVersion: v1
    kind: Service
    metadata:
      name: redis
      labels:
        app: redis
    spec:
      ports:
        - port: 6379
      selector:
        app: redis
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: redis
      template:
        metadata:
          labels:
            app: redis
        spec:
          containers:
            - name: redis
              image: redis:6.0
              ports:
                - containerPort: 6379

Connecting Your Application to Redis:

In your application code (e.g., Node.js), connect to the Redis instance:

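    const redis = require('redis');

    // Assumes node-redis v4 or later (promise-based API).
    // 'redis' is the Service name from the manifest above; the Bitnami chart
    // names its Service differently and enables password auth by default.
    const client = redis.createClient({ url: 'redis://redis:6379' });
    client.on('error', (err) => console.log('Redis Client Error', err));
    client.connect().catch((err) => console.log('Redis connect failed', err));

    // `app` is your Express app and `dbQuery` your own data-access function;
    // the '/data' route is only an example.
    app.get('/data', async (req, res) => {
      try {
        const value = await client.get('key');
        if (value !== null) {
          return res.send(value); // Return cached data
        }
        // Fetch from database, cache result, and then return it
        const result = await dbQuery(); // must be a string (e.g. JSON.stringify)
        await client.set('key', result, { EX: 3600 }); // Cache for 1 hour
        return res.send(result);
      } catch (err) {
        return res.status(500).send('Internal error');
      }
    });

Redis Monitoring: Use redis-cli to monitor Redis activity. You can execute it from inside the Redis pod:

    kubectl exec -it redis-<pod-name> -- redis-cli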
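The cache-aside flow used above (check the cache, fall back to the database on a miss, then store the result with an expiry) can be tried out without a running Redis. The following is a minimal sketch under that assumption: `TtlCache` is a hypothetical in-memory stand-in for Redis `GET` and `SET` with `EX`, and `cacheAside` and the `loader` argument are illustrative names, not part of any library.

```javascript
// Minimal in-memory stand-in for Redis GET / SET with EX (illustrative only).
class TtlCache {
  constructor(now = () => Date.now()) {
    this.now = now;            // injectable clock, handy for tests
    this.entries = new Map();  // key -> { value, expiresAt }
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // expired: behave like a miss
      return null;
    }
    return entry.value;
  }

  set(key, value, ttlSeconds) {
    this.entries.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
  }
}

// Cache-aside: try the cache, fall back to the loader on a miss, then store.
async function cacheAside(cache, key, ttlSeconds, loader) {
  const cached = cache.get(key);
  if (cached !== null) return { value: cached, hit: true };
  const value = await loader(key);
  cache.set(key, value, ttlSeconds);
  return { value, hit: false };
}
```

In a real service the `loader` would be your database query and the cache would be the Redis client. Returning the `hit` flag alongside the value makes it easy to log or export hit rates later.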

Step 3: Set Up a CDN (Cloudflare)

Configure Cloudflare Account

Sign up at Cloudflare.

Point your domain's DNS to Cloudflare’s nameservers by changing your DNS configuration (done on your DNS provider’s dashboard).

Integrate Cloudflare with Kubernetes:

- Ensure your application is exposed via Kubernetes Ingress, as Cloudflare will point to this entry.

Configure SSL with Cloudflare and Kubernetes: Use Kubernetes Ingress with Cloudflare’s SSL (you can use cert-manager for SSL certificates).

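Here's an example of an Ingress resource that terminates TLS with a certificate issued by cert-manager. The Cloudflare side of SSL (the encryption mode between Cloudflare and your origin) is configured in the Cloudflare dashboard rather than through an Ingress annotation:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-app-ingress
      annotations:
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
        cert-manager.io/cluster-issuer: "letsencrypt-prod"
    spec:
      ingressClassName: nginx # Assumes the NGINX Ingress Controller
      tls:
        - hosts:
            - my-app.example.com
          secretName: my-app-tls # Example name; cert-manager stores the certificate here
      rules:
        - host: my-app.example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: my-service
                    port:
                      number: 80

Set Cache Control Headers: Ensure your Kubernetes application sends correct cache-control headers for static assets. For example, in a Node.js app, you can set: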

    app.use(express.static('public', {
      maxAge: '1y', // Cache for 1 year
      etag: false
    }));

Cloudflare Page Rules for Caching:

In the Cloudflare dashboard, set up caching rules to optimize performance for static content.

Go to the Caching tab and configure rules for assets like images, CSS, and JavaScript.
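To make the cache-control guidance in this step more concrete, here is a small sketch of how an application might pick a `Cache-Control` value per request path. The helper name `cacheControlFor`, the extension list, and the one-hour default are all assumptions made for illustration; tune them to your own assets.

```javascript
// Hypothetical helper: choose a Cache-Control value from the request path.
const ONE_YEAR_SECONDS = 365 * 24 * 60 * 60; // 31536000

const STATIC_EXTENSIONS = new Set(['.css', '.js', '.png', '.jpg', '.jpeg', '.svg', '.woff2']);

function cacheControlFor(path) {
  // Look only at the last path segment, ignoring any query string.
  const file = path.split('?')[0].split('/').pop();
  const dot = file.lastIndexOf('.');
  const ext = dot === -1 ? '' : file.slice(dot).toLowerCase();

  if (STATIC_EXTENSIONS.has(ext)) {
    // Safe only when file names change with content (e.g. app.3f2a1c.js).
    return `public, max-age=${ONE_YEAR_SECONDS}, immutable`;
  }
  if (ext === '.html' || ext === '') {
    // Pages and API-style routes: caches must revalidate before reuse.
    return 'no-cache';
  }
  return 'public, max-age=3600'; // assumed default of one hour for everything else
}
```

A year-long lifetime is only safe for assets whose file names change whenever their content changes. Otherwise users and the CDN may keep serving a stale copy until it expires or is purged.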

Step 4: Implement Rate Limiting Using NGINX Ingress

Rate limiting prevents abuse by limiting the number of requests a user or service can make in a set time period.
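To build some intuition for what "a number of requests in a set time period" means in practice, here is a minimal token-bucket sketch. It is an illustration rather than how NGINX is implemented (NGINX describes its own request limiting as a leaky bucket, a close relative). The class names and the injectable clock are hypothetical, and the values 10 and 20 used in the usage note match the limits configured for the Ingress in this step.

```javascript
// Token bucket: tokens refill at `ratePerSecond`, up to `capacity` (the burst size).
class TokenBucket {
  constructor(ratePerSecond, capacity, now = () => Date.now()) {
    this.ratePerSecond = ratePerSecond;
    this.capacity = capacity;
    this.now = now;
    this.tokens = capacity;     // start full so an initial burst is allowed
    this.lastRefill = now();
  }

  // Returns true if the request may proceed, false if it should be rejected.
  tryAcquire() {
    const current = this.now();
    const elapsedSeconds = (current - this.lastRefill) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.ratePerSecond);
    this.lastRefill = current;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// One bucket per client key (for example, the client IP address).
class RateLimiter {
  constructor(ratePerSecond, capacity, now = () => Date.now()) {
    this.buckets = new Map();
    this.make = () => new TokenBucket(ratePerSecond, capacity, now);
  }

  allow(clientKey) {
    if (!this.buckets.has(clientKey)) this.buckets.set(clientKey, this.make());
    return this.buckets.get(clientKey).tryAcquire();
  }
}
```

With `new RateLimiter(10, 20)`, a client can send 20 requests at once, after which requests are admitted at roughly 10 per second as tokens refill. Other clients are unaffected because each key has its own bucket.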

Install the NGINX Ingress Controller: Use Helm to install the NGINX Ingress Controller:

    helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
    helm install nginx-ingress ingress-nginx/ingress-nginx

Configure Rate Limiting in Ingress:

Add annotations to your Ingress resource to enable rate limiting:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: api-ingress
      annotations:
        nginx.ingress.kubernetes.io/limit-rps: "10" # Requests per second, per client IP
        nginx.ingress.kubernetes.io/limit-burst-multiplier: "2" # Burst limit = limit-rps x multiplier (20)
        nginx.ingress.kubernetes.io/limit-whitelist: "127.0.0.1/32" # Exempt internal traffic (CIDR)
    spec:
      rules:
        - host: api.example.com
          http:
            paths:
              - path: /api
                pathType: Prefix
                backend:
                  service:
                    name: api-service
                    port:
                      number: 80

Testing Rate Limiting:

- Use tools like wrk, ab, or curl to simulate multiple requests and validate rate limiting:

      wrk -t12 -c400 -d30s http://api.example.com/api/endpoint

- Check the logs from the NGINX Ingress controller to ensure requests beyond the rate limits are throttled.
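Besides reading the controller logs, you can tally the HTTP status codes your load test received. The sketch below assumes throttled requests come back as HTTP 503, which is the documented ingress-nginx default; pass a different code if your controller is configured otherwise. `summarizeStatuses` is a hypothetical helper name.

```javascript
// Summarize the status codes collected from a load test run.
function summarizeStatuses(statusCodes, throttledStatus = 503) {
  const counts = {};
  for (const code of statusCodes) {
    counts[code] = (counts[code] || 0) + 1;
  }
  const total = statusCodes.length;
  const throttled = counts[throttledStatus] || 0;
  return {
    total,
    throttled,
    // Share of requests that were rejected by the rate limiter.
    throttledShare: total === 0 ? 0 : throttled / total,
    counts,
  };
}
```

You could feed this with the `status` of each response from a simple loop of `fetch` calls. A run well above the configured limit should show a clear share of throttled responses, while a run below it should show none.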

Step 5: Monitoring and Testing

Monitoring:

Use Prometheus and Grafana to monitor the Redis cache, Kubernetes cluster, and NGINX rate-limiting behavior.

You can install Prometheus and Grafana using Helm:

    helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
    helm repo add grafana https://grafana.github.io/helm-charts
    helm repo update
    helm install prometheus prometheus-community/prometheus
    helm install grafana grafana/grafana

Testing CDN Performance:

- Use tools like Pingdom or GTmetrix to analyze CDN impact on page load times and confirm that Cloudflare is caching static content.
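A quick way to confirm that Cloudflare is caching a given asset is to look at the `CF-Cache-Status` response header. The sketch below groups its values into a few buckets; the function name and the bucket labels are made up for illustration, and the grouping is a simplification of Cloudflare's documented statuses.

```javascript
// Classify a response by its CF-Cache-Status header.
const SERVED_FROM_CACHE = new Set(['HIT', 'STALE', 'REVALIDATED', 'UPDATING']);

function classifyCacheStatus(headers) {
  // Header names are case-insensitive, so normalize before looking up.
  const normalized = {};
  for (const [name, value] of Object.entries(headers)) {
    normalized[name.toLowerCase()] = value;
  }
  const status = (normalized['cf-cache-status'] || '').toUpperCase();

  if (status === '') return 'not-proxied-or-unknown';
  if (SERVED_FROM_CACHE.has(status)) return 'served-from-cache';
  if (status === 'DYNAMIC' || status === 'BYPASS') return 'not-cacheable';
  return 'fetched-from-origin'; // e.g. MISS or EXPIRED
}
```

Requesting the same static asset twice and seeing the result move from `fetched-from-origin` to `served-from-cache` is a reasonable sign that the caching rules and cache-control headers are working together.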

Testing Redis:

- Test cache hits and misses by monitoring Redis with redis-cli and ensure your application is pulling data from the cache rather than querying the database on each request.
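Redis reports `keyspace_hits` and `keyspace_misses` in the output of `INFO stats`, which you can run through redis-cli. As a rough sketch, the helper below turns that text into a hit ratio; `parseRedisInfo` and `hitRatio` are hypothetical names, and the input is assumed to be the raw text captured from the command.

```javascript
// Parse `redis-cli INFO stats` output into a plain object of field -> value.
function parseRedisInfo(infoText) {
  const fields = {};
  for (const rawLine of infoText.split('\n')) {
    const line = rawLine.trim(); // also strips the trailing \r that Redis emits
    if (line === '' || line.startsWith('#')) continue; // skip blanks and section headers
    const separator = line.indexOf(':');
    if (separator === -1) continue;
    fields[line.slice(0, separator)] = line.slice(separator + 1);
  }
  return fields;
}

// Compute hits / (hits + misses) from the INFO text.
function hitRatio(infoText) {
  const fields = parseRedisInfo(infoText);
  const hits = Number(fields.keyspace_hits || 0);
  const misses = Number(fields.keyspace_misses || 0);
  const lookups = hits + misses;
  return lookups === 0 ? null : hits / lookups; // null when nothing has been looked up yet
}
```

A ratio that stays low under steady traffic usually means keys expire too quickly or the application is not reading from the cache on the paths you expect.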

Testing Rate Limiting:

- Use wrk or similar tools to test that rate limiting is correctly preventing excess requests while allowing legitimate traffic.

Architecture Diagram

Conclusion

By integrating caching, CDNs, and rate limiting into your Kubernetes environment, you can dramatically enhance application performance, ensure content is delivered efficiently, and safeguard your services from overload. The combination of these tools allows your infrastructure to scale gracefully while maintaining speed and reliability.

Implementing these best practices will ensure your cloud-native applications run smoothly, providing users with the seamless experience they expect in today’s fast-paced digital world.


What’s next?

Stay tuned for Part VII of this series, where we’ll explore Designing an Effective Fallback Plan for Kubernetes Failures to ensure continuous uptime even in the face of unexpected failures.
