The AI Depth Illusion: Understanding the Limitations of Modern AI

Gerard Sans


In the era of artificial intelligence, it's easy to be swept away by the hype surrounding AI as an all-knowing oracle. However, today's AI, despite its impressive capabilities, still falls short of true understanding. Let's explore why AI is more like a powerful stochastic engine mapping inputs to patterns rather than a system with genuine comprehension.

The Blind Jigsaw Puzzle Analogy

Imagine AI solving a jigsaw puzzle with every piece flipped face down. AI has no lived experience to draw on, only correlations about which pieces fit together. The pieces still carry embedded knowledge and biases on their picture side, perhaps a fragment of a landscape; this is the information absorbed during the AI's training process. For instance:

A puzzle piece of a traffic light contains red, amber, and green colors as a single chunk.

Similarly, AI learns to associate these elements without truly understanding their meaning or function.

The AI doesn't grasp how a traffic light operates; it simply uses the three colors as one unit, reflecting the knowledge embedded in its training data.

The AI system is solving a jigsaw puzzle without seeing the picture. It doesn't understand what the completed puzzle represents; it only knows how the pieces fit together.

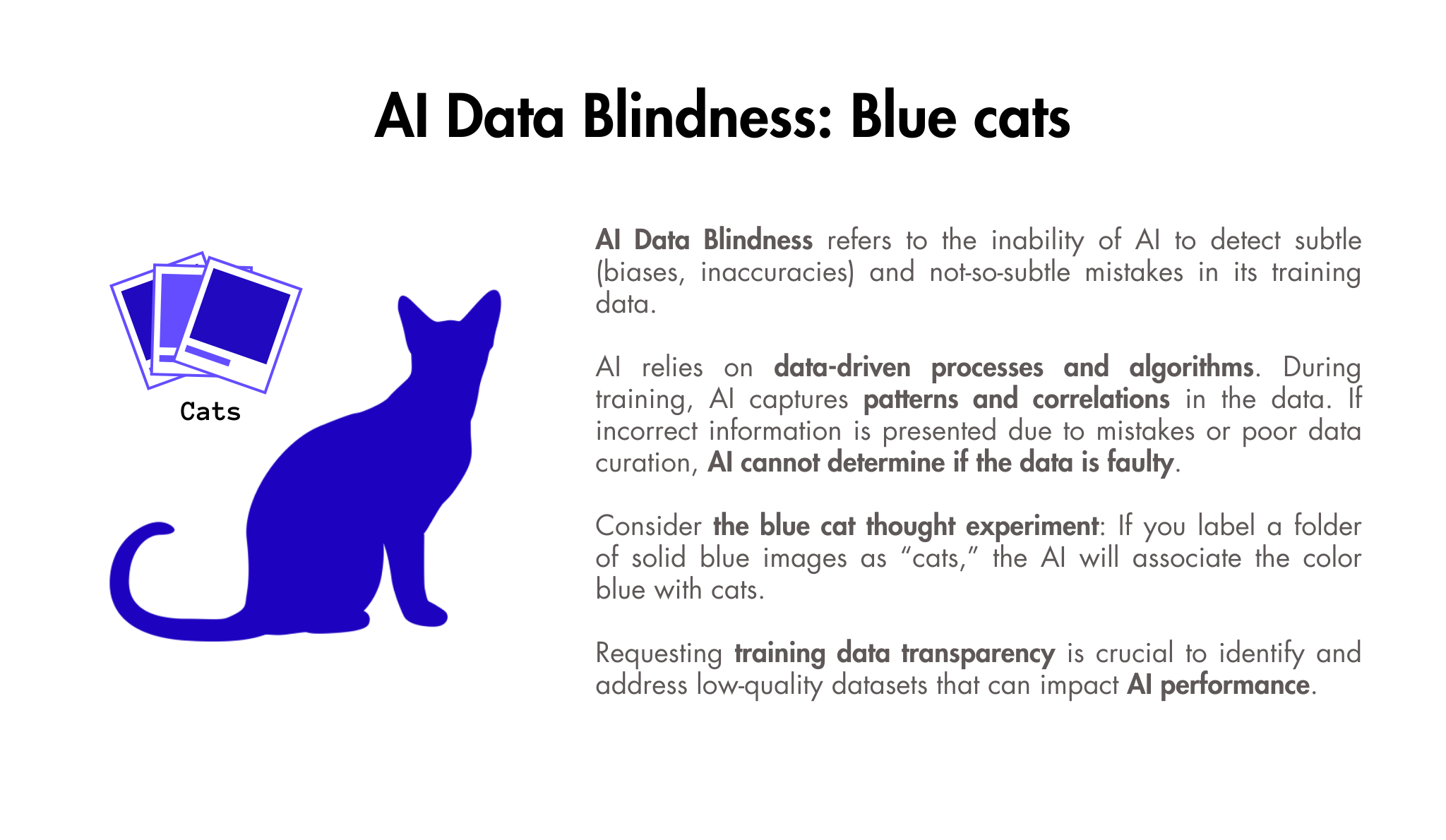

Further evidence that AI lacks true understanding: if the picture in the puzzle is clearly defective, or the training data contains errors, the AI carries on as if everything were fine, unaware of any problem. That's why data curation matters so much in AI.
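To make the idea of "pieces that fit together" more concrete, here is a toy bigram model in Python. It is a deliberately simplified sketch, not how modern large language models are built: it only counts which word tends to follow which. The tiny corpus, including its repeated error ("blue" lights), and the function names are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows another (the 'pieces that fit')."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def continue_text(counts, start, length=5):
    """Greedily append the most frequent follower; there is no notion of meaning."""
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training data: the last two sentences repeat the same error.
corpus = [
    "the light shows red then green",
    "stop when the light is red",
    "go when the light is blue",
    "go when the light is blue",
]

model = train_bigrams(corpus)
print(continue_text(model, "go"))    # go when the light is blue
print(continue_text(model, "stop"))  # stop when the light is blue
```

The model faithfully reproduces the defect because "blue" follows "is" more often than "red" in its data. It even produces "stop when the light is blue", since it has no concept of what a traffic light is for, only of which words tend to appear next to each other.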

Surface-Level Correlations vs. True Reasoning

The depth we perceive in AI outputs is often superficial. While AI can assemble patterns that seem meaningful, it's fundamentally approximating based on surface-level correlations, not engaging in true reasoning.

Example: AI-Generated Images

A common example of this limitation is seen in AI-generated images, particularly regarding human anatomy. AI often produces images with discrepancies, such as:

Generating hands with the wrong number of fingers

Creating anatomically incorrect or inconsistent human forms

This occurs because the AI generates images based on learned patterns rather than understanding the biological realities of human anatomy.

The Dessert Dilemma: Pattern Matching Without Understanding

If an AI recommends a dessert, it might suggest one with nuts, not because it understands the risks of allergies, but because desserts and nuts often appear together in its training data. Unless explicitly instructed to consider allergies, the AI may overlook this critical detail. Fitting the pieces together does not imply real expertise.
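The dessert dilemma can be illustrated with a deliberately naive recommender. This is a hypothetical sketch, not how any real AI product works: the recipe data, the `NUTS` set, and the scoring rule (summing how often each ingredient appears across desserts) are all invented for illustration. The point is that the scoring only reflects patterns in the data; allergens are excluded only when the caller explicitly asks.

```python
from collections import Counter

# Hypothetical "training data": desserts and their ingredients.
RECIPES = [
    {"name": "walnut brownies", "ingredients": {"chocolate", "walnuts", "sugar", "butter"}},
    {"name": "pecan pie", "ingredients": {"pecans", "sugar", "butter"}},
    {"name": "baklava", "ingredients": {"walnuts", "honey", "pastry"}},
    {"name": "fruit salad", "ingredients": {"apple", "grape"}},
]

NUTS = {"walnuts", "pecans", "almonds", "hazelnuts"}


def popularity(recipes):
    """Score each ingredient by how often it appears across all desserts."""
    return Counter(i for r in recipes for i in r["ingredients"])


def recommend(recipes, avoid=frozenset()):
    """Pick the dessert whose ingredients are most common in the data.

    The scoring knows nothing about allergies; ingredients are excluded
    only if the caller passes them in `avoid`.
    """
    scores = popularity(recipes)
    candidates = [r for r in recipes if not (r["ingredients"] & avoid)]
    best = max(
        candidates,
        key=lambda r: sum(scores[i] for i in r["ingredients"]),
        default=None,
    )
    return best["name"] if best else None


print(recommend(RECIPES))              # walnut brownies
print(recommend(RECIPES, avoid=NUTS))  # fruit salad
```

Without the explicit `avoid` constraint, the pattern-based score happily picks a nut dessert, because nuts are frequent in the data, not because anyone checked whether they are safe.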

Lack of Common Sense and Cultural Awareness

AI also lacks access to common sense and cultural norms, relying instead on written data that may not capture unwritten rules. This can lead to problematic suggestions, such as:

Recommending alcoholic drinks without recognizing age restrictions

Failing to account for cultural practices like removing shoes in certain homes

Limitations in Specialized Fields

In specialized domains like medicine or law, AI may have access to vast text data but lacks the practical, tacit knowledge that experts develop through experience. This gap can lead to:

Inadequate recommendations in complex cases

Potentially harmful advice in high-stakes situations

Real-World Examples of AI Limitations

Chatbot Liability: A Chevrolet dealership's chatbot was tricked into agreeing to sell a $76,000 Chevy Tahoe for $1, exposing AI's lack of common sense.

Air Canada Chatbot Misunderstanding: The airline was ordered to pay damages after its chatbot misled a passenger about its bereavement fare policy.

ChatGPT's Fake Case Citations: Two New York lawyers were fined $5,000 for submitting a legal brief with fictitious case citations generated by ChatGPT.

Google Gemini's Historical Inaccuracies: Gemini's image generation produced inaccurate depictions of historical events and figures due to an overly rigid diversity instruction in its system prompt.

Conclusion: Navigating AI's Limitations

Recognizing these limitations is crucial for navigating the safety and ethical risks associated with AI technology. While AI may seem adept at assembling pieces, it ultimately lacks the full picture that only deeper understanding provides.

As we continue to develop and deploy AI systems, it's essential to:

Be aware of AI's inherent shortcomings

Implement proper safeguards and verification processes

Use AI as a tool to augment human intelligence, not replace it

Continue research into developing AI with more robust reasoning capabilities
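As one illustration of a verification process, the sketch below checks AI-generated case citations, like those in the ChatGPT legal brief example, against a trusted list before a draft is approved. Everything here is hypothetical: `KNOWN_CASES` stands in for a real authoritative legal database, the function names are invented, and exact string matching is a simplification of how citations would actually be validated.

```python
# Hypothetical stand-in for a trusted case-law source; a real system would
# query an authoritative database rather than a hard-coded set.
KNOWN_CASES = {
    "Marbury v. Madison",
    "Brown v. Board of Education",
}


def unverified_citations(citations, known_cases):
    """Return the citations that cannot be matched to the trusted source."""
    normalized = {c.strip().lower() for c in known_cases}
    return [c for c in citations if c.strip().lower() not in normalized]


def review_draft(citations, known_cases):
    """Hold a draft for human review if any citation is unverified."""
    missing = unverified_citations(citations, known_cases)
    return {"approved": not missing, "needs_human_review": missing}


# "Smith v. Imaginary Airlines" is a made-up case name used for illustration.
draft = ["Marbury v. Madison", "Smith v. Imaginary Airlines"]
print(review_draft(draft, KNOWN_CASES))
```

The design choice is that the AI output is never trusted by default: anything that cannot be matched to an independent source is routed to a human rather than silently accepted.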

By understanding the "AI Depth Illusion," we can ensure more responsible and effective use of AI technology in our rapidly evolving digital landscape.


Written by

Gerard Sans


I help developers succeed in Artificial Intelligence and Web3; Former AWS Amplify Developer Advocate. I am very excited about the future of the Web and JavaScript. Always happy Computer Science Engineer and humble Google Developer Expert. I love sharing my knowledge by speaking, training and writing about cool technologies. I love running communities and meetups such as Web3 London, GraphQL London, GraphQL San Francisco, mentoring students and giving back to the community.