Machine Learning Model Scoring Across Workspaces in Fabric

Sandeep Pawar

I have written a couple of blog posts about working with ML models in Microsoft Fabric. Creating experiments and logging and scoring models in Fabric is very easy, thanks to the built-in MLflow integration. However, the Fabric Data Science experience has one limitation: there are no model endpoints yet, and you cannot load a model from another workspace because the model URI, unlike in Databricks, does not reference a workspace. If you use MLFlowTransformer as shown in this blog, only the model from the workspace where the notebook is hosted is loaded. There is a workaround, though.

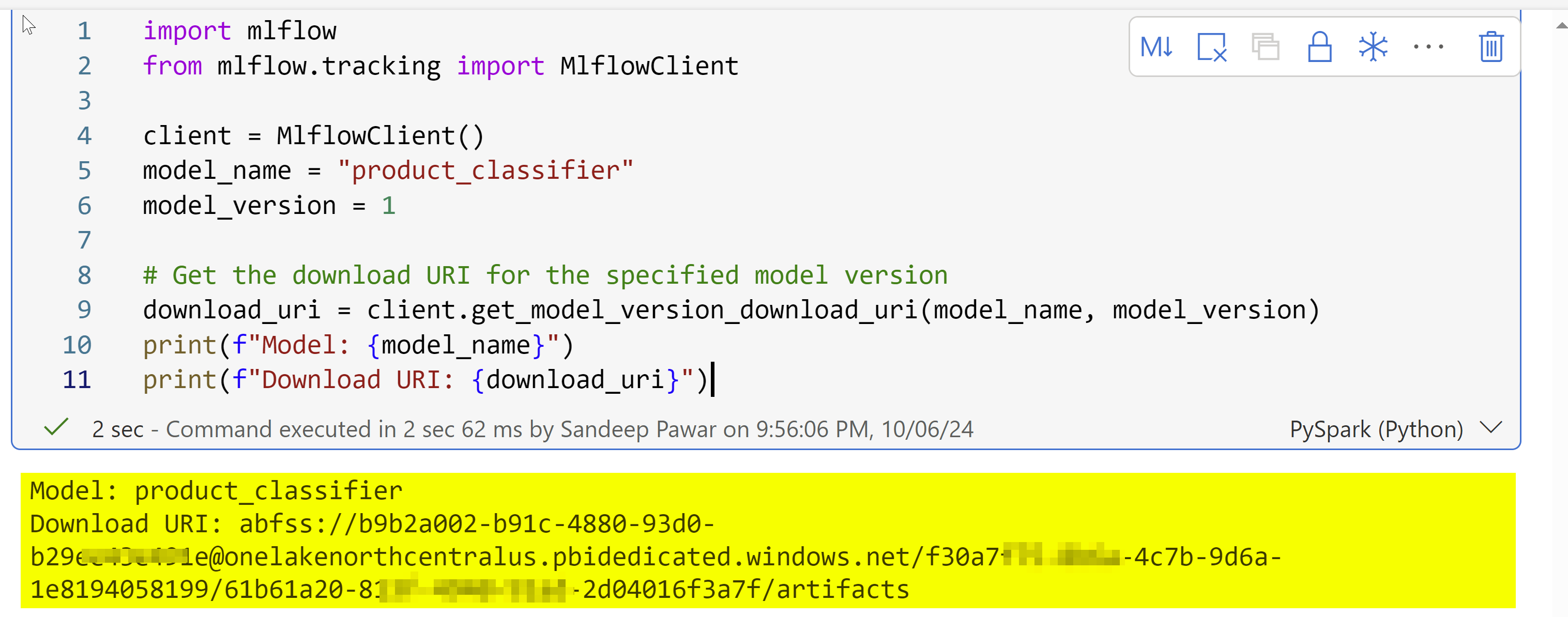

Get Model’s Abfss Location

When you register a model in a Fabric workspace, it is saved to OneLake storage in that workspace and has an abfss path. You can get the abfss path of the registered model and use it to score the model from another workspace. To get the path, run the script below in the workspace where the model is registered.

```python
import mlflow
from mlflow.tracking import MlflowClient

client = MlflowClient()

model_name = "product_classifier"
model_version = 1

# Get the download URI for the specified model version
download_uri = client.get_model_version_download_uri(model_name, model_version)

print(f"Model: {model_name}")
print(f"Download URI: {download_uri}")
# Returns the abfss path of the model
```

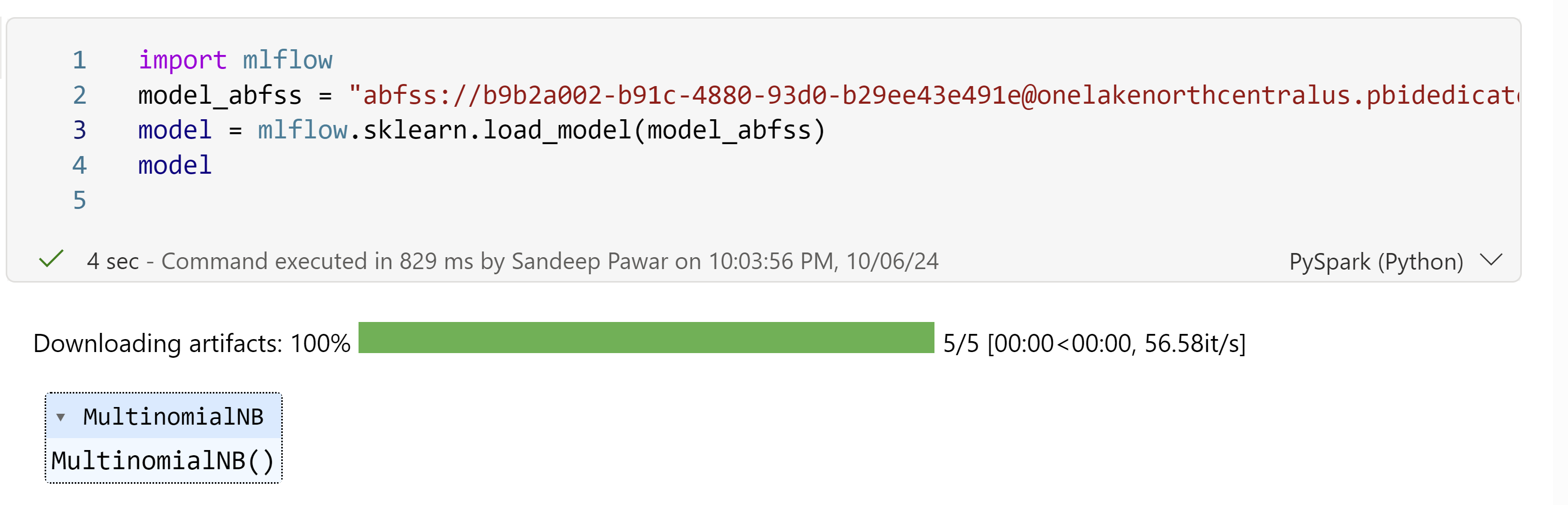

Load the Model

Once you have the abfss path, you can use it to call the model from any workspace:
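A minimal sketch of this step, assuming the model was logged in a standard MLflow format that `mlflow.pyfunc.load_model` can read, and that the Fabric runtime resolves abfss URIs as described above. The helper name `load_model_from_abfss` and its `loader` parameter are hypothetical and only there to make the sketch easy to try out; a flavor-specific loader such as `mlflow.sklearn.load_model` could be passed instead.

```python
from typing import Any, Callable, Optional


def load_model_from_abfss(
    model_uri: str,
    loader: Optional[Callable[[str], Any]] = None,
) -> Any:
    """Load a registered model from the abfss path of one of its versions.

    `loader` is a hypothetical hook for swapping in a flavor-specific loader;
    by default the generic mlflow.pyfunc loader is used.
    """
    if not model_uri.startswith("abfss://"):
        raise ValueError(f"Expected an abfss path, got: {model_uri!r}")
    if loader is None:
        # Imported lazily so the helper can be defined without MLflow installed
        import mlflow.pyfunc
        loader = mlflow.pyfunc.load_model
    return loader(model_uri)


# Usage in a notebook in another workspace (paste the path printed earlier):
# model = load_model_from_abfss("<abfss path of the model version>")
# predictions = model.predict(input_df)  # input_df: a pandas DataFrame of features
```

Because the path is passed in directly, nothing in this sketch depends on the workspace where the notebook is hosted.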

Note that the abfss path is specific to the model version, so if you register a new version, you will need to obtain the associated abfss path. You can automate this process easily. Using the method above, you could save all the models in one workspace and load them in other workspaces.
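One way to automate the version lookup is sketched below. It assumes the MLflow client in Fabric supports `search_model_versions` in the same way as open-source MLflow; the function name `latest_model_uri` is hypothetical. Run it in the workspace where the model is registered, then hand the returned path to the scoring workspace.

```python
def latest_model_uri(client, model_name: str) -> str:
    """Return the download URI (abfss path) of the newest version of a model.

    `client` is expected to be an mlflow.tracking.MlflowClient instance.
    """
    versions = client.search_model_versions(f"name='{model_name}'")
    if not versions:
        raise ValueError(f"No registered versions found for model {model_name!r}")
    # ModelVersion.version is a string, so compare numerically
    latest = max(versions, key=lambda mv: int(mv.version))
    return client.get_model_version_download_uri(model_name, latest.version)


# Usage in the workspace where the model is registered:
# from mlflow.tracking import MlflowClient
# print(latest_model_uri(MlflowClient(), "product_classifier"))
```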

I hope this workaround is short-lived and cross-workspace support and model endpoints become available soon.

Written by

Sandeep Pawar

Principal Program Manager, Microsoft Fabric CAT helping users and organizations build scalable, insightful, secure solutions. Blogs, opinions are my own and do not represent my employer.