Achieve Scalable Servers through Horizontal Scaling Architecture

Barun Tiwary

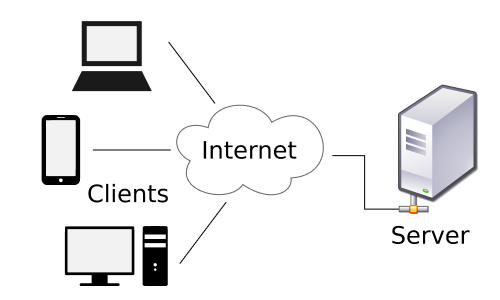

Client-Server Architecture

What is client-server architecture?

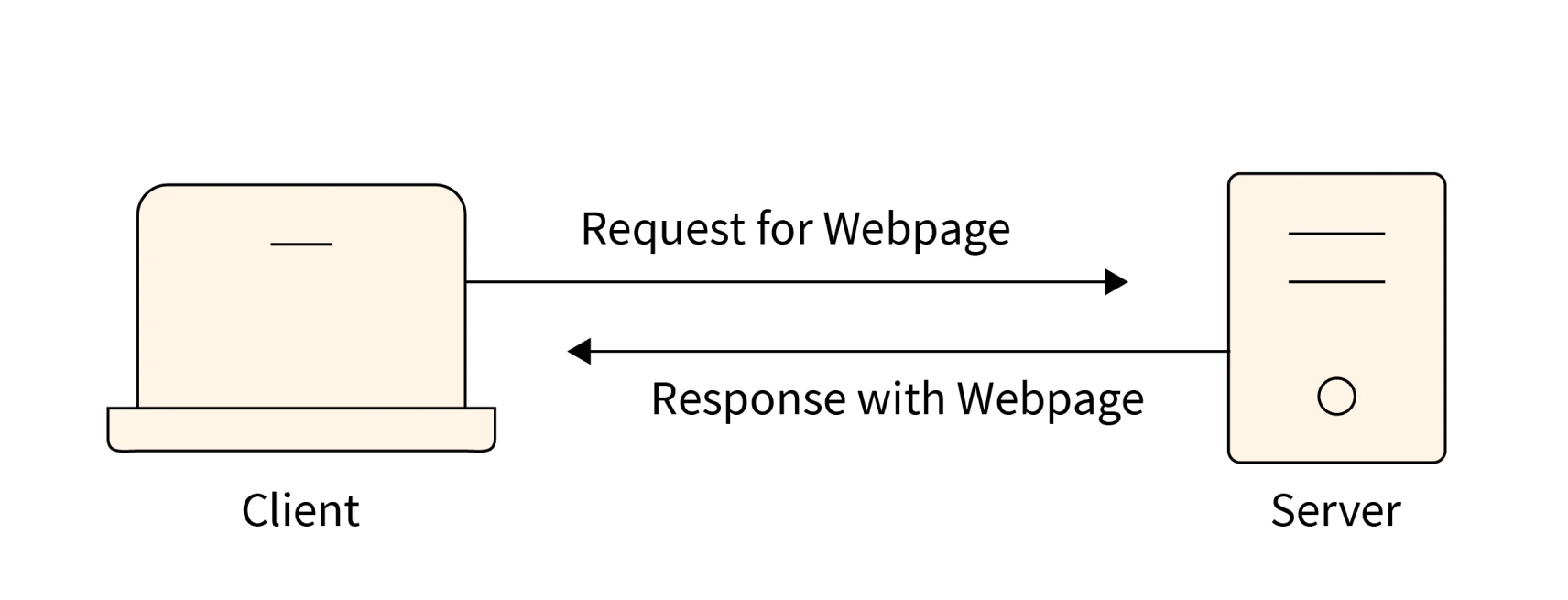

Client-server architecture is the basic way to build a web app that many devices can reach through their browsers. When a user opens the website, the browser (the client) sends a request to the server, and the server responds with the page or information the client needs.

Disadvantages of Client-Server Architecture and the Need for Horizontal Scaling

In its simplest form, client-server architecture places a single server behind every browser: each request goes to that one machine, which processes it and sends back the page or data.

This works well for small-scale applications, but a single server has finite CPU, memory, and network capacity. As the application grows and the number of users expands, the server can be overwhelmed by millions of requests, and response times and reliability suffer. At that point the architecture needs to be reconsidered.

To address these scalability issues, we turn to Horizontal Scaling. Horizontal Scaling involves adding more servers to handle increased load, rather than relying on a single server. This approach distributes the workload across multiple servers, enhancing the application's ability to manage a larger number of requests efficiently. By focusing on Horizontal Scaling, we can ensure that our applications remain responsive and reliable, even as they grow in complexity and user base.
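To make the idea of adding servers concrete, here is a rough sizing sketch. The function name `servers_needed` and all of the traffic figures are hypothetical and chosen only for illustration; real capacity depends on the workload and should be measured.

```python
def servers_needed(peak_rps: int, per_server_rps: int, headroom_percent: int = 0) -> int:
    """Estimate how many identical servers are needed for a given peak load.

    peak_rps         -- expected peak requests per second (assumed figure)
    per_server_rps   -- requests per second one server can sustain (assumed figure)
    headroom_percent -- extra spare capacity to keep, as a whole percentage
    """
    if peak_rps < 0 or per_server_rps <= 0 or headroom_percent < 0:
        raise ValueError("peak_rps and headroom_percent must be >= 0, per_server_rps > 0")
    required = peak_rps * (100 + headroom_percent)
    capacity = per_server_rps * 100
    # Integer ceiling division: round up, because a fraction of a server
    # still means one more whole server.
    return -(-required // capacity)


# Hypothetical numbers: 10,000 requests/s at peak, 1,500 requests/s per server.
baseline = servers_needed(10_000, 1_500)
with_headroom = servers_needed(10_000, 1_500, headroom_percent=20)
print(baseline, with_headroom)  # prints: 7 8
```

With these assumed figures, one server cannot carry the load, but seven identical servers can, and an eighth leaves 20% spare capacity.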

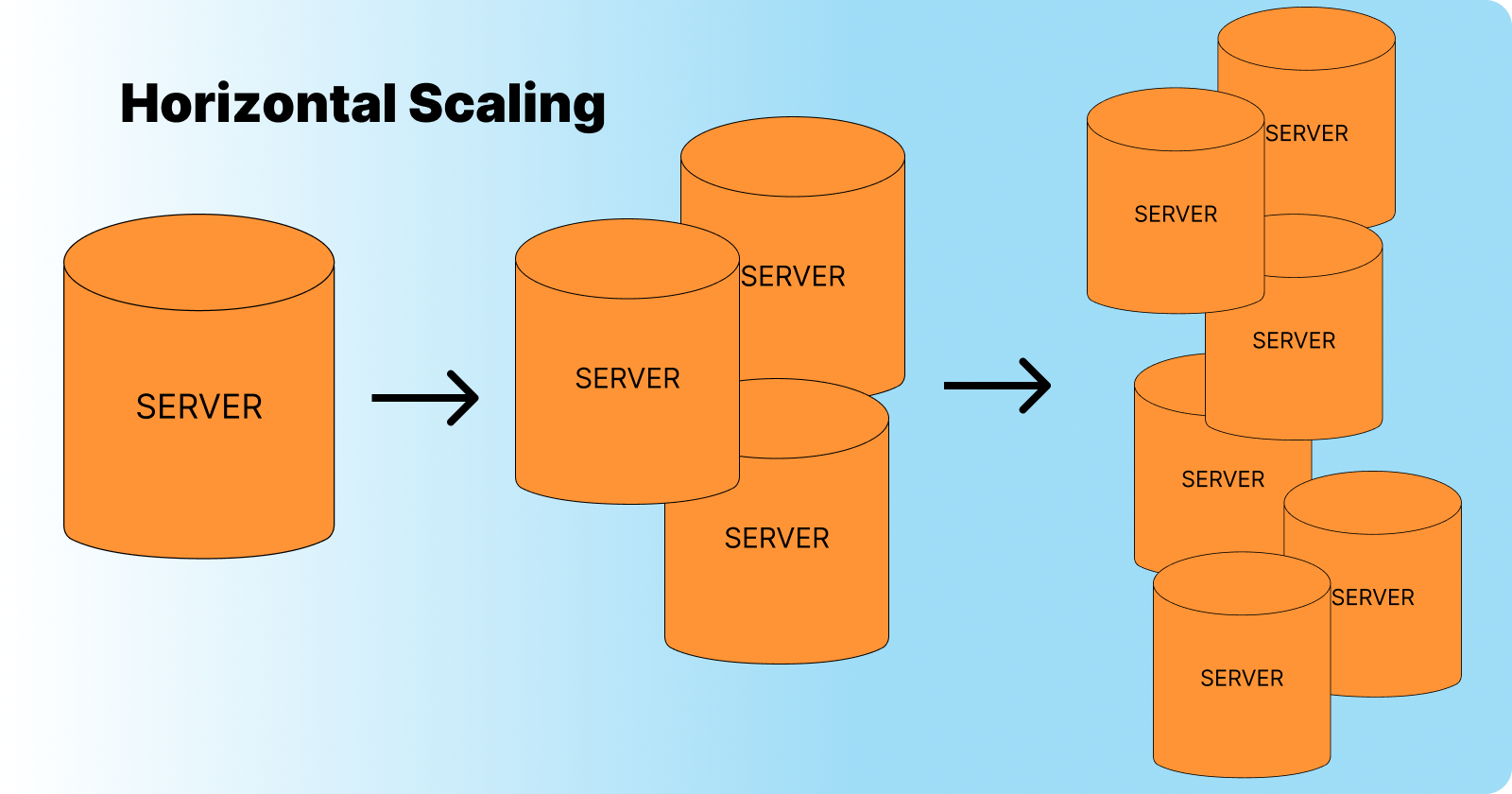

What is Horizontal Scaling?

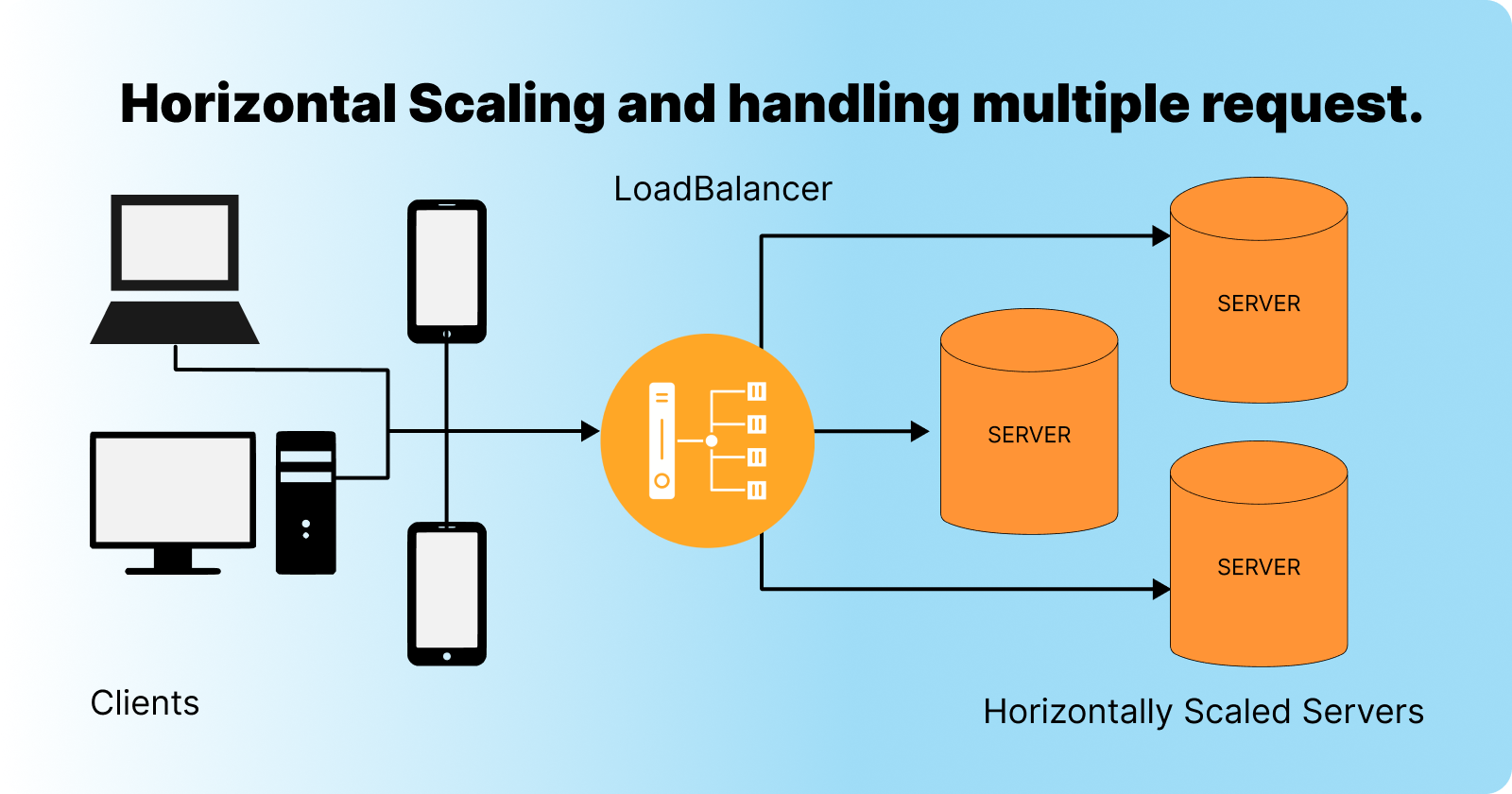

Horizontal Scaling expands server capacity by deploying multiple instances of the original server. A load balancer is placed in front of these instances to spread incoming API requests across them, so the system as a whole can serve far more concurrent requests than a single server could.

Load Balancer: This can be a hardware or software component, depending on the system architecture and business requirements. It employs various algorithms to distribute incoming requests across multiple servers.

Common load-balancing algorithms include round-robin, least connections, and IP hash.
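As an illustration of one such algorithm, the sketch below shows round-robin, which simply hands requests to servers in a fixed rotating order. The class name `RoundRobinBalancer` and the server names are made up for this example; production load balancers implement this internally.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order (illustrative only)."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the server list endlessly: a, b, c, a, b, c, ...
        self._rotation = cycle(servers)

    def pick(self):
        # Each call returns the next server in the rotation.
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
picks = [balancer.pick() for _ in range(5)]
print(picks)
# prints: ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```

Round-robin ignores how busy each server is, which keeps it simple but makes it best suited to servers of similar capacity handling requests of similar cost.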

How This Architecture Works

In this architecture, the process begins when a client makes a request. This request is first directed to the load balancer. The load balancer plays a crucial role in managing incoming traffic by using a specific algorithm to determine the most suitable server to handle each request. This ensures that no single server becomes overwhelmed, thereby maintaining system efficiency and reliability.

Once the load balancer selects the appropriate server, it forwards the request to that server. The server processes the request and generates a response. The load balancer then receives this response and sends it back to the client that made the initial request.
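The request flow described above can be sketched in a few lines. This is a toy, in-process model, not a real network proxy: `Server`, `LoadBalancer`, and `forward` are hypothetical names, the "servers" are plain Python objects, and round-robin is assumed as the selection algorithm.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Server:
    name: str

    def handle(self, request: str) -> str:
        # Stand-in for real request processing on a backend server.
        return f"{self.name} handled {request}"


class LoadBalancer:
    def __init__(self, servers):
        # Round-robin rotation over the server pool (assumed algorithm).
        self._rotation = cycle(list(servers))

    def forward(self, request: str) -> str:
        server = next(self._rotation)      # 1. select a server
        response = server.handle(request)  # 2. forward the request; the server processes it
        return response                    # 3. relay the response to the client


lb = LoadBalancer([Server("server-1"), Server("server-2")])
responses = [lb.forward(f"GET /page/{i}") for i in range(3)]
for line in responses:
    print(line)
# prints:
# server-1 handled GET /page/0
# server-2 handled GET /page/1
# server-1 handled GET /page/2
```

The client only ever talks to `lb.forward`, which mirrors the point that clients see a single entry point while the work is shared among several servers.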

This architecture is designed to optimize resource utilization and improve response times, making it ideal for applications that experience high volumes of traffic. By distributing requests across multiple servers, it enhances the overall performance and scalability of the system.

Key components of this architecture include:

Client Request: Initiates the process by sending a request.

Load Balancer: Distributes requests using algorithms like round-robin, least connections, or IP hash.

Server Pool: A group of servers that handle requests and generate responses.

Response Handling: The load balancer ensures that responses are efficiently routed back to the requesting clients.
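The other two algorithms named in the list, least connections and IP hash, can be sketched as small selection functions. The function names, the connection counts, and the IP address below are assumptions made for illustration; SHA-256 is just one possible choice of hash.

```python
import hashlib


def pick_least_connections(active_connections):
    """Return the server with the fewest open connections.

    active_connections maps server name -> current open connection count.
    """
    if not active_connections:
        raise ValueError("at least one server is required")
    return min(active_connections, key=active_connections.get)


def pick_by_ip_hash(client_ip, servers):
    """Map a client IP to a server so the same IP always gets the same server."""
    if not servers:
        raise ValueError("at least one server is required")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into an integer, then into an index.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical snapshot of the server pool.
connections = {"server-a": 12, "server-b": 3, "server-c": 7}
least_busy = pick_least_connections(connections)
print(least_busy)  # prints: server-b

pool = ["server-a", "server-b", "server-c"]
first = pick_by_ip_hash("203.0.113.10", pool)
second = pick_by_ip_hash("203.0.113.10", pool)
print(first == second)  # prints: True
```

Least connections adapts to uneven load, while IP hash keeps a given client on the same server as long as the pool does not change, which can help when session data lives on that server.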

By leveraging this architecture, applications can effectively manage increased loads and maintain high levels of performance and reliability.
