Getting Started with NequIP: A Comprehensive Guide to Model Training

Chirag Sindhwani

The main reason I'm writing this blog is to save you a week of wrestling with dependencies and config-file issues, so you can install NequIP easily. You can thank me later.

What is NequIP?

I doubt you would have come this far if you didn't know about NequIP. Haha, it may not sound cool, but it's a big deal for scientists working in molecular dynamics and computational chemistry.

NequIP: Neural Equivariant Interatomic Potentials

It is a neural network architecture for predicting how atoms and molecules interact with each other: given the atomic positions in a molecular system, it predicts the potential energy and the forces acting on each atom. It learns these interactions from very accurate quantum-mechanical simulations (called ab-initio calculations), which are used to model molecular behavior.

Why is it a big deal?

NequIP can handle richer information about atoms than older models. Many traditional models work only with simple, rotation-invariant quantities such as the distances between atoms, whereas NequIP uses "equivariant" features: internal representations (vectors and higher-order tensors) that rotate along with the molecule. This means it can recognize not just the distances between atoms but also their geometric relationships in space.

Data efficiency: It can achieve the same or better accuracy as older models with much less training data—sometimes needing up to 1,000 times fewer data points. This is a huge breakthrough because it shows that deep learning doesn't always require massive datasets to work well.

Long simulations: Because it requires less data, NequIP can use very precise, high-level quantum chemical data to simulate molecules over longer time periods with greater accuracy.
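To make the word "equivariant" concrete, here is a minimal sketch in plain Python. It does not use NequIP at all: toy_forces is a hypothetical spring-like stand-in for a learned potential, and the positions are made-up numbers. It only illustrates the two properties mentioned above: distances stay the same when the molecule is rotated (invariance), while force vectors rotate with it (equivariance).

```python
import math

def rotate_z(v, angle):
    """Rotate a 3D vector about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x - s * y, s * x + c * y, z)

def toy_forces(positions, k=1.0):
    """Toy pairwise spring forces: F_i = -k * sum_j (r_i - r_j).

    Hypothetical stand-in for a learned potential, NOT NequIP itself.
    """
    forces = []
    for i, ri in enumerate(positions):
        f = [0.0, 0.0, 0.0]
        for j, rj in enumerate(positions):
            if i != j:
                for axis in range(3):
                    f[axis] -= k * (ri[axis] - rj[axis])
        forces.append(tuple(f))
    return forces

# Three made-up atom positions and an arbitrary rotation angle
positions = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0), (-0.3, 1.2, 0.8)]
angle = 0.7
rotated = [rotate_z(p, angle) for p in positions]

# Invariance: the distance between two atoms does not change under rotation
d_before = math.dist(positions[0], positions[1])
d_after = math.dist(rotated[0], rotated[1])

# Equivariance: rotating the inputs gives the same result as rotating the outputs
forces_then_rotate = [rotate_z(f, angle) for f in toy_forces(positions)]
rotate_then_forces = toy_forces(rotated)

print("distance unchanged:", math.isclose(d_before, d_after))
print("forces rotate with the molecule:", all(
    math.isclose(a, b, abs_tol=1e-12)
    for fa, fb in zip(forces_then_rotate, rotate_then_forces)
    for a, b in zip(fa, fb)
))
```

An equivariant network is built so that the second property holds by construction, instead of having to learn it from rotated copies of the training data.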

If you want to learn more about its origins and the mathematics behind it, you can read the original research paper linked below:

Batzner, S., Musaelian, A., Sun, L. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat Commun 13, 2453 (2022). https://doi.org/10.1038/s41467-022-29939-5

Now let's code!

Requirements:

Local Linux machine (the latest release of your distribution, or one or two versions behind, will work well): If you plan to work on this project for a long time, you'll need Linux; it performs best there! Don't rely on Colab for everything. You'll need to compile many C++ files and other components, which takes time, and if it succeeds, be thankful!

PyTorch GPU setup: I assume you have already set up your local GPU with PyTorch, as it's another lengthy task. If not, you can use the CPU for now.

Wandb account: To make things easier, sign up for Weights & Biases (wandb). Just check it out! P.S.: If you are a student or academic, you can get the premium plan for free.

Patience: Yes!
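For the PyTorch requirement above, a quick way to see whether your setup will use the GPU or fall back to the CPU is sketched below. pick_device is a hypothetical helper name; it relies only on torch.cuda.is_available(), and it reports "cpu" if PyTorch is not installed yet.

```python
def pick_device():
    """Return "cuda" if PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed yet, so there is nothing to run on the GPU
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"

device = pick_device()
print(f"Training would run on: {device}")
```

If this prints "cpu" on a machine with a GPU, the PyTorch build or the driver setup is the first thing to check before going further.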

!pip install nequip

!pip install allegro

!pip install wandb # Makes your life easier

import os

os.environ["WANDB_ANONYMOUS"] = "must" # use if you haven't set up an account

The original Colab notebook pins nequip==0.5.5 and torch==1.11, but these versions are outdated and may not install on a current Linux and Python setup. In my experience a recent version of torch works, and you don't need to pin versions for nequip and allegro; it ran smoothly for me.
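Since the versions are not pinned, it helps to record what pip actually installed, in case you need to reproduce the setup later. A minimal sketch using only the standard library follows; installed_versions is a hypothetical helper name, and allegro is left out of the list because its distribution name depends on how it was installed.

```python
from importlib import metadata

def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

report = installed_versions(["torch", "nequip", "wandb"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```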

# install allegro

!git clone --depth 1 https://github.com/mir-group/allegro.git

!pip install allegro/

# clone lammps

!git clone --depth=1 https://github.com/lammps/lammps

# clone pair_allegro and pair_nequip

!git clone --depth 1 https://github.com/mir-group/pair_allegro.git

# download libtorch

!wget https://download.pytorch.org/libtorch/cu102/libtorch-cxx11-abi-shared-with-deps-1.11.0%2Bcu102.zip && unzip -q libtorch-cxx11-abi-shared-with-deps-1.11.0+cu102.zip

# patch lammps

!cd pair_allegro && bash patch_lammps.sh ../lammps/

# install mkl interface

!pip install mkl-include

Don't miss installing any of the above packages. All are important.

# update cmake

!wget https://github.com/Kitware/CMake/releases/download/v3.23.1/cmake-3.23.1-linux-x86_64.sh

# Make the .sh file executable

!chmod +x cmake-3.23.1-linux-x86_64.sh

!sh ./cmake-3.23.1-linux-x86_64.sh --prefix=/usr/local --exclude-subdir

Remember to make the CMake installer script executable before running it (the command was missing in the original Colab file).

# build lammps

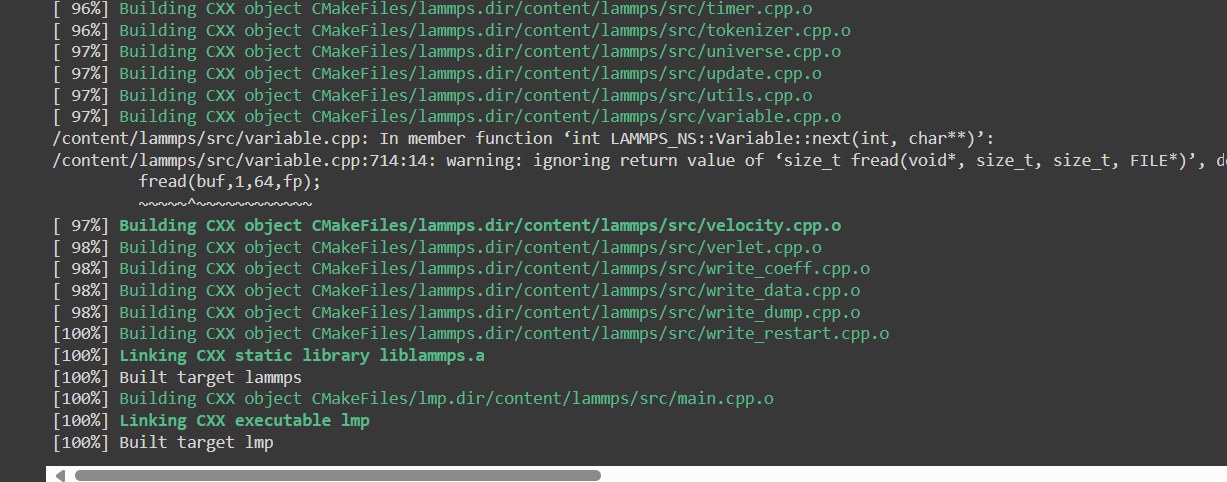

!cd lammps && rm -rf build && mkdir build && cd build && cmake ../cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_PREFIX_PATH=/content/libtorch -DMKL_INCLUDE_DIR=`python -c "import sysconfig;from pathlib import Path;print(Path(sysconfig.get_paths()[\"include\"]).parent)"` && make -j$(nproc)

When compiling the LAMMPS package on a local machine, you will very likely encounter the following error:

CMake Error at CMakeLists.txt:XX (find_package):

By not providing "FindTorch.cmake" in CMAKE_MODULE_PATH this project has

asked CMake to find a package configuration file provided by "Torch", but

CMake did not find one.

Could not find a package configuration file provided by "Torch" with any

of the following names:

TorchConfig.cmake

torch-config.cmake

Add the installation prefix of "Torch" to CMAKE_PREFIX_PATH or set

"Torch_DIR" to a directory containing one of the above files. If "Torch"

is installed in a non-standard prefix, make sure to set

CMAKE_PREFIX_PATH to include this prefix.

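To resolve this, tell CMake where libtorch lives. The build command above points CMAKE_PREFIX_PATH at /content/libtorch, which exists on Colab but usually not on a local machine; replace it with the directory where you unzipped libtorch. Note that this is an option on the cmake command line (run from lammps/build), not a line inside the CMakeLists.txt file located in lammps/cmake/:

cmake ../cmake -DCMAKE_PREFIX_PATH=/path/to/libtorch

If the build now completes, be happy: half the work is done.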
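Before rerunning the build, you can check that the directory you are about to pass really contains the file CMake is complaining about. The sketch below assumes the usual layout of the unzipped libtorch archive (share/cmake/Torch/TorchConfig.cmake under the libtorch folder); torch_cmake_args is a hypothetical helper, and ./libtorch is a placeholder for wherever you unzipped it.

```python
from pathlib import Path

def torch_cmake_args(libtorch_dir):
    """Return the CMake flag for libtorch after checking TorchConfig.cmake exists."""
    root = Path(libtorch_dir).expanduser().resolve()
    # Assumed layout of the unzipped libtorch archive
    config = root / "share" / "cmake" / "Torch" / "TorchConfig.cmake"
    if not config.is_file():
        raise FileNotFoundError(f"TorchConfig.cmake not found under {root}")
    return [f"-DCMAKE_PREFIX_PATH={root}"]

if __name__ == "__main__":
    try:
        # "./libtorch" is a placeholder path
        print("cmake ../cmake", *torch_cmake_args("./libtorch"))
    except FileNotFoundError as err:
        print(err)
```

If the helper raises, the path is wrong and CMake would fail with the same "Could not find a package configuration file" message.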

Now just run through the next lines of code until you encounter training.

# Python imports and pre-definitions

import numpy as np

from matplotlib import pyplot as plt

plt.rcParams['font.size'] = 30

def parse_lammps_rdf(rdffile):
    """Parse the RDF file written by LAMMPS

    copied from Boris' class code: https://github.com/bkoz37/labutil
    """
    with open(rdffile, 'r') as rdfout:
        rdfs = []
        buffer = []
        for line in rdfout:
            values = line.split()
            if line.startswith('#'):
                # header / comment line
                continue
            elif len(values) == 2:
                # frame header: "<timestep> <number of bins>"
                nbins = values[1]
            else:
                # data row: "<bin index> <r> <g(r)> ..."
                buffer.append([float(values[1]), float(values[2])])
                if len(buffer) == int(nbins):
                    # frame complete: store as a 2 x nbins array (r, g(r))
                    frame = np.transpose(np.array(buffer))
                    rdfs.append(frame)
                    buffer = []
    return rdfs
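To see what kind of file the parser above expects, here is a small self-contained sketch. The sample text mimics the layout LAMMPS writes for a time-averaged RDF (comment lines, then a "timestep number-of-rows" line followed by one row per bin); the numbers are synthetic. parse_rdf_frames is a hypothetical numpy-free variant of the same logic, used here only so the example runs without extra packages.

```python
import os
import tempfile

# Synthetic sample in the layout of a LAMMPS time-averaged RDF file
SAMPLE = """\
# Time-averaged data for fix rdf
# TimeStep Number-of-rows
# Row c_rdf[1] c_rdf[2] c_rdf[3]
100 3
1 0.5 0.0 0.0
2 1.5 0.8 2.1
3 2.5 1.2 6.4
200 3
1 0.5 0.0 0.0
2 1.5 0.9 2.3
3 2.5 1.1 6.2
"""

def parse_rdf_frames(path):
    """Return one (r_values, g_values) pair per frame in the file."""
    frames, rows, nbins = [], [], None
    with open(path) as handle:
        for line in handle:
            values = line.split()
            if not values or line.startswith("#"):
                continue  # skip blank lines and comments
            if len(values) == 2:
                nbins = int(values[1])  # frame header: timestep, bin count
                continue
            rows.append((float(values[1]), float(values[2])))
            if len(rows) == nbins:
                r, g = zip(*rows)
                frames.append((list(r), list(g)))
                rows = []
    return frames

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.rdf")
    with open(path, "w") as handle:
        handle.write(SAMPLE)
    frames = parse_rdf_frames(path)

# Average g(r) over all frames, bin by bin
mean_g = [sum(col) / len(frames) for col in zip(*(g for _, g in frames))]
print(len(frames), "frames; mean g(r) per bin:", mean_g)
```

Averaging over frames like this is the usual next step before plotting g(r) against r.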

Our workflow has 3 steps: train, deploy, and run:

Train: Use a dataset to train the neural network 🧠

Deploy: Turn the Python model into a standalone file for quick execution ⚡

Run: Use it in LAMMPS to perform tasks like Molecular Dynamics, Monte Carlo, Structural Minimization, etc. 🏃
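The three steps map onto three command-line calls. The sketch below only builds and prints them; it does not run anything. It assumes the 0.5.x-style nequip command-line tools that the Colab tutorial is based on (nequip-train and nequip-deploy build --train-dir), and an lmp executable from the LAMMPS build. The results directory, the deployed file name, and the LAMMPS input file name are all placeholders, so adjust them to your run.

```python
import shlex

def workflow_commands(config, train_dir, deployed_model, lammps_input):
    """Return the train, deploy, and run commands as argument lists."""
    return [
        # 1. Train: fit the network to the dataset described in the config
        ["nequip-train", config],
        # 2. Deploy: export the trained model to a standalone file
        ["nequip-deploy", "build", "--train-dir", train_dir, deployed_model],
        # 3. Run: use the deployed model from a LAMMPS input script
        ["lmp", "-in", lammps_input],
    ]

commands = workflow_commands(
    config="allegro/configs/tutorial.yaml",
    train_dir="results/<root>/<run_name>",  # placeholder, set by your config
    deployed_model="deployed.pth",          # placeholder file name
    lammps_input="si_md.in",                # placeholder LAMMPS input script
)
for command in commands:
    print(shlex.join(command))
```

Keeping the commands as argument lists makes it easy to pass them to subprocess.run later, once each step works when typed by hand.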

In this section, we will train an Allegro potential using a high-temperature silicon dataset sampled with AIMD at 800 K, containing 64 atoms per frame. We will only use 50 DFT structures for training. First, we download the dataset:

%%capture

# %%capture hides the cell output (errors included, lol)

# download data

!gdown --folder --id --no-cookies https://drive.google.com/drive/folders/1FEwF4i8IDHGmAIQ3RilA0jG9_lEX4Yk0?usp=sharing

!mkdir Si_data

!mv *.xyz ./Si_data

!ls Si_data

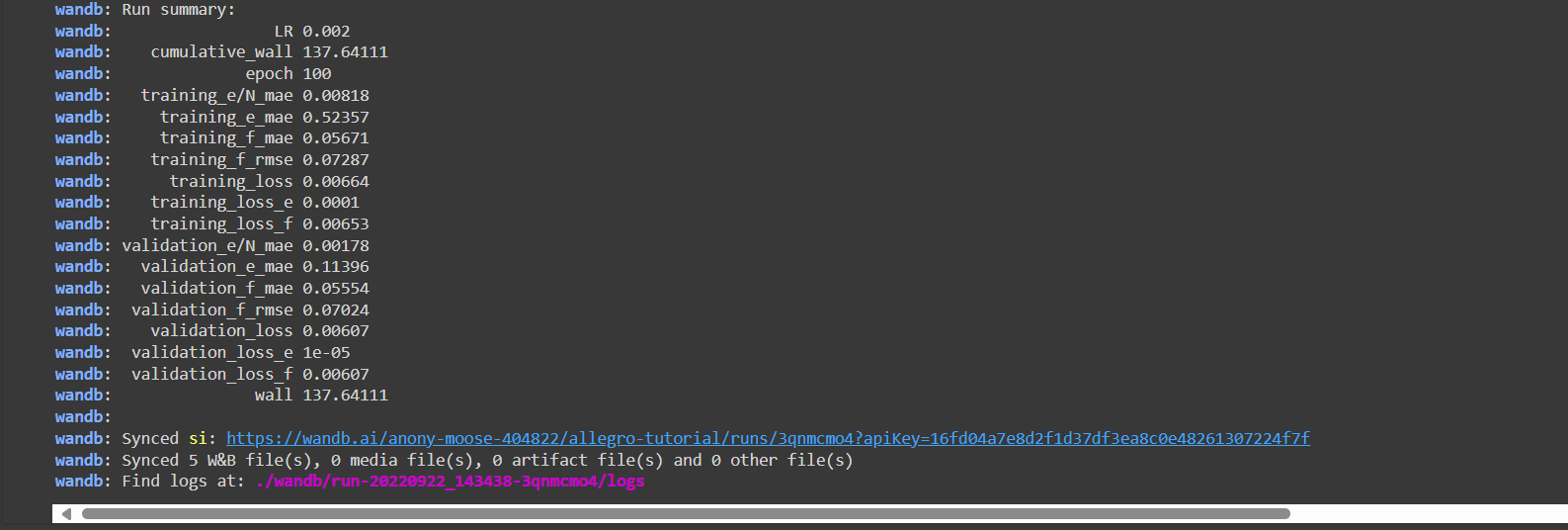
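Once the download finishes, it is worth checking that the file really contains what we expect (64 atoms per frame, and at least the 50 frames we want to train on). The sketch below reads the plain XYZ frame structure (atom count, one comment line, then one line per atom), which extended XYZ files also follow. atoms_per_frame is a hypothetical helper, and the demo runs on a tiny synthetic file rather than the real dataset.

```python
import os
import tempfile

def atoms_per_frame(path):
    """Return the number of atoms in each frame of an (extended) XYZ file."""
    counts = []
    with open(path) as handle:
        while True:
            header = handle.readline()
            if not header.strip():
                break  # end of file (or a trailing blank line)
            n_atoms = int(header)
            handle.readline()  # comment / metadata line
            for _ in range(n_atoms):
                handle.readline()  # skip the atom lines
            counts.append(n_atoms)
    return counts

# Tiny synthetic two-frame file, just to show the call
demo = "2\nframe 0\nSi 0 0 0\nSi 1 1 1\n2\nframe 1\nSi 0 0 0\nSi 1 1 2\n"
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.xyz")
    with open(demo_path, "w") as handle:
        handle.write(demo)
    counts = atoms_per_frame(demo_path)

print(f"{len(counts)} frames, atoms per frame: {sorted(set(counts))}")
```

For the silicon data you would call atoms_per_frame on the .xyz file inside Si_data and expect every entry to be 64.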

We train the Allegro model using the commands from the nequip package.

Remember to log in to Weights & Biases (wandb.ai) first. You will be able to track progress and view the results in a more intuitive way!

!rm -rf ./results # removes the previous results directory to avoid clashes

!nequip-train allegro/configs/tutorial.yaml --equivariance-test # nequip command

Ha! You got an error again, right? Once more, the original Colab file does not explain why.

The error is most likely a KeyError mentioning optimizer_params: the installed version doesn't recognize the nested format in which the optimizer parameters were provided.

To fix it, open allegro/configs/tutorial.yaml, delete the "optimizer_params:" line, and specify its parameters directly after the optimizer name instead of nesting them under optimizer_params.
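As a sketch of the change described above, the snippet below flattens a nested optimizer_params block into top-level keys. It assumes the flat keys follow an optimizer_<parameter> naming pattern, which is my reading of the fix and an assumption you should check against the example configs shipped with your nequip version. The parameter values are illustrative, not copied from tutorial.yaml, and flatten_optimizer_params is a hypothetical helper that works on a plain dict rather than on the YAML file itself.

```python
def flatten_optimizer_params(config):
    """Return a copy of `config` with optimizer_params moved to optimizer_<name> keys."""
    flat = {key: value for key, value in config.items() if key != "optimizer_params"}
    for name, value in (config.get("optimizer_params") or {}).items():
        # Assumed naming pattern: optimizer_<parameter>
        flat[f"optimizer_{name}"] = value
    return flat

# Illustrative values only
before = {
    "optimizer_name": "Adam",
    "optimizer_params": {
        "amsgrad": False,
        "betas": (0.9, 0.999),
        "eps": 1e-08,
        "weight_decay": 0.0,
    },
}
after = flatten_optimizer_params(before)
for key, value in after.items():
    print(f"{key}: {value}")
```

In the YAML file the same edit means removing the optimizer_params: line and writing each of its entries as its own top-level key under the optimizer name.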

Finally! After what felt like a thousand tries, I've succeeded—like a molecule that finally found its perfect bond! - author: me

Final Thoughts:

Let me know if you found this helpful! If you have any questions, feel free to comment below, and I'll try my best to answer as soon as possible.

GOOD LUCK EVERYBODY! KEEP Nequiping! 🤓

References:

NequIP_and_Allegro_Tutorial_2022.ipynb - Colab (google.com): Colab notebook for reference

E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials | Nature Communications: Original paper on NequIP

mir-group/nequip: NequIP is a code for building E(3)-equivariant interatomic potentials (github.com): GitHub repo of the original paper

Weights & Biases: The AI Developer Platform (wandb.ai): Makes life easier

THANKS!! 🙌

About the Author:

Chirag Sindhwani

I write about artificial and human intelligence
