Day 27 of 40DaysOfKubernetes: Set Up a Multi-Node Kubernetes Cluster Using Kubeadm

Rahul Vadakkiniyil

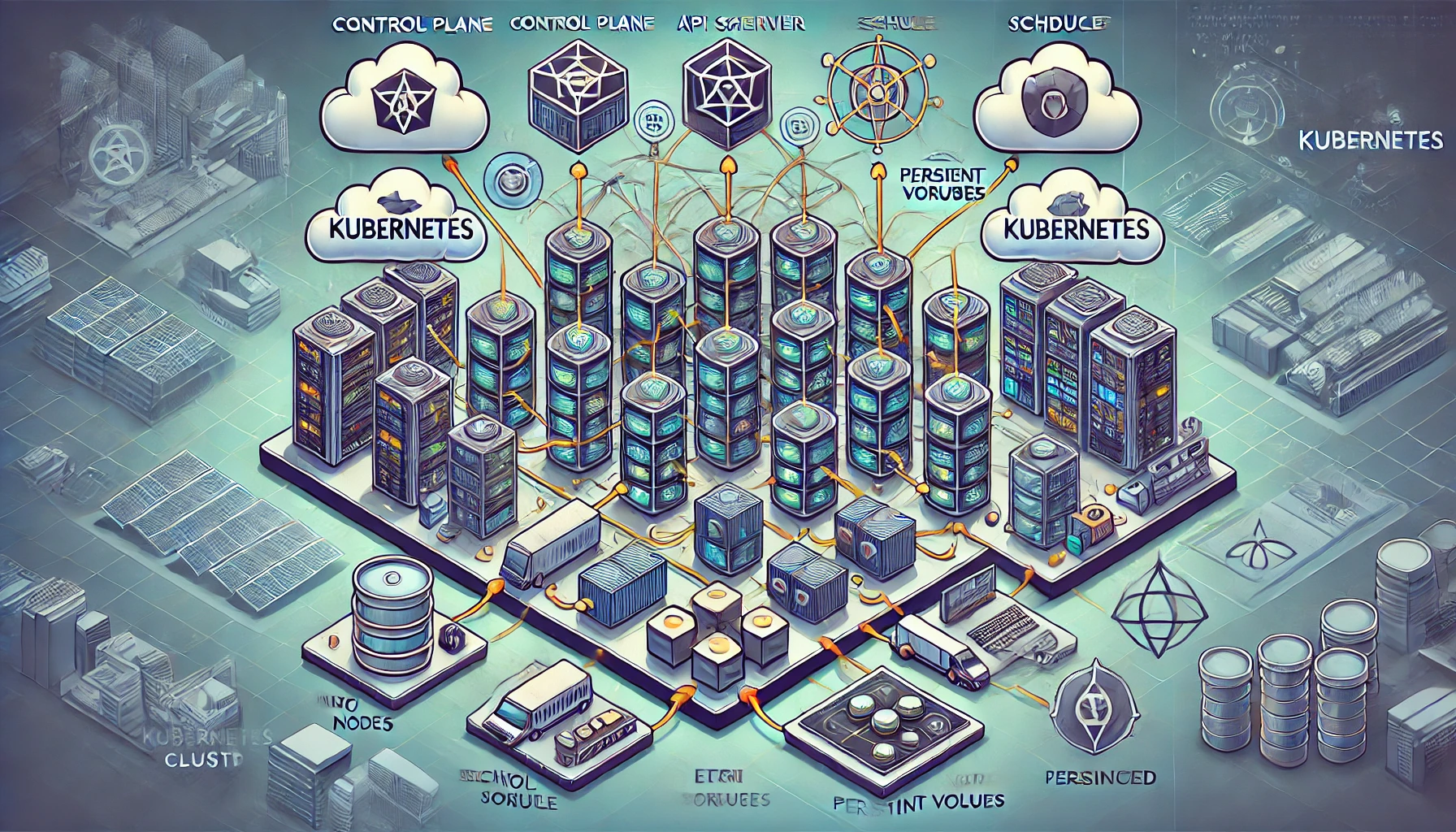

Introduction

In this 40 Days of Kubernetes series, we've been using a kind cluster on our local machine, which is good for development but not for production. While cloud services like EKS, AKS, or GKE offer managed Kubernetes, in this tutorial, we’ll learn how to set up a Kubernetes cluster using virtual machines (VMs) on the cloud. You can choose any cloud provider, but for this demo, I’ll use AWS. We will create three VMs: one Master node and two Worker nodes. We’ll set up two security groups—one for the master and one for the worker nodes—to make sure they can communicate properly. All Kubernetes components will be installed on these nodes using kubeadm. Let’s get started!

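What is kubeadm?

kubeadm is a tool designed to simplify setting up a Kubernetes cluster. It bootstraps the control plane components (the API Server, etcd, Controller Manager, and Scheduler), which it runs as static Pods on the control plane node. Once a network plugin is added, the cluster is ready to use.

kubeadm does not install the kubelet or kubectl itself. Those are separate packages installed alongside it, and this guide installs kubeadm, kubelet, and kubectl together. The kubelet is the agent that runs Pods on every node, and kubectl is the command-line client for the cluster.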

Key features of kubeadm

Cluster Initialization:

kubeadm can initialize a Kubernetes control plane node (master) with a single command, kubeadm init. This sets up all the necessary components: the API server, etcd, controller manager, and scheduler.

Joining Nodes:

After initializing the control plane, kubeadm provides a bootstrap token that worker nodes use to join the cluster with kubeadm join. Once a node has joined, it is ready to run workloads.

Networking:

kubeadm doesn't install a pod network. After kubeadm init, you install a CNI networking plugin (such as Calico, Flannel, or Weave), which is essential for pod-to-pod communication. Until one is running, the nodes report NotReady.

Certificate Management:

kubeadm automatically generates the certificates that secure communication between Kubernetes components, and it can renew them later with kubeadm certs renew.

Upgrade and Maintenance:

kubeadm can upgrade the control plane components safely (kubeadm upgrade plan, kubeadm upgrade apply) and keeps the cluster consistent and functional during the process.

Customization:

While kubeadm provides a default setup, it can be customized through configuration files (kubeadm init --config), which makes it adaptable to different environments and use cases.
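On certificate management: kubeadm-issued component certificates are valid for one year by default, and kubeadm certs check-expiration reports how long each one has left. The sketch below shows the same check for a single certificate file using openssl x509 -checkend. The function name is hypothetical, and the path in the example assumes kubeadm's default /etc/kubernetes/pki layout.

```shell
# cert_valid_for_days CERT DAYS
# Hypothetical helper: succeeds (exit 0) if CERT will still be valid
# DAYS days from now. It only wraps `openssl x509 -checkend SECONDS`.
cert_valid_for_days() {
  openssl x509 -checkend $(( $2 * 86400 )) -noout -in "$1" >/dev/null
}

# Example on a control plane node:
# cert_valid_for_days /etc/kubernetes/pki/apiserver.crt 30 || echo "renew soon"
```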

Steps to Set Up the Multi-Node Kubernetes Cluster

Create Security Groups

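First, create two security groups: one for the Master node and one for the Worker nodes. Add inbound rules that let the nodes reach each other on the ports Kubernetes and the pod network use. For example, the master needs TCP 6443 (API server) open to the workers, and every node needs TCP 10250 (kubelet) open to the master.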
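The exact rules depend on your setup. The script below is a dry run that only prints the matching AWS CLI commands, so you can review them before running anything. It assumes the ports from the Kubernetes "Ports and Protocols" page plus Calico's BGP (179/tcp), VXLAN (4789/udp) and Typha (5473/tcp) ports, and the Calico ports in particular are an assumption about its default setup. MASTER_SG and WORKER_SG are placeholders for your own security group IDs. NodePort services (30000-32767) and kubectl access from your own machine (6443) would need extra rules.

```shell
# Dry run: prints AWS CLI commands, executes nothing.
# MASTER_SG and WORKER_SG are hypothetical placeholders for your security group IDs.
MASTER_SG="${MASTER_SG:-sg-MASTER}"
WORKER_SG="${WORKER_SG:-sg-WORKER}"

# Calico node-to-node traffic (assumed: BGP, VXLAN, Typha).
CALICO_RULES="tcp 179
udp 4789
tcp 5473"

# print_rules TARGET_SG SOURCE_SG RULES
# RULES holds one "protocol port" pair per line.
print_rules() {
  printf '%s\n' "$3" | while read -r proto port; do
    [ -n "$proto" ] || continue
    echo "aws ec2 authorize-security-group-ingress --group-id $1 --protocol $proto --port $port --source-group $2"
  done
}

print_all_rules() {
  # Workers -> master: API server plus Calico
  print_rules "$MASTER_SG" "$WORKER_SG" "tcp 6443
$CALICO_RULES"
  # Master -> workers: kubelet API plus Calico
  print_rules "$WORKER_SG" "$MASTER_SG" "tcp 10250
$CALICO_RULES"
  # Worker <-> worker: Calico only
  print_rules "$WORKER_SG" "$WORKER_SG" "$CALICO_RULES"
}

# Example: MASTER_SG=<your master SG id> WORKER_SG=<your worker SG id>, then call print_all_rules
```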

For SSH access on port 22, set the source to your local machine's public IP (as a /32 range). You can get your IP address by running:

```shell
curl ifconfig.me
```

Provision the VMs

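Create three instances of type t2.medium (2 vCPUs and 4 GiB of memory each) on AWS, or comparable VMs on your chosen cloud provider:

1 Master node

2 Worker nodes

The commands in this guide use apt, so pick an Ubuntu (or other Debian-based) image.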
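The instance size matters because the kubeadm installation docs list 2 GB of RAM per machine and 2 CPUs for control plane machines as minimums. The sketch below checks a VM against those numbers, with 2 GB taken as 2048 MB. That threshold is an approximation, and kubeadm's own preflight checks remain authoritative. The function names are hypothetical.

```shell
# meets_minimum CPUS MEM_MB
# Succeeds if the numbers meet the 2 CPU / 2 GB (2048 MB) minimum.
meets_minimum() {
  [ "$1" -ge 2 ] && [ "$2" -ge 2048 ]
}

# check_this_host: reads the CPU count and MemTotal of the current machine.
check_this_host() {
  cpus=$(nproc)
  mem_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  echo "CPUs: $cpus, memory: ${mem_mb} MB"
  meets_minimum "$cpus" "$mem_mb"
}

# Example, after SSHing into a VM:
# check_this_host || echo "below the kubeadm minimum"
```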

Run the following steps on the Master VM.

1) SSH into the Master EC2 server.

2) Disable swap (by default, the kubelet refuses to start while swap is enabled):
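In the commands below, swapoff -a turns swap off right away, and the sed edit prefixes every /etc/fstab line that contains " swap " with #, so swap stays off after a reboot. The sketch below expresses both ideas as functions that take a file path, so you can try them on a copy first. The function names are hypothetical, and GNU sed is assumed for -i.

```shell
# comment_out_swap FSTAB
# Same edit as the article's sed: prefix lines containing " swap " with '#'.
comment_out_swap() {
  sed -i '/ swap / s/^\(.*\)$/#\1/g' "$1"
}

# swap_is_off [SWAPS_FILE]
# /proc/swaps has one header line plus one line per active swap device,
# so swap is off when the file has at most one line.
swap_is_off() {
  [ "$(wc -l < "${1:-/proc/swaps}")" -le 1 ]
}

# Example: try the edit on a copy before touching the real file.
# cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test && cat /tmp/fstab.test
```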

```shell
sudo swapoff -a
sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
```

3) Forwarding IPv4 and letting iptables see bridged traffic
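This step loads the overlay and br_netfilter modules and sets three kernel parameters. Each sysctl key corresponds to a file under /proc/sys, with dots replaced by slashes. The two net.bridge files only exist once br_netfilter is loaded. The sketch below is a read-only check you could run after the commands below. The function name is hypothetical, and the optional root-directory argument is an assumption added so the function can be tried against a fake directory tree.

```shell
# check_k8s_sysctls [ROOT]
# Reads the three kernel parameters from ROOT (default /proc/sys) and
# prints a FAIL line for each one that is not 1. Returns 1 if any failed.
check_k8s_sysctls() {
  root="${1:-/proc/sys}"
  status=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    # sysctl key -> file path: net.ipv4.ip_forward -> $root/net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr . /)"
    val=$(cat "$path" 2>/dev/null || true)
    if [ "$val" != "1" ]; then
      echo "FAIL $key=${val:-missing}"
      status=1
    fi
  done
  return "$status"
}

# Example (no root needed):
# check_k8s_sysctls && echo "kernel parameters OK"
```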

```shell
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

# sysctl params required by setup, params persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system

# Verify that the br_netfilter, overlay modules are loaded by running the following commands:
lsmod | grep br_netfilter
lsmod | grep overlay

# Verify that the net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables, and net.ipv4.ip_forward system variables are set to 1 in your sysctl config by running the following command:
sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
```

4) Install the container runtime (containerd)
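Since Kubernetes v1.22, kubeadm configures the kubelet to use the systemd cgroup driver by default, and containerd has to use the same driver. That is what the sed line below that sets SystemdCgroup = true is for. The sketch below checks /etc/containerd/config.toml once the commands have run. The function name is hypothetical, and it is a plain text match rather than a TOML parser.

```shell
# systemd_cgroup_enabled CONFIG_TOML
# Succeeds if the file contains an uncommented "SystemdCgroup = true" line.
systemd_cgroup_enabled() {
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true[[:space:]]*$' "$1"
}

# Example:
# systemd_cgroup_enabled /etc/containerd/config.toml || echo "SystemdCgroup is not enabled"
```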

```shell
curl -LO https://github.com/containerd/containerd/releases/download/v1.7.14/containerd-1.7.14-linux-amd64.tar.gz
sudo tar Cxzvf /usr/local containerd-1.7.14-linux-amd64.tar.gz
curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
sudo mkdir -p /usr/local/lib/systemd/system/
sudo mv containerd.service /usr/local/lib/systemd/system/
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml
sudo sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/g' /etc/containerd/config.toml
sudo systemctl daemon-reload
sudo systemctl enable --now containerd

# Check that containerd service is up and running
systemctl status containerd
```

5) Install runc
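The runc download below, like the containerd and CNI plugin downloads, uses amd64 builds. That matches a t2.medium (x86_64). On ARM instances such as Graviton, the file names would need a different architecture suffix. The sketch below maps uname -m output to the amd64/arm64 naming. The function name is hypothetical, and the example URL assumes runc publishes a runc.arm64 asset alongside runc.amd64, so check the release page first.

```shell
# release_arch [MACHINE]
# Maps `uname -m` style names to the amd64/arm64 suffixes used in release file names.
release_arch() {
  machine="${1:-$(uname -m)}"
  case "$machine" in
    x86_64|amd64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
}

# Example:
# ARCH=$(release_arch)
# curl -LO "https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.${ARCH}"
```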

```shell
curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64
sudo install -m 755 runc.amd64 /usr/local/sbin/runc
```

6) Install the CNI plugins
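The release pages these files come from also publish checksums. The CNI plugins release, for instance, lists a .sha256 file next to each tarball, but check the release page for the exact file names. The sketch below compares a downloaded file against a published SHA-256 value. The function name is hypothetical, and GNU sha256sum is assumed.

```shell
# verify_sha256 FILE EXPECTED_HEX
# Succeeds only if FILE's SHA-256 digest equals EXPECTED_HEX.
verify_sha256() {
  echo "$2  $1" | sha256sum -c --status -
}

# Example (paste the hash from the release page; none is assumed here):
# verify_sha256 cni-plugins-linux-amd64-v1.5.0.tgz "<hash from the release page>" || echo "checksum mismatch"
```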

```shell
curl -LO https://github.com/containernetworking/plugins/releases/download/v1.5.0/cni-plugins-linux-amd64-v1.5.0.tgz
sudo mkdir -p /opt/cni/bin
sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.5.0.tgz
```

7) Install kubeadm, kubelet and kubectl
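The pkgs.k8s.io repositories are organized per minor version. Both the key URL and the apt source line below contain v1.29, and the pinned package version 1.29.6-1.1 has to exist in that repository. The sketch below derives both strings from a single minor-version value so they cannot drift apart. The function names are hypothetical, and apt-cache madison is the standard apt command for listing the versions a repository offers.

```shell
# Hypothetical helpers: build the pkgs.k8s.io key URL and apt source line
# for a Kubernetes minor version such as 1.29.
k8s_release_key_url() {
  echo "https://pkgs.k8s.io/core:/stable:/v$1/deb/Release.key"
}

k8s_apt_source() {
  echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v$1/deb/ /"
}

# Example: configure the repository, then list versions before pinning one.
# k8s_apt_source 1.29 | sudo tee /etc/apt/sources.list.d/kubernetes.list
# sudo apt-get update && apt-cache madison kubeadm
```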

```shell
sudo apt-get update
sudo apt-get install -y apt-transport-https ca-certificates curl gpg

# Create the keyrings directory first if it does not exist (older Ubuntu releases)
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update
sudo apt-get install -y kubelet=1.29.6-1.1 kubeadm=1.29.6-1.1 kubectl=1.29.6-1.1 --allow-downgrades --allow-change-held-packages
sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version
kubelet --version
kubectl version --client
```

8) Configure crictl to work with containerd

```shell
sudo crictl config runtime-endpoint unix:///var/run/containerd/containerd.sock
```

9) Initialize the control plane

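```shell
sudo kubeadm init --pod-network-cidr=192.168.0.0/16 --apiserver-advertise-address=172.31.89.68 --node-name master
```

Note: in --apiserver-advertise-address, replace 172.31.89.68 with your master node's private IP, not its public IP. The advertise address must be an IP assigned to the instance's network interface, and on EC2 that is the private IP.

Save the join command displayed at the end of this process; you'll need it later for the worker nodes.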
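The same settings can also be kept in a kubeadm configuration file instead of flags. The sketch below writes such a file. It assumes the kubeadm.k8s.io/v1beta3 API used by kubeadm 1.29, and the function name is hypothetical. The pod subnet must not overlap the VPC range, and 192.168.0.0/16 does not overlap AWS's default VPC range of 172.31.0.0/16. Note that kubeadm does not allow --config to be mixed with most of the flags above, so the file replaces them.

```shell
# write_kubeadm_config FILE ADVERTISE_IP
# Writes a config roughly equivalent to the kubeadm init flags used above.
write_kubeadm_config() {
  cat > "$1" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $2
  bindPort: 6443
nodeRegistration:
  name: master
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.29.6
networking:
  podSubnet: 192.168.0.0/16
EOF
}

# Example (hostname -I lists the node's addresses; the first is usually the private IP):
# write_kubeadm_config kubeadm-config.yaml "$(hostname -I | awk '{print $1}')"
# sudo kubeadm init --config kubeadm-config.yaml
```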

10) Prepare the kubeconfig (these commands are also printed in the kubeadm init output):

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

11) Install Calico

```shell
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/tigera-operator.yaml
curl https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/custom-resources.yaml -O
kubectl apply -f custom-resources.yaml
```

12) Verify the nodes

```shell
kubectl get nodes
```
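Right after Calico is applied, kubectl get nodes typically shows the master as NotReady until the Calico pods are running. If it stays NotReady, one thing worth checking is that the IP pool CIDR in custom-resources.yaml matches the --pod-network-cidr passed to kubeadm init. Both are 192.168.0.0/16 here. The sketch below performs that comparison. The function names are hypothetical, and the parsing is a plain text match rather than a YAML parser.

```shell
# calico_pool_cidr MANIFEST
# Prints the first "cidr:" value in the manifest (the default IP pool).
calico_pool_cidr() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "cidr:") { print $(i + 1); exit } }' "$1"
}

# pod_cidr_matches MANIFEST EXPECTED_CIDR
pod_cidr_matches() {
  [ "$(calico_pool_cidr "$1")" = "$2" ]
}

# Example, run where custom-resources.yaml was downloaded:
# pod_cidr_matches custom-resources.yaml 192.168.0.0/16 || echo "CIDR mismatch"
# kubectl wait --for=condition=Ready nodes --all --timeout=300s
```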

Perform the following steps on both worker nodes.

First, perform steps 1-8 (from SSH access through the crictl configuration) on both nodes.

Then, on each worker node, run the join command that kubeadm init printed on the Master node in step 9. It is similar to this:

```shell
sudo kubeadm join 172.31.71.210:6443 --token xxxxx --discovery-token-ca-cert-hash sha256:xxx
```

If you forgot to copy the command, or the token has expired (bootstrap tokens are valid for 24 hours by default), run the following on the master node to generate the join command again:

```shell
sudo kubeadm token create --print-join-command
```
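The --discovery-token-ca-cert-hash value is the SHA-256 digest of the cluster CA's public key in DER-encoded SubjectPublicKeyInfo form. A joining node uses it to verify that it is talking to the right control plane. The kubeadm documentation gives an openssl pipeline for computing it on the master, and the sketch below wraps that pipeline in a function. The function name is hypothetical, and the sketch assumes an RSA CA key, which is kubeadm's default.

```shell
# ca_cert_hash CA_CERT
# Prints "sha256:<hex>" for the public key in CA_CERT.
ca_cert_hash() {
  hex=$(openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  echo "sha256:$hex"
}

# Example on the master node:
# ca_cert_hash /etc/kubernetes/pki/ca.crt
```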

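Verifying the nodes from the master node

Run kubectl get nodes on the master. The first worker node should now appear in the list, and it becomes Ready once its Calico pods are running.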

Verifying the nodes from the worker node

First, print the kubeconfig on the master node and copy its contents:

```shell
cat $HOME/.kube/config
```

On the worker node, paste the content into $HOME/.kube/config. Then make the file readable only by your user, because it contains cluster-admin credentials:

```shell
mkdir -p $HOME/.kube
nano $HOME/.kube/config
chmod 600 $HOME/.kube/config
```
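The kubeconfig copied from the master (admin.conf) carries cluster-admin credentials, so it is worth confirming that no other user on the worker can read it. The sketch below checks the file's permissions. The function name is hypothetical, and GNU stat is assumed for the -c '%a' format.

```shell
# kubeconfig_is_private FILE
# Succeeds if FILE grants no permissions to group or others (e.g. mode 600 or 400).
kubeconfig_is_private() {
  mode=$(stat -c '%a' "$1") || return 1
  # The last two octal digits are the group and other permissions.
  case "$mode" in
    *00) return 0 ;;
    *) return 1 ;;
  esac
}

# Example:
# kubeconfig_is_private "$HOME/.kube/config" || chmod 600 "$HOME/.kube/config"
```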

Now run kubectl get nodes on the worker node.

Follow the same steps for the second worker node.

Conclusion

Setting up a multi-node Kubernetes cluster using kubeadm on cloud instances provides hands-on experience in configuring and managing a Kubernetes environment from scratch. While managed Kubernetes services simplify many aspects of cluster management, understanding the underlying processes improves your ability to troubleshoot, customize, and optimize your Kubernetes deployments. By following the steps in this guide, you now have a functional multi-node cluster for further exploration and experimentation with Kubernetes.

Reference

https://www.youtube.com/watch?v=WcdMC3Lj4tU&list=PLl4APkPHzsUUOkOv3i62UidrLmSB8DcGC&index=28
