Understanding Multi-threading in Java: Essential Basics - Part 1

Petra's Tech Notes


Multi-threading. Threads. Processes. Concurrency. Parallelism. Sync/Async programming. Race conditions. These concepts can be daunting, even for those who have encountered them in their work. Despite my ongoing learning and experience with these topics, I felt the need for a more structured approach to truly understand them, especially in the context of Java. My goal is to enhance my ability to solve related problems and write thread-safe programs as a software engineer.

We'll start from the basics, using practical examples to illustrate key concepts. This series will serve as a future reference for me, and I hope it proves useful to you as well.

As a disclaimer, I compiled these notes while studying the Java MultiThreading for Senior Engineering Interviews course, which is included in my Educative.io subscription. While my notes may resemble the course content, they also include my own solutions and insights.

Program vs. Process vs. Thread

What is a program? A program is a set of instructions to perform a specific task.

What is a process? A process is a program in execution.

What is a thread? A thread is the smallest unit of execution within a process.

```
Program
└── Process
    ├── Thread 1
    ├── Thread 2
    └── Thread N
```

A process can have several threads, but a thread belongs to exactly one process. A process holds state, such as its memory, which is shared among its threads, while each thread also has its own private state (for example, its own call stack). Code that accesses the shared state is called a critical section, and this is where race conditions occur if our code is not thread-safe. A textbook example is a counter incremented by several threads at once. But before we jump into that, let’s look more closely at threads and at the reasons for writing multi-threaded programs.
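As a quick preview of that textbook case, here is a minimal sketch in which several threads increment a shared counter (the class and method names are my own, hypothetical choices). The unsafe version uses a plain `count++`, which is really a read, an add and a write, so concurrent updates can be lost. The safe version swaps in `java.util.concurrent.atomic.AtomicLong`, which is one of several possible fixes; a `synchronized` method would work too.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;

class CounterRaceDemo {
    // NOT thread-safe: count++ is a read, an add and a write, so two threads can
    // read the same value and one of the two increments is lost.
    static class UnsafeCounter {
        private long count = 0;
        void increment() { count++; }
        long get() { return count; }
    }

    // Thread-safe: AtomicLong performs the read-modify-write as one atomic operation.
    static class AtomicCounter {
        private final AtomicLong count = new AtomicLong();
        void increment() { count.incrementAndGet(); }
        long get() { return count.get(); }
    }

    // Starts `threads` threads that each call `increment` `perThread` times, then waits for all of them.
    static void hammer(Runnable increment, int threads, int perThread) {
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread worker = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    increment.run();
                }
            });
            workers.add(worker);
            worker.start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // join also makes the workers' writes visible to this thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
        }
    }

    static long unsafeTotal(int threads, int perThread) {
        UnsafeCounter counter = new UnsafeCounter();
        hammer(counter::increment, threads, perThread);
        return counter.get();
    }

    static long atomicTotal(int threads, int perThread) {
        AtomicCounter counter = new AtomicCounter();
        hammer(counter::increment, threads, perThread);
        return counter.get();
    }

    public static void main(String[] args) {
        int threads = 4;
        int perThread = 1_000_000;
        System.out.println("expected: " + (long) threads * perThread);
        System.out.println("unsafe:   " + unsafeTotal(threads, perThread)); // may be lower; varies per run
        System.out.println("atomic:   " + atomicTotal(threads, perThread)); // always the expected total
    }
}
```

Lost updates can only make the unsafe total smaller, never larger, and how many are lost depends on scheduling, so the unsafe result changes from run to run.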

The days are long gone when computers had only one central processing unit (CPU). Back then, a single CPU ran the threads of all the processes on your machine, with the operating system’s scheduler deciding which thread ran next. Each thread would get a slice of time on the CPU, and when that time was up, another thread would be scheduled. This gave the illusion of multitasking, yet only one thread was ever executing at any given moment.

Benefits of execution with multiple threads:

Higher throughput

Illusion of multitasking

Efficient utilisation of resources: threads are cheaper to create than processes, as they share their process’s memory

Drawbacks:

Although multi-threaded programs tend to have higher throughput, in some cases single-threaded execution performs better, because thread creation and context switching can eat up the performance gains

Multi-threaded programs are considerably harder to debug

They are more expensive to maintain

They can even run slower, since creating threads consumes resources and acquiring locks shared between threads can add significant overhead.

To analyse the potential performance gains, let’s take a sum-up function that adds up all the numbers from 0 to n. As we will see, it only benefits from multiple threads when n is big enough; otherwise, using more than one thread brings no performance gain.

```java
package com.example;

public class SumUp {

    long counter = 0;
    long startRange;
    long endRange;

    public SumUp(long startRange, long endRange) {
        this.startRange = startRange;
        this.endRange = endRange;
    }

    // Adds every number in [startRange, endRange] (both ends inclusive) to counter
    public void add() {
        for (long i = startRange; i <= endRange; i++) {
            counter += i;
        }
    }
}
```

Let’s run it for n = 100000 from the main method:

```java
package com.example;

public class Main {

    public static void main(String[] args) {
        long start = System.currentTimeMillis();

        // Sum 0..100000 entirely on the main thread
        SumUp sumUp = new SumUp(0, 100000);
        sumUp.add();

        long end = System.currentTimeMillis();
        System.out.println("Single thread final count = " + sumUp.counter + " took " + (end - start) + "ms");
    }
}
```

When I ran it a few times, I got one of the following results:

Single thread final count = 705082704 took 1ms

Single thread final count = 705082704 took 2ms

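A note on the printed count: the actual sum of 0 to 100000 is 5000050000, and 705082704 is that value modulo 2^32, which is what the program reports when the counter is an int that silently overflows. With the long counter shown in SumUp, the program prints 5000050000.

Now let’s split the work between two threads, each summing half of the range: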

```java
package com.example;

public class Main {

    public static void main(String[] args) throws InterruptedException {
        long start = System.currentTimeMillis();

        // Split the range into two non-overlapping halves
        SumUp sumUp = new SumUp(0, 50000);
        SumUp sumUp2 = new SumUp(50001, 100000);

        // Each thread runs add() on its own SumUp instance, so no state is shared
        Thread t1 = new Thread(sumUp::add);
        Thread t2 = new Thread(sumUp2::add);
        t1.start();
        t2.start();

        // Wait for both threads to finish before reading their results
        t1.join();
        t2.join();

        long finalCount = sumUp.counter + sumUp2.counter;
        long end = System.currentTimeMillis();
        System.out.println("Multiple threads final count = " + finalCount + " took " + (end - start) + "ms");
    }
}
```

Some of the results I got when running the above code were:

Multiple threads final count = 705082704 took 5ms

Multiple threads final count = 705082704 took 24ms

Multiple threads final count = 705082704 took 22ms

Multiple threads final count = 705082704 took 8ms

Although the results vary significantly, none of them come close to the 1 or 2ms we got with a single thread. Thus, for a relatively small n, no performance gains can be realised with multiple threads: creating and starting the threads costs more than the work they save.

Now let’s use Integer.MAX_VALUE for our sum-up function.

Results observed when the single main thread executes the task:

Single thread final count = 2305843008139952128 took 3028ms

Single thread final count = 2305843008139952128 took 2548ms

Single thread final count = 2305843008139952128 took 3299ms

Single thread final count = 2305843008139952128 took 3554ms

Results observed when two threads execute the task:

Multiple threads final count = 2305843009213693951 took 1231ms

Multiple threads final count = 2305843009213693951 took 1698ms

Multiple threads final count = 2305843009213693951 took 2600ms

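With this larger n, we can start to see the potential performance gains of using two threads. Still, whether our software will actually gain anything from multi-threaded execution should always be carefully analysed.

Note also that the two-thread totals are 1073741823 (Integer.MAX_VALUE / 2) higher than the single-thread total, which is the correct one: 0 + 1 + ... + Integer.MAX_VALUE = 2305843008139952128. This suggests that in these runs the midpoint was summed by both threads; with non-overlapping halves, as in the 100000 example (0 to 50000 and 50001 to 100000), both versions report the same total.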

When we can show that multiple threads pay off, the hardware is ready for it: modern multi-core machines have several cores, each of which can execute one thread at a time, so threads can genuinely run in parallel.
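The two-thread experiment generalises naturally: split the range into one chunk per available core and let a thread pool sum the chunks in parallel. The sketch below assumes `Runtime.getRuntime().availableProcessors()` as the number of chunks and uses an `ExecutorService` instead of creating `Thread` objects by hand; `ParallelSumUp`, `parallelSum` and `sumRange` are illustrative names, not part of the original code. Each chunk ends one number before the next begins, so no value is counted twice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ParallelSumUp {
    // Sums every number in [start, end] on the calling thread.
    static long sumRange(long start, long end) {
        long total = 0;
        for (long i = start; i <= end; i++) {
            total += i;
        }
        return total;
    }

    // Splits [0, n] into `parts` non-overlapping chunks and sums them on a fixed-size pool.
    static long parallelSum(long n, int parts) {
        ExecutorService pool = Executors.newFixedThreadPool(parts);
        try {
            List<Future<Long>> partials = new ArrayList<>();
            long chunk = (n + 1) / parts; // [0, n] contains n + 1 numbers
            for (int p = 0; p < parts; p++) {
                long start = p * chunk;
                // The last chunk also takes the remainder, so every number is summed exactly once.
                long end = (p == parts - 1) ? n : start + chunk - 1;
                partials.add(pool.submit(() -> sumRange(start, end)));
            }
            long total = 0;
            for (Future<Long> partial : partials) {
                total += partial.get(); // blocks until that chunk has been summed
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // lets the pool's threads exit once their work is done
        }
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        long n = Integer.MAX_VALUE;
        long start = System.currentTimeMillis();
        long total = parallelSum(n, cores);
        long end = System.currentTimeMillis();
        System.out.println(cores + " threads final count = " + total + " took " + (end - start) + "ms");
    }
}
```

A pool also amortises the thread-creation cost mentioned among the drawbacks, since the same threads can be reused for later batches of work.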

Concurrency vs. Parallelism

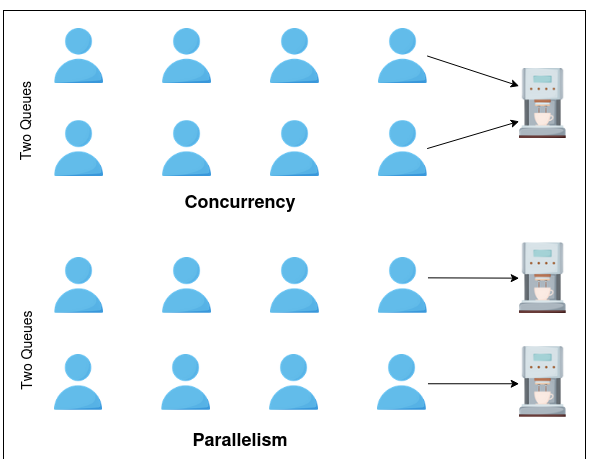

Concurrency and parallelism are often mentioned side by side, yet the differences between them are often not understood. Let’s see what each concept means.

A concurrent system can have multiple programs in progress at the same time, but not necessarily executing at the same instant. Each process is split into threads, and the scheduler interleaves threads belonging to different processes, so every running program makes progress over the same period of time.

A parallel system, by contrast, executes multiple programs at literally the same instant. This is enabled by multi-processor (or multi-core) hardware.

Source: Educative.io

Preemptive vs. Co-operative Multitasking

There are two ways in which a system can achieve concurrency:

Preemptive multitasking: The operating system decides which thread runs on the CPU and for how long, so individual threads and processes cannot know when they will be executed. This is convenient for the programmer, who doesn’t have to decide in code when to give control back to the CPU.

Co-operative multitasking: In this scenario, well-behaved programs coordinate among themselves and voluntarily give control back to the scheduler. This happens when they become idle, decide they have used up their share of time, or are logically blocked (waiting to acquire a lock, for example). Consequently, the operating system’s scheduler cannot interrupt a running process; it can only pick the next one once the current one gives up control.

Synchronous vs. Asynchronous Programming

Synchronous programming is a synonym for serial execution: statements run line by line, in the order specified in the code. Once a function is invoked, the caller is blocked until that function completes, and only then does execution move on to the rest of the program.

Asynchronous programming: “Asynchronous programming is a means of parallel programming in which a unit of work runs separately from the main application thread and notifies the calling thread of its completion, failure or progress.” (Wikipedia)

From this we can establish that, in asynchronous programming, methods do not necessarily execute in the order in which they are written in the code. Async functions typically return a future or a promise, which we can poll for the result. Alternatively, we can register a callback that runs on the result once the async function has completed. Naturally, these functions are non-blocking.
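In Java, this future-and-callback style maps onto `java.util.concurrent.CompletableFuture`. Here is a minimal sketch in which `slowSquare` is a hypothetical stand-in for a slow call: `supplyAsync` returns a future immediately, `thenApply` and `thenAccept` register follow-up steps and callbacks, and `isDone()` lets the caller poll without blocking.

```java
import java.util.concurrent.CompletableFuture;

class AsyncDemo {
    // Hypothetical slow operation standing in for, e.g., a remote API call.
    static long slowSquare(long x) {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return x * x;
    }

    // Returns a future immediately; by default the work runs on ForkJoinPool.commonPool(),
    // and thenApply chains a follow-up step that runs once the square is available.
    static CompletableFuture<Long> squarePlusOne(long x) {
        return CompletableFuture.supplyAsync(() -> slowSquare(x))
                .thenApply(square -> square + 1);
    }

    public static void main(String[] args) throws InterruptedException {
        CompletableFuture<Long> future = squarePlusOne(7);

        // Callback style: register what to do with the result; this call does not block.
        CompletableFuture<Void> printed =
                future.thenAccept(result -> System.out.println("callback received " + result));

        // Polling style: main keeps doing its own work and checks isDone() now and then.
        int polls = 0;
        while (!future.isDone()) {
            polls++;
            Thread.sleep(20);
        }
        printed.join(); // wait until the callback has finished printing
        System.out.println("main polled " + polls + " times; result = " + future.join());
    }
}
```

The call to `squarePlusOne(7)` returns right away, which is why the main thread is free to poll (or do anything else) while the square is being computed.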

In single-threaded environments, async functions offer an alternative route to concurrency: at least two tasks can be in progress at the same time without any extra threads. This relies on a cooperative multitasking model, since each task has to hand control back voluntarily.
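To make the cooperative model concrete, here is a toy single-threaded scheduler. It is a sketch built on a made-up task interface, not how any real event loop is implemented: each task is a `BooleanSupplier` that performs one small step and returns `true` while it still has work left. Returning is the task’s way of voluntarily handing control back, and a single thread round-robins over the tasks, so both make progress in an interleaved fashion. `CooperativeLoop`, `run` and `stepper` are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.BooleanSupplier;

class CooperativeLoop {
    // Round-robin over the tasks on the current thread until every task reports it is done.
    // A task "yields" simply by returning after one step.
    static void run(List<BooleanSupplier> tasks) {
        Queue<BooleanSupplier> ready = new ArrayDeque<>(tasks);
        while (!ready.isEmpty()) {
            BooleanSupplier task = ready.poll();
            if (task.getAsBoolean()) {
                ready.add(task); // still has work: back of the queue
            }
        }
    }

    // Builds a task that logs name + step number, one step per turn, for `steps` turns.
    static BooleanSupplier stepper(String name, int steps, List<String> log) {
        int[] next = {0}; // mutable step counter captured by the lambda
        return () -> {
            log.add(name + next[0]);
            next[0]++;
            return next[0] < steps;
        };
    }

    static List<String> demo() {
        List<String> log = new ArrayList<>();
        run(List.of(stepper("A", 3, log), stepper("B", 2, log)));
        return log;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [A0, B0, A1, B1, A2]
    }
}
```

If one task never returned, for example by looping forever inside a single step, the other task would never run again, which is why cooperative multitasking depends on well-behaved tasks.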

Moving on, let’s look at two types of programs that can both benefit from multi-threading, and in what ways:

CPU-bound vs. I/O-bound Programs

CPU-bound programs: These programs can be made faster by making the CPU more powerful (vertical scaling). But there is an upper limit to that, beyond which we need to increase the number of CPUs instead. To utilise all these CPUs in parallel, we have to write multi-threaded programs.

I/O-bound programs: These programs spend much of their time reading from or writing to disk or network interfaces (API calls, for example) while the CPU sits idle. Multi-threading can increase efficiency here too: a thread that is waiting for I/O gives up the CPU, so another thread that needs CPU time can make use of it.
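A minimal sketch of the I/O-bound case, assuming `Thread.sleep` as a stand-in for a blocking API call (`fakeApiCall` is hypothetical). Done sequentially, the waits add up; with one pool thread per call, the waits overlap, because a sleeping thread does not occupy the CPU.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class IoBoundDemo {
    static final long CALL_MILLIS = 200;

    // Hypothetical blocking I/O call: the thread just waits, without using the CPU.
    static String fakeApiCall(int id) {
        try {
            Thread.sleep(CALL_MILLIS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "response-" + id;
    }

    // Makes `calls` requests one after another; returns the elapsed time in ms.
    static long sequentialMillis(int calls) {
        long start = System.nanoTime();
        for (int i = 0; i < calls; i++) {
            fakeApiCall(i);
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    // Makes the same requests from a pool with one thread per call, so the waits overlap.
    static long pooledMillis(int calls) {
        ExecutorService pool = Executors.newFixedThreadPool(calls);
        long start = System.nanoTime();
        try {
            List<Future<String>> responses = new ArrayList<>();
            for (int i = 0; i < calls; i++) {
                int id = i;
                responses.add(pool.submit(() -> fakeApiCall(id)));
            }
            for (Future<String> response : responses) {
                response.get(); // wait for every call to complete
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        int calls = 5;
        System.out.println("sequential: " + sequentialMillis(calls) + "ms");
        System.out.println("pooled:     " + pooledMillis(calls) + "ms");
    }
}
```

With a pool smaller than the number of calls, the extra calls would simply queue, so the total time would grow in steps of roughly one call duration.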

Throughput vs. Latency

Throughput indicates how many tasks get done in a unit of time. In the domain of payments, for example, this can be demonstrated by measuring how many transactions per second a service can process.

Latency is the time a single task takes from start to finish. Going back to payments, it is the time a single payment takes from the point of initiation until it is settled and sent out to the creditor’s bank.
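To see both metrics side by side, the sketch below processes a batch of hypothetical payments (`processPayment` just sleeps for about 100 ms) on a thread pool. It reports throughput as payments per second and latency as the average time from submission to completion, which includes any time a payment spends waiting in the queue. Pool sizes and timings are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ThroughputVsLatency {
    // Hypothetical payment step: ~100 ms of waiting (e.g. on a downstream bank).
    static void processPayment() {
        try {
            Thread.sleep(100);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Processes `payments` payments on `threads` threads.
    // Returns {throughput in payments per second, average latency in ms}.
    static double[] measure(int payments, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        long batchStart = System.nanoTime();
        try {
            List<Future<Long>> latencies = new ArrayList<>();
            for (int i = 0; i < payments; i++) {
                long submittedAt = System.nanoTime();
                latencies.add(pool.submit(() -> {
                    processPayment();
                    return System.nanoTime() - submittedAt; // includes time spent queued
                }));
            }
            long totalLatencyNanos = 0;
            for (Future<Long> latency : latencies) {
                totalLatencyNanos += latency.get();
            }
            double elapsedSeconds = (System.nanoTime() - batchStart) / 1e9;
            double throughput = payments / elapsedSeconds;
            double avgLatencyMs = totalLatencyNanos / 1e6 / payments;
            return new double[] {throughput, avgLatencyMs};
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        for (int threads : new int[] {1, 8}) {
            double[] r = measure(8, threads);
            System.out.printf("%d thread(s): %.1f payments/s, average latency %.0f ms%n", threads, r[0], r[1]);
        }
    }
}
```

With one thread, payments queue up behind each other, so throughput stays low and the average latency grows with the batch size. With one thread per payment, throughput rises while each payment’s latency stays close to its own processing time: more threads helped throughput here, but no amount of threads brings a single payment below its ~100 ms.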

Further concepts often mentioned when speaking about multi-threading:

Deadlock: program execution hangs because the lock required by the first thread is held by the second thread, while the lock needed by the second thread is held by the first, so neither can proceed.

Liveness: a program’s ability to keep making progress and execute in a timely manner. A deadlocked system cannot exhibit liveness.

Livelock: threads are not blocked and keep reacting to each other, yet none of them makes real progress. Think of two people meeting in a corridor who keep stepping aside in the same direction, so neither can get past.
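The deadlock described above is easy to reproduce. In this sketch (the names are hypothetical), two threads take the same two locks in opposite order, and a `CountDownLatch` makes sure each holds its first lock before reaching for the second, so both block forever. The JVM’s `ThreadMXBean.findMonitorDeadlockedThreads()` can then report the cycle. The threads are daemons, so the stuck pair does not prevent the JVM from exiting.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDemo {
    // Takes `first`, waits until the other thread holds its first lock too, then wants `second`.
    static void lockBoth(Object first, Object second, CountDownLatch bothHoldFirstLock) {
        synchronized (first) {
            bothHoldFirstLock.countDown();
            try {
                bothHoldFirstLock.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
            synchronized (second) { // blocks forever: the other thread holds `second`
                System.out.println("unreachable once both threads hold their first lock");
            }
        }
    }

    // Starts two daemon threads that lock A and B in opposite order, then polls the JVM for
    // monitor deadlocks. Returns how many deadlocked threads it reports (0 if none within ~5 s).
    static int reproduceAndDetect() {
        Object lockA = new Object();
        Object lockB = new Object();
        CountDownLatch latch = new CountDownLatch(2);
        Thread t1 = new Thread(() -> lockBoth(lockA, lockB, latch), "worker-1");
        Thread t2 = new Thread(() -> lockBoth(lockB, lockA, latch), "worker-2");
        t1.setDaemon(true); // stuck daemon threads do not keep the JVM alive
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        for (int attempt = 0; attempt < 50; attempt++) {
            long[] ids = threads.findMonitorDeadlockedThreads(); // null if no deadlock
            if (ids != null) {
                return ids.length;
            }
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return 0;
    }

    public static void main(String[] args) {
        System.out.println("deadlocked threads reported: " + reproduceAndDetect());
    }
}
```

A common way to rule out this particular deadlock is to make every thread acquire the locks in the same order, for example always `lockA` before `lockB`, so the circular wait can never form.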

To summarize, understanding multi-threading in Java is vital for developing efficient applications. It involves grasping the concepts of programs, processes, threads, concurrency, and parallelism. While multi-threading enhances throughput and resource use, it also introduces debugging challenges. By evaluating software needs and hardware capabilities, developers can optimize both CPU-bound and I/O-bound programs, improving performance and reducing latency. This knowledge is crucial for writing thread-safe Java applications.
