NLP - Do you really need this step in your Pipeline ?

Siddhi Takawade

Siddhi Takawade

This is what I learned today...

Surprisingly or not surprisingly, to build an effective NLP model, we do not actually need each and every step that we supposedly know in NLP Pipeline.

As we know NLP comprises various preprocessing steps, it can be quite a task to streamline an efficient NLP pipeline aligned with your end goal. However, knowing when not to use a particular step in designing your NLP pipeline can be one of the most advantageous techniques to achieve desired results with higher accuracy.

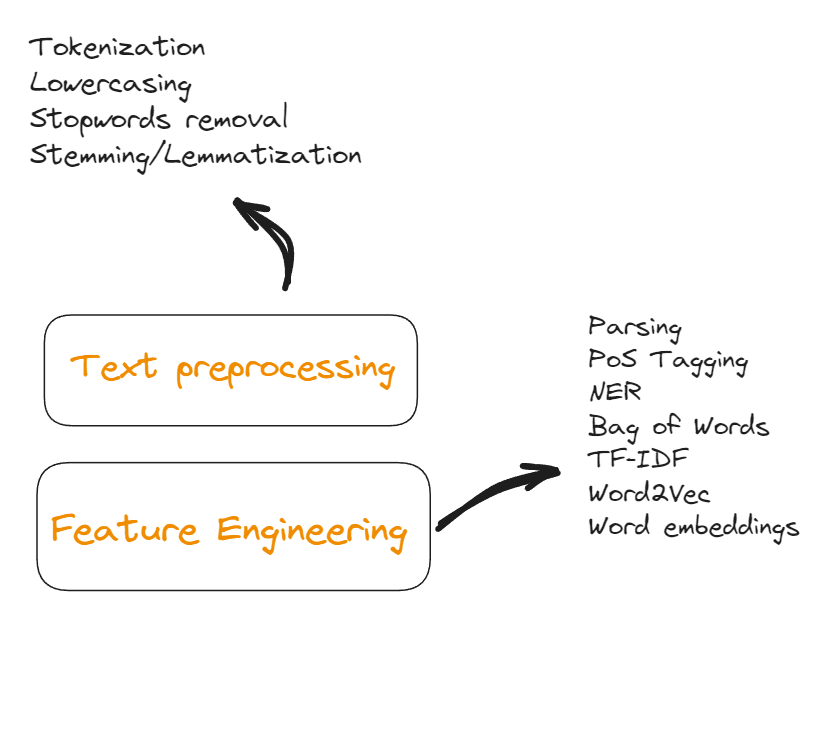

Let’s have a quick context about NLP pipeline first. So, before model building, you'll need to perform a set of NLP steps, like some as illustrated below:

So, below are examples where the following steps might not be needed:

Tokenization

In a specific type of language modeling, known as character-level language modeling, where the model predicts the next character based on the preceding sequence of characters, here you can feed the entire string into your model skipping the tokenization of the string.

Lowercasing

Lowecasing might be a common practice in NLP while there are cases where lowercasing might not just be unnecessary, but could also harm the intention of the use case. To get a clear idea, let's look at the examples - Hashtag classification on social media and Preserving brand identity in Marketing text.

In both these examples, Capitalized letters will hold great significance and lowercasing would just kill the intent.

Stemming/Lemmatization

Search engines often identify keywords within a search query to return relevant results. Especially for short queries, the focus is on capturing the exact terms users are searching for. Stemming or lemmatization could potentially alter the user's intended meaning. For instance, stemming "running" to "run" might miss the specific intent of searching for "running shoes."

Parsing

Topic Modeling for Short Texts - When dealing with short texts like social media posts or product reviews, dependency parsing might be overkill. Here, techniques like bag-of-words or TF-IDF (Term Frequency-Inverse Document Frequency) can be effective. These methods represent documents as collections of words, capturing the overall themes without needing to understand the grammatical relationships.

POS Tagging

Machine Translation models like Statiscal Machine Translation(uses word-based alignment techniques) and Neural Machine Translation(uses encoder-decoder techniques) can learn to capture syntactic and semantic relationships without relying on POS tags.

TF-IDF and word embeddings

TF-IDF is useful for tasks like document classification or keyword extraction, while word embeddings are better suited for tasks that require understanding the semantic relationships between words, such as machine translation or question answering.

In summary, above are just a few examples which show that there are possibilities where skipping certain steps would definitely enhance your NLP model’s performance. Well, of course! You will have to try out every known processing over your data in order to understand what performs best, which is how you understand which steps are not necessary and can leverage it to your benefit.

Subscribe to my newsletter

Read articles from Siddhi Takawade directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Siddhi Takawade

Siddhi Takawade

Hi you, I am an AI Enthusiast; Crafting AI Models, Traveling and savoring good music and literature!"