Getting Started with Llama 3.2: The Latest AI Model by Meta

Spheron Network

Llama 3.2, Meta's latest model, is finally here! Well, kind of. I’m excited about it, but there's a slight catch: it’s not fully available in Europe for anything beyond personal projects. Honestly, that can still work for you if you're only interested in using it for fun experiments and creative AI-driven content.

Let’s dive into what’s new with Llama 3.2!

The Pros, Cons, and the “Meh” Moments

It feels like a new AI model is released every other month. The tech world just keeps cranking them out, and keeping up is almost impossible; Llama 3.2 is just the latest in this rapid stream. But AI enthusiasts like us are always ready to download the newest version, set it up on our local machines, and imagine a life where we're totally self-sufficient, deep in thought, and exploring life’s great mysteries.

Fast-forward to now—Llama 3.2 is here, a multimodal juggernaut that claims to tackle all our problems. And yet, we're left wondering: How can I spend an entire afternoon figuring out a clever way to use it?

But on a more serious note, here’s what Meta’s newest release brings to the table:

What’s New in Llama 3.2?

Meta's Llama 3.2 introduces several improvements:

Smaller models: 1B and 3B parameter models optimized for lightweight tasks.

Mid-sized vision-language models: 11B and 90B parameter models designed for more complex tasks.

Efficient text-only models: These 1B and 3B models support 128K token contexts, ideal for mobile and edge device applications like summarization and instruction following.

Vision Models (11B and 90B): These are designed as drop-in replacements for their text-only counterparts and, by Meta's account, even outperform closed models like Claude 3 Haiku on image understanding tasks.

Customization & Fine-tuning: Models can be customized with tools like torchtune and deployed locally with torchchat.
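To get a feel for why the 1B and 3B models are pitched at mobile and edge devices while the 11B and 90B ones are not, here is a rough back-of-the-envelope sketch. It assumes the nominal parameter counts from the list above and some common bytes-per-parameter figures for different precisions (my assumption, not something Meta publishes in this form). It counts the weights only and ignores the KV cache, activations, and runtime overhead, so treat the numbers as a lower bound.

```python
# Nominal parameter counts from the list above, in billions.
MODEL_SIZES_B = {"1B": 1, "3B": 3, "11B": 11, "90B": 90}

# Assumed bytes per parameter for common precisions (illustrative values).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


for name, size in MODEL_SIZES_B.items():
    row = ", ".join(
        f"{precision}: {weight_memory_gb(size, nbytes):g} GB"
        for precision, nbytes in BYTES_PER_PARAM.items()
    )
    print(f"{name} -> {row}")
```

The takeaway is simply that weight size scales linearly with parameter count, which is why a quantized 3B model is a realistic fit for a laptop and the 90B model is a very different story.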

If that sounds like a lot, don't worry; I’m not diving too deep into the “Llama Stack Distributions.” Let’s leave that rabbit hole for another day!

How to Use Llama 3.2?

Okay, jokes aside, how do you start using this model? Here’s what you need to do:

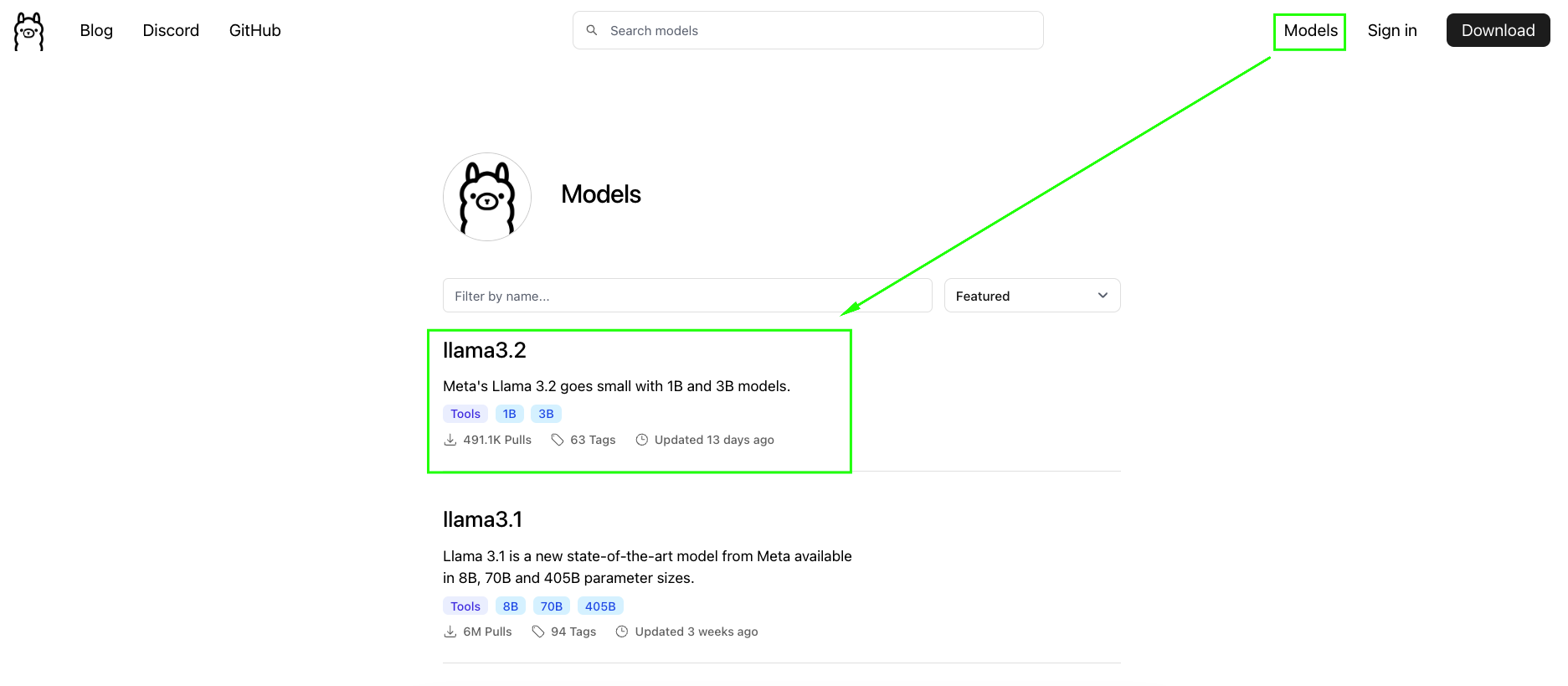

Head over to Hugging Face…or better yet, just go to ollama.ai.

Find Llama 3.2 in the models section.

Install the text-only 3B-parameter model.

You're good to go!

If you don’t have Ollama installed yet, what are you waiting for? Head over to their site and grab it (nope, this isn’t a sponsored shout-out, but if they’re open to it, I’m down!).

Once installed, fire up your terminal and enter the command to load Llama 3.2 (ollama run llama3.2). You'll be chatting with the model within a few minutes, ready to take on whatever random project strikes your fancy.
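If you'd rather talk to the model from a script than from the terminal, here is a minimal sketch using only the Python standard library. It assumes Ollama is running locally on its default port (11434) and that the model has been pulled under the tag llama3.2; the helper names build_request and ask are mine, purely for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is serving its local REST API on the default port.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumption: the text-only model was pulled under this tag.
MODEL_TAG = "llama3.2"


def build_request(prompt: str, model: str = MODEL_TAG) -> dict:
    """Build the JSON payload for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask(prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    data = json.dumps(build_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        # With "stream": False the server replies with one JSON object
        # whose "response" field holds the generated text.
        return json.loads(response.read())["response"]
```

With the server up, something like print(ask("Tell me a joke about llamas.")) should print the model's reply. Setting "stream" to False keeps the sketch short; a chat UI would more likely stream tokens as they arrive.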

Multimodal Capabilities: The Real Game Changer

The most exciting part of Llama 3.2 is its multimodal abilities. Remember those mid-sized vision-language models with 11B and 90B parameters I mentioned earlier? These models are designed to run locally and understand images, making them a big step forward in AI.

But here’s the kicker: when you try to use the model, you might hit a snag, because the vision models aren't on Ollama yet. For now, the best way to get your hands on them is by downloading them directly from Hugging Face (though I’ll be honest, I’m too lazy to do that myself and will wait for Ollama’s release).

If you’re not as lazy as I am, please check out meta-llama/Llama-3.2-90B-Vision on Hugging Face. Have fun, and let me know how it goes!
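For the non-lazy among you, here is a rough sketch of what that might look like with the Hugging Face transformers library. A few assumptions, loudly stated: you have a recent transformers release with Mllama support, plus torch, accelerate, and Pillow installed; you have accepted the license for the gated repository and logged in; and you have the (considerable) hardware a 90B model needs. The prompt prefix follows the pattern I recall from the base model's card, so double-check it there. The helper names build_prompt and describe_image are hypothetical.

```python
# Repository named in the article; access is gated on Hugging Face.
MODEL_ID = "meta-llama/Llama-3.2-90B-Vision"


def build_prompt(text: str) -> str:
    """Prefix the text with the image placeholder the base model is assumed to expect."""
    return "<|image|><|begin_of_text|>" + text


def describe_image(image_path: str, text: str, max_new_tokens: int = 30) -> str:
    """Load the model and let it continue `text`, conditioned on the image."""
    # Heavy imports live inside the function so this file imports without a GPU stack.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, MllamaForConditionalGeneration

    model = MllamaForConditionalGeneration.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16, device_map="auto"
    )
    processor = AutoProcessor.from_pretrained(MODEL_ID)

    image = Image.open(image_path)
    inputs = processor(
        images=image, text=build_prompt(text), return_tensors="pt"
    ).to(model.device)

    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.decode(output[0])
```

Swapping MODEL_ID for the 11B variant is probably the saner way to try this on a single machine.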

Wrapping It Up: Our Take on Llama 3.2

And that’s a wrap! Hopefully, you found some value in this guide (even if it was just entertainment). If you’re planning to use Llama 3.2 for more serious applications, like research or fine-tuning tasks, it’s worth diving into the benchmarks and performance results.

As for me, I’ll be here, using it to generate jokes for my next article!

FAQs About Llama 3.2

What is Llama 3.2?

- Llama 3.2 is Meta’s latest AI model, offering text-only and vision-language capabilities with parameter sizes ranging from 1B to 90B.

Can I use Llama 3.2 in Europe?

- Llama 3.2 is restricted in Europe for non-personal projects, but you can still use it for personal experiments and projects.

What are the main features of Llama 3.2?

- It includes smaller models optimized for mobile use, vision-language models that can understand images, and the ability to be fine-tuned with tools like torchtune.

How do I install Llama 3.2?

- Head to ollama.ai, download the software, and install the text-only 3B-parameter model.

What’s exciting about the 11B and 90B vision models?

- These models can run locally, understand images, and outperform some closed models in image tasks, making them great for visual AI projects.
