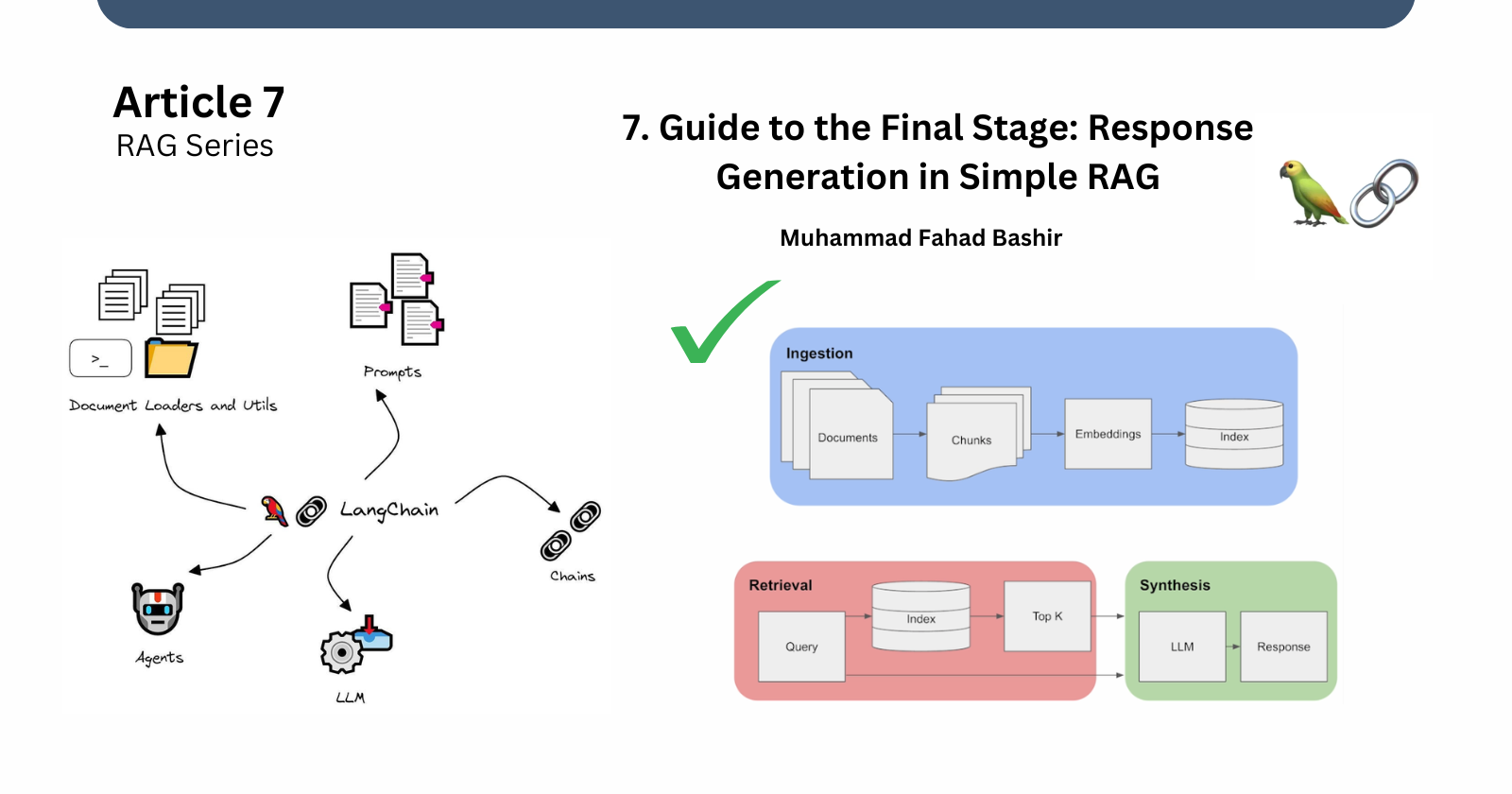

7. Guide to the Final Stage: Response Generation in Simple RAG

Muhammad Fahad Bashir

Muhammad Fahad Bashir

Welcome to the seventh and final article in our series on Retrieval-Augmented Generation (RAG) explaining the stpes. In the previous articles, we thoroughly explored each step of the RAG process, from understanding what RAG is to retrieving data using various techniques. Here is the link .

In this article, we will discuss about the crucial step of response generation, specifically focusing on large language model (LLM) selection and the implementation of chain methods. By the end, you'll have the knowledge to develop a fully functional chatbot application capable of answering queries using the power of LLMs.

Understanding Large Language Models (LLMs)

Large language models (LLMs) are advanced AI systems trained on extensive datasets to understand and generate human-like text. Some of the notable LLMs include:

ChatGPT from OpenAI

LLaMA from Meta

Gemini from Google

Choosing the Right LLM

When selecting an LLM, several factors must be considered, depending on the nature of your task and budget:

Context Window: The context window defines how much information the model can consider at once. A larger context window can enhance the model's ability to generate coherent responses.

Number of Tokens: Different LLMs have varying token limits, which can impact how much text can be processed and generated in a single query.

Business Use Case: Your choice of LLM should align with the specific task you need to address, such as text generation, sentiment analysis, or translation.

You can access LLMs through their official websites or via APIs. For instance, the Google Gemini API allows you to send queries and relevant context to the model for processing. For example here is a comparison according to June 2024. To know more read the source

Main Concept

in our previous article 6. Understanding Retrievers in detail -LangChain Retriever Methods we focused on retrieving data from our documents. Now, we will take the next crucial step: passing this retrieved data to a Large Language Model (LLM) to generate responses. This article will explore how queries, LLMs, and context converge to produce meaningful outputs.

Theme of Response Generation

The central theme of this revolves around the following components:

Query: The question posed by the user.

LLM: The Large Language Model chosen for processing the query.

Context: The data retrieved from our documents, which provides the necessary background for the LLM.

Final Response: The output generated by the LLM based on the input query and context.

By understanding how these elements interact, you will be better equipped to implement a functional chatbot application that leverages the capabilities of LLMs.

you can choose any llm model based on our preference. Here is the code for last step . we will be using Google Geimini as llm .

# Install Google Generative AI library

!pip install google-generativeai

# Import the library

import google.generativeai as genai

# Initialize the Google Gemini model

genai.configure(api_key="YOUR API KEY ") # Set your API key here

model = genai.GenerativeModel("gemini-1.5-flash")

# Now let's retrieve and combine the context (similar to your earlier steps)

retrieved_text = "\n".join([doc.page_content for doc in retrieved_docs])

# Create a question prompt using the context and your query

prompt = f"Based on the following information, please provide the answer to: {query}\n\n{retrieved_text}"

# Generate an answer using the model (use the generate_content method)

response = model.generate_content(prompt)

# Output the response generated by Google Gemini

print("LLM Response:", response.text)

All steps together

Below are the all steps combined together

# Required Imports

import google.generativeai as genai

from langchain.document_loaders import PyPDFLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.vectorstores import Chroma

from langchain_huggingface import HuggingFaceEmbeddings

# Initialize Google Gemini

genai.configure(api_key="Your api key ")

model = genai.GenerativeModel("gemini-1.5-flash")

# Load and prepare documents

loader = PyPDFLoader('/content/FYP Report PhysioFlex(25july).pdf') # Load your document

doc = loader.load()

# splitting doucment

text_splitter = RecursiveCharacterTextSplitter(chunk_size=300, chunk_overlap=50)

split_docs = text_splitter.split_documents(doc)

# making embedding

embed_model = HuggingFaceEmbeddings(model_name='BAAI/bge-small-en-v1.5')

vector_store = Chroma.from_documents(split_docs, embed_model)

# Function to handle conversational context

def conversational_rag(query, chat_history):

# Combine user query with previous chat history

combined_input = " ".join(chat_history + [query])

# Perform similarity search to retrieve relevant documents

retrieved_docs = vector_store.similarity_search(combined_input, k=4)

# Prepare context from retrieved documents

retrieved_text = "\n".join([doc.page_content for doc in retrieved_docs])

# Create a prompt for the LLM

prompt = f"Based on the following context, answer the question: {combined_input}\n\n{retrieved_text}"

# Generate the response

response = model.generate_content(prompt)

# Append the query and response to chat history

chat_history.append(query)

chat_history.append(response.text)

return response.text, chat_history

# Example usage

chat_history = []

user_query = "Ask the quetsion that you want"

response, chat_history = conversational_rag(user_query, chat_history)

print("LLM Response:", response)

Concept of chain

In the context of Retrieval-Augmented Generation (RAG), the concept of chains is vistal in organizing and streamlining the process of interacting with Large Language Models (LLMs). Chains refer to sequences of operations that involve multiple steps, allowing for efficient handling of tasks such as data retrieval, processing, and response generation.

Chains refer to sequences of calls made to an LLM, a tool, or a data preprocessing step. Different types of chains serve various purposes, including:

QA Chain: For answering questions directly.

QA Retriever Chain: To retrieve data and then generate an answer.

Conversational Chain: For maintaining context in ongoing dialogues.

QA Conversational Retriever Chain: A combination that retrieves data while managing conversational context.

LangChain Expression Language (LCEL)

The LangChain Expression Language (LCEL) is an integral part of LangChain, a framework designed to simplify the development of applications that leverage LLMs and other AI tools. LCEL provides a concise syntax for defining chains and workflows, making it easier for developers to specify how data flows through their applications. By utilizing LCEL, developers can create more complex and efficient chains, facilitating seamless interactions between the user inputs, LLMs, and other processing steps. This powerful feature allows for greater flexibility and scalability in building RAG applications, ultimately enhancing their ability to deliver accurate and contextually relevant responses.

Future Directions

As we look ahead in developing Retrieval-Augmented Generation (RAG) applications, here are some exciting directions to consider:

Experiment with Different Loaders: Try out various data loaders to implement different use cases. This will help improve how our applications work in diverse scenarios.

Incorporate User Interaction History: Utilize user history to make responses more personalized and relevant. This can lead to a better overall experience for users.

Explore Advanced Methods: Look into advanced techniques, such as fine-tuning models or using ensemble methods, to enhance the accuracy of the responses generated.

Add a Graphical User Interface (GUI): Implement a user-friendly interface using tools like Streamlit or Gradio. This will make it easier for users to interact with the application.

Deploy on Cloud Platforms: Consider deploying the application on cloud platforms for better scalability and accessibility, allowing more users to take advantage of RAG technology.

By following these directions, we can make our applications more powerful, user-friendly, and innovative in the AI and machine learning landscape.

Conclusion

Response generation is the pivotal step in the RAG process, integrating user queries, LLM capabilities, and contextual information to produce insightful answers. By effectively combining these components, you can create a robust chatbot application that utilizes the power of retrieval-augmented generation to meet users' needs.

Thank you for joining us on this journey through the RAG series. We hope this article has provided you with valuable insights into response generation and has equipped you with the knowledge to develop your own intelligent applications.

Subscribe to my newsletter

Read articles from Muhammad Fahad Bashir directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by