Building Modern Applications: Exploring Networks, Web Protocols, and Streaming

Ritik Singh

When building modern applications or systems, it's crucial to have a basic understanding of networking principles and related technologies. Whether you're developing a simple web app or a complex, real-time communication platform, knowing the basics of how data travels, how systems communicate, and how to ensure security and efficiency is essential. In this article, we are going to discuss the following topics:

Internet Service Provider and Internal Networking

Open Systems Interconnection (OSI) model

HTTP, TCP, UDP, WebSockets, and Video Transmission in the Modern Web like WebRTC and HTTP-DASH

Data Access and Interaction in Modern Applications: REST APIs, GraphQL, and gRPC

Head-of-Line Blocking: Understanding the Bottleneck in Data Transmission

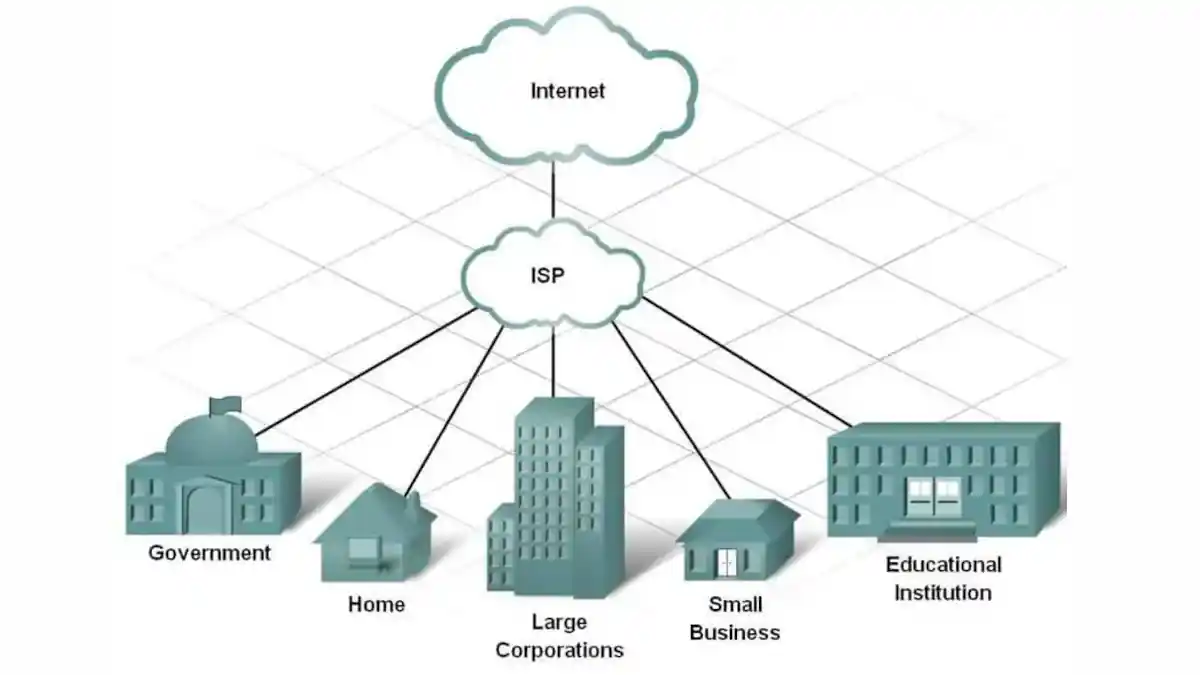

Internet Service Provider and Internal Networking

Whenever you type "google.com" into your browser, a complex process ensures that the data reaches your computer. Let’s break it down simply.

You have internet access, but how does it work? It's provided by an Internet Service Provider (ISP). ISPs manage your connection and carry your data traffic. They can also see which sites you connect to, even when you're in incognito mode, because incognito only stops your own browser from saving history. 😜

But how does your computer know where "google.com" is? This is where DNS (Domain Name System) comes into play. DNS is like the internet’s phonebook. When you type "google.com," your computer asks the DNS server to find the corresponding IP address for that domain.

Once the DNS server finds the IP address (a series of numbers), it sends it back to your computer. Now your device knows exactly where to send the request. Your ISP then routes the request across the internet to that address, and the response travels back to your own public IP address.
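To make the lookup step concrete, here is a minimal sketch that asks the operating system's resolver for the addresses behind a hostname. The helper name `resolve` is made up for this example, and "localhost" is used only because it resolves without an internet connection.

```python
import socket

def resolve(hostname: str) -> list[str]:
    """Return the unique IP addresses the system resolver reports for hostname."""
    infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})

if __name__ == "__main__":
    # "localhost" resolves on the machine itself; try "google.com" when online.
    print(resolve("localhost"))
```

Swapping in a real domain returns whatever addresses your DNS server hands back at that moment, so the result can differ between networks and over time.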

But what is an IP address? It’s a numeric identifier for a device on a network, written either as IPv4 (for example 192.168.1.10) or as the newer IPv6. At home, your router usually hands each device a private IP address, and your Network Interface Card (NIC) is the hardware that actually sends and receives the data for that address. This process enables you to access websites and services seamlessly.
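If you want to poke at addresses yourself, Python's standard `ipaddress` module can tell you the version of an address and whether it falls in a private range. The function name `describe` and the sample addresses are illustrative choices, not part of any standard.

```python
import ipaddress

def describe(address: str) -> str:
    """Summarize an address as 'IPv4, private', 'IPv6, public', and so on."""
    ip = ipaddress.ip_address(address)
    scope = "private" if ip.is_private else "public"
    return f"IPv{ip.version}, {scope}"

# A typical home-network address, then two well-known public DNS addresses.
for example in ("192.168.1.10", "8.8.8.8", "2001:4860:4860::8888"):
    print(example, "->", describe(example))
```

Here "public" simply means "not in a reserved private range"; it says nothing about whether the address is actually reachable.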

There is also the role of a MAC address, which we will dive deeper into in the next topic as we explain the functionality of each layer.

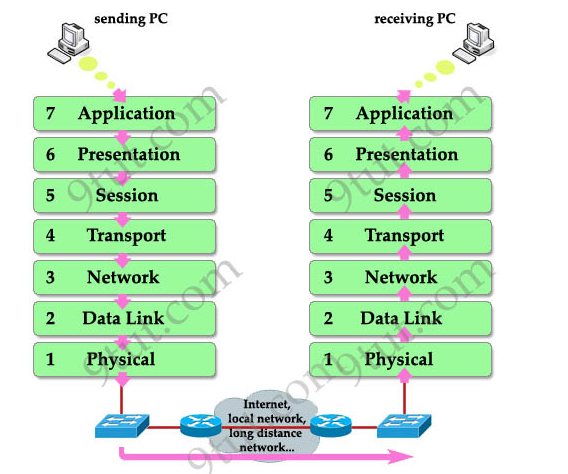

Open Systems Interconnection (OSI) model

The OSI model has seven layers, each playing a role in how data is sent and received over a network. To make it easy to understand, let’s use a fun analogy: imagine you're sending a physical letter to someone. Here’s how each layer works:

Application Layer (Layer 7): This is where you, the sender, decide to write a letter. You open your email or messaging app and start typing your message. In our analogy, this is like sitting down at your desk and writing your letter.

Presentation Layer (Layer 6): After writing the letter, you make sure it's in a format that the receiver will understand. It’s like writing in a language both you and your friend can read. The presentation layer takes care of any translations or encryption if needed, just like making sure your letter is readable.

Session Layer (Layer 5): Now, you want to have a conversation. This layer makes sure your communication (sending the letter) happens smoothly. It’s like deciding when to send your letter and when to expect a reply. The session layer manages the conversation between you and your friend.

Transport Layer (Layer 4): This layer is all about delivering the letter safely. It’s like putting the letter in an envelope and deciding whether to send it through regular mail or special delivery (reliable vs. fast). The transport layer ensures that the data arrives safely, whether it’s broken up into parts or sent all at once.

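It also adds a sequence number and a checksum to each segment: the sequence number lets the receiver put the pieces back in the order they were sent, and the checksum detects pieces that were damaged on the way.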

Network Layer (Layer 3): Now, the letter needs to find its way to the correct destination. This layer makes sure the mail gets to the right city (IP address) using the best path, so it attaches the source and destination IP addresses to each packet.

Data Link Layer (Layer 2): Once your letter is in the right city (IP address), the mailman needs to know the exact house to deliver it to. This is where the data link layer comes in, ensuring that the letter gets to the correct device on the local network (MAC address) and checking that it was not damaged on the way.

Physical Layer (Layer 1): Finally, your letter is physically delivered. This layer is all about the real-world, physical stuff: cables, Wi-Fi signals, and electrical signals. It’s like the mailman actually walking to your friend’s house and putting the letter in their mailbox.
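The transport-layer step in the list above (splitting a message, numbering the pieces, and checking them on arrival) can be sketched in a few lines. This is a toy model, not real TCP: the dictionary layout and the function names `segment` and `reassemble` are invented for the example, and CRC32 stands in for TCP's own, simpler checksum.

```python
import random
import zlib

def segment(message: bytes, size: int) -> list[dict]:
    """Split a message into numbered segments, each with a CRC32 checksum."""
    segments = []
    for seq, start in enumerate(range(0, len(message), size)):
        payload = message[start:start + size]
        segments.append({"seq": seq, "checksum": zlib.crc32(payload), "payload": payload})
    return segments

def reassemble(segments: list[dict]) -> bytes:
    """Verify every checksum, then rebuild the message in sequence order."""
    for s in segments:
        if zlib.crc32(s["payload"]) != s["checksum"]:
            raise ValueError(f"segment {s['seq']} is corrupted")
    ordered = sorted(segments, key=lambda s: s["seq"])
    return b"".join(s["payload"] for s in ordered)

letter = b"Dear friend, the OSI model is easier than it looks."
parts = segment(letter, size=8)
random.shuffle(parts)  # simulate segments arriving out of order
assert reassemble(parts) == letter
```

The sequence number fixes the ordering problem and the checksum catches damage; neither one can do the other's job.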

HTTP, TCP, UDP, WebSockets, WebRTC, and HTTP-DASH

When designing an application, it's important to understand the different communication protocols available and where they are best suited. Let's break down the common ones (HTTP, TCP, UDP, WebSockets, WebRTC, and HTTP-DASH) in simple language, with real-life examples to help you decide which one to use.

1. HTTP (HyperText Transfer Protocol)

What it is: HTTP is the most commonly used protocol on the web. It allows your browser to request data (like web pages) from a server and then display it.

Where to use it: Use HTTP for basic request-response communication, where the client (like a web browser) asks for data and the server answers. The server never speaks first. It’s great for websites, APIs, or any situation where you just need to fetch information.

Real-life example: Imagine visiting an online store to browse products. When you click on a product, the browser sends an HTTP request to the server, and the server sends back the product page. This request-response pattern works perfectly for displaying web content.
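Here is a small, self-contained sketch of that request-response cycle using only Python's standard library. The handler class `ProductHandler`, the helper `fetch_once`, and the `/products/42` path are made up for illustration; a real store would sit behind a proper web framework.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

class ProductHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # The server only ever answers; it never contacts the client first.
        body = f"<h1>Product page for {self.path}</h1>".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example output quiet

def fetch_once(path: str) -> tuple[int, str]:
    """Start a throwaway local server, send one GET request, return (status, body)."""
    server = HTTPServer(("127.0.0.1", 0), ProductHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", path)
        response = conn.getresponse()
        result = (response.status, response.read().decode())
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()

print(fetch_once("/products/42"))
```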

2. TCP (Transmission Control Protocol)

What it is: TCP ensures reliable, ordered, and error-checked delivery of data between devices. It's a connection-oriented protocol: the two sides set up a connection first, and TCP retransmits anything that gets lost so the data arrives complete and in the order it was sent.

Where to use it: Use TCP for applications where accuracy is more important than speed, like sending emails, downloading files, or loading web pages.

Real-life example: Think about sending an email. You want to make sure the entire message is received by the recipient, with no missing or mixed-up words. TCP ensures the data arrives correctly, even if it takes a bit longer.
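A quick way to see TCP's "connect first, then exchange a reliable byte stream" behavior is a local echo test. This is a sketch under simple assumptions: one client, a short message, and helper names (`run_echo_server`, `tcp_round_trip`) chosen for this example.

```python
import socket
import threading

def run_echo_server(server: socket.socket) -> None:
    """Accept one connection and send back every byte received."""
    conn, _ = server.accept()
    with conn:
        while chunk := conn.recv(1024):
            conn.sendall(chunk)

def tcp_round_trip(message: bytes) -> bytes:
    with socket.create_server(("127.0.0.1", 0)) as server:  # port 0 = any free port
        port = server.getsockname()[1]
        threading.Thread(target=run_echo_server, args=(server,), daemon=True).start()
        # create_connection performs the TCP handshake before any data is sent.
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # tell the server no more data is coming
            received = b""
            while chunk := client.recv(1024):
                received += chunk
    return received

print(tcp_round_trip(b"hello over TCP"))
```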

3. UDP (User Datagram Protocol)

What it is: UDP is a faster, connectionless protocol that doesn’t guarantee the order or delivery of packets. It’s useful when speed is more important than reliability.

Where to use it: Use UDP when you need real-time communication and can tolerate some data loss. It's often used for live streaming, online gaming, or voice/video calls where slight delays or missing data won’t ruin the experience.

Real-life example: Think of a live sports broadcast. You’d prefer watching the game in real time, even if there’s a small glitch, rather than waiting for every frame to load perfectly.
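For contrast with TCP, the sketch below sends a single UDP datagram on the local machine. There is no handshake and no acknowledgement. The function name `udp_send_and_receive` is an invention for this example, and the 2-second timeout is there because, on a real network, the datagram might simply never arrive.

```python
import socket

def udp_send_and_receive(message: bytes) -> bytes:
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))  # port 0 = any free port
        receiver.settimeout(2)           # UDP gives no delivery guarantee
        # No connect() and no handshake: just address the datagram and send it.
        sender.sendto(message, receiver.getsockname())
        data, _ = receiver.recvfrom(2048)
        return data

print(udp_send_and_receive(b"frame 1"))
```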

4. WebSockets

What it is: WebSockets allow for full-duplex communication, meaning data can flow both ways between the client and server in real-time. This is useful for applications that need constant interaction between the two.

Where to use it: Use WebSockets for chat applications, live notifications, or real-time collaboration tools, where the client and server need to exchange data continuously.

Real-life example: Think of a live chat feature on a website. As soon as you send a message, it appears instantly on the other user’s screen without having to refresh the page. That’s WebSockets at work, keeping the connection open so messages can go back and forth in real time.
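A WebSocket connection starts life as an ordinary HTTP request that asks to be upgraded. As part of that handshake, the server proves it understood the request by hashing the client's `Sec-WebSocket-Key` together with a fixed string defined in RFC 6455. The sketch below shows only that one step; the function name `websocket_accept` is made up, and a real application would normally leave this to a WebSocket library.

```python
import base64
import hashlib

# Fixed value defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept header value for a client key."""
    digest = hashlib.sha1((sec_websocket_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")

# Sample key taken from RFC 6455.
print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))
```

Once the handshake succeeds, the same TCP connection stays open and either side can send messages at any time.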

5. WebRTC (Web Real-Time Communication)

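What it is: WebRTC is a technology that allows for peer-to-peer communication, enabling direct media exchange (video, audio) between browsers. A small signaling server is still needed to help the two browsers find each other, and a relay server is used as a fallback when a direct connection isn't possible.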

Where to use it: Use WebRTC for real-time video and audio calls, like video conferencing, where low-latency, direct communication between users is essential.

Real-life example: Imagine you’re on a one-to-one video call in your browser. The video and audio can be exchanged directly between your browser and theirs, in real time, thanks to WebRTC.

6. HTTP-DASH (Dynamic Adaptive Streaming over HTTP)

What it is: HTTP-DASH is a protocol used for adaptive streaming of media content over the web. It breaks the video or audio into small segments and adjusts the quality of these segments based on the user’s internet connection in real time.

Where to use it: Use HTTP-DASH for video-on-demand platforms or live streaming services where smooth, uninterrupted playback is necessary, regardless of fluctuating internet speeds.

Real-life example: Think of Netflix. When you start watching a show, it might play in high quality. But if your internet connection drops, the video automatically adjusts to a lower resolution to avoid buffering, providing a seamless viewing experience.
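The "adjusts the quality" part comes down to a decision the player makes before fetching each segment. A minimal sketch of that decision is below. The bitrate ladder, the 0.8 safety factor, and the function name `pick_rendition` are all assumptions for illustration; real players use more elaborate rules that also look at how full the buffer is.

```python
# Hypothetical bitrate ladder: (label, bandwidth needed in kbit/s), lowest first.
RENDITIONS = [("240p", 400), ("480p", 1000), ("720p", 2500), ("1080p", 5000)]

def pick_rendition(measured_kbps: float, safety: float = 0.8) -> str:
    """Pick the best quality that fits within a safety margin of the measured speed."""
    budget = measured_kbps * safety
    best = RENDITIONS[0][0]  # always fall back to the lowest quality
    for label, required in RENDITIONS:
        if required <= budget:
            best = label
    return best

for throughput in (8000, 3500, 900, 200):
    print(throughput, "kbit/s ->", pick_rendition(throughput))
```

Because every segment is a plain HTTP download, the player can switch quality at each segment boundary without restarting the video.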

Choosing the Right Protocol

Simple client-server communication: Use HTTP for tasks like browsing websites or interacting with APIs, where only the client needs to request data.

Reliable communication: Use TCP when sending important information, like emails or files, where accuracy matters.

Fast, real-time communication: Use UDP for things like live video streams or online gaming, where you need speed and can tolerate minor data loss.

Two-way, real-time interaction: Use WebSockets for real-time applications like live chats, where both sides need to send and receive data continuously.

Peer-to-peer media sharing: Use WebRTC for video and voice calls, where direct communication between devices is required.

Adaptive media streaming: Use HTTP-DASH for video-on-demand services or live streaming platforms like Netflix or YouTube. This protocol ensures smooth video playback by adjusting the video quality based on the viewer’s internet speed in real time.

Data Access and Interaction in Modern Applications: REST APIs, GraphQL, and gRPC

In today's digital world, applications rely on efficient and reliable ways to access and interact with data. Whether you're building a simple web app or a complex microservice architecture, understanding how your application communicates with servers and fetches data is critical. Gone are the days when traditional methods were enough to handle complex demands. Now, the choice of API—whether REST, GraphQL, or gRPC—can greatly influence your app's performance and scalability.

But how do you choose the right protocol for your project? The answer depends on the complexity of your data, the need for real-time updates, and how critical speed and efficiency are to your system. In this article, we’ll dive deep into the three most popular approaches: REST APIs, GraphQL, and gRPC, and explain where and when each shines with real-world examples.

REST APIs (Representational State Transfer)

REST has been the standard for building web services for a long time. It relies on the HTTP protocol and is known for its simplicity and wide adoption.

How It Works: REST APIs operate through stateless requests, where each interaction between client and server is isolated. The server handles CRUD (Create, Read, Update, Delete) operations via specific HTTP methods like `GET`, `POST`, `PUT`, and `DELETE`.

Where to Use It: REST is best for simpler applications where a clear, resource-based approach to data access is needed. This might include websites, e-commerce platforms, or services that need consistent, reliable communication between the frontend and backend.

Real-life Example: Think of a blogging platform. When a user requests to view all posts, the client sends a `GET` request to the `/posts` endpoint. The server responds with all the blog posts in a standardized format, typically JSON.
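To show how HTTP methods map onto CRUD operations for a resource, here is a toy request handler that keeps posts in memory. It does no networking at all; the function `handle`, the `posts` dictionary, and the exact status codes chosen are assumptions for the sake of the example.

```python
import json

# In-memory "database" of blog posts, keyed by id.
posts = {1: {"id": 1, "title": "Hello, world"}}

def handle(method: str, path: str, body: str = "") -> tuple[int, str]:
    """Map an HTTP method and path onto a CRUD operation; return (status, JSON text)."""
    parts = path.strip("/").split("/")
    if parts[0] != "posts":
        return 404, json.dumps({"error": "not found"})
    if len(parts) == 1:                      # the collection: /posts
        if method == "GET":                  # Read all
            return 200, json.dumps(list(posts.values()))
        if method == "POST":                 # Create
            new_id = max(posts, default=0) + 1
            posts[new_id] = {**json.loads(body), "id": new_id}
            return 201, json.dumps(posts[new_id])
    elif len(parts) == 2 and parts[1].isdigit():   # one item: /posts/<id>
        post_id = int(parts[1])
        if post_id not in posts:
            return 404, json.dumps({"error": "not found"})
        if method == "GET":                  # Read one
            return 200, json.dumps(posts[post_id])
        if method == "PUT":                  # Update (replace)
            posts[post_id] = {**json.loads(body), "id": post_id}
            return 200, json.dumps(posts[post_id])
        if method == "DELETE":               # Delete
            del posts[post_id]
            return 204, ""
    return 405, json.dumps({"error": "method not allowed"})

print(handle("GET", "/posts"))
```

Each call carries everything the handler needs, which is what "stateless" means here: the handler remembers the data, but not the client.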

GraphQL (Graph Query Language)

While REST has its benefits, it can sometimes be inefficient, particularly when multiple API calls are needed to retrieve related data. GraphQL solves this problem by allowing clients to request exactly the data they need, no more, no less.

How It Works: GraphQL enables clients to specify the structure of the data they need in a single query. Instead of multiple endpoints, you have just one GraphQL endpoint that can return any data structure requested.

Where to Use It: GraphQL is perfect for applications with complex data models or when you need to avoid over-fetching or under-fetching of data. It's great for mobile apps, dashboards, and social media platforms, where different views require different data sets.

Real-life Example: In a social media app, when you want to fetch a user's profile, posts, and followers in one request, a GraphQL query can retrieve exactly those fields without multiple round trips to the server. This optimizes both the client’s experience and the server load.

Example Query:

    query {
      user(id: "123") {
        name
        email
        posts {
          title
        }
      }
    }
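The idea behind the example query (the client names the fields, the server returns only those) can be imitated in plain Python. This is not a GraphQL implementation, just a sketch of field selection: the `USER` record, the `select` function, and the dictionary form of the query are all made up for illustration.

```python
# A full user record as the server might store it (sample data).
USER = {
    "id": "123",
    "name": "Asha",
    "email": "asha@example.com",
    "age": 30,
    "posts": [{"title": "First post", "body": "Full text of the post", "likes": 3}],
}

def select(data, selection: dict):
    """Keep only the requested fields; a non-empty dict describes a sub-selection."""
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field, sub in selection.items():
        value = data[field]
        result[field] = select(value, sub) if sub else value
    return result

# Same shape as the example query: name, email, and each post's title.
query = {"name": {}, "email": {}, "posts": {"title": {}}}
print(select(USER, query))
```

Fields such as `age`, `body`, and `likes` never leave the server, which is the over-fetching that GraphQL is designed to avoid.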

gRPC (gRPC Remote Procedure Calls)

While REST and GraphQL focus on fetching data efficiently, gRPC takes it a step further by focusing on performance. Built by Google, gRPC enables fast, low-latency communication, which is particularly useful in microservice architectures.

How It Works: gRPC uses Protocol Buffers (Protobuf), a more compact binary format than JSON, allowing for smaller message sizes and faster transmission. gRPC runs over HTTP/2, supports multiple programming languages, and offers bidirectional streaming, so client and server can both send a sequence of messages on the same call.

Where to Use It: gRPC excels in systems where speed and scalability are crucial, such as in real-time data processing or microservices communicating with each other in large-scale backend systems.

Real-life Example: In a ride-sharing app, gRPC can help communicate between services like driver tracking, navigation, and fare calculation in real time, ensuring that the information is exchanged quickly and efficiently.
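To get a feel for why a binary format produces smaller messages than JSON, the sketch below encodes the same driver-location update both ways. Important caveat: this uses Python's `struct` module as a stand-in and is not Protobuf's actual wire format. The field names and values are invented for the example.

```python
import json
import struct

# A hypothetical driver-location update.
update = {"driver_id": 48213, "lat": 12.971599, "lon": 77.594566}

# Text encoding: field names and digits are all sent as characters.
as_json = json.dumps(update).encode("utf-8")

# Binary encoding: "<Idd" = little-endian unsigned 32-bit int + two 64-bit floats.
# Both sides must already agree on the field order, much like a shared schema.
as_binary = struct.pack("<Idd", update["driver_id"], update["lat"], update["lon"])

driver_id, lat, lon = struct.unpack("<Idd", as_binary)
print(len(as_json), "bytes as JSON vs", len(as_binary), "bytes packed")
```

The saving comes from not repeating field names in every message and from storing numbers in a fixed binary form instead of as text.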

Choosing the Right Protocol

The choice between REST, GraphQL, and gRPC depends on your application’s needs:

REST: Use for simple, resource-based communication in traditional web apps.

GraphQL: Best for flexible data fetching, especially when dealing with complex data models or optimizing for mobile apps and dashboards.

gRPC: Ideal for performance-critical systems like real-time services or microservices where speed and low latency are crucial.

Each protocol has its strengths, and by understanding their differences, you can ensure that your application performs optimally based on the requirements of your project.

Head-of-Line Blocking: Understanding the Bottleneck in Data Transmission

In the world of network communication, speed and efficiency are critical. But sometimes, even the most efficient systems can encounter delays due to an issue known as Head-of-Line (HOL) blocking. This phenomenon can cause significant bottlenecks in data transmission, affecting overall application performance and user experience. Whether you're working with protocols like TCP, HTTP/2, or using queuing systems, HOL blocking can slow things down.

But what exactly is head-of-line blocking, and how does it impact your system? In this article, we'll break down the concept of HOL blocking, explore where it commonly occurs, and discuss strategies to overcome this bottleneck.

What is Head-of-Line Blocking?

Head-of-Line blocking occurs when the first packet or message in a queue is delayed, causing all subsequent packets or messages to be held up behind it. This means that even though the rest of the data is ready to be processed, nothing can move forward until the first item is resolved. It’s like being in a traffic jam: no matter how clear the road is ahead, you're stuck until the car at the front moves.

Where Does HOL Blocking Happen?

HOL blocking can occur in various scenarios, typically where data is processed in a sequential order:

TCP Protocol: In TCP (Transmission Control Protocol), data is sent as a stream of packets. If one packet is lost or delayed, the entire stream is held up until the missing packet is retransmitted. This creates a delay even if all the other packets have arrived.

HTTP/2 Multiplexing: Although HTTP/2 introduced multiplexing to allow multiple requests to be sent over a single connection, it can still suffer from HOL blocking at the TCP level. If one TCP packet is lost, every request on that connection must wait for the retransmission, even requests whose own data has already arrived.

Queuing Systems: In systems where data or tasks are processed in a queue, HOL blocking can occur if the first task is delayed or stuck, causing a backlog for the rest of the queue.
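The effect is easy to see with a tiny simulation. Assume each packet has an arrival time at the receiver and that, as with TCP, packets must be handed to the application strictly in order. The function name `in_order_delivery` and the timings are invented for this example.

```python
def in_order_delivery(arrival_ms: list[int]) -> list[int]:
    """arrival_ms[i] is when packet i reaches the receiver.

    Returns the time each packet can be handed to the application when
    packets must be delivered strictly in sequence order.
    """
    delivered = []
    ready_at = 0
    for arrival in arrival_ms:
        # A packet cannot be delivered before every earlier packet has been.
        ready_at = max(ready_at, arrival)
        delivered.append(ready_at)
    return delivered

# Packet 1 was lost and had to be retransmitted, so it arrives at 250 ms.
arrivals = [10, 250, 30, 40, 50]
print(in_order_delivery(arrivals))  # [10, 250, 250, 250, 250]
```

Packets 2, 3, and 4 arrived within 50 ms, yet the application sees none of them until 250 ms because packet 1 was still missing.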

Real-Life Example

Imagine you're at a fast-food drive-thru. If the person at the front of the line is taking a long time to order, every car behind them is forced to wait, even if their orders are simple and could be processed quickly. This is a real-world analogy of HOL blocking: the first task (order) delays all subsequent tasks, even if they're ready to go.

How Does HOL Blocking Impact Performance?

The impact of HOL blocking is most noticeable in high-performance applications where real-time communication is critical, such as video streaming, online gaming, or financial trading platforms. It can lead to:

Increased latency (delay in data transmission)

Decreased throughput (reduction in the amount of data successfully transmitted)

Poor user experience due to slow or interrupted services

Strategies to Mitigate HOL Blocking

To minimize or avoid HOL blocking, different approaches can be taken depending on the protocol or system in use:

UDP for Real-Time Communication: Unlike TCP, UDP (User Datagram Protocol) neither retransmits lost packets nor enforces their order, so one missing packet never holds up the ones behind it. For applications like online gaming or voice-over-IP (VoIP), UDP is preferred because it allows data to be transmitted without waiting for lost packets to be resent.

HTTP/3 (QUIC Protocol): HTTP/3, built on the QUIC protocol (originally short for Quick UDP Internet Connections), addresses HOL blocking at the transport layer. QUIC runs over UDP and uses multiple independent streams to ensure that a delay in one stream doesn’t block others, solving the problem seen in HTTP/2 over TCP.

Parallelism in Queuing Systems: In systems that process tasks in a queue, using parallel queues or prioritization can help reduce the impact of HOL blocking. By breaking tasks into independent streams, high-priority or quicker tasks can bypass longer or delayed ones.
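The difference that independent streams make can be sketched with a small simulation. Each packet belongs to a stream and has an arrival time. In one mode, ordering is enforced across the whole connection (the TCP-like case); in the other, only inside each stream (the QUIC-like case). The function `delivery_times`, the stream names, and the timings are assumptions for illustration.

```python
from collections import defaultdict

def delivery_times(packets, per_stream: bool):
    """packets: list of (stream_id, arrival_ms) in the order they were sent.

    per_stream=False: one ordered connection shared by all streams (TCP-like).
    per_stream=True: ordering enforced only inside each stream (QUIC-like).
    """
    ready_at = defaultdict(int)
    result = []
    for stream_id, arrival in packets:
        key = stream_id if per_stream else "connection"
        # A packet waits only for earlier packets that share its ordering key.
        ready_at[key] = max(ready_at[key], arrival)
        result.append((stream_id, ready_at[key]))
    return result

# Stream A loses its second packet (arrives late at 300 ms); stream B is healthy.
packets = [("A", 10), ("A", 300), ("B", 20), ("B", 30)]
print(delivery_times(packets, per_stream=False))
print(delivery_times(packets, per_stream=True))
```

With shared ordering, stream B is stuck until 300 ms. With per-stream ordering, B's packets are delivered at 20 ms and 30 ms, and only stream A pays for its own loss.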
