The Limits of Correlation: Why Today’s AI Falls Short of True Understanding

Gerard Sans

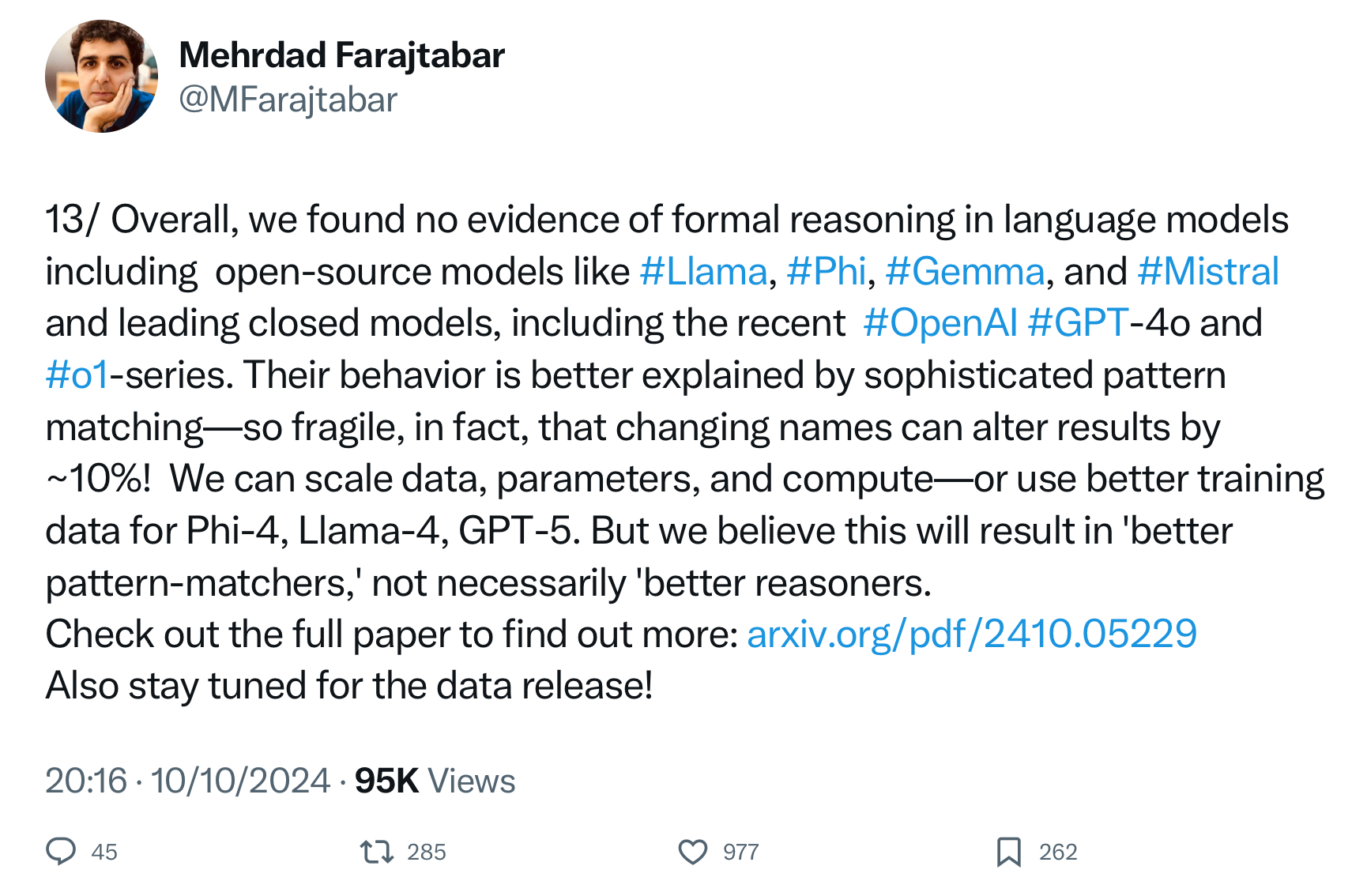

Artificial Intelligence, particularly in the form of large language models (LLMs), has made remarkable strides in recent years. However, there's a fundamental limitation to these systems that's often overlooked: the gap between correlation and causation. This distinction is crucial for understanding why AI, despite its impressive capabilities, still falls short of human-like reasoning and comprehension.

Correlation vs. Causation: The Heart of the Matter

Current AI systems, including state-of-the-art language models, are built on a foundation of statistical correlations. They excel at recognizing patterns in data and can generate responses that seem remarkably human-like. However, they lack the ability to understand causal relationships or engage in logical reasoning.
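Here is a minimal sketch of the distinction. It uses a classic textbook illustration rather than anything from this article: in a toy structural causal model, hot weather drives both ice-cream sales and drownings. The variables, the coefficients and the `simulate`/`pearson` helpers are all hypothetical. A system that only observes data sees a strong correlation between sales and drownings. Only an intervention (written do(·) in causal-inference notation) reveals that changing sales does nothing to drownings.

```python
import random

def simulate(n, do_ice_cream=None, seed=0):
    """Toy structural causal model with hypothetical variables:
    temperature -> ice-cream sales, and temperature -> drownings.
    Ice-cream sales have NO causal effect on drownings."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        temp = rng.gauss(25, 5)
        if do_ice_cream is None:
            ice = 2.0 * temp + rng.gauss(0, 2)  # observational: sales follow temperature
        else:
            ice = do_ice_cream  # do(ice = value): cuts the temperature -> sales link
        drown = 0.5 * temp + rng.gauss(0, 1)  # drownings depend on temperature only
        rows.append((temp, ice, drown))
    return rows

def mean(xs):
    return sum(xs) / len(xs)

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

# Observing: sales and drownings are strongly correlated (roughly 0.9 in this setup).
obs = simulate(5000)
print(pearson([ice for _, ice, _ in obs], [d for _, _, d in obs]))

# Intervening: forcing sales low or high leaves drownings unchanged.
low = simulate(5000, do_ice_cream=10.0)
high = simulate(5000, do_ice_cream=90.0)
print(mean([d for _, _, d in low]), mean([d for _, _, d in high]))
```

A purely correlational learner only ever sees the first printed number; the second comparison requires a model of what causes what.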

The Human Approach: Logic and Reasoning

As humans, we navigate the world using logic and reasoning based on causation, not just correlation. We employ various forms of reasoning:

Deduction: Drawing specific conclusions from general premises

Induction: Inferring general rules from specific examples

Abduction: Forming the most likely explanation for an observation

This allows us to understand the "why" behind phenomena and make logical inferences beyond surface-level patterns.
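The three forms of reasoning can be sketched in a few lines of Python. This is an illustrative toy, not a model of human cognition: the function names, the rule format and the prior/likelihood numbers are hypothetical. Deduction is written as simple forward chaining, induction as naive generalisation from every observed instance, and abduction as choosing the hypothesis with the largest prior times likelihood (which is proportional to its posterior probability).

```python
def deduce(rules, facts):
    """Deduction: apply general rules to specific facts until nothing new follows.
    rules: list of (premise, conclusion), read as 'anything that is premise is conclusion'.
    facts: set of (entity, property)."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for entity, prop in list(derived):
                if prop == premise and (entity, conclusion) not in derived:
                    derived.add((entity, conclusion))
                    changed = True
    return derived

def induce(observations):
    """Induction: propose 'all <kind> are <property>' when every observed
    instance of that kind shares one property. Plausible, never guaranteed:
    a single black swan would overturn 'all swans are white'."""
    by_kind = {}
    for kind, prop in observations:
        by_kind.setdefault(kind, set()).add(prop)
    return {kind: next(iter(props)) for kind, props in by_kind.items() if len(props) == 1}

def abduce(hypotheses):
    """Abduction: pick the hypothesis that best explains an observation.
    hypotheses: dict name -> (prior, likelihood of the observation under it)."""
    return max(hypotheses, key=lambda h: hypotheses[h][0] * hypotheses[h][1])

facts = deduce([("human", "mortal")], {("Socrates", "human")})
print(("Socrates", "mortal") in facts)  # True
print(induce([("swan", "white"), ("swan", "white"), ("raven", "black")]))
# Observation: the grass is wet. 0.3 * 0.9 = 0.27 beats 0.1 * 0.8 = 0.08.
print(abduce({"rain": (0.3, 0.9), "sprinkler": (0.1, 0.8)}))  # rain
```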

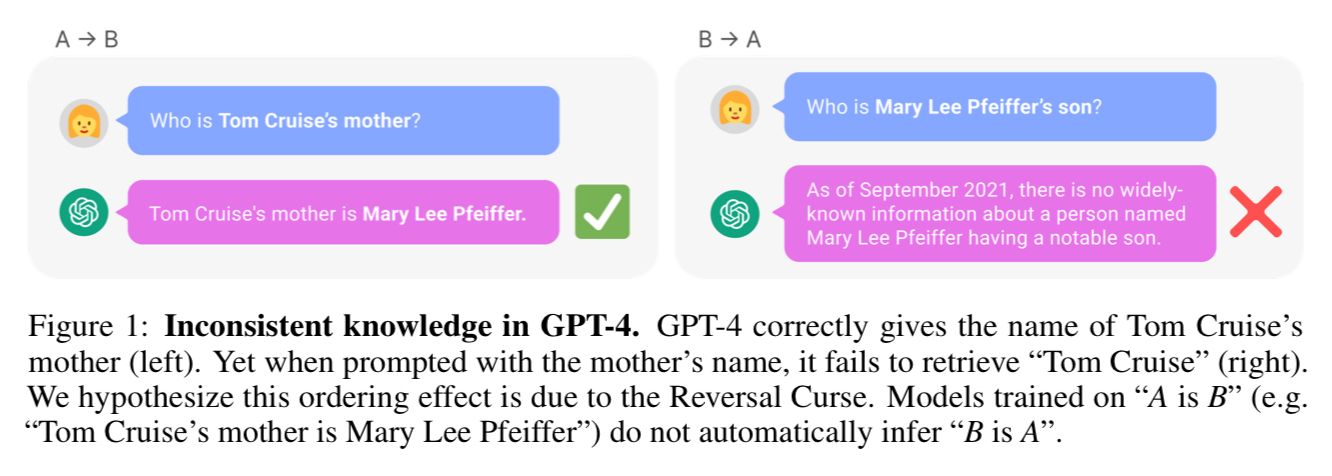

The Mother-Son Relationship: A Revealing Example

To illustrate the limitations of correlation-based AI, let's consider a simple example involving a celebrity and their family relationships.

The AI's Perspective

When asked about a famous actor like Robert Downey Jr., an AI model can usually provide accurate information. This is because the actor's name frequently appears in the training data, creating strong correlations with various facts about his life and career.

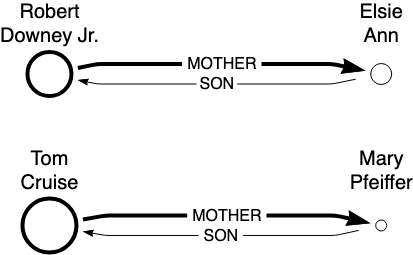

However, if we approach the same information from a different angle, for example by asking who the son of Elsie Ann (Robert Downey Jr.'s mother) is, the AI's limitations become apparent.

The Correlation Gap

The mother of a celebrity is rarely the central focus of articles or social media posts. As a result, there are far fewer data points connecting the mother's name directly to the actor. This creates a "correlation gap" that the AI struggles to bridge.
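A toy sketch of the correlation gap follows. It assumes a drastically simplified "model" that only stores associations in the direction they were written. The corpus, the counts and the `train`/`complete` helpers are hypothetical, and real LLMs are far more complex. The sketch takes the extreme case where the reverse phrasing never appears at all:

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the fact is always phrased from the celebrity's side.
corpus = [("Robert Downey Jr.", "mother", "Elsie Ann")] * 50

def train(triples):
    """'Learn' directional associations: (subject, relation) -> Counter of objects."""
    assoc = defaultdict(Counter)
    for subj, rel, obj in triples:
        assoc[(subj, rel)][obj] += 1
    return assoc

def complete(assoc, subject, relation):
    """Return the most frequently associated object, or None if the pair was never seen."""
    seen = assoc.get((subject, relation))
    return seen.most_common(1)[0][0] if seen else None

model = train(corpus)
print(complete(model, "Robert Downey Jr.", "mother"))  # Elsie Ann
print(complete(model, "Elsie Ann", "son"))  # None: no association in this direction
```

The same fact is present in the data, yet it is only reachable from one end, because nothing in this lookup knows that "mother" and "son" describe one relationship.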

An AI doesn't truly understand the concept of a mother-son relationship or how to navigate family trees logically. It can't reason that if A is the mother of B, then B must be the child of A (her son, if B is male). Instead, it relies solely on the patterns and correlations present in its training data.
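By contrast, here is a minimal sketch of how a symbolic knowledge base can make the inverse relation explicit. The relation names (`mother_of`, `child_of`), the inverse table and the helper functions are hypothetical. The point is that one stored fact answers the question from either end, and "son" needs just one more stored fact: the child's sex.

```python
# Hypothetical schema: ("mother_of", A, B) means A is the mother of B.
INVERSES = {"mother_of": "child_of", "father_of": "child_of"}

def infer(facts):
    """Add the inverse of every stored relation so a fact can be queried from either end."""
    derived = set(facts)
    for rel, a, b in facts:
        if rel in INVERSES:
            derived.add((INVERSES[rel], b, a))  # ("child_of", child, parent)
    return derived

def children_of(facts, parent):
    return sorted(child for rel, child, p in infer(facts) if rel == "child_of" and p == parent)

def sons_of(facts, male, parent):
    """Sons are the children who are also recorded as male."""
    return [c for c in children_of(facts, parent) if c in male]

facts = {("mother_of", "Elsie Ann", "Robert Downey Jr.")}
print(sons_of(facts, {"Robert Downey Jr."}, "Elsie Ann"))  # ['Robert Downey Jr.']
```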

The diagram below illustrates the AI's perspective on celebrity-mother relationships. Nodes represent individuals and arrows show family connections. Celebrities, who appear frequently in AI training data, are depicted as larger, thicker nodes; their mothers appear as smaller, thinner nodes, highlighting the "correlation gap" in the AI's knowledge of less-mentioned individuals.

As this example demonstrates, LLMs lack true intelligence or reasoning abilities because their knowledge is context-dependent and not stored in a unified, accessible knowledge base.

Beyond Correlation: Other Critical Limitations of AI

While the gap between correlation and causation is a fundamental limitation of current AI systems, there are several other crucial areas where AI falls short of human-like understanding. These limitations stem from the nature of AI training data and the lack of certain types of knowledge that humans acquire through experience and intuition.

1. Logical Axioms and Common Sense Reasoning

Humans often take for granted the basic logical principles and common sense understanding that we use to navigate the world. We assume that AI possesses similar capabilities, but this is frequently not the case.

Example: While a human easily understands that if A is greater than B, and B is greater than C, then A must be greater than C, an AI might struggle with this transitive property unless it has been explicitly trained on many similar examples.
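For comparison, a rule-based system needs the transitive property stated only once. The sketch below uses a hypothetical helper, `transitive_closure`, and stores "x is greater than y" as the pair (x, y). It repeatedly joins pairs until no new ones appear, so it handles chains of any length without ever seeing examples of them:

```python
def transitive_closure(pairs):
    """pairs: set of (x, y) meaning x > y. Return every pair implied by transitivity."""
    closure = set(pairs)
    while True:
        # If a > b and b > d, then a > d.
        new = {(a, d) for (a, b) in closure for (c, d) in closure if b == c} - closure
        if not new:
            return closure
        closure |= new

greater = transitive_closure({("A", "B"), ("B", "C")})
print(("A", "C") in greater)  # True
```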

AI systems often fail at tasks requiring intuitive reasoning that seem trivial to humans. This is because common sense is difficult to codify and is often not explicitly stated in training data.

2. Lack of Lived Experience

Unlike humans, AI doesn't have personal experiences to draw from. It lacks the embodied understanding that comes from:

Interacting with the physical world

Forming relationships

Navigating social situations

Example: An AI can process vast amounts of text about human emotions, but it cannot truly understand the feeling of joy, sadness, or love in the way a human does. It lacks the context that comes from actually experiencing these emotions.

This absence of lived experience limits AI's ability to truly understand and relate to human experiences, leading to potential misinterpretations or inappropriate responses in certain contexts.

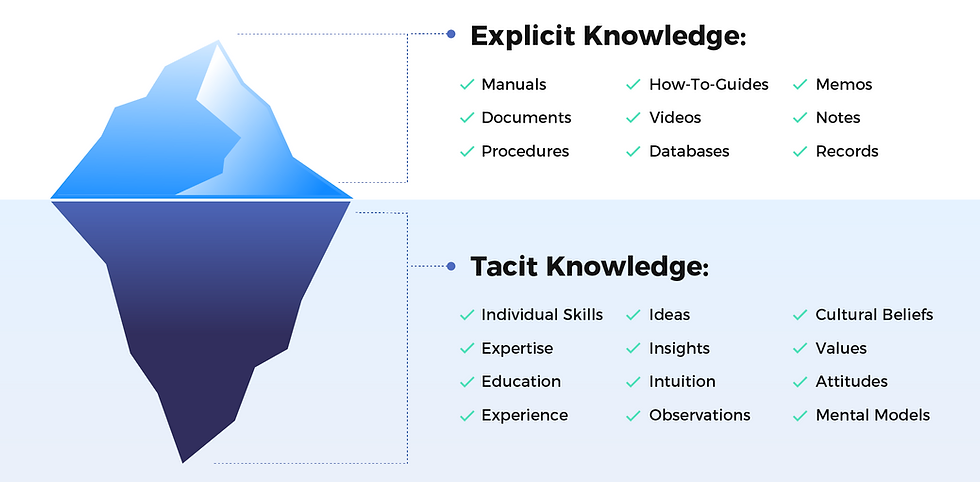

3. Absence of Tacit Knowledge

Humans possess a vast amount of unspoken, intuitive knowledge gained through experience. This tacit knowledge is:

Difficult to articulate

Often missing from explicit training data

Crucial for understanding nuanced situations

Example: A human chef knows intuitively how to adjust cooking times based on the look and smell of a dish, even if the recipe doesn't specify it. This kind of tacit knowledge is extremely difficult to capture in AI training data.

AI systems lack this intuitive understanding, which can lead to gaps in their ability to handle complex, real-world scenarios that require more than just explicit, codified knowledge.

Implications for AI Development and Use

Recognizing these limitations is crucial for several reasons:

Realistic Expectations: Understanding what AI can and cannot do helps set appropriate expectations for its capabilities.

Responsible Development: Awareness of these limitations should guide the development of AI systems, encouraging researchers to find ways to incorporate more human-like reasoning and understanding.

Appropriate Application: Knowing where AI falls short helps in determining appropriate use cases, especially in high-stakes situations where human judgment and experience are irreplaceable.

Complementary Strengths: Rather than trying to replace human intelligence entirely, the goal should be to develop AI systems that complement human strengths, filling in gaps where machine processing excels while relying on human judgment for areas requiring intuition, experience, and common sense.

As AI continues to advance, addressing these limitations will be a key challenge. Future developments may find ways to incorporate more human-like understanding into AI systems, but for now, it's essential to approach AI as a powerful tool with specific capabilities and well-defined limitations, rather than as a complete replacement for human intelligence and experience.

The Illusion of AI Understanding

The mother-son example highlights a common misconception about AI capabilities. Many people assume that AI systems understand information the way humans do, but this isn't the case: they don't employ logic, reasoning, or a genuine understanding of concepts like family relationships.

Instead, AI captures data correlations—nothing more, nothing less. While this approach can yield impressive results in many scenarios, it falls short when faced with tasks requiring true comprehension or logical inference.

Implications for Responsible AI Use

Understanding these limitations is crucial for the responsible development and application of AI technologies. We must be cautious about deploying AI in high-stakes situations where errors resulting from these limitations could have serious consequences.

The Path Forward: AI Literacy and Continued Research

As AI continues to advance, it's essential to promote AI literacy among developers, users, and the general public. We need to appreciate the power of current AI systems while also recognizing their inherent limitations.

Simultaneously, researchers are exploring ways to incorporate causal reasoning and other forms of logical inference into AI systems. These efforts may eventually lead to AI that can bridge the gap between correlation and causation, bringing us closer to machines that truly understand and reason about the world.

Until then, it's crucial to approach AI as a powerful tool with specific capabilities and limitations, rather than as a replacement for human understanding and judgment.

Written by

Gerard Sans

I help developers succeed in Artificial Intelligence and Web3; Former AWS Amplify Developer Advocate. I am very excited about the future of the Web and JavaScript. Always happy Computer Science Engineer and humble Google Developer Expert. I love sharing my knowledge by speaking, training and writing about cool technologies. I love running communities and meetups such as Web3 London, GraphQL London, GraphQL San Francisco, mentoring students and giving back to the community.