Case Study: Building a Real-Time Focus Timer with Django, Redis, and WebSockets

Saurav Sharma

Hey there! Today, we're diving into the inner workings of Tymr, a cloud-based focus timer that's got some interesting tech under the hood. You can check it out at https://tymr.online if you're curious. Let's break down how this app keeps you focused, even when your browser decides to take a nap.

The Big Picture: How the Timer Ticks

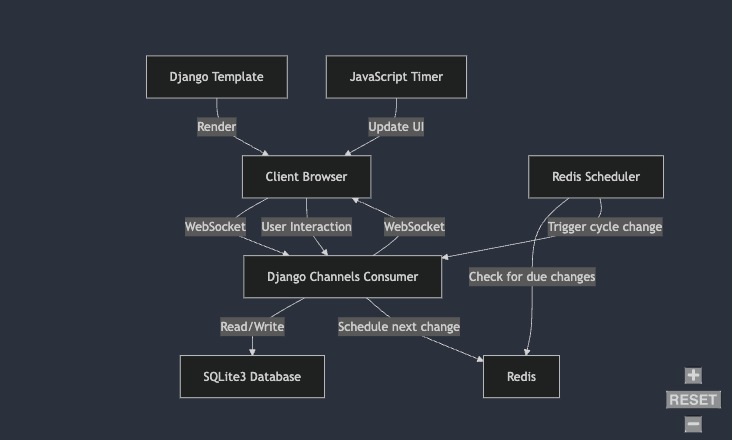

The Tymr focus timer application uses a unique combination of Redis, Django Channels, and client-side JavaScript to manage real-time timer updates. At its core, a Redis Scheduler continuously checks for scheduled cycle changes. When a change is due, it triggers an update in the Django application, which then uses Django Channels to push this update to connected WebSocket clients. On the client side, a combination of JavaScript and Django template language renders the timer interface and handles user interactions. These interactions are sent back to the Django application via WebSocket, which may result in new cycle schedules being added to Redis. All persistent data, including user information and session details, is stored in a SQLite3 database. This architecture allows for precise, server-side timer management while providing a responsive, real-time experience for users.

Here's a diagram illustrating this architecture:

This diagram shows the flow of data and interactions between the different components of the Tymr application, highlighting the central role of the Redis Scheduler and Django Channels Consumer in managing the focus timer sessions.

Your browser talks to Nginx, our trusty web server.

Nginx routes HTTP requests to Django and WebSocket connections to Uvicorn (our ASGI server).

Both Django and Uvicorn interact with our SQLite3 database (yep, keeping it simple!) and Redis.

Our Redis Scheduler keeps track of when to change the cycle. We add the focus session ID to a Redis sorted set (zset), with the time of its next cycle change as the score, and redis_scheduler.py keeps scanning that zset and processes any due entries it finds.

OneSignal handles push notifications when your focus session ends.

When a session starts, the consumer sends the timer update to clients, and each client browser uses a Web Worker to keep the timer ticking. Whenever there is an update about the next cycle, it is also sent to the browser over the WebSocket connection.
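To make the zset idea concrete, here is a minimal in-memory model of the pattern. It assumes the score is the Unix timestamp at which the next cycle change is due, which is what the `zrangebyscore(..., 0, now)` query in redis_scheduler.py implies. `InMemoryZSet` and `schedule_cycle_change` are hypothetical stand-ins for Redis and for Tymr's own scheduling code; against a real server the matching redis-py calls are `zadd(name, {member: score})`, `zrangebyscore(name, min, max)` and `zrem(name, member)`.

```python
from typing import Dict, List


class InMemoryZSet:
    """Toy stand-in for a Redis sorted set: member -> score, queried by score range."""

    def __init__(self) -> None:
        self._scores: Dict[str, float] = {}

    def zadd(self, mapping: Dict[str, float]) -> None:
        # Like ZADD: re-adding an existing member just updates its score.
        self._scores.update(mapping)

    def zrangebyscore(self, min_score: float, max_score: float) -> List[str]:
        # Like ZRANGEBYSCORE: members with min <= score <= max, lowest score first.
        hits = sorted((s, m) for m, s in self._scores.items() if min_score <= s <= max_score)
        return [m for _, m in hits]

    def zrem(self, member: str) -> int:
        # Like ZREM: returns how many members were actually removed (0 or 1 here).
        return 1 if self._scores.pop(member, None) is not None else 0


def schedule_cycle_change(zset: InMemoryZSet, session_id: str, due_at: float) -> None:
    # Hypothetical helper: one entry per session, scored by when its current cycle ends.
    zset.zadd({session_id: due_at})


zset = InMemoryZSet()
now = 1_000.0
schedule_cycle_change(zset, "session-a", now + 1500)  # ends in 25 minutes
schedule_cycle_change(zset, "session-b", now - 2)     # already overdue
schedule_cycle_change(zset, "session-c", now)         # due right now

due = zset.zrangebyscore(0, now)
print(due)  # ['session-b', 'session-c']
```

Because the score is a timestamp, the scheduler never has to scan every session: one range query returns exactly the sessions whose change is due, oldest first, and re-adding a session simply moves its due time.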

Now, let's zoom in on some of the cool parts!

I chose SQLite3 to keep things simple; I don't think I need PostgreSQL for this so far. I was also inspired by a short clip from DHH about favoring simplicity at the start and not worrying much about scalability before you actually need it. Besides, I built this project mainly for learning and for experimenting with the things I learn.

Battling Browser Naps with Web Workers

Ever noticed how sometimes your browser tabs seem to doze off when you're not looking at them? This can mess with JavaScript timers, which is not great for a focus timer app. To combat this, we're using Web Workers. These little champions run in the background, keeping our timer ticking even when the browser tab is sleeping.

Here's a snippet of how we set this up:

```javascript
// In focus_session.js
const worker = new Worker('hack_timer_worker.js');

worker.onmessage = function (event) {
  // Handle timer updates
};

// Start a timer
worker.postMessage({
  name: 'setInterval',
  time: 1000 // Update every second
});
```

This keeps our timer accurate even if you switch tabs or minimize your browser. Pretty neat, huh? To make it even simpler, I just load the HackTimer script (https://github.com/turuslan/HackTimer) before the timer-related code, and that solves most tab-sleep issues.
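A complementary technique, not something the post's code is confirmed to use: rather than decrementing a counter on every tick, derive the remaining time from the cycle's end timestamp each time a tick arrives. A late or skipped tick then only delays a redraw; it never makes the countdown drift. The sketch is in Python for consistency with the other examples, and `remaining_seconds` and `naive_countdown` are hypothetical helpers; in the browser the same arithmetic would use `Date.now()` inside the worker's message handler.

```python
import math


def remaining_seconds(end_at: float, now: float) -> int:
    """Whole seconds left until end_at, rounded up for display and never negative."""
    return max(0, math.ceil(end_at - now))


def naive_countdown(start: int, tick_times: list) -> int:
    # Counter-based approach: subtract 1 per tick actually delivered,
    # so every tick the browser drops or delays is lost time.
    return max(0, start - len(tick_times))


end_at = 100.0 + 60  # a 60-second cycle that started at t=100
# Ticks a throttled background tab might deliver: some gaps are far longer than 1 s.
ticks = [101.0, 102.0, 103.0, 113.0, 114.0, 130.0]

print(naive_countdown(60, ticks))            # 54: believes only 6 s have passed
print(remaining_seconds(end_at, ticks[-1]))  # 30: matches the wall clock
```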

Redis Scheduler: The Time Lord of Our App

Now, let's talk about our Redis Scheduler. This is the behind-the-scenes magician that makes sure your focus cycles change at the right time, even if you close your browser entirely.

Here's how it works:

When you start a session, we schedule the next cycle change in Redis using a sorted set (zset).

Our Redis Scheduler (running as a Django management command) constantly checks for due changes.

When it's time, it triggers the cycle change and schedules the next one.
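One detail worth getting right in that last step: the scheduler polls on an interval, so every cycle change is noticed a little late. If the next due time is computed from the moment the change was processed, that lateness accumulates across cycles; computing it from the previous cycle's scheduled end does not. The sketch below assumes a hypothetical alternating focus/break plan with made-up durations. It is not Tymr's `schedule_next_cycle_change`, whose body the post does not show.

```python
from itertools import cycle, islice
from typing import List

# Hypothetical cycle plan in seconds: 25 min focus, 5 min break, repeating.
CYCLE_PLAN = [("focus", 1500), ("break", 300)]


def due_times_from_processing(start: float, lag: float, n: int) -> List[float]:
    # Drifting version: next due = moment the change was processed + duration.
    times, processed_at = [], start
    for _, duration in islice(cycle(CYCLE_PLAN), n):
        due = processed_at + duration
        times.append(due)
        processed_at = due + lag  # the scheduler notices each change `lag` seconds late
    return times


def due_times_from_schedule(start: float, n: int) -> List[float]:
    # Anchored version: next due = previous *scheduled* end + duration.
    times, scheduled_end = [], start
    for _, duration in islice(cycle(CYCLE_PLAN), n):
        scheduled_end += duration
        times.append(scheduled_end)
    return times


drifting = due_times_from_processing(start=0.0, lag=0.5, n=4)
anchored = due_times_from_schedule(start=0.0, n=4)
print(drifting)  # [1500.0, 1800.5, 3301.0, 3601.5]
print(anchored)  # [1500.0, 1800.0, 3300.0, 3600.0]
```

Pausing changes the picture: when a paused session is resumed and rescheduled, the anchor has to be recomputed from the time that was remaining at the pause.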

Let's look at some code:

```python
# In redis_scheduler.py (simplified)
class RedisScheduler:
    async def run(self):
        while True:
            now = time.time()
            # All sessions whose scheduled cycle change is due: score <= now
            due_changes = await self.redis.zrangebyscore("scheduled_cycle_changes", 0, now)
            for session_id in due_changes:
                await self.process_change(session_id)
            await asyncio.sleep(1)  # poll roughly once per second

    async def process_change(self, session_id):
        session = await selectors.get_session_by_id_async(session_id)
        timer_service = AsyncTimerService(session_id, session.owner, session.owner.username)
        await timer_service.change_cycle_if_needed(session)
        # Remove the handled entry, then schedule the following cycle change.
        await self.redis.zrem("scheduled_cycle_changes", session_id)
        await timer_service.schedule_next_cycle_change(self.redis)
```

This setup keeps cycle changes on time, to within the scheduler's polling interval, without relying solely on client-side JavaScript. Cool, right? Here is the complete redis_scheduler.py file in case you want the details.

```python
import asyncio
import logging
import time

import redis.asyncio as redis
from channels.layers import get_channel_layer
from django.conf import settings
from django.core.management.base import BaseCommand

from apps.realtime_timer.business_logic import selectors
from apps.realtime_timer.business_logic.services import AsyncTimerService
from apps.realtime_timer.models import FocusSession

logger = logging.getLogger(__name__)


class RedisScheduler:
    def __init__(self):
        self.redis = redis.Redis.from_url(f"redis://{settings.REDIS_HOST}:{settings.REDIS_PORT}")
        self.channel_layer = get_channel_layer()

    async def run(self):
        while True:
            try:
                now = time.time()
                # Up to 100 sessions whose next cycle change is due (score <= now).
                due_changes = await self.redis.zrangebyscore(
                    "scheduled_cycle_changes", 0, now, start=0, num=100
                )
                if due_changes:
                    for session_id in due_changes:
                        logger.info("--------------------------------------------")
                        await self.process_change(session_id)
                        logger.info("--------------------------------------------")
                    # TODO: remove this wait if possible
                    await asyncio.sleep(0.1)
                else:
                    # Nothing due: animate a console spinner for ~1 s, then sleep 1 s more.
                    spinner = "⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏"
                    for i in range(10):
                        print(f"\rWaiting for changes {spinner[i % len(spinner)]}", end="", flush=True)
                        await asyncio.sleep(0.1)
                    print("\r" + " " * 30 + "\r", end="", flush=True)
                    await asyncio.sleep(1)
            except redis.RedisError as e:
                logger.error(f"Redis error: {e}")
                await asyncio.sleep(5)
            except Exception as e:
                logger.error(f"Unexpected error: {e}")
                await asyncio.sleep(5)

    async def process_change(self, session_id):
        try:
            session_id = session_id.decode()  # redis-py returns bytes unless decode_responses=True
            session = await selectors.get_session_by_id_async(session_id)
            # Check for a missing session before looking up its owner.
            if not session:
                logger.error(f"Session {session_id} not found, skipping processing")
                await self.redis.zrem("scheduled_cycle_changes", session_id)
                return

            session_owner = await selectors.get_session_owner_async(session)
            timer_service = AsyncTimerService(session_id, session_owner, session_owner.username)

            if session.timer_state == FocusSession.TIMER_RUNNING:
                logger.info(f"calling timer_service.change_cycle_if_needed for session {session_id}")
                await timer_service.change_cycle_if_needed(session)
                logger.info(f"calling timer_service.schedule_next_cycle_change for session {session_id}")
                # Remove the handled entry, then schedule the following cycle change.
                await self.redis.zrem("scheduled_cycle_changes", session_id)
                await timer_service.schedule_next_cycle_change(self.redis)
                logger.info(f"cycle change completed for session {session_id}")
            else:
                logger.info(f"session {session_id} is not running, skipping cycle change")
                await self.redis.zrem("scheduled_cycle_changes", session_id)
        except Exception as e:
            # Drop the entry so one failing session isn't retried on every poll.
            await self.redis.zrem("scheduled_cycle_changes", session_id)
            logger.error(f"Error processing change for session {session_id}: {e}")
            logger.exception("Full traceback:")


class Command(BaseCommand):
    help = "Runs the Redis scheduler for focus session cycle changes"

    def handle(self, *args, **options):
        scheduler = RedisScheduler()
        asyncio.run(scheduler.run())
```
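The scheduler processes an entry first and removes it afterwards, which is fine with a single scheduler process. If you ever ran two instances, both could pick up the same due session. A common guard is to claim an entry by removing it first and proceeding only if `ZREM` reports that this caller removed it; Redis executes each command atomically, so exactly one caller wins. The sketch below models that with a hypothetical in-memory zset and two asyncio tasks; with redis-py the claim would be `if await self.redis.zrem("scheduled_cycle_changes", session_id): ...`.

```python
import asyncio
from typing import Dict, List


class InMemoryZSet:
    """Hypothetical stand-in for the Redis zset; each method body runs atomically,
    just as each Redis command does."""

    def __init__(self, scores: Dict[str, float]) -> None:
        self._scores = dict(scores)

    async def zrangebyscore(self, lo: float, hi: float) -> List[str]:
        await asyncio.sleep(0)  # yield, like a network round trip would
        return [m for s, m in sorted((s, m) for m, s in self._scores.items() if lo <= s <= hi)]

    async def zrem(self, member: str) -> int:
        await asyncio.sleep(0)
        return 1 if self._scores.pop(member, None) is not None else 0


async def worker(name: str, zset: InMemoryZSet, processed: List[str], now: float) -> None:
    for session_id in await zset.zrangebyscore(0, now):
        # Claim first: only the worker whose ZREM actually removed the member proceeds.
        if await zset.zrem(session_id):
            processed.append(f"{name}:{session_id}")


async def main() -> List[str]:
    zset = InMemoryZSet({"s1": 10.0, "s2": 20.0, "s3": 99.0})
    processed: List[str] = []
    # Two scheduler instances racing over the same due entries.
    await asyncio.gather(
        worker("A", zset, processed, now=50.0),
        worker("B", zset, processed, now=50.0),
    )
    return processed


processed = asyncio.run(main())
print(sorted(p.split(":")[1] for p in processed))  # ['s1', 's2']: each handled once
```

The trade-off is that a crash between the claim and the processing drops that cycle change, whereas the remove-after-processing order above leaves the entry in place to be retried.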

Race Conditions: When Timing is Everything

Now, let's talk about a tricky problem in concurrent programming: race conditions. Imagine two people trying to update the same focus session at the same time. Chaos, right?

We faced this issue with our focus cycles. Multiple processes (for example, the Redis Scheduler changing a cycle while a WebSocket consumer handles a pause) could try to update the same session simultaneously, leading to:

Incorrect cycle transitions

Wrong focus period calculations

Inconsistent timer states
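The classic shape of this bug is a read-modify-write with a pause in the middle. The sketch below is an in-process analogy, not Tymr code: two coroutines advance a hypothetical `Session.cycle_number`, and because each one yields between reading and writing, one update is lost. Guarding the critical section with an `asyncio.Lock` plays the role a row lock plays in the database.

```python
import asyncio
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    cycle_number: int = 0  # hypothetical field standing in for the session's cycle state


async def advance_cycle(session: Session, lock: Optional[asyncio.Lock] = None) -> None:
    async def read_modify_write() -> None:
        current = session.cycle_number      # read
        await asyncio.sleep(0)              # yield, like awaiting a DB or Redis call
        session.cycle_number = current + 1  # write based on a possibly stale read

    if lock is None:
        await read_modify_write()
    else:
        async with lock:                    # only one coroutine in the critical section
            await read_modify_write()


async def run(use_lock: bool) -> int:
    session = Session()
    lock = asyncio.Lock() if use_lock else None
    await asyncio.gather(advance_cycle(session, lock), advance_cycle(session, lock))
    return session.cycle_number


print(asyncio.run(run(use_lock=False)))  # 1: one advance was lost
print(asyncio.run(run(use_lock=True)))   # 2: both advances applied
```

An in-process lock like this only coordinates code running in one event loop. The Redis Scheduler and the Uvicorn workers are separate processes, which is why the locking has to happen somewhere they all share, such as the database.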

To tackle this, we use Django's select_for_update(). It's like putting a "Do Not Disturb" sign on our database rows. Here's how we use it:

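```python
from channels.db import database_sync_to_async

# Assumption: FocusCycle lives in the same models module as FocusSession.
from apps.realtime_timer.models import FocusCycle, FocusSession


@database_sync_to_async
def get_current_cycle_locked_async(session: FocusSession):
    # select_for_update() must be evaluated inside a transaction; in autocommit mode
    # Django raises TransactionManagementError on backends that support row locks.
    # The lock is released when that transaction ends.
    return FocusCycle.objects.select_for_update().get(id=session.current_cycle_id)
```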

But be careful! Using select_for_update() can lead to deadlocks if not used properly. Here are some precautions:

Always acquire locks in the same order across your application.

Keep the locked section as short as possible.

Use timeouts to prevent indefinite waiting.
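The first precaution is the one that rules deadlock out: if every code path acquires locks in the same global order, no two paths can each hold a lock the other is waiting for. Here is a minimal sketch of the idea with `threading.Lock` objects keyed by hypothetical row IDs; in Django the rough equivalent is giving the `select_for_update()` query a deterministic `order_by("id")` so rows are locked in the same order everywhere.

```python
import threading
from contextlib import ExitStack
from typing import Dict, Iterable, List

# Hypothetical per-row locks, keyed by primary key.
row_locks: Dict[int, threading.Lock] = {pk: threading.Lock() for pk in range(1, 6)}
log: List[str] = []


def update_rows(worker: str, pks: Iterable[int]) -> None:
    ordered = sorted(set(pks))
    with ExitStack() as stack:
        # Always lock in ascending pk order, whatever order the caller passed.
        for pk in ordered:
            stack.enter_context(row_locks[pk])
        log.append(f"{worker} updated {ordered}")
    # ExitStack released every lock here, in reverse order of acquisition.


# Opposite request orders: without the sort, these two threads could deadlock.
t1 = threading.Thread(target=update_rows, args=("t1", [1, 3]))
t2 = threading.Thread(target=update_rows, args=("t2", [3, 1]))
for t in (t1, t2):
    t.start()
for t in (t1, t2):
    t.join(timeout=5)

print(sorted(log))  # ['t1 updated [1, 3]', 't2 updated [1, 3]']
```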

For example:

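```python
from channels.db import database_sync_to_async
from django.db import transaction

from apps.realtime_timer.models import FocusSession


@database_sync_to_async
def update_session(session_id):
    with transaction.atomic():
        # nowait=True: fail immediately instead of waiting if another
        # transaction already holds this row's lock.
        session = FocusSession.objects.select_for_update(nowait=True).get(id=session_id)
        # Perform updates here
        session.save()
    # The row lock is released when the atomic block commits.
```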

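The nowait=True option helps prevent deadlocks because a transaction that can't get the lock never waits: Django raises a DatabaseError immediately, and you can catch that error and handle the case yourself. One caveat for this project: Django documents select_for_update() as having no effect on backends that don't support SELECT ... FOR UPDATE, and SQLite is one of them, so with the SQLite3 database described earlier these row locks only take effect after moving to a database such as PostgreSQL.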
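Handling that error yourself usually means retrying a few times with a short backoff and then giving up gracefully. Below is a generic async sketch: `retry_on` and `LockNotAvailable` are hypothetical names, and with the `update_session` example the exception to retry on would be `django.db.DatabaseError`, which a `nowait=True` query raises when the row is already locked.

```python
import asyncio
from typing import Awaitable, Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class LockNotAvailable(Exception):
    """Hypothetical stand-in for the DatabaseError raised by a nowait=True query."""


async def retry_on(
    exc_types: Tuple[Type[BaseException], ...],
    operation: Callable[[], Awaitable[T]],
    attempts: int = 3,
    base_delay: float = 0.05,
) -> T:
    for attempt in range(attempts):
        try:
            return await operation()
        except exc_types:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller decide (e.g. tell the client)
            await asyncio.sleep(base_delay * 2 ** attempt)  # exponential backoff
    raise ValueError("attempts must be >= 1")


calls = {"n": 0}


async def flaky_update() -> str:
    # Fails twice as if another transaction held the row lock, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise LockNotAvailable("row is locked")
    return "updated"


print(asyncio.run(retry_on((LockNotAvailable,), flaky_update, base_delay=0.01)))  # updated
```

With the Django helper above, a call could look like `await retry_on((DatabaseError,), lambda: update_session(session_id))`, since calling a `database_sync_to_async` function returns an awaitable.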

Async Consumers: Real-Time Magic

Last but not least, let's chat about our async consumers. These are the multitasking ninjas of our application, handling real-time communications without breaking a sweat.

We use Django Channels to handle WebSocket connections asynchronously. This allows us to manage multiple user connections simultaneously. Here's a simplified version of our consumer:

```python
import json

from channels.generic.websocket import AsyncWebsocketConsumer


class FocusSessionConsumer(AsyncWebsocketConsumer):
    async def connect(self):
        self.session_id = self.scope["url_route"]["kwargs"]["session_id"]
        self.session_group_name = f"focus_session_{self.session_id}"
        # Join the per-session group so broadcasts for this session reach this socket.
        await self.channel_layer.group_add(self.session_group_name, self.channel_name)
        await self.accept()

    async def receive(self, text_data):
        data = json.loads(text_data)
        if data.get("action") == "toggle_timer":
            await self.toggle_timer()
        # ................................

    @check_session_owner_async
    async def toggle_timer(self):
        timer_state = await self.timer_service.toggle_timer()
        if timer_state == "paused":
            # Paused: cancel the pending cycle change in Redis.
            await self.timer_service.cancel_scheduled_cycle_change_if_timer_stopped(
                self.redis_client, self.session_id
            )
        elif timer_state == "resumed":
            # Resumed: schedule the next cycle change again.
            await self.timer_service.schedule_next_cycle_change(redis_client=self.redis_client)
        # rest of the logic...................
```

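Because each focus session has its own group (focus_session_<id>), a single update reaches every browser tab connected to that session, and because the consumer is async, one server process can handle many such connections at once.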
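What the consumer snippet doesn't show is the other direction: how an update from the scheduler reaches every socket in a session's group. In Channels that is `channel_layer.group_send(group_name, {"type": "timer.update", ...})`, and the layer calls the consumer method named after `type` with dots replaced by underscores (`timer_update` here). The toy model below imitates only that fan-out and dispatch in plain asyncio; `ToyChannelLayer`, `ToyConsumer`, the `timer.update` type and the message fields are all hypothetical, and a real layer such as channels_redis works across processes, which this does not.

```python
import asyncio
from collections import defaultdict
from typing import Any, Dict, List, Set


class ToyConsumer:
    def __init__(self, name: str) -> None:
        self.name = name
        self.sent: List[Dict[str, Any]] = []  # what would go down this socket

    async def dispatch(self, message: Dict[str, Any]) -> None:
        # Channels-style routing: "timer.update" -> self.timer_update(message)
        handler = getattr(self, message["type"].replace(".", "_"))
        await handler(message)

    async def timer_update(self, message: Dict[str, Any]) -> None:
        # A real consumer would call self.send(text_data=json.dumps(...)) here.
        self.sent.append({"cycle": message["cycle"], "ends_at": message["ends_at"]})


class ToyChannelLayer:
    def __init__(self) -> None:
        self.groups: Dict[str, Set[ToyConsumer]] = defaultdict(set)

    async def group_add(self, group: str, consumer: ToyConsumer) -> None:
        self.groups[group].add(consumer)

    async def group_send(self, group: str, message: Dict[str, Any]) -> None:
        # Fan one message out to every consumer currently in the group.
        await asyncio.gather(*(c.dispatch(message) for c in self.groups[group]))


async def main() -> List[ToyConsumer]:
    layer = ToyChannelLayer()
    laptop, phone, other = ToyConsumer("laptop"), ToyConsumer("phone"), ToyConsumer("other")
    await layer.group_add("focus_session_42", laptop)
    await layer.group_add("focus_session_42", phone)
    await layer.group_add("focus_session_7", other)
    # What the scheduler might send after a cycle change (fields are hypothetical).
    await layer.group_send(
        "focus_session_42", {"type": "timer.update", "cycle": "break", "ends_at": 1800}
    )
    return [laptop, phone, other]


laptop, phone, other = asyncio.run(main())
print(laptop.sent, phone.sent, other.sent)
```

In a real consumer, the usual cleanup is `await self.channel_layer.group_discard(self.session_group_name, self.channel_name)` in `disconnect()`, so a closed socket stops receiving group messages.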

Wrapping Up

Building Tymr was like assembling a high-tech clock with a sprinkle of cloud magic. From battling browser naps with Web Workers to orchestrating time with our Redis Scheduler and managing real-time connections with async consumers, each component plays a crucial role in keeping everything ticking smoothly.

I deployed this Django app to https://tymr.online, so if you want to learn how to deploy a Django app on a VPS, check out this guide:

https://selftaughtdev.hashnode.dev/comprehensive-django-deployment-guide-for-beginners

Got any questions, or want to dive deeper into any part? Drop a comment below; I'd love to geek out about it with you!

P.S. I'm still learning, so I may have missed some points or not explained them well.

