JVM Architecture in Depth Understanding

Bikash Nishank

1. Introduction to Java Virtual Machine (JVM)

1.1 What is the JVM?

The Java Virtual Machine (JVM) is a virtual environment responsible for running Java applications. When you write Java code and compile it, the Java compiler (javac) transforms the human-readable Java source code into bytecode, a platform-independent intermediate representation. The JVM then interprets this bytecode or compiles it into native machine code that can run on the specific platform.

Instead of compiling Java directly into machine code specific to a single platform, Java programs are compiled into bytecode. This bytecode can be executed on any platform that has a JVM installed. In essence, the JVM abstracts the hardware specifics of the system, allowing for seamless cross-platform execution.

1.2 Role in the Java Ecosystem

The JVM works in conjunction with other parts of the Java ecosystem:

JRE (Java Runtime Environment): Contains the JVM and the libraries necessary to run Java applications.

JDK (Java Development Kit): Includes development tools like the compiler (javac), debugger (jdb), and libraries for building Java applications. It also includes the JRE and JVM.

1.3 Platform Independence: Write Once, Run Anywhere

Java’s main selling point is its platform independence. The JVM enables the compiled bytecode to be executed on any platform, such as Windows, Linux, or macOS, without modification. Each platform has its own implementation of the JVM, ensuring compatibility with the underlying operating system while still running the same Java bytecode.

This abstraction is what allows Java to be used across different environments and devices, from desktop computers to mobile phones and embedded systems.

1.4 Difference Between JVM, JRE, and JDK

Here’s a simple distinction between these key components:

JVM: The virtual machine that runs Java bytecode.

JRE: The runtime environment that includes the JVM and the necessary libraries to execute a Java application.

JDK: A development toolkit that includes the JRE, JVM, and tools like the compiler (javac) to build Java programs.

2. Overview of JVM Architecture

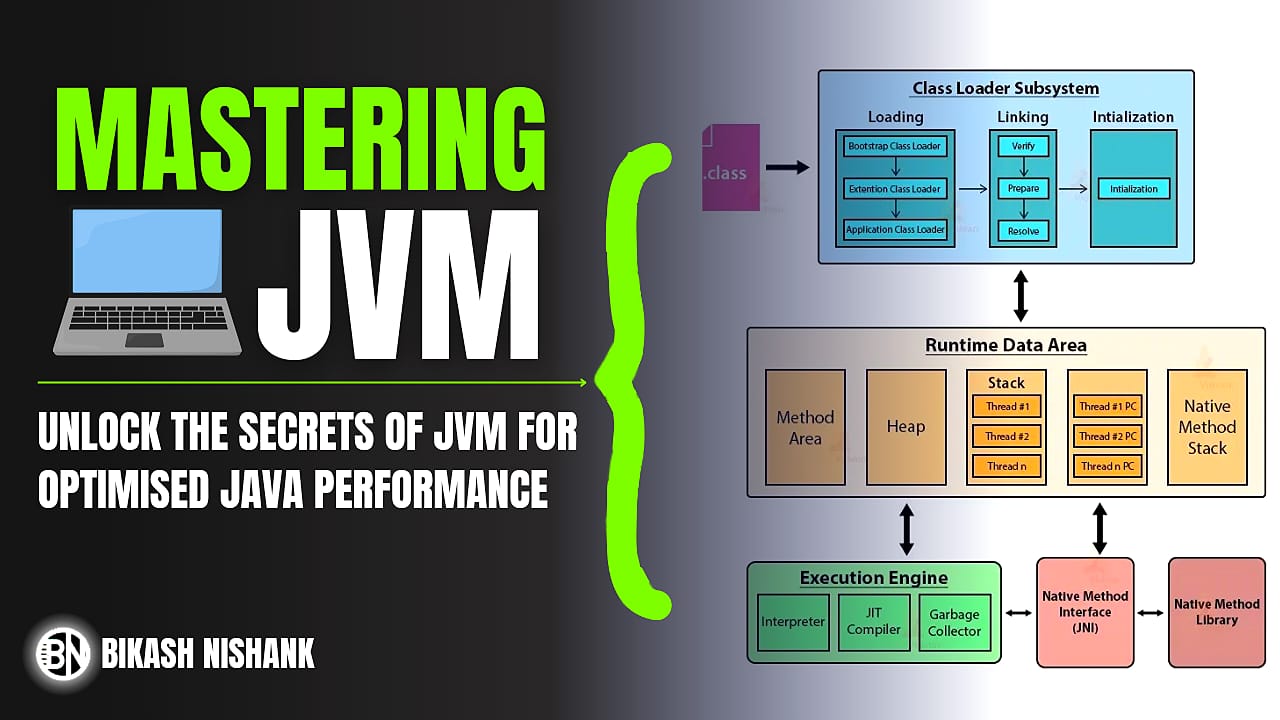

The JVM has a multi-component architecture that plays a vital role in the lifecycle of a Java program. The architecture consists of:

2.1 Class Loader Subsystem

The Class Loader is responsible for loading Java classes into memory when needed. Java uses a dynamic, on-demand approach for class loading, which helps to save memory and improve performance. The Class Loader Subsystem has three main activities:

Loading: The .class files are loaded from the file system or network into memory.

Linking: After loading, classes are verified, prepared, and resolved.

Initialization: Static fields are initialized, and static blocks are executed.

The Class Loader follows a delegation model, where it first delegates the class-loading request to its parent class loader. Only if the parent cannot load the class, does the current class loader attempt to load it.

2.2 Memory Areas in JVM

The JVM divides its memory into distinct regions to handle different parts of program execution:

Heap Memory: This is where all Java objects are stored, and it is shared among all threads.

Stack Memory: Each thread has its own stack memory, which stores local variables, method parameters, and intermediate calculations. The stack follows a Last-In-First-Out (LIFO) principle.

Method Area: Stores class-level data such as class structure, method metadata, constant pool, and static variables.

Program Counter (PC) Register: Each thread in Java has its own PC register, which holds the address of the JVM instruction the thread is currently executing.

Native Method Stack: Used when Java code interacts with native code written in other languages like C or C++ through the JNI (Java Native Interface).

2.3 Execution Engine

The Execution Engine is responsible for executing the bytecode. It does this through various techniques such as interpretation and compilation.

2.4 Native Method Interface (JNI)

The JNI provides the mechanism for Java code to call or be called by native applications or libraries written in languages like C or C++. This is particularly useful when you need to access operating system-specific features or performance-optimized native code.

2.5 Java Languages on JVM

Java isn’t the only language that can run on the JVM. Many other languages, such as Kotlin, Scala, Groovy, and Clojure, are also designed to run on the JVM. These languages are compiled into Java bytecode, allowing them to benefit from the JVM's features like garbage collection, platform independence, and memory management.

3. Class Loader Subsystem in Detail

3.1 Class Loader Mechanism

The Class Loader in the JVM is responsible for finding and loading class files at runtime. Class loading happens lazily, meaning classes are only loaded when they are first used. This allows Java to minimize its memory footprint.

3.2 Types of Class Loaders

There are several built-in class loaders in the JVM:

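Bootstrap ClassLoader: Loads core Java libraries (e.g., the java.lang package) from the Java runtime.

Extension ClassLoader: Loads libraries located in the directories named by the java.ext.dirs system property. From Java 9 onward, it is replaced by the Platform ClassLoader, and the extension mechanism is no longer supported.

Application ClassLoader: Loads classes from the application's classpath (specified by the -classpath option or the CLASSPATH environment variable).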

3.3 Delegation Model of Class Loaders

The delegation model ensures that a class is loaded by the top-most class loader first. Each class loader checks with its parent before attempting to load a class on its own. This model prevents duplication of classes and ensures that core Java classes are always loaded by the Bootstrap ClassLoader.
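The parent chain behind the delegation model can be inspected at runtime. The following is a minimal sketch; the class name LoaderChainDemo and the "bootstrap" label are illustrative choices, and the loader class names it prints differ between Java versions.

```java
import java.util.ArrayList;
import java.util.List;

class LoaderChainDemo {
    // Collects the class name of the given loader and of each parent,
    // ending with a label for the bootstrap loader.
    static List<String> chain(ClassLoader loader) {
        List<String> names = new ArrayList<>();
        while (loader != null) {
            names.add(loader.getClass().getName());
            loader = loader.getParent();
        }
        // The bootstrap loader is part of the JVM itself and is represented by null.
        names.add("bootstrap");
        return names;
    }

    public static void main(String[] args) {
        // Core classes such as String report a null loader (the bootstrap loader).
        System.out.println("String loader: " + String.class.getClassLoader());
        // Application classes start at the application loader and delegate upward.
        for (String name : chain(LoaderChainDemo.class.getClassLoader())) {
            System.out.println(name);
        }
    }
}
```

Each printed line is one level of the hierarchy, from the application class loader up to the bootstrap loader.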

3.4 Class Loading Process

The class loading process consists of three main steps:

Loading: Locating the class file (from the file system or network), reading its bytecode, and creating a corresponding Class object.

Linking: The linking step verifies the bytecode (ensures that it follows the JVM’s security rules), prepares class variables, and optionally resolves all symbolic references.

Initialization: During initialization, the JVM assigns initial values to static fields and executes any static initializer blocks.
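The initialization step can be observed directly, because a static initializer runs only when a class is first actively used. The sketch below uses hypothetical names (InitOrderDemo, Lazy, EVENTS) and records events in a list so that their order is visible.

```java
import java.util.ArrayList;
import java.util.List;

class InitOrderDemo {
    // Shared log so the order of events can be inspected afterwards.
    static final List<String> EVENTS = new ArrayList<>();

    static class Lazy {
        static int value = 42;               // assigned during initialization
        static {
            EVENTS.add("Lazy initialized");  // runs once, when Lazy is initialized
        }
        static int read() {
            return value;
        }
    }

    static List<String> run() {
        EVENTS.add("before first use");
        int v = Lazy.read();                 // first active use triggers initialization
        EVENTS.add("read " + v);
        return EVENTS;
    }

    public static void main(String[] args) {
        run().forEach(System.out::println);
    }
}
```

On a fresh JVM, "before first use" is logged before "Lazy initialized", which shows that the nested class was not initialized until its static method was called.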

3.5 ClassLoader in Java 8 and Beyond

Starting from Java 8, the JVM replaced the PermGen (used in previous versions to store class metadata) with Metaspace, which is dynamically sized. This change addresses memory issues that plagued the PermGen, especially in applications with heavy class loading like application servers.

4. JVM Memory Structure (Java Memory Model)

Memory management is critical in the JVM, and the Java memory model is designed to optimize performance while providing strong consistency guarantees.

4.1 Heap Memory

The heap is where all Java objects are stored. It is divided into several regions:

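Young Generation: Stores short-lived objects, such as temporary objects created inside methods. (Local variables themselves live on the thread's stack; only the objects they refer to are on the heap.) This is subdivided into: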

Eden Space: Where new objects are allocated.

Survivor Spaces (S0 and S1): Objects that survive a garbage collection in the Eden space are moved to the Survivor spaces.

Old Generation: Stores long-lived objects that have survived several garbage collection cycles. This space is typically larger and subjected to less frequent garbage collection.

4.2 Stack Memory

Each thread in Java has its own stack, where method calls and local variables are stored. The stack is smaller than the heap and operates in a LIFO (Last In, First Out) fashion. When a method is called, a new stack frame is created, and when the method returns, the frame is removed from the stack.

4.3 Method Area

The method area holds metadata about the classes that have been loaded, including method information, static variables, and constants. In Java 7 and earlier, this was part of the PermGen space, but Java 8 replaced it with Metaspace, which is dynamically sized and located in native memory.

4.4 Runtime Constant Pool

The runtime constant pool is a per-class data structure that holds constants such as string literals, method references, and numeric constants. This pool is part of the method area and plays a crucial role in dynamic method dispatch and other JVM runtime operations.

4.5 Program Counter (PC) Register

Each thread in Java has its own Program Counter (PC) register. This register stores the address of the current instruction being executed by the thread. If the thread is executing a native method, the PC register is undefined.

4.6 Native Method Stack

The native method stack is used when Java calls methods written in other languages (like C or C++) through the JNI. These methods may need access to low-level system resources, making JNI a powerful but platform-dependent feature.
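The memory areas described in this section can be listed from inside a running JVM through the standard java.lang.management API. This is a rough sketch; the class name MemoryPoolsDemo is made up for illustration, and the pool names it prints (for example Eden, Survivor, Old Gen, or Metaspace pools) depend on the JVM and the garbage collector in use.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

class MemoryPoolsDemo {
    // Returns one line per memory pool: its type (HEAP or NON_HEAP), name, and bytes in use.
    static List<String> describePools() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();          // may be null for an invalid pool
            long used = (usage == null) ? -1 : usage.getUsed();
            lines.add(pool.getType().name() + " | " + pool.getName() + " | used=" + used);
        }
        return lines;
    }

    public static void main(String[] args) {
        describePools().forEach(System.out::println);
    }
}
```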

5. Execution Engine

The Execution Engine is responsible for executing Java bytecode. It converts the bytecode into machine code so it can be executed by the underlying hardware. There are two primary modes of execution: interpretation and compilation.

5.1 Interpreter

The JVM can interpret bytecode, which means it reads the bytecode one instruction at a time and carries out each instruction on the fly. While interpretation is simple and starts quickly, it’s not the most efficient approach because each instruction must be decoded every time it’s encountered.

5.2 Just-In-Time (JIT) Compiler

To improve performance, the JVM uses a Just-In-Time (JIT) compiler. Rather than interpreting the bytecode instruction by instruction, the JIT compiler translates whole methods into native machine code. Once compiled, the native code is cached and reused, eliminating the need for repeated interpretation. The JIT compiler focuses on optimizing methods that are executed frequently, known as "hot spots." By converting these methods into native code, the JVM significantly reduces the overhead of execution.

The JIT compiler can also perform inlining, where frequently called methods are embedded directly into the calling code, further reducing method call overhead.

5.3 HotSpot Profiler

The HotSpot JVM, developed by Oracle, uses a HotSpot Profiler to identify these "hot" methods. The profiler tracks the execution of the bytecode and determines which methods should be compiled into native code. The profiling data is constantly updated, and the JVM adapts its optimizations based on the runtime behavior of the program.

5.4 Garbage Collection

An integral part of the Execution Engine is the Garbage Collector (GC), which is responsible for reclaiming memory allocated to objects that are no longer in use. The garbage collector works in the background to manage memory and prevent memory leaks by freeing up space taken by unreachable objects. The garbage collection process uses algorithms like Mark-and-Sweep and Generational GC to perform efficient memory management.

5.5 Adaptive Optimisation

Modern JVMs use adaptive optimisation, meaning they dynamically optimize the code as it runs. The JVM monitors the runtime behavior and makes adjustments accordingly. For example, frequently used code paths are compiled into native code, while infrequently used code may remain interpreted. This process allows the JVM to strike a balance between execution speed and memory usage without requiring manual intervention.

6. Detailed Look into Garbage Collection (GC)

Garbage Collection (GC) is a core feature of the JVM responsible for automatically reclaiming memory by removing objects that are no longer in use. This helps in managing heap memory efficiently and prevents memory leaks. Java provides multiple types of garbage collectors to optimise performance based on specific application needs.

6.1. Heap Management and GC in Java 7

In Java 7, the heap is divided into three regions:

Young Generation: Contains newly created objects. Most objects die here quickly, and those that survive multiple GC cycles are promoted to the Old Generation.

Old Generation: Contains objects that have survived several GC cycles. GC in the Old Generation is usually less frequent but takes more time.

Permanent Generation (PermGen): Stores class metadata, constants, method code, and static variables. PermGen has a fixed size and can cause OutOfMemoryError if it runs out of space.

Example:

    // Allocating short-lived objects on the heap
    public class HeapMemoryDemo {
        public static void main(String[] args) {
            for (int i = 0; i < 100000; i++) {
                // Each String becomes unreachable as soon as the iteration ends
                String str = new String("Object " + i);
            }
            System.gc(); // Request garbage collection (a hint, not a guarantee)
        }
    }

In the above code, short-lived objects are created in the Young Generation, and after some iterations, the GC will be triggered to reclaim memory.

Generational GC Process:

Minor GC: Cleans the Young Generation.

Major GC: Cleans the Old Generation and sometimes the PermGen space.

The default GC in Java 7 is the Parallel GC, which runs the GC process in multiple threads to improve performance on multi-core processors.
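Which collectors a JVM is actually running, and how often they have run, can be checked through the standard GarbageCollectorMXBean API. The sketch below is illustrative: the class name GcReportDemo is an assumption, and the collector names it reports vary with the JVM version and the selected GC.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

class GcReportDemo {
    // Returns one line per active collector with its run count and accumulated time.
    static List<String> report() {
        List<String> lines = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            lines.add(gc.getName()
                    + ": collections=" + gc.getCollectionCount()
                    + ", timeMs=" + gc.getCollectionTime());
        }
        return lines;
    }

    public static void main(String[] args) {
        report().forEach(System.out::println);
    }
}
```

With a generational collector, the output typically has one entry for young-generation (minor) collections and one for old-generation (major) collections.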

6.2. Introduction of G1 GC in Java 7

The Garbage First (G1) GC was introduced as an alternative to the CMS (Concurrent Mark-Sweep) collector. It focuses on predictable pause times while maintaining high throughput. G1 works by breaking the heap into small regions and prioritising garbage collection in regions with the most garbage.

How G1 GC Works:

Regions: Instead of dividing the heap into fixed Young and Old Generations, G1 divides it into a number of equal-sized regions.

Marking and Evacuation: G1 uses a marking phase to identify live objects, and then an evacuation phase to move live objects into new regions, compacting memory and freeing space.

Example:

In a web application where response time is critical, G1 GC helps by reducing the time the application spends in GC pauses. Developers can control the pause time with options like -XX:MaxGCPauseMillis=200.

6.3. Changes to Garbage Collection in Java 8

Java 8 introduced several important changes to garbage collection:

Removal of PermGen: The PermGen space was removed and replaced by Metaspace, which resides in native memory (outside the heap) and dynamically grows as needed.

Improved G1 GC: G1 GC became more stable and was enhanced to reduce Full GCs and manage large heaps better.

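String Deduplication: A G1 feature (added in Java 8u20 and enabled with -XX:+UseStringDeduplication) that detects String objects with identical contents and lets them share a single backing character array, reducing memory usage.

String deduplication should not be confused with string interning, which the following example shows:

String s1 = "Hello";

String s2 = "Hello"; // Refers to the same object as s1 because string literals are interned

Interning makes identical literals share one String object. Deduplication works at GC time on separate String objects, which stay distinct but share their underlying character data.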
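How literals and explicitly allocated strings behave with respect to object identity can be sketched as follows. The class name InternDemo is hypothetical; the behavior shown is that of string literals and String.intern().

```java
class InternDemo {
    // Compares object identity (==) and content (equals) for literals and allocated strings.
    static boolean[] compare() {
        String literal1 = "Hello";
        String literal2 = "Hello";
        String allocated = new String("Hello"); // always a separate object on the heap
        return new boolean[] {
            literal1 == literal2,            // true: both literals refer to one interned object
            literal1 == allocated,           // false: different objects, same contents
            literal1 == allocated.intern(),  // true: intern() returns the pooled instance
            literal1.equals(allocated)       // true: contents are equal
        };
    }

    public static void main(String[] args) {
        for (boolean b : compare()) {
            System.out.println(b);
        }
    }
}
```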

6.4. G1 GC as the Default Collector in Java 9

In Java 9, G1 GC became the default garbage collector on server-class machines, replacing the Parallel GC, as it balances throughput with shorter, more predictable GC pauses. G1 reduces Full GC pauses by dynamically managing memory and limiting the amount of garbage collected in one go.

Example GC Options in Java 9:

java -XX:+UseG1GC -XX:MaxGCPauseMillis=200 -Xms2g -Xmx4g -jar YourApp.jar

This selects G1 GC with a pause-time goal of 200 milliseconds. The value is a target that G1 tries to meet, not a hard guarantee.

6.5. ZGC and Shenandoah GC in Java 11+

Starting from Java 11, newer low-latency garbage collectors were introduced to handle larger heaps and provide ultra-low GC pause times.

ZGC (Z Garbage Collector):

Introduced in Java 11 as an experimental collector and production-ready since Java 15, ZGC is designed for large heaps (up to multiple terabytes) and aims to keep pause times below 10 milliseconds.

ZGC performs concurrent marking and relocation of objects while minimising the impact on application threads.

Shenandoah GC:

Shenandoah GC, available in OpenJDK since Java 12, was designed for ultra-low pause times by performing both marking and compacting concurrently with the application’s execution.

Both ZGC and Shenandoah are ideal for applications where latency is a primary concern.

6.6. Performance Tuning with GC

Tuning GC is essential for optimising application performance, especially for large, long-running applications.

Tuning Tips:

Heap Sizing: Use -Xms and -Xmx to set the initial and maximum heap sizes.

G1 GC Tuning: Control GC pauses using -XX:MaxGCPauseMillis.

GC Logs: Enable GC logging with -Xlog:gc* (Java 9 and later) to analyse GC behavior and detect performance bottlenecks.

7. The Method Area and Its Evolution

7.1. Method Area in Java 7 (PermGen Space)

In Java 7, the Method Area was part of the PermGen space, which held class metadata, method code, and static variables. It was a fixed-size memory area, which often led to issues in long-running applications, such as OutOfMemoryError when classloading was excessive.

7.2. Problems with PermGen in Java 7

Memory Leaks: Classloader leaks often caused the PermGen space to run out of memory. For example, in web applications where classes are frequently reloaded (like Tomcat), PermGen would fill up quickly.

Fixed Size: PermGen had a maximum size (-XX:MaxPermSize), and exceeding it caused an OutOfMemoryError (PermGen space) that could bring down the application.

7.3. Metaspace in Java 8 (What Changed?)

Java 8 replaced PermGen with Metaspace, which is not part of the Java heap but instead allocated from native memory. This change made class metadata storage more dynamic, as the Metaspace can grow as needed, reducing the risk of memory errors.

Key Improvements:

Unlimited Growth: Metaspace can dynamically grow until native memory is exhausted, unlike PermGen which had a fixed upper limit.

Reduced Memory Overhead: The JVM uses compressed class pointers in Metaspace, making metadata storage more efficient.

Example: Configuring Metaspace

java -XX:MetaspaceSize=128M -XX:MaxMetaspaceSize=256M -jar YourApp.jar

This sets the initial Metaspace size to 128 MB and limits the maximum size to 256 MB.

7.4. How Metaspace Works in Java 8 and Beyond

Metaspace resides in native memory, and class metadata is stored here. When the class metadata size exceeds a certain threshold, a garbage collection process is triggered to unload classes and free up Metaspace.

7.5. Configuring Metaspace

Developers can configure the Metaspace using JVM options:

-XX:MetaspaceSize: Sets the initial size of Metaspace.

-XX:MaxMetaspaceSize: Limits the maximum Metaspace size.

If not properly configured, Metaspace can consume excessive native memory, leading to system-level memory exhaustion.

8. The Threading Model in JVM

The Java threading model provides the foundation for concurrency in Java applications, allowing multiple threads to execute concurrently. The JVM manages these threads and interacts with the native OS threads to handle scheduling and execution. Understanding the threading model is crucial for optimizing performance, achieving thread safety, and avoiding concurrency issues.

8.1. JVM Thread Life Cycle

The life cycle of a thread in Java consists of several stages, from creation to termination. A thread moves between these states based on its actions and interaction with other threads.

1. New

State: The thread is created but not started yet. It exists as an object but hasn’t begun execution.

Example:

Thread thread = new Thread();

2. Runnable

State: After calling start(), the thread enters the runnable state. It is ready for execution but may not be running immediately due to the OS scheduler's decision.

Example:

thread.start(); // Now thread is in the Runnable state

3. Blocked

State: The thread is waiting to acquire a lock or monitor held by another thread. It cannot proceed until the lock is available.

Example:

    synchronized (object) {
        // Thread will block here if another thread holds the lock
    }

4. Waiting

State: The thread waits indefinitely for another thread to perform a specific action (e.g., calling notify()).

Example:

    synchronized (obj) {
        obj.wait(); // Thread enters waiting state until notified
    }

5. Timed Waiting

State: Similar to the waiting state but the thread waits for a specified amount of time.

Example:

Thread.sleep(2000); // Thread waits for 2 seconds

6. Terminated

State: The thread has completed its execution or was terminated due to an error or exception.

Example:

public void run() { System.out.println("Thread finished execution"); }
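Several of these states can be observed with Thread.getState(). The following is a minimal sketch under stated assumptions: the class name ThreadStatesDemo is hypothetical, and a CountDownLatch is used (instead of wait/notify) to hold the worker in the waiting state until the main thread releases it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

class ThreadStatesDemo {
    // Records the worker's state before start, while it waits, and after it finishes.
    static List<Thread.State> observe() {
        List<Thread.State> seen = new ArrayList<>();
        CountDownLatch gate = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                gate.await();                        // waits until the gate is opened
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        seen.add(worker.getState());                 // NEW: created but not started
        worker.start();
        try {
            while (worker.getState() != Thread.State.WAITING) {
                Thread.sleep(1);                     // poll until the worker is parked
            }
            seen.add(worker.getState());             // WAITING: blocked in await()
            gate.countDown();                        // let the worker finish
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        seen.add(worker.getState());                 // TERMINATED: run() has returned
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observe());
    }
}
```

The runnable and blocked states are harder to capture reliably because they depend on scheduling and lock timing, so the sketch leaves them out.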

8.2. Native OS Threads and JVM Threads

In Java, each thread maps to a native operating system (OS) thread. The JVM relies on the OS for thread scheduling and resource management. When you create a thread in Java, the JVM allocates memory and other resources, and then requests the OS to create a native thread.

Key points:

Thread Mapping: Java threads are implemented using the native threads of the operating system.

Multicore Processors: Native OS threads allow Java applications to run efficiently on multicore processors, enabling parallelism.

Thread Scheduling: The operating system’s scheduler determines which threads should execute at any given time.

8.3. Daemon vs Non-Daemon Threads

Java distinguishes between daemon and non-daemon threads. This distinction is critical for understanding how threads behave when the JVM shuts down.

Daemon Threads

Definition: These are background threads that provide support services, such as garbage collection or monitoring. Daemon status is independent of thread priority.

Behavior: The JVM does not wait for daemon threads to finish before shutting down.

Example:

    Thread daemonThread = new Thread(() -> {
        while (true) {
            // Background work
        }
    });
    daemonThread.setDaemon(true); // Mark as daemon (must be called before start())
    daemonThread.start();

Non-Daemon (User) Threads

Definition: These threads execute the main application logic. The JVM will not shut down until all non-daemon threads have completed their execution.

Behavior: The JVM remains running as long as at least one non-daemon thread is alive.

8.4. Synchronization in JVM

Synchronization is essential for handling shared resources between multiple threads. It prevents race conditions and ensures that only one thread can access a critical section of code at any given time.

8.4.1. Locks and Monitors

Java’s synchronisation mechanism relies on locks and monitors. Every Java object has an associated monitor lock, and the synchronized keyword is used to ensure that a thread holds the monitor before executing a synchronised block of code.

Locks: When a thread acquires a lock, no other thread can access the synchronised section of code until the lock is released.

Monitors: Every object in Java has a monitor. When a thread enters a synchronised block or method, it acquires the monitor associated with the object, preventing other threads from entering the same block.

Example of a synchronised method:

    public synchronized void increment() {
        count++;
    }

Synchronized block:

    synchronized (this) {
        count++;
    }
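To see why the monitor matters, the counter can be exercised from two threads at once. This is a small sketch with hypothetical names (SyncCounterDemo, runTwoThreads); because increment() is synchronized, no updates are lost and the final count equals the total number of increments.

```java
class SyncCounterDemo {
    private int count = 0;

    // Only one thread at a time can hold this object's monitor and run these methods.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    // Runs two threads that each increment a shared counter perThread times.
    static int runTwoThreads(int perThread) {
        SyncCounterDemo counter = new SyncCounterDemo();
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                counter.increment();
            }
        };
        Thread a = new Thread(work);
        Thread b = new Thread(work);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100_000)); // prints 200000
    }
}
```

Removing the synchronized modifier from increment() would make count++ a race, and the result would usually fall short of the expected total.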

8.4.2. Thread Safety Mechanisms

Java provides various mechanisms for ensuring thread safety:

Volatile Keyword: Ensures visibility of changes to variables across threads. When a variable is declared volatile, its value is always read from and written to the main memory, ensuring that threads see the latest value.

Example:

private volatile boolean flag = true;

Reentrant Locks: More flexible than synchronized blocks. ReentrantLock provides explicit lock control, offering features like fair locking and the ability to attempt lock acquisition without blocking.

Example:

    ReentrantLock lock = new ReentrantLock();
    lock.lock();
    try {
        // Critical section
    } finally {
        lock.unlock(); // Ensure lock is released
    }

Atomic Classes: Java provides classes like AtomicInteger, AtomicBoolean, and AtomicReference for atomic (thread-safe) operations on variables without using locks.

Example:

    AtomicInteger count = new AtomicInteger(0);
    count.incrementAndGet(); // Atomic increment operation

Concurrent Collections: Java's java.util.concurrent package provides thread-safe collections like ConcurrentHashMap, CopyOnWriteArrayList, and BlockingQueue.

Example of ConcurrentHashMap:

    ConcurrentHashMap<String, Integer> map = new ConcurrentHashMap<>();
    map.put("key", 1); // Thread-safe put operation
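The atomic classes and concurrent collections can be combined to count events from several threads without explicit locks. The sketch below is illustrative only; the class name LockFreeCountingDemo and the "even"/"odd" keys are assumptions, and ConcurrentHashMap.merge is used because it updates a key atomically.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

class LockFreeCountingDemo {
    // Returns {total increments, count for "even", count for "odd"}; perThread must be at least 2.
    static int[] count(int threads, int perThread) {
        AtomicInteger total = new AtomicInteger();
        ConcurrentHashMap<String, Integer> perKey = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    total.incrementAndGet();                    // atomic increment
                    String key = (i % 2 == 0) ? "even" : "odd";
                    perKey.merge(key, 1, Integer::sum);         // atomic per-key update
                }
            });
            workers[t].start();
        }
        try {
            for (Thread w : workers) {
                w.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new int[] { total.get(), perKey.get("even"), perKey.get("odd") };
    }

    public static void main(String[] args) {
        int[] r = count(4, 1000);
        System.out.println(r[0] + " " + r[1] + " " + r[2]); // prints 4000 2000 2000
    }
}
```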

8.5. Thread Management and Performance

Efficient management of threads is critical for performance in multithreaded applications. Java provides several mechanisms to manage thread lifecycles, scheduling, and optimisation.

1. Thread Pools

A thread pool reuses a fixed number of threads for executing tasks, minimizing the overhead of thread creation and destruction.

Fixed Thread Pool Example:

    ExecutorService executor = Executors.newFixedThreadPool(5);
    executor.submit(() -> {
        System.out.println("Thread: " + Thread.currentThread().getName());
    });
    executor.shutdown(); // Stop accepting new tasks; submitted tasks still run
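Thread pools are often used to compute results rather than just run side effects. The following sketch, with the hypothetical names PoolDemo and sumOfSquares, submits Callable tasks to a fixed pool and collects their results through Future objects.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class PoolDemo {
    // Computes 1^2 + 2^2 + ... + n^2, with each square calculated as a separate pool task.
    static int sumOfSquares(int n) {
        ExecutorService executor = Executors.newFixedThreadPool(4);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int value = i;
                futures.add(executor.submit(() -> value * value)); // submitted as a Callable
            }
            int sum = 0;
            for (Future<Integer> f : futures) {
                sum += f.get();                                    // waits for the task result
            }
            return sum;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            executor.shutdown();                                   // always release the pool threads
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(10)); // prints 385
    }
}
```

Shutting the pool down in a finally block matters because its worker threads are non-daemon and would otherwise keep the JVM alive.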

2. Fork/Join Framework

The Fork/Join framework, introduced in Java 7, is designed for parallel tasks that can be recursively split into subtasks. It uses a work-stealing algorithm, where idle threads can "steal" work from busy threads.

Example using Fork/Join:

    import java.util.concurrent.RecursiveTask;

    class SumTask extends RecursiveTask<Integer> {
        private final int[] array;
        private final int start, end;

        SumTask(int[] array, int start, int end) {
            this.array = array;
            this.start = start;
            this.end = end;
        }

        @Override
        protected Integer compute() {
            if (end - start <= 10) { // Small task: sum directly
                int sum = 0;
                for (int i = start; i < end; i++) {
                    sum += array[i];
                }
                return sum;
            } else { // Split task into two halves
                int mid = (start + end) / 2;
                SumTask leftTask = new SumTask(array, start, mid);
                SumTask rightTask = new SumTask(array, mid, end);
                leftTask.fork(); // Execute left task asynchronously
                int rightResult = rightTask.compute(); // Right task executes synchronously
                int leftResult = leftTask.join();
                return leftResult + rightResult;
            }
        }
    }
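A recursive task only runs in parallel once it is handed to a ForkJoinPool. The sketch below shows one way to do that; RangeSumDemo, RangeSum, and the threshold of 1,000 elements are illustrative assumptions, and the task is a self-contained variant of the same divide-and-conquer sum.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class RangeSumDemo {
    static class RangeSum extends RecursiveTask<Long> {
        private static final int THRESHOLD = 1_000; // below this size, sum sequentially
        private final int[] data;
        private final int from, to;

        RangeSum(int[] data, int from, int to) {
            this.data = data;
            this.from = from;
            this.to = to;
        }

        @Override
        protected Long compute() {
            if (to - from <= THRESHOLD) {
                long sum = 0;
                for (int i = from; i < to; i++) {
                    sum += data[i];
                }
                return sum;
            }
            int mid = (from + to) >>> 1;
            RangeSum left = new RangeSum(data, from, mid);
            RangeSum right = new RangeSum(data, mid, to);
            left.fork();                          // schedule the left half on the pool
            long rightSum = right.compute();      // compute the right half in this thread
            return left.join() + rightSum;        // wait for the left half and combine
        }
    }

    // Submits the root task to the common pool and waits for the result.
    static long sum(int[] data) {
        return ForkJoinPool.commonPool().invoke(new RangeSum(data, 0, data.length));
    }

    public static void main(String[] args) {
        int[] data = new int[10_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(sum(data)); // prints 50005000
    }
}
```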

3. Thread-Safe Design

Minimise Synchronisation: Use synchronisation only when necessary, and consider fine-grained locking or lock-free algorithms to reduce contention.

Use Concurrent Utilities: Favor concurrent collections and classes like AtomicInteger over manual synchronisation mechanisms.

4. Avoiding Common Threading Pitfalls

Deadlock: Avoid deadlock by ensuring that threads acquire locks in a consistent order.

Thread Starvation: Ensure that low-priority threads are not indefinitely delayed by high-priority threads.

Race Conditions: Use synchronization mechanisms to ensure that multiple threads don't modify shared resources concurrently in unsafe ways.
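The advice on consistent lock ordering can be sketched with a transfer between two accounts. The names below (LockOrderingDemo, Account, transfer) are hypothetical, and the sketch assumes every account has a unique id that defines the locking order.

```java
class LockOrderingDemo {
    static class Account {
        final int id;      // assumed unique; used to decide which lock is taken first
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Always locks the account with the smaller id first, whatever the transfer direction,
    // so two opposite transfers can never wait on each other in a cycle.
    static void transfer(Account from, Account to, long amount) {
        Account first = (from.id < to.id) ? from : to;
        Account second = (first == from) ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Runs opposite transfers concurrently and returns the final balances.
    static long[] run(int rounds) {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(a, b, 1);
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < rounds; i++) transfer(b, a, 1);
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new long[] { a.balance, b.balance };
    }

    public static void main(String[] args) {
        long[] r = run(10_000);
        System.out.println(r[0] + " " + r[1]); // prints 1000 1000
    }
}
```

If each thread instead locked its own "from" account first, the two threads could each hold one lock while waiting for the other, which is the classic deadlock.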

9. Java Bytecode

Java Bytecode is the intermediate representation of Java code that the JVM understands and executes. It is a platform-independent code that allows Java applications to be run on any device equipped with a JVM.

9.1. What is Bytecode?

Bytecode is a set of instructions generated by the Java compiler (javac) from Java source code. These instructions are not tied to any specific machine or OS, allowing the JVM to interpret and execute them on different platforms. Bytecode is saved in .class files, which are then loaded by the JVM at runtime.

- Example: Java code like int x = 10; is translated into a sequence of bytecode instructions such as bipush 10 and istore_1, where bipush pushes a byte value onto the stack and istore_1 stores the value into local variable slot 1.

9.2. How Bytecode is Generated

Java source code is compiled by the Java Compiler (javac) into bytecode. Each method, variable, and operation in the source code is translated into a corresponding bytecode instruction.

Compilation Process:

Java source code (.java) is written and compiled using javac.

The compiler produces .class files that contain the bytecode.

The JVM then reads and interprets or compiles this bytecode into native machine instructions.

Example:

    // Source code
    int x = 5;

Translated to bytecode:

    0: iconst_5 // Push the constant 5
    1: istore_1 // Store it in local variable 1

9.3. Bytecode Structure

A .class file consists of various components that encapsulate bytecode and metadata for class execution. Key sections include:

Magic Number: Identifies the file as a valid class file (0xCAFEBABE).

Version Information: The major and minor versions of the Java class file.

Constant Pool: Contains literals like strings, class names, and method references.

Access Flags: Defines the access level of the class (public, final, etc.).

Class and Interface Info: Information about the class and interfaces it implements.

Fields and Methods: Contains field and method definitions, including bytecode instructions for each method.

Attributes: Contains additional information like source file name, line number tables, etc.
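The first fields of this layout are easy to read by hand. The sketch below is a rough illustration with a hypothetical class name (ClassFileHeaderDemo); it assumes the class file of java.lang.Object is available as a resource, which is the case on standard JDKs, and reads the magic number followed by the minor and major version.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

class ClassFileHeaderDemo {
    // Returns {magic, minorVersion, majorVersion} read from the start of Object.class.
    static long[] readHeader() {
        try (InputStream raw = Object.class.getResourceAsStream("Object.class")) {
            if (raw == null) {
                throw new IllegalStateException("class file not available as a resource");
            }
            DataInputStream in = new DataInputStream(raw);
            long magic = in.readInt() & 0xFFFFFFFFL;  // 4 bytes, read as an unsigned value
            int minor = in.readUnsignedShort();       // 2 bytes: minor version
            int major = in.readUnsignedShort();       // 2 bytes: major version
            return new long[] { magic, minor, major };
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        long[] h = readHeader();
        System.out.printf("magic=0x%X minor=%d major=%d%n", h[0], h[1], h[2]);
    }
}
```

The magic value is always 0xCAFEBABE, while the major version depends on the JDK that compiled the class (for example, 52 corresponds to Java 8).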

9.4. Bytecode Execution in JVM

The JVM uses two approaches to execute bytecode:

Interpretation: The JVM reads and executes bytecode instructions one at a time. Code starts out being interpreted, which can be slower due to the overhead of interpretation.

Just-in-Time (JIT) Compilation: To optimize performance, the JVM compiles frequently executed bytecode into native machine code during runtime, eliminating the need for repeated interpretation.

Execution Flow:

Class Loading: Bytecode is loaded into memory by the ClassLoader.

Bytecode Verification: The bytecode is checked for correctness and security.

Execution: The JVM executes the bytecode via interpretation or JIT compilation.

9.5. Bytecode Verification

Before executing bytecode, the JVM performs a process called bytecode verification to ensure that the bytecode is valid and safe. The verification ensures that:

Type Safety: Variables are used only with their declared types.

Stack Management: The stack is used correctly, and no stack underflow or overflow occurs.

Access Control: Access to private, protected, and public members is honored.

Final Classes and Methods: Final classes are not subclassed, and final methods are not overridden.

The verifier ensures that the bytecode follows strict rules to avoid malicious or incorrect execution, preventing issues like memory corruption or crashes.

10. JVM Optimisations

The JVM uses several advanced optimisations to enhance the performance of Java applications. These optimisations are performed at runtime and include JIT compilation, profiling, and advanced code transformations.

10.1. JIT Compilation and Optimisation

The Just-In-Time (JIT) compiler dynamically compiles bytecode into native machine code at runtime, which improves performance by eliminating the need for interpretation during subsequent executions. The JIT compiler optimises frequently executed code, transforming it into highly efficient machine code.

HotSpot: The most widely used JVM implementation. Its JIT compilers identify "hot" code paths that are executed frequently and compile them into native code.

Adaptive Optimisation: The JIT continuously monitors application execution and recompiles code as necessary based on runtime profiling.

10.2. Profiling and Inlining

The JVM collects runtime data to optimise method execution:

Profiling: The JVM monitors method execution, branch behavior, and method call frequency.

Inlining: Frequently called small methods are inlined (i.e., the method call is replaced with the method’s code). This eliminates the overhead of method calls and can significantly speed up execution.

Example:

    public int add(int a, int b) {
        return a + b;
    }

    public void compute() {
        int sum = add(5, 10); // The add method could be inlined
    }

10.3. Escape Analysis

Escape analysis is an optimisation technique that analyses whether objects are used outside the scope of a method or thread. If an object does not "escape" the method or thread, the JVM can avoid a regular heap allocation for it, for example by keeping its fields in registers or on the stack (in HotSpot this takes the form of scalar replacement), improving memory allocation performance.

Example: In the code below, the temp object is only used locally and doesn’t escape the method, so the JVM may avoid allocating it on the heap.

    public void calculate() {
        MyObject temp = new MyObject(); // Escape analysis may eliminate this heap allocation
        temp.process();
    }

10.4. Code Elimination

The JVM performs dead code elimination, where code that is never executed or whose results are not used is removed from the compiled output.

Example:

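    public void unnecessaryCalculation() {
        int result = 100 * 2; // May be eliminated: the result is unused and the computation has no side effects
    }

An expression such as 100 / 0 would not qualify, because it throws an ArithmeticException that the JVM must preserve.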

10.5. Loop Unrolling and Other Optimisations

Loop unrolling is a technique where small loops are expanded into a series of repeated statements to reduce loop overhead and improve performance.

Example:

    // Original loop
    for (int i = 0; i < 4; i++) {
        sum += array[i];
    }

    // Unrolled loop
    sum += array[0];
    sum += array[1];
    sum += array[2];
    sum += array[3];

Other optimisations include constant folding, where constant expressions are precomputed at compile time, and profile-guided branch optimisation, where the JIT uses recorded branch frequencies to favour the likely path and prune branches that are never taken.

11. JVM Parameters and Tuning

Java applications can be fine-tuned for performance by adjusting JVM parameters, such as memory allocation and garbage collection behavior. Understanding these parameters is essential for optimising application performance.

11.1. JVM Parameter Types

JVM parameters can be broadly categorised as:

Standard Options: Common options like -version to check the JVM version.

Heap Management: Options for controlling the heap size (-Xms, -Xmx) and garbage collection.

Garbage Collection Tuning: Options to configure GC behavior, such as -XX:+UseG1GC.

Performance Tuning: Options for advanced performance tuning, such as -XX:+AggressiveOpts (deprecated in JDK 11 and removed in later releases, so avoid it on current JDKs).

11.2. Tuning Heap Size (-Xms and -Xmx)

Heap size directly affects memory management and application performance. The -Xms option sets the initial heap size, while -Xmx sets the maximum heap size.

Example:

java -Xms512m -Xmx4g MyApplication

Tuning heap size can improve garbage collection efficiency by reducing frequent garbage collection or preventing out-of-memory errors.
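To check what the heap flags actually resulted in, a program can query the Runtime API. The following is a small sketch; the class name HeapReport is an assumption for this example, and the numbers depend entirely on the flags and machine used.

```java
class HeapReport {
    static final long MIB = 1024L * 1024L;

    /** Summarises the current heap usage of this JVM in MiB. */
    static String report() {
        Runtime rt = Runtime.getRuntime();
        long max = rt.maxMemory();         // upper bound, normally governed by -Xmx
        long committed = rt.totalMemory(); // heap currently reserved by the JVM
        long used = committed - rt.freeMemory();
        return String.format("used=%d MiB, committed=%d MiB, max=%d MiB",
                used / MIB, committed / MIB, max / MIB);
    }

    public static void main(String[] args) {
        System.out.println(report());
    }
}
```

Running it once as `java -Xms512m -Xmx4g HeapReport.java` and once without flags shows how the committed and maximum values follow the options.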

11.3. Tuning Garbage Collection with JVM Options

Various JVM options can be used to tune the garbage collector:

Choosing a GC Algorithm:

-XX:+UseG1GC for the G1 garbage collector.

-XX:+UseZGC for low-latency applications.

Adjusting GC Threads:

-XX:ParallelGCThreads=4 to set the number of GC threads.

Tuning GC Pause Time:

-XX:MaxGCPauseMillis=200 to aim for a maximum pause time of 200 milliseconds (a soft goal, not a guarantee).
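When experimenting with these options, it helps to confirm which collectors are active and how much work they have done. The sketch below uses the standard GarbageCollectorMXBean API; the class name GcReport and the throwaway allocation loop are assumptions for illustration, and the collector names printed depend on the JVM and the flags chosen.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

class GcReport {
    /** Returns one summary line per garbage collector registered in this JVM. */
    static List<String> collectorSummaries() {
        List<String> lines = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            lines.add(gc.getName() + ": collections=" + gc.getCollectionCount()
                    + ", time=" + gc.getCollectionTime() + " ms");
        }
        return lines;
    }

    public static void main(String[] args) {
        // Produce some short-lived garbage so the collectors have work to report.
        for (int i = 0; i < 200_000; i++) {
            byte[] junk = new byte[1024];
        }
        System.gc(); // only a hint; the JVM may ignore it
        collectorSummaries().forEach(System.out::println);
    }
}
```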

11.4. Metaspace and Permanent Generation Tuning

Starting with Java 8, the PermGen space was replaced by Metaspace, which dynamically grows to accommodate class metadata. The following parameters control its size:

Initial Metaspace Size: -XX:MetaspaceSize=128m (the usage threshold at which a metadata-triggered GC first occurs)

Max Metaspace Size: -XX:MaxMetaspaceSize=256m
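Metaspace usage can also be read from inside the application. This is a hedged sketch using the standard MemoryPoolMXBean API; it assumes the JVM exposes a memory pool whose name contains "Metaspace" (as HotSpot does), and the class name MetaspaceReport is made up for the example.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

class MetaspaceReport {
    static final long KIB = 1024L;

    /** Describes the first memory pool whose name mentions Metaspace, if any. */
    static String describe() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();
            if (usage == null || !pool.getName().contains("Metaspace")) {
                continue;
            }
            // getMax() is negative when no limit (-XX:MaxMetaspaceSize) is defined.
            String max = usage.getMax() < 0 ? "unbounded" : (usage.getMax() / KIB) + " KiB";
            return pool.getName() + ": used=" + (usage.getUsed() / KIB) + " KiB, committed="
                    + (usage.getCommitted() / KIB) + " KiB, max=" + max;
        }
        return "no Metaspace pool reported by this JVM";
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```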

11.5. Profiling Tools for JVM Tuning

Several tools can help profile and tune Java applications:

VisualVM: A powerful tool for monitoring CPU, memory, and thread usage.

Java Flight Recorder (JFR): Collects detailed runtime information with minimal overhead.

JConsole: A GUI tool for real-time monitoring of JVM performance.

11.6. Advanced Tuning Techniques for Low-Latency Applications

For applications with low-latency requirements, consider the following techniques:

Use Low-Latency GC:

- Use ZGC or Shenandoah GC for minimal GC pauses.

Reduce Safepoint Pauses:

- Avoid full-GC safepoints triggered by explicit System.gc() calls by using -XX:+DisableExplicitGC.

Optimize Object Allocation:

- Avoid frequent object allocation by reusing objects or using stack allocation through escape analysis.

Tune Thread Synchronisation:

- Minimise synchronisation overhead by reducing contention and using more fine-grained locks.

12. Security Features in JVM

The Java Virtual Machine (JVM) has a robust set of security features that protect against potential vulnerabilities like unauthorized access, execution of malicious code, and security breaches. JVM security mechanisms aim to safeguard applications by regulating how classes are loaded, verified, and executed. These features are critical to ensuring that Java applications can run in potentially untrusted environments securely.

12.1. Class Loader Security

The ClassLoader is responsible for loading Java classes into the JVM. It plays a crucial role in security by ensuring that classes are only loaded from trusted sources and that there is no unauthorized modification of class definitions.

Class Loader Hierarchy: Java uses a parent-child delegation model for class loading. This hierarchical system ensures that core classes (e.g., the Java standard library) are always loaded by the bootstrap class loader, while application and third-party classes are loaded by the application class loader or by custom class loaders.

Bootstrap ClassLoader: Loads core Java classes (e.g., java.lang).

Extension ClassLoader: Loads extension libraries (replaced by the Platform ClassLoader in Java 9 and later).

Application ClassLoader: Loads user-defined classes from the classpath.

ClassLoader Restrictions:

Class loaders can impose security policies restricting the loading of certain classes or packages.

Because of parent delegation, a request for a core class such as java.lang.String is always resolved by the bootstrap class loader first, so trusted classes cannot be replaced by malicious ones with the same fully qualified name.

Example:

    ClassLoader classLoader = MyClass.class.getClassLoader();
    // A custom class loader can be configured to load classes only from specific directories

Custom Class Loaders and Security: Custom class loaders must follow strict security rules. For example, they can define custom logic to allow only specific classes or directories to be loaded, ensuring tighter control over what code gets executed in the JVM.
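The delegation hierarchy can be inspected at runtime by walking the parent chain of a class’s loader. The sketch below does exactly that; LoaderChain is a hypothetical class name, and the concrete loader class names printed are JDK implementation details that vary between versions.

```java
import java.util.ArrayList;
import java.util.List;

class LoaderChain {
    /** Lists the class loaders responsible for a type, from its own loader up to bootstrap. */
    static List<String> chainFor(Class<?> type) {
        List<String> chain = new ArrayList<>();
        ClassLoader loader = type.getClassLoader();
        while (loader != null) {
            chain.add(loader.getClass().getName());
            loader = loader.getParent();
        }
        chain.add("bootstrap"); // the bootstrap loader is represented as null in the API
        return chain;
    }

    public static void main(String[] args) {
        System.out.println("LoaderChain: " + chainFor(LoaderChain.class));
        System.out.println("String:      " + chainFor(String.class)); // prints [bootstrap]
    }
}
```

Core classes such as String report only the bootstrap loader, while an application class shows the full chain above it.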

12.2. Bytecode Verification

One of the key features of the JVM is bytecode verification, a security mechanism that ensures the bytecode is valid and safe to execute before it is run. This prevents malformed or malicious bytecode from exploiting the JVM, offering a second layer of protection after class loading.

Stages of Bytecode Verification:

Class File Format Check: The verifier ensures that the class file adheres to the correct format (e.g., valid magic numbers, version compatibility).

Bytecode Validity: Checks that bytecode instructions are valid and don’t break any rules of the JVM instruction set.

Operand Stack Management: Verifies that the operand stack is used correctly, preventing stack overflow or underflow.

Type Safety: Ensures that operations are performed on variables of the correct type.

Access Control: Prevents illegal access to private and protected members of classes.

Security Example: Bytecode verification prevents buffer overflow attacks, type confusion, and illegal stack manipulation, which are common exploits in lower-level languages.

Example: In this case, invalid bytecode that tries to store an int value as a double would be rejected by the bytecode verifier:

    // Bytecode verifier ensures type safety
    iload_1   // Push an int from local variable 1
    dstore_2  // Expects a double on the operand stack, so verification fails

12.3. Security Manager

The Security Manager is a core JVM component that enforces runtime security policies. It defines a security context that restricts what code can do based on predefined rules, controlling access to system resources like file systems, network sockets, and execution of external processes. Note that the Security Manager was deprecated for removal in Java 17 (JEP 411), so new code should not rely on it.

Policy-Based Security: The Security Manager uses a security policy file to define what actions different codebases can perform. It can restrict:

File access (read/write).

Network access (opening/closing sockets).

Execution of external commands.

Reflection and manipulation of private fields or methods.

Setting Up a Security Manager:

- A custom SecurityManager can be implemented by extending the SecurityManager class, and then installed using System.setSecurityManager.

Example:

    SecurityManager sm = new SecurityManager() {
        @Override
        public void checkRead(String file) {
            // Restrict read access to specific directories
            if (!file.startsWith("/allowed/directory")) {
                throw new SecurityException("Access Denied");
            }
        }
    };
    System.setSecurityManager(sm);

Typical Use Cases:

Applets: In earlier versions of Java, applets used the Security Manager to restrict file and network access.

Java Web Start: Applications can be restricted from accessing critical system resources.

12.4. JVM Sandboxing

JVM Sandboxing is a security mechanism that isolates potentially dangerous code and restricts its ability to interact with system resources or other parts of the application. This is particularly useful when running untrusted or third-party code, as in applets, Java Web Start, or cloud environments.

Key Features of JVM Sandboxing:

Isolated Execution Environment: Untrusted code runs in a restricted environment with limited access to resources.

Restricted Permissions: The sandbox restricts access to the filesystem, network, and other critical resources based on predefined policies.

ClassLoader Restrictions: Only trusted ClassLoaders can load system classes, while untrusted ClassLoaders are limited to application-specific or sandboxed classes.

Example: A web application hosted on a server might use sandboxing to prevent malicious scripts from accessing sensitive server resources.

Typical Uses:

Running Java Applets in a browser, where code is downloaded and run in a restricted environment.

Serverless Environments: When running in cloud or containerized environments, JVM sandboxing isolates tenant applications.

Real-World Example: In cloud platforms, untrusted third-party Java applications run in a sandboxed environment to ensure they do not access sensitive system files or perform unauthorized network operations.

12.5. Reflection and Security Concerns

Reflection is a powerful feature in Java that allows programs to inspect and modify their structure (methods, fields, classes) at runtime. However, it can also pose significant security risks because it allows access to private members, bypassing traditional access control mechanisms.

Reflection Features:

Inspecting Classes at Runtime: Retrieve class metadata, including constructors, methods, and fields.

Invoking Methods Dynamically: Invoke methods or change field values, even private ones, at runtime.

Security Concerns with Reflection:

Bypassing Access Control: Reflection can be used to change the value of private fields, invoke private methods, or access otherwise restricted members.

Potential for Exploits: Attackers can use reflection to manipulate the application’s internal state, bypass security checks, or tamper with object instances.

Security Example:

    Class<?> clazz = MyClass.class;
    Field field = clazz.getDeclaredField("privateField");
    field.setAccessible(true);     // Potentially dangerous, as it overrides access control
    field.set(myObject, newValue); // Modifying private field via reflection

Mitigating Reflection Risks:

Security Manager: The SecurityManager can restrict the use of reflection to access private fields or methods.

Code Reviews: Ensure that reflection is used judiciously and only where necessary.

Final Classes and Fields: Mark critical classes and fields as final to prevent unauthorized modifications.

Real-World Example: Many serialization frameworks (e.g., Gson) use reflection to instantiate and populate object fields dynamically, which can be dangerous if misused.
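For a complete, runnable version of the reflection risk described in this section, consider the sketch below. BankAccount and ReflectionDemo are hypothetical classes invented for the illustration; the point is only that a private field with no setter can still be overwritten from another class in the same module.

```java
import java.lang.reflect.Field;

class ReflectionDemo {
    /** Overwrites the private 'balance' field, bypassing normal access control. */
    static void overwriteBalance(BankAccount account, int newBalance) {
        try {
            Field field = BankAccount.class.getDeclaredField("balance");
            field.setAccessible(true); // suppresses the private access check
            field.setInt(account, newBalance);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("reflection failed", e);
        }
    }

    public static void main(String[] args) {
        BankAccount account = new BankAccount();
        overwriteBalance(account, 1_000_000);
        System.out.println(account.balance()); // prints 1000000
    }
}

final class BankAccount {
    private int balance = 100; // no setter: intended to be unchangeable from outside

    int balance() {
        return balance;
    }
}
```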

13. Evolution of JVM from Java 7 to Java 8 and Beyond

The JVM has undergone significant evolution across different Java versions, with changes aimed at improving performance, garbage collection efficiency, memory management, and support for modern programming constructs. This section will explore these changes in detail, starting from Java 7 to Java 17 (LTS).

13.1. JVM in Java 7

Java 7 brought several improvements to the JVM, focusing on better memory management, enhanced support for dynamic languages, and the introduction of a new garbage collector.

13.1.1. PermGen Space

In Java 7, the Permanent Generation (PermGen) space was still part of the heap memory, used to store class metadata such as classes, methods, and static variables. This part of the heap was managed separately from the rest, but it had some major limitations:

Size Restrictions: PermGen had a fixed maximum size (set with -XX:MaxPermSize), which meant that as more classes were loaded (especially in dynamic environments like application servers), memory could run out, leading to OutOfMemoryError: PermGen space.

Memory Leaks: Because PermGen was difficult to manage, it was prone to memory leaks, especially in environments where class unloading was not done properly, like when applications were frequently redeployed.

13.1.2. G1 Garbage Collector (Introduced)

Java 7 introduced the Garbage First (G1) Garbage Collector, a significant improvement in garbage collection designed to replace the older CMS (Concurrent Mark-Sweep) collector. G1 GC was designed for applications that required large heap sizes and more predictable garbage collection pauses.

Heap Division: G1 divides the heap into small regions rather than contiguous spaces for old and young generations, which makes it easier to collect garbage in smaller chunks and avoid long pauses.

Pause-Time Goals: G1 aims to meet user-defined pause-time goals, making it ideal for applications requiring low-latency GC behavior.

13.1.3. Invokedynamic Bytecode for JVM Languages

Java 7 introduced the invokedynamic bytecode instruction to support dynamic languages on the JVM, such as Groovy and JRuby. This was part of the Da Vinci Machine project, which aimed to improve the JVM’s ability to handle dynamic language invocations more efficiently.

Dynamic Method Invocation: This instruction allows for optimized method invocations in dynamic languages without needing the heavy reliance on reflection.

Impact on Performance: It reduces the overhead involved in dynamic method dispatch, improving the performance of dynamic languages on the JVM.

13.2. JVM in Java 8

Java 8 was a landmark release, not only due to language features like lambdas and streams but also because of significant changes in the JVM architecture and garbage collection.

13.2.1. Removal of PermGen, Introduction of Metaspace

In Java 8, the PermGen space was removed and replaced with Metaspace, which resides in native memory (outside the heap) rather than the JVM heap. This change resolved several limitations of the PermGen space:

Dynamic Sizing: Unlike PermGen, which had a fixed size, Metaspace can grow dynamically based on the application's needs.

Reduced Memory Leaks: Metaspace improves class metadata management and reduces the risk of memory leaks during class unloading in dynamic environments.

Configuration: Metaspace has its own configuration options like -XX:MetaspaceSize and -XX:MaxMetaspaceSize to control memory usage.

13.2.2. Default Garbage Collector Changes

While Java 8 continued to support the G1 garbage collector, the Parallel GC remained the default garbage collector. However, G1 became a popular choice for applications that needed more predictable performance and low-latency garbage collection behavior.

13.2.3. Lambda Expression Support and Its Impact on JVM

Java 8 introduced Lambda Expressions, allowing developers to write concise code that focuses more on logic than boilerplate. This new feature significantly impacted the JVM:

invokedynamic Call Sites: The compiler turns each lambda body into a private synthetic method and emits an invokedynamic instruction where the lambda is used. The implementing class is generated at runtime rather than at compile time, which keeps class files smaller.

Optimized Execution: The JVM optimizes lambda invocations through the use of invokedynamic, reducing the overhead associated with traditional anonymous inner classes.

13.3. JVM in Java 9

Java 9 introduced major changes to the JVM, most notably the introduction of the modular system (Project Jigsaw) and enhancements to garbage collection logging and configuration.

13.3.1. Modular System (Project Jigsaw)

The Java Platform Module System (JPMS) was introduced in Java 9, which split the JDK into modules, making it more scalable and maintainable:

Modularized JDK: The JDK itself was split into modules, allowing smaller custom runtime images to be built for applications with jlink.

Module Class Loader: The introduction of modules created a more granular class loading system, improving application security by preventing illegal access between modules.

13.3.2. Introduction of Unified GC Logging

Java 9 introduced Unified Garbage Collection Logging, which standardizes how garbage collection events are logged. This feature simplifies GC tuning and performance monitoring:

- Unified Syntax: It uses the -Xlog:gc option to standardize logging across all garbage collectors, making it easier for developers to tune and optimize GC behavior.

13.3.3. G1 as Default GC

Starting from Java 9, G1 Garbage Collector became the default garbage collector, replacing the older Parallel GC. G1 offers better performance for most applications, especially those requiring predictable pause times and low-latency operations.

13.4. JVM in Java 11

Java 11, as an LTS (Long-Term Support) release, brought new features focused on performance, memory management, and the introduction of new garbage collectors.

13.4.1. New Garbage Collectors (ZGC, Shenandoah)

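Two new garbage collectors aimed at optimising memory management in large-scale applications arrived around this release. ZGC was introduced in Java 11 as an experimental collector, and Shenandoah followed in JDK 12 (with backports in some JDK 11 builds); both became production-ready in JDK 15: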

ZGC (Z Garbage Collector): ZGC is a low-latency garbage collector that can handle multi-terabyte heaps with very low pause times (typically less than 10 milliseconds).

- Concurrent Processing: ZGC works concurrently with the application, avoiding long GC pauses.

Shenandoah GC: Shenandoah is another low-pause-time garbage collector, optimised for reducing garbage collection overhead, especially for applications with large heap sizes.

13.4.2. Removal of Java EE and CORBA Modules

As part of the JVM’s modularisation effort, Java 11 removed older, legacy modules such as Java EE (Enterprise Edition) APIs and CORBA (Common Object Request Broker Architecture). This made the JDK more lightweight and focused on core Java features.

- Impact on JVM: The removal of these modules allowed for a more streamlined JVM that was easier to maintain and deploy in cloud and containerized environments.

13.5. JVM in Java 17 (LTS)

Java 17, another LTS release, brought further improvements to garbage collection and introduced modern language features like sealed classes.

13.5.1. Further Improvements to GC (ZGC, G1)

Java 17 continued to improve the performance of the ZGC and G1 garbage collectors:

ZGC Enhancements: ZGC became production-ready in JDK 15, and JDK 16 moved thread-stack processing out of safepoints, further reducing GC pause times.

G1 Improvements: Additional enhancements in G1 to provide more predictable pause times and better memory management.

13.5.2. Sealed Classes and JVM Impact

Java 17 introduced Sealed Classes, which restrict which other classes or interfaces can extend or implement them. This has implications for the JVM:

Class Loading: The JVM enforces sealed class hierarchies at runtime, ensuring that only allowed classes can extend or implement sealed classes.

Security: This feature improves the design of class hierarchies, making them more secure and reducing the chances of unwanted subclassing.
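A short sketch of a sealed hierarchy follows. It requires Java 17 or later; the Shape, Circle, and Square types are hypothetical names for the illustration. The reflection calls at the end show that the permitted subclasses are recorded in the class file, which is what lets the JVM reject unlisted subtypes at load time.

```java
import java.util.ArrayList;
import java.util.List;

class SealedDemo {
    // Only Circle and Square may implement Shape.
    sealed interface Shape permits Circle, Square {}

    record Circle(double radius) implements Shape {}

    record Square(double side) implements Shape {}

    static double area(Shape shape) {
        if (shape instanceof Circle c) {
            return Math.PI * c.radius() * c.radius();
        }
        if (shape instanceof Square s) {
            return s.side() * s.side();
        }
        throw new IllegalStateException("unreachable for a sealed hierarchy");
    }

    /** Reads the permitted subclasses back from the runtime class metadata. */
    static List<String> permittedNames() {
        List<String> names = new ArrayList<>();
        for (Class<?> permitted : Shape.class.getPermittedSubclasses()) {
            names.add(permitted.getSimpleName());
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(area(new Square(2.0)));  // prints 4.0
        System.out.println(Shape.class.isSealed()); // prints true
        System.out.println(permittedNames());
    }
}
```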

14. How the JVM Handles Multithreading

The Java Virtual Machine (JVM) is designed to support multithreading and concurrency, which are fundamental to building scalable and efficient applications. Multithreading allows Java programs to execute multiple threads simultaneously, improving resource utilisation and performance, especially in multi-core processors. The JVM's threading model is tightly integrated with the underlying operating system, allowing the JVM to manage thread scheduling, synchronisation, and resource sharing effectively.

14.1. Threads and Concurrency

In the JVM, each thread represents a separate path of execution for the program, enabling multiple operations to occur simultaneously. Threads in Java are managed by the java.lang.Thread class, and they are either user threads or daemon threads.

Thread Lifecycle: A Java thread goes through different states, such as New, Runnable, Blocked, Waiting, Timed Waiting, and Terminated, as it executes. The JVM manages the transitions between these states based on the thread's activity.

Concurrency: The JVM handles concurrency by sharing resources among multiple threads. However, threads can interfere with each other when accessing shared resources. This interference can lead to issues like race conditions or deadlocks, which developers must prevent using the synchronisation and thread coordination mechanisms the JVM provides.

Java provides two main mechanisms to create threads:

Extending the Thread Class: By subclassing the Thread class and overriding the run method.

Implementing the Runnable Interface: By defining a Runnable object and passing it to a Thread object.

- Thread Pooling: The JVM also supports thread pooling, where a pool of reusable threads is maintained. Thread pools improve performance by reducing the overhead of thread creation and destruction. The Executor framework (java.util.concurrent) provides a way to manage thread pools.
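The three approaches above can be sketched side by side. The class names (ThreadCreationDemo, CountingThread) and the shared counter are assumptions made for the example; each task simply increments the counter so the final value shows how many tasks ran.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ThreadCreationDemo {
    // Option 1: subclass Thread and override run().
    static final class CountingThread extends Thread {
        private final AtomicInteger counter;

        CountingThread(AtomicInteger counter) {
            this.counter = counter;
        }

        @Override
        public void run() {
            counter.incrementAndGet();
        }
    }

    /** Runs one task per creation style plus three pooled tasks; returns how many ran. */
    static int runAll() {
        AtomicInteger counter = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Thread subclassed = new CountingThread(counter);
            // Option 2: pass a Runnable (here a lambda) to a plain Thread.
            Thread fromRunnable = new Thread(() -> counter.incrementAndGet());
            subclassed.start();
            fromRunnable.start();
            subclassed.join();
            fromRunnable.join();

            // Option 3: a pool reuses its two worker threads for three tasks.
            for (int i = 0; i < 3; i++) {
                pool.execute(() -> counter.incrementAndGet());
            }
            pool.shutdown();
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("pool did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow(); // no-op if the pool has already terminated
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("tasks completed: " + runAll()); // prints tasks completed: 5
    }
}
```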

14.2. Synchronization in JVM

Synchronization in the JVM ensures that only one thread can access a shared resource (e.g., an object or a variable) at a time. This is crucial for avoiding data inconsistency during concurrent execution. Java provides two main synchronization techniques:

Synchronized Blocks/Methods: A thread can acquire a lock on an object or a class before accessing a critical section of the code. Only one thread can execute a synchronised block or method at a time, while other threads trying to enter the same block are blocked until the lock is released.

Synchronized Methods:

    public synchronized void increment() {
        count++;
    }

In this example, the method increment() is synchronized, meaning only one thread can execute it on a given object at any time.

Synchronized Block:

    public void increment() {
        synchronized (this) {
            count++;
        }
    }

Here, only the block inside synchronized(this) is locked, allowing finer control over which parts of the code are synchronized.

Intrinsic Locks: The JVM uses intrinsic locks (also known as monitor locks) to implement synchronization. Each object in Java has an intrinsic lock that is automatically acquired by threads when they enter a synchronized block or method.
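To see the intrinsic lock doing its job, the sketch below has several threads increment a shared counter. The Counter class and the thread and iteration counts are made up for the example. Because increment() is synchronized, no updates are lost; removing the keyword would typically produce a smaller, varying total.

```java
class SyncCounterDemo {
    static final class Counter {
        private int count;

        // Both methods lock on 'this', so they never run concurrently on one Counter.
        synchronized void increment() {
            count++;
        }

        synchronized int get() {
            return count;
        }
    }

    /** Starts the given number of threads, each incrementing a shared counter. */
    static int countWithThreads(int threads, int incrementsPerThread) {
        Counter counter = new Counter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithThreads(4, 100_000)); // prints 400000
    }
}
```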

14.3. Thread States in JVM

Java threads go through several states during their lifecycle, and the JVM manages these states to coordinate thread execution and resource allocation. The major states include:

New: A thread is created but not yet started.

Runnable: A thread that is ready to run, or is currently running, is in the Runnable state. The operating system’s thread scheduler decides when it actually gets CPU time.

Blocked: A thread is blocked when it is waiting to acquire a lock to enter a synchronized block or method.

Waiting: A thread enters the waiting state when it is waiting for another thread to perform a particular action (like releasing a lock or notifying the thread).

Timed Waiting: A thread is in a timed waiting state when it is waiting for a specified period of time (e.g., Thread.sleep() or Object.wait(long timeout)).

Terminated: A thread has completed its execution and is no longer runnable.

The JVM manages transitions between these states depending on thread operations and resource availability.
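Some of these states can be observed directly with Thread.getState(). The following sketch records the state of a helper thread before it starts, while it sleeps, and after it finishes. The class name, the 30-second sleep, and the 5-second polling deadline are arbitrary choices for the illustration.

```java
import java.util.ArrayList;
import java.util.List;

class ThreadStateDemo {
    /** Records a thread's state before start, while sleeping, and after it has finished. */
    static List<Thread.State> observeStates() {
        List<Thread.State> seen = new ArrayList<>();
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(30_000);
            } catch (InterruptedException e) {
                // Interrupted by the observer below: fall through and finish.
            }
        });

        seen.add(sleeper.getState()); // NEW: created but not started
        sleeper.start();

        // Poll until the thread is inside sleep(), giving up after five seconds.
        long deadline = System.nanoTime() + 5_000_000_000L;
        while (sleeper.getState() != Thread.State.TIMED_WAITING
                && System.nanoTime() < deadline) {
            Thread.yield();
        }
        seen.add(sleeper.getState()); // TIMED_WAITING while sleeping

        sleeper.interrupt();
        try {
            sleeper.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        seen.add(sleeper.getState()); // TERMINATED after run() returns
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(observeStates());
    }
}
```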

14.4. Monitor Mechanism

The monitor mechanism in the JVM is responsible for implementing synchronized methods and blocks. A monitor is a synchronization construct that allows threads to have both mutual exclusion (only one thread can execute a critical section) and cooperation (threads must occasionally wait for others to complete).

Each object in Java has an associated monitor, and the JVM uses this monitor to implement synchronization. When a thread enters a synchronized block or method, it attempts to acquire the monitor associated with the object. If the monitor is not available (because another thread holds it), the thread is blocked until the monitor is released.

Locking and Unlocking: The JVM automatically handles the acquisition and release of locks:

Enter Monitor: The thread locks the monitor before entering the synchronized block.

Exit Monitor: The monitor is unlocked when the thread exits the block or method, either normally or due to an exception.

Wait-Notify Mechanism: Java provides the wait(), notify(), and notifyAll() methods to facilitate communication between threads. These methods are used in combination with synchronized blocks:

wait(): Causes the current thread to release the monitor and wait until another thread invokes notify() or notifyAll().

notify(): Wakes up a single thread waiting on the monitor.

notifyAll(): Wakes up all threads waiting on the monitor.
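As a minimal sketch of the wait-notify mechanism, the example below hands a single value from a producer thread to a consumer through a one-element slot. The Slot class and its methods are hypothetical. Note the while loops around wait(): a thread should re-check its condition after waking, since wake-ups can be spurious or apply to another waiter.

```java
class HandoffDemo {
    static final class Slot {
        private String value; // null means the slot is empty

        synchronized void put(String newValue) throws InterruptedException {
            while (value != null) {
                wait(); // releases the monitor until the slot is emptied
            }
            value = newValue;
            notifyAll(); // wake any thread waiting in take()
        }

        synchronized String take() throws InterruptedException {
            while (value == null) {
                wait(); // releases the monitor until a value arrives
            }
            String result = value;
            value = null;
            notifyAll(); // wake any thread waiting in put()
            return result;
        }
    }

    /** Sends a message through the slot from a producer thread and returns what arrived. */
    static String roundTrip(String message) {
        Slot slot = new Slot();
        Thread producer = new Thread(() -> {
            try {
                slot.put(message);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            String received = slot.take();
            producer.join();
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello")); // prints hello
    }
}
```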

14.5. Impact of Parallel and Concurrent Garbage Collectors on Threads

The JVM's garbage collectors (GC) play a significant role in managing memory during multithreaded execution. Both Parallel and Concurrent garbage collectors interact with threads differently, impacting application performance.

Parallel Garbage Collectors: In a parallel collector (such as HotSpot’s Parallel GC), multiple threads are used to perform garbage collection. However, while the garbage collection occurs, all application threads are paused, leading to "stop-the-world" pauses.

- Thread Impact: Parallel GC can cause noticeable pauses in application performance, especially when the heap size is large, as all threads are halted until GC is completed.

Concurrent Garbage Collectors: Concurrent collectors (like CMS or G1) perform garbage collection concurrently with the application threads, reducing the impact of pauses.

- Thread Impact: These collectors allow the application threads to continue running during garbage collection phases, significantly reducing the pause times. However, the trade-off is higher CPU usage as the JVM must allocate resources to both application threads and GC threads.

G1 GC and Threads: The G1 GC divides the heap into regions and performs garbage collection in parallel and concurrently. It uses thread-local data structures to ensure that garbage collection threads do not interfere with application threads, allowing smoother multithreading performance.

- Pause-Time Goals: G1 provides the ability to set pause-time goals (e.g., 100ms), allowing the JVM to adjust how aggressively garbage collection threads run, balancing between application thread performance and GC overhead.

ZGC and Shenandoah GC: These newer collectors (ZGC arrived in Java 11 and Shenandoah in Java 12, both initially experimental) focus on minimising GC pauses by handling the majority of garbage collection work concurrently. Both ZGC and Shenandoah aim to keep pause times very low (in the range of milliseconds) even for large heaps.

- Thread Impact: Application threads experience minimal impact from these GCs, as they are not paused for long during collection cycles, ensuring smooth execution in highly multithreaded environments.

15. Tools for Monitoring JVM

Monitoring the JVM is essential for maintaining application performance, diagnosing issues, and ensuring resource optimization. Below are key tools for monitoring the JVM, along with instructions on how to use them effectively.

15.1. JVisualVM

JVisualVM is a versatile tool that provides monitoring and troubleshooting capabilities for Java applications.

How to Use JVisualVM:

Installation:

JVisualVM came bundled with the JDK from JDK 6u7 through JDK 8; in those versions you can find it in the bin directory of the JDK installation.

For JDK 9 and later, download the standalone version (VisualVM) from the VisualVM website.

Launching JVisualVM:

Open a terminal or command prompt.

Navigate to the bin directory of your JDK installation.

Run the command:

jvisualvm

Connecting to a JVM:

Once JVisualVM is open, you’ll see a list of running JVMs on the left side under "Local" and "Remote" sections.

Select the JVM you want to monitor and double-click it to open a detailed view.

Monitoring:

Overview: Shows basic information about the JVM, including uptime, memory usage, and CPU load.

Threads: Displays live thread statistics, including active threads and their states.

Heap Dump: Capture and analyze heap dumps for memory analysis. Right-click the JVM in the left panel and select "Heap Dump."

Profiler: Use the CPU or memory profilers to analyze performance bottlenecks by clicking on the "Profile" tab.

Analyzing Results:

- After profiling, you can view details about method calls and memory usage to identify potential issues.

15.2. JConsole

JConsole is a built-in monitoring tool that provides information about the performance and resource consumption of Java applications.

How to Use JConsole:

Launching JConsole:

JConsole is included in the JDK. Open a terminal or command prompt.

Run the command:

jconsole

Connecting to a JVM:

In the JConsole dialog, you can connect to a local JVM or a remote JVM by providing the host and port number.

Choose the JVM you want to monitor from the list and click "Connect."

Monitoring Resources:

Memory Tab: View real-time memory usage, including heap and non-heap memory. You can trigger garbage collection manually here.

Threads Tab: Monitor thread activity, including the number of live threads, thread states, and deadlock detection.

MBeans Tab: Browse and interact with MBeans. This allows you to invoke operations and modify attributes dynamically.

Analyzing Data:

- Use the data provided to analyze memory usage patterns and thread states to diagnose performance issues.
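The figures JConsole shows on its Threads tab come from platform MXBeans, which an application can also query itself. The sketch below reads thread counts and checks for deadlocks through the standard ThreadMXBean; the class name ThreadReport and the output format are assumptions for the example.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;

class ThreadReport {
    /** Summarises live, peak, and daemon thread counts plus any detected deadlocks. */
    static String summary() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        // Both calls return null when no deadlocked threads are found.
        long[] deadlocked = threads.isSynchronizerUsageSupported()
                ? threads.findDeadlockedThreads()
                : threads.findMonitorDeadlockedThreads();
        int deadlockCount = (deadlocked == null) ? 0 : deadlocked.length;
        return "live=" + threads.getThreadCount()
                + ", peak=" + threads.getPeakThreadCount()
                + ", daemon=" + threads.getDaemonThreadCount()
                + ", deadlocked=" + deadlockCount;
    }

    public static void main(String[] args) {
        System.out.println(summary());
    }
}
```

Logging such a summary periodically can complement interactive tools when a GUI connection to the JVM is not available.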

15.3. Java Mission Control (JMC)

Java Mission Control (JMC) is a comprehensive monitoring and analysis tool designed for production environments.

How to Use Java Mission Control:

Installation:

JMC was bundled with the Oracle JDK from JDK 7u40 through JDK 10; in those versions you can find it in the bin directory.

For JDK 11 and later, download it from the Java Mission Control page.

Launching JMC:

Open a terminal or command prompt.

Run the command:

jmc

Connecting to a JVM:

Once JMC is open, you will see a list of available JVMs.

Select the JVM instance you want to monitor and click on it to connect.

Using Java Flight Recorder:

To start recording, go to the Flight Recorder section and click on "Start Recording."

You can choose predefined templates or configure custom settings for the recording duration, events to capture, and recording frequency.

Analyzing Flight Recordings:

After recording, you can open the recorded file in JMC for detailed analysis.

Use the various views (e.g., CPU, Memory, Threads) to analyze performance data and diagnose issues.

15.4. Java Flight Recorder (JFR)

Java Flight Recorder (JFR) is an advanced tool for capturing runtime data with minimal overhead.

How to Use Java Flight Recorder:

Enabling JFR:

To enable JFR, use the -XX:StartFlightRecording option when starting your Java application:

    java -XX:StartFlightRecording=duration=60s,filename=recording.jfr -jar your-application.jar

Viewing Recorded Data:

Once recording is completed, you can analyze the .jfr file using Java Mission Control (JMC).

Open JMC, and load the .jfr file to visualize and analyze the recorded data.

Real-Time Monitoring:

- You can also use JFR in real-time mode by connecting JMC to the JVM while it is running and starting a recording from the JMC interface.

Analyzing Events:

- Use the various analysis tools in JMC to interpret events related to CPU usage, memory allocation, garbage collection, and thread activity.

15.5. JVM Profiling Tools (YourKit, JProfiler)

YourKit and JProfiler are commercial profiling tools designed to provide in-depth performance analysis.

How to Use YourKit:

Installation:

- Download YourKit from the YourKit website and install it.

Integrating YourKit with Your Application:

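You can run YourKit in agent mode by adding the following JVM argument. The agent is a native library, not a JAR, and the exact file name depends on your platform and YourKit version:

-agentpath:/path/to/yourkit/bin/linux-x86-64/libyjpagent.so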

Starting Profiling:

Launch your application with the above argument, then open the YourKit GUI.

Connect to the JVM instance you want to profile.

Using Profiling Features:

CPU Profiling: Analyze method call counts and timings.

Memory Profiling: Identify memory usage patterns and find memory leaks.

Thread Profiling: Examine thread states and CPU usage per thread.

Analyzing Results:

- After profiling, YourKit provides visual reports to analyze performance bottlenecks and optimize your application accordingly.

How to Use JProfiler:

Installation:

- Download JProfiler from the JProfiler website and install it.

Integrating JProfiler with Your Application:

Start your Java application with the JProfiler agent by adding the following JVM argument (check the exact path for your platform and JProfiler version):

-agentpath:/path/to/jprofiler/bin/linux-x64/libjprofilerti.so

Starting Profiling:

- Open the JProfiler GUI and choose the appropriate profiling mode (CPU, Memory, or Threads) to connect to your running application.

Using Profiling Features:

CPU Views: Analyze call trees, hotspots, and method usage.

Memory Views: Analyze heap dumps, find memory leaks, and see object allocation.

Thread Views: Monitor thread states, identify contention issues, and deadlocks.

Analyzing Results:

- Use the detailed reports provided by JProfiler to identify performance issues and improve your code.

16. JVM in the Cloud and Microservices

With the rise of cloud computing and microservices, optimizing the JVM for these environments is crucial for resource efficiency and performance.

16.1. JVM Optimisation for Cloud Environments

How to Optimise the JVM in the Cloud:

Set Heap Size Appropriately:

- Use the -Xms and -Xmx flags to set the initial and maximum heap sizes based on your application’s memory requirements and the resources available in the cloud environment.

Choose the Right Garbage Collector:

- Depending on your application, choose an appropriate garbage collector (e.g., G1, ZGC) using -XX:+UseG1GC or -XX:+UseZGC. Adjust GC settings based on performance tests.

Use Container-Specific Settings:

- In cloud environments, JVMs can be container-aware. Use options like -XX:MaxRAMPercentage so that the JVM sizes its heap as a percentage of the memory allocated to the container instead of relying on a fixed value.
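
After changing any of these flags, it helps to confirm what the JVM actually picked up. The following is a small sketch using only standard java.lang.management and Runtime calls; the class name JvmSettingsReport is made up for this example. It prints the effective maximum heap, the active garbage collectors, and the number of CPUs the JVM believes it has.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

public class JvmSettingsReport {

    /** Maximum heap the JVM will try to use, in MiB (reflects -Xmx or MaxRAMPercentage). */
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    /** Names of the garbage collectors in use, as reported by the JVM itself. */
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    /** CPUs visible to the JVM; in a container this normally follows the CPU limit. */
    static int availableCpus() {
        return Runtime.getRuntime().availableProcessors();
    }

    public static void main(String[] args) {
        System.out.println("Max heap (MiB):      " + maxHeapMiB());
        System.out.println("Garbage collectors:  " + collectorNames());
        System.out.println("Available CPUs:      " + availableCpus());
    }
}
```

Running this once with and once without a flag such as -XX:+UseZGC or -Xmx512m shows quickly whether the setting took effect in your environment.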

16.2. Impact of Containerisation (Docker, Kubernetes) on JVM

How to Optimise the JVM for Containers:

Container Awareness:

Make sure you’re using a JVM version that is container-aware (Java 10 and later; container support was also backported to Java 8u191).

Monitor resource usage using tools like JConsole or VisualVM to ensure you’re staying within limits.

Adjust Resource Limits:

- Configure your Docker or Kubernetes resource limits to prevent over-allocation of CPU and memory, ensuring optimal performance.

Use JVM Options for Containers:

Utilise JVM options specifically for containers to enhance performance. For example:

-XX:+UseContainerSupport -XX:MaxRAMPercentage=75.0
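
To see what a percentage setting means in practice, here is a rough back-of-the-envelope sketch. The class and method names are hypothetical, and the calculation is only an approximation: the real JVM may round the value for alignment and applies different rules to very small memory limits.

```java
public class HeapBudget {

    /** Approximate max heap for a container memory limit and a MaxRAMPercentage value. */
    static long expectedMaxHeapBytes(long containerLimitBytes, double maxRamPercentage) {
        if (containerLimitBytes <= 0) {
            throw new IllegalArgumentException("containerLimitBytes must be positive");
        }
        if (maxRamPercentage <= 0 || maxRamPercentage > 100) {
            throw new IllegalArgumentException("maxRamPercentage must be in (0, 100]");
        }
        return (long) (containerLimitBytes * (maxRamPercentage / 100.0));
    }

    public static void main(String[] args) {
        long limit = 512L * 1024 * 1024; // container memory limit of 512 MiB (example value)
        long heap = expectedMaxHeapBytes(limit, 75.0);
        System.out.println(heap / (1024 * 1024) + " MiB"); // prints "384 MiB"
    }
}
```

The remaining 25% is not wasted: the JVM also needs memory outside the heap for metaspace, thread stacks, the code cache, and direct buffers, which is why setting the percentage close to 100 tends to get containers killed for exceeding their limit.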

16.3. Optimizing JVM in Microservices Architectures

How to Optimize JVM in Microservices:

Stateless Services:

- Design microservices to be stateless whenever possible to make them easily scalable and reduce JVM memory overhead.

Service-Specific JVM Settings:

- Tune each microservice’s JVM settings based on its specific resource requirements and usage patterns.

Monitoring:

- Use monitoring tools like JMC or JProfiler to track performance and make data-driven decisions about JVM optimizations.
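
Alongside external tools, each service can expose a few JVM numbers itself. The sketch below is one possible shape for that, assuming a hypothetical JvmMetricsSnapshot class; how the values are published (logs, a metrics endpoint, and so on) is left out. It captures heap usage and accumulated GC activity through the standard management beans.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

public class JvmMetricsSnapshot {
    final long heapUsedBytes;
    final long heapCommittedBytes;
    final long gcCount;
    final long gcTimeMillis;

    private JvmMetricsSnapshot(long used, long committed, long gcCount, long gcTimeMillis) {
        this.heapUsedBytes = used;
        this.heapCommittedBytes = committed;
        this.gcCount = gcCount;
        this.gcTimeMillis = gcTimeMillis;
    }

    /** Reads current heap usage and total GC count/time since JVM start. */
    static JvmMetricsSnapshot capture() {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        long count = 0;
        long time = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Both getters return -1 when the collector does not report the value.
            count += Math.max(0, gc.getCollectionCount());
            time += Math.max(0, gc.getCollectionTime());
        }
        return new JvmMetricsSnapshot(heap.getUsed(), heap.getCommitted(), count, time);
    }

    @Override
    public String toString() {
        return "heapUsed=" + heapUsedBytes + "B, heapCommitted=" + heapCommittedBytes
                + "B, gcCount=" + gcCount + ", gcTime=" + gcTimeMillis + "ms";
    }

    public static void main(String[] args) {
        System.out.println(capture());
    }
}
```

Comparing snapshots taken a fixed interval apart gives a simple view of how much time a given service spends in garbage collection, which is a useful input when tuning its heap size or collector.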

16.4. Using JVM with Serverless (AWS Lambda)

How to Optimise the JVM in Serverless Architectures:

Cold Start Optimisation:

Reduce cold start times by minimising the size of the deployed Lambda function and its dependencies.

Use layers for common libraries to optimise cold starts.

Memory Configuration:

- Allocate the appropriate amount of memory to the Lambda function. The CPU power scales with the memory, so balance memory allocation with expected load.

JVM Initialisation:

- Keep the JVM warm where possible, for example with provisioned concurrency, so that subsequent invocations reuse an already-initialised execution environment instead of paying the startup cost again.

Use Event-Driven Patterns:

- Design Lambda functions to respond to events, using the JVM's capabilities to handle asynchronous processing efficiently.
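
One common way to benefit from a warm JVM is to create expensive objects once, outside the handler method, so that later invocations in the same execution environment reuse them. The following is a simplified sketch under stated assumptions: ExpensiveClient is a hypothetical stand-in for something like an SDK client or parsed configuration, and the handler is a plain method so the example runs without any AWS dependency. In a real function the class would typically implement the RequestHandler interface from the aws-lambda-java-core library.

```java
import java.util.Locale;
import java.util.concurrent.atomic.AtomicInteger;

public class WarmHandlerSketch {

    // Counts how often the expensive setup ran (for demonstration only).
    static final AtomicInteger INIT_COUNT = new AtomicInteger();

    /** Hypothetical stand-in for a dependency that is slow to construct. */
    static final class ExpensiveClient {
        ExpensiveClient() {
            INIT_COUNT.incrementAndGet();
        }

        String process(String input) {
            return input.toUpperCase(Locale.ROOT);
        }
    }

    // Created once when the class is loaded, i.e. during the cold start.
    private static final ExpensiveClient CLIENT = new ExpensiveClient();

    /** Handler method: does only per-request work and reuses the shared client. */
    public String handleRequest(String input) {
        return CLIENT.process(input);
    }

    public static void main(String[] args) {
        WarmHandlerSketch handler = new WarmHandlerSketch();
        System.out.println(handler.handleRequest("hello")); // prints "HELLO"
        System.out.println(handler.handleRequest("again")); // prints "AGAIN"
        System.out.println("Client initialisations: " + INIT_COUNT.get()); // prints 1
    }
}
```

The cold start still pays for the initialisation once, so this pattern works best together with a small deployment package and sensible memory settings.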

Conclusion

The Java Virtual Machine (JVM) plays a critical role in the Java ecosystem, serving as the backbone that enables platform independence, optimizes application performance, and enhances security. From understanding its architecture and memory management to exploring the intricacies of garbage collection and multithreading, this comprehensive exploration has provided insights into how the JVM operates and evolves.

Moreover, as cloud computing and microservices architectures continue to dominate the software development landscape, the JVM has adapted to meet new demands, offering optimisations that leverage modern technologies like containerization and serverless computing. The tools available for monitoring and tuning the JVM further empower developers to ensure that their applications run efficiently and securely.

By mastering the various aspects of the JVM covered in this article, developers can not only write better Java applications but also contribute to the ongoing evolution of this vital component of the Java ecosystem. As we move forward, understanding the JVM's architecture, its optimisation techniques, and its integration with modern technologies will be key to unlocking the full potential of Java applications in a rapidly changing environment.


Written by