Why Job Queues In Your System Architecture?

Usman Soliu

As a backend engineer, you’ve likely faced tasks that take a long time to complete, causing delays in system response or even timeouts. These tasks don't always require immediate execution, but handling them within the same request can slow everything down.

As I grew as a backend engineer, I ran into the same problem. Generating large reports or pulling detailed account statements, for example, often led to timeouts. Why? Because I was processing them inside the request instead of offloading them to be handled in the background. This is where job/worker queues come in to save the day.

By moving these long-running tasks to the background through a queue, you free your system to handle other requests quickly and efficiently. This improves performance and also the user experience: the system responds promptly instead of making users wait for the work to finish.

In this article, I’ll walk you through the concept of job/worker queues, how Redis can be used as the backbone for this system, and how introducing queues into your system design can significantly improve backend performance.

Understanding Queuing in System Design

Before diving into job queues specifically, it’s important to understand the broader concept of queues and their role in system design.

In computer science, a queue is a data structure that works on a first-in, first-out (FIFO) basis. Think of it as a line of people waiting for a service: the first person to arrive is the first to be served. This simple mechanism lets systems process tasks in an orderly way, in the order they arrive.
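To make FIFO concrete, here is a minimal in-memory queue in JavaScript. It only illustrates the data structure (the class name `FifoQueue` is hypothetical); a job queue adds persistence and workers on top of this same idea.

```javascript
// A minimal FIFO queue: items leave in the same order they arrived.
// Uses a head index instead of Array.prototype.shift(), which can be O(n).
class FifoQueue {
  constructor() {
    this.items = [];
    this.head = 0; // index of the next item to dequeue
  }

  enqueue(item) {
    this.items.push(item);
  }

  dequeue() {
    if (this.head >= this.items.length) return undefined; // queue is empty
    const item = this.items[this.head];
    this.items[this.head] = undefined; // drop the reference to the dequeued item
    this.head += 1;
    return item;
  }

  get size() {
    return this.items.length - this.head;
  }
}

// Usage: the first person in line is served first.
const line = new FifoQueue();
line.enqueue('Ada');
line.enqueue('Grace');
line.enqueue('Linus');
console.log(line.dequeue()); // Ada
console.log(line.size); // 2
```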

There are several types of queues, each suited to different use cases:

Message Queues: Facilitate communication between distributed systems by allowing asynchronous message exchange without direct dependency.

Example: Microservices using RabbitMQ or Apache Kafka.

Job Queues: Offload time-consuming tasks to background workers, preventing them from blocking the main application.

Example: Sending emails, processing payments or generating reports in the background.

Task Queues: Manage smaller, immediate tasks that can be processed in parallel.

Example: Resizing images or updating profiles in bulk.

Priority Queues: Handle tasks based on priority, ensuring critical tasks are processed first.

Example: Triggering alerts for system failures before handling routine events.

Each type enhances system performance by decoupling tasks and optimizing processing time.
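Priority queues are the one type above that deliberately departs from FIFO order. Below is a minimal sketch, assuming that lower numbers mean higher priority (BullMQ's `priority` job option follows a similar lower-is-more-urgent convention) and that jobs with equal priority should keep their arrival order. `PriorityJobQueue` is a hypothetical name, and a binary heap would scale better than the sorted insert used here to keep the example short.

```javascript
// Minimal priority queue: lower `priority` values are dequeued first.
// Jobs with the same priority keep their arrival (FIFO) order.
class PriorityJobQueue {
  constructor() {
    this.jobs = [];
  }

  enqueue(name, priority) {
    // Insert before the first job with a strictly larger priority value,
    // so equal-priority jobs stay in arrival order.
    let i = this.jobs.findIndex(job => job.priority > priority);
    if (i === -1) i = this.jobs.length;
    this.jobs.splice(i, 0, { name, priority });
  }

  dequeue() {
    return this.jobs.shift(); // undefined when empty
  }
}

// Usage: the failure alert jumps ahead of routine work.
const pq = new PriorityJobQueue();
pq.enqueue('send-newsletter', 5);
pq.enqueue('system-failure-alert', 1);
pq.enqueue('rebuild-search-index', 5);
console.log(pq.dequeue().name); // system-failure-alert
console.log(pq.dequeue().name); // send-newsletter
```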

What Are Job Queues and Why Do You Need Them?

Job queues allow you to offload time-consuming tasks to be processed asynchronously, meaning they run in the background, freeing up your system to handle other requests. This is crucial for tasks like:

Sending emails

Generating reports

Processing payments

Importing/exporting data

Think of it this way: you don’t want a user waiting on a page while an email is sent or a large report is generated. Instead, you can queue the task and let your system respond immediately, then complete the task later in the background.
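Here is the pattern in miniature: the request handler only records the job and returns at once, and a separate worker step does the slow work later. This is a framework-free sketch; `handleReportRequest`, `runWorkerOnce` and the `202 Accepted` response shape are assumptions for illustration, and a plain array stands in for a real queue such as Redis.

```javascript
// In-memory stand-in for a job queue (Redis would play this role in production).
const jobQueue = [];
let nextJobId = 1;

// Request handler: enqueue the slow task and respond immediately.
function handleReportRequest(userId) {
  const job = { id: nextJobId++, type: 'generate-report', userId };
  jobQueue.push(job);
  // 202 Accepted signals "received, will be processed later".
  return { status: 202, body: { jobId: job.id } };
}

// Worker step: takes the oldest job and does the slow work outside the request.
function runWorkerOnce(processJob) {
  const job = jobQueue.shift();
  if (!job) return null; // nothing to do
  return processJob(job);
}

// Usage
const response = handleReportRequest(12345);
console.log(response); // { status: 202, body: { jobId: 1 } }
const result = runWorkerOnce(job => `report for user ${job.userId} is ready`);
console.log(result); // report for user 12345 is ready
```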

More Real-world Scenarios

Imagine the following scenarios where job queues can make a significant difference:

Large E-commerce Platform During Peak Sales: Picture a large e-commerce platform where users place orders during peak sales events such as Black Friday. Instead of doing every follow-up step inside the checkout request, the system can offload tasks like sending confirmation emails, updating inventory, and notifying shipping services to the background. This keeps the platform responsive for users who are still browsing or placing orders.

User Registration with Email Verification: When users register for a new account, a verification email needs to be sent. Without a queue, the system might make users wait while the email is sent. By queuing the email task, the system can respond instantly with a welcome message, while the email is sent in the background, improving the user experience.

Financial Services App for Generating Account Statements: In a banking or fintech application, users may request detailed account statements that span several months or years. Generating these reports is resource-heavy, but if the task is queued, users can keep using other features of the app while the report is prepared in the background, and the servers handling requests stay free to respond quickly to other users.
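In the e-commerce scenario, the checkout request can fan out into several independent background jobs, one per follow-up task. A minimal in-memory sketch; the queue names (`emails`, `inventory`, `shipping`) and the `placeOrder` function are hypothetical, and each array stands in for a separate Redis-backed queue with its own workers.

```javascript
// One in-memory array per queue; in production each would be a Redis-backed queue.
const queues = { emails: [], inventory: [], shipping: [] };

// Checkout handler: save the order (omitted here), enqueue follow-up work, return at once.
function placeOrder(order) {
  queues.emails.push({ type: 'order-confirmation', orderId: order.id, to: order.email });
  queues.inventory.push({ type: 'update-inventory', orderId: order.id, items: order.items });
  queues.shipping.push({ type: 'notify-shipping', orderId: order.id });
  return { status: 202, orderId: order.id }; // the user is not kept waiting
}

// Usage
const reply = placeOrder({ id: 'A-1001', email: 'buyer@example.com', items: ['sku-42'] });
console.log(reply); // { status: 202, orderId: 'A-1001' }
console.log(Object.keys(queues).map(name => `${name}: ${queues[name].length}`));
// [ 'emails: 1', 'inventory: 1', 'shipping: 1' ]
```

Keeping each kind of work on its own queue means a slow email provider does not hold up inventory updates, and each queue can be given as many workers as its load requires.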

Redis as the Backbone for Job Queues

Redis, an in-memory data structure store, is well suited to building job/worker queues. Why? Because Redis is:

Fast: Redis stores data in memory, making it quick to enqueue and dequeue jobs.

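Reliable: Redis can persist data to disk (RDB snapshots and/or the append-only file, AOF), so with persistence configured, queued jobs survive a restart or crash. Depending on the fsync policy, the most recent writes may still be lost (up to about a second with the common everysec setting).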

Scalable: Redis handles a high volume of queue operations, and many workers running on different servers can consume jobs from the same Redis instance.

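Redis list commands make it easy to implement a basic FIFO queue: a producer adds jobs with LPUSH (insert at the head of a list), and workers take them from the other end with RPOP, or with BRPOP, which blocks until a job is available. (Pairing LPUSH with LPOP instead gives last-in, first-out behaviour.) However, using Redis with some purpose-built tools will make your life as a backend engineer even easier.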
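To see why LPUSH pairs with RPOP/BRPOP, here is an in-memory simulation of the list semantics in plain JavaScript. It only mimics push-at-the-head and pop-from-either-end on a single key; it does not talk to Redis, does not model BRPOP's blocking wait, and the `RedisListSim` name is hypothetical. Where Redis returns nil for an empty list, the simulation returns `null`.

```javascript
// Simulates the semantics of Redis list commands on a single key.
class RedisListSim {
  constructor() {
    this.list = [];
  }

  // LPUSH: insert at the head (left) and return the new length, as Redis does.
  lpush(value) {
    this.list.unshift(value);
    return this.list.length;
  }

  // RPOP: remove and return the tail (right) element, or null if empty.
  rpop() {
    return this.list.length ? this.list.pop() : null;
  }

  // LPOP: remove and return the head (left) element, or null if empty.
  lpop() {
    return this.list.length ? this.list.shift() : null;
  }
}

// A producer pushes three jobs to the head...
const jobs = new RedisListSim();
jobs.lpush('job-1');
jobs.lpush('job-2');
jobs.lpush('job-3');

// ...and a worker popping from the tail receives them oldest-first (FIFO).
console.log(jobs.rpop()); // job-1
// Popping from the head instead returns the newest job (LIFO, like a stack).
console.log(jobs.lpop()); // job-3
```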

Now that we've seen why Redis works well as the backbone for job queues, let’s explore how purpose-built tools simplify job management in Node.js.

Tools Built on Redis for Job Queues

Let’s look at two popular tools built on Redis that will simplify working with job queues in your backend system:

1. BullMQ (for Node.js)

One of the most powerful and widely used job queue libraries is BullMQ, built on Redis. It’s great for handling tasks asynchronously in Node.js applications. Whether you need to send notifications, resize images, or schedule tasks, BullMQ provides the tools to do it efficiently.

Here’s how BullMQ can improve your system:

Job retries: Automatically retry failed tasks.

Delayed jobs: Schedule tasks to be executed later, not immediately.

Concurrency: Process multiple jobs in parallel, reducing bottlenecks.
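Delayed jobs are typically implemented by storing each job with the time at which it becomes runnable and only handing out jobs whose time has come. The following is a minimal sketch of that idea, not BullMQ's internals; `DelayedQueue` and the explicit `now` argument (passed in so the example is deterministic) are assumptions. In BullMQ itself you request a delay with the `delay` option (in milliseconds) when calling `queue.add()`.

```javascript
// Minimal delayed-job queue: each job carries the time (ms) at which it may run.
class DelayedQueue {
  constructor() {
    this.jobs = []; // kept sorted by runAt, earliest first
  }

  add(name, delayMs, now) {
    const job = { name, runAt: now + delayMs };
    // Insert before the first job that is due later, keeping the list sorted.
    let i = this.jobs.findIndex(j => j.runAt > job.runAt);
    if (i === -1) i = this.jobs.length;
    this.jobs.splice(i, 0, job);
  }

  // Remove and return every job whose runAt is at or before `now`.
  takeDue(now) {
    let count = 0;
    while (count < this.jobs.length && this.jobs[count].runAt <= now) count++;
    return this.jobs.splice(0, count);
  }
}

// Usage
const delayed = new DelayedQueue();
const t0 = 0;
delayed.add('send-reminder', 60_000, t0); // run in 1 minute
delayed.add('send-receipt', 0, t0); // run right away
console.log(delayed.takeDue(t0).map(j => j.name)); // [ 'send-receipt' ]
console.log(delayed.takeDue(t0 + 60_000).map(j => j.name)); // [ 'send-reminder' ]
```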

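import { Queue } from 'bullmq';

// Redis connection (assumes a local server on the default port; adjust for your setup)
const connection = { host: 'localhost', port: 6379 };

// Create a new queue
const reportQueue = new Queue('reportQueue', { connection });

// Add a job to the queue. add() returns a promise that resolves to the created job;
// top-level await requires running this file as an ES module (e.g. a .mjs file)
const job = await reportQueue.add('generate-report', { userId: 12345 });
console.log(`Queued job ${job.id}`);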

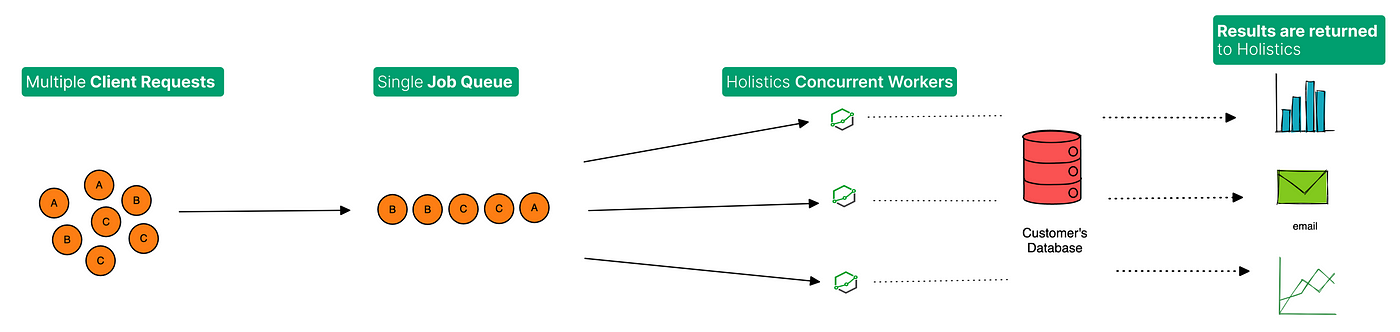

With BullMQ, you can run multiple workers that consume jobs from the same queue. Workers run in parallel and process jobs independently, so you can scale out by adding workers, and backlogs clear faster without overloading your main application.

import { Worker } from 'bullmq';

// Workers connect to the same Redis instance as the queue
const connection = { host: 'localhost', port: 6379 };

// Create a worker to process jobs from the queue
const worker1 = new Worker('reportQueue', async job => {
  // Generate the report for job.data.userId here
  console.log(`Worker 1 is processing job ${job.id}`);
}, { connection });

// Create a second worker to consume from the same queue
const worker2 = new Worker('reportQueue', async job => {
  // Generate the report for job.data.userId here
  console.log(`Worker 2 is processing job ${job.id}`);
}, { connection });

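In this example, worker1 and worker2 both consume jobs from reportQueue. Each job is handed to only one worker, so when the queue has many jobs the workers split the load, improving processing time and efficiency. In production, workers usually run in separate processes or on separate servers, and a single BullMQ worker can also process several jobs at once through its concurrency option.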
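The load-splitting behaviour can be illustrated without Redis: several async workers pull from one shared in-memory queue, and each job is taken by exactly one worker. This is a simplified single-process sketch of the idea, not how BullMQ coordinates workers (it relies on Redis to hand each job to one worker across processes); `runWorkers` and the simulated `sleep` are hypothetical helpers.

```javascript
// Resolve after `ms` milliseconds (stands in for slow work such as building a report).
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Start `workerCount` workers that share one queue. Each worker repeatedly takes the
// next job; because this code runs on a single JavaScript thread, shift() never hands
// the same job to two workers.
async function runWorkers(queue, workerCount, processJob) {
  const processedBy = {}; // jobId -> worker number
  const worker = async id => {
    let job;
    while ((job = queue.shift()) !== undefined) {
      await processJob(job);
      processedBy[job.id] = id;
    }
  };
  const workers = [];
  for (let id = 1; id <= workerCount; id++) workers.push(worker(id));
  await Promise.all(workers);
  return processedBy;
}

// Usage: six 50 ms jobs shared by two workers finish in roughly 150 ms rather than 300 ms.
const reportJobs = [1, 2, 3, 4, 5, 6].map(id => ({ id }));
const started = Date.now();
runWorkers(reportJobs, 2, () => sleep(50)).then(processedBy => {
  console.log(processedBy); // which worker handled each job
  console.log(`took about ${Date.now() - started} ms`);
});
```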

2. Bee-Queue (Node.js)

Bee-Queue is another lightweight, Redis-backed job queue for Node.js. It’s fast, with low overhead, making it ideal for simple use cases where you don’t need the extra complexity of tools like Bull.

While it’s simpler, Bee-Queue still gives you the essentials: delayed jobs, job retries, and concurrency. It works well for small-scale applications or services where simplicity is key.

Why Job Queues Improve Backend Performance

Integrating job queues into your system architecture has several advantages:

Improved Response Times: Offloading tasks to a queue ensures that your API responds quickly to user requests, while background workers handle the heavy lifting behind the scenes.

Scalability: Redis-based job queues allow you to scale horizontally. You can add more workers to handle more jobs as your system grows, without adding pressure on your core application.

Failure Handling: Tools like BullMQ retry failed jobs automatically, up to a configured number of attempts. A job that still fails is moved to the queue's set of failed jobs, which effectively serves as a dead-letter queue (DLQ) where it can be reviewed and retried manually.
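The retry-then-dead-letter flow can be sketched in a few lines. This illustrates the pattern, not BullMQ's implementation: `processWithRetries`, `maxAttempts` and the plain `deadLetter` array are assumptions, and retries here run immediately rather than after a backoff delay. In BullMQ you set the number of tries with the `attempts` job option.

```javascript
// Try a job up to `maxAttempts` times; if every attempt fails,
// move it to the dead-letter list for manual review.
async function processWithRetries(job, handler, maxAttempts, deadLetter) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await handler(job, attempt); // success: stop retrying
    } catch (err) {
      lastError = err;
    }
  }
  deadLetter.push({ job, error: lastError.message, attempts: maxAttempts });
  return undefined;
}

// Usage: a flaky task succeeds on its 2nd try; a broken one ends up in the DLQ.
const deadLetter = [];
const flaky = async (job, attempt) => {
  if (attempt < 2) throw new Error('temporary network error');
  return `sent email to ${job.to}`;
};
const broken = async () => {
  throw new Error('invalid address');
};

(async () => {
  console.log(await processWithRetries({ to: 'a@example.com' }, flaky, 3, deadLetter));
  await processWithRetries({ to: 'not-an-email' }, broken, 3, deadLetter);
  console.log(deadLetter); // expect one entry, for the 'not-an-email' job
})();
```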

Designing Your Backend with Job Queues

When building or scaling your backend system, consider when and where you can introduce job queues. Typical scenarios where queues can dramatically improve system performance include:

Heavy computational tasks: CPU-intensive work such as media processing, video encoding, or large report generation can block your server (in Node.js, it stalls the event loop for every request). Offload these tasks to a queue so users don’t experience long waits or timeouts.

Third-party API interactions: Calls to third-party services (e.g., payment gateways) can be slow or unreliable. Queue them so they run in the background, where a failed call can be retried, without affecting your system’s response time.

Scheduled tasks: Need to run recurring tasks like sending daily summaries or alerts? Queues can handle this efficiently, without adding extra complexity to your application logic.

Notifications: Emails, SMS, or push notifications rarely need to be sent instantly. Queue these tasks to avoid slowing down your app.

Data Import/Export: Handling large files or data exports can take time, and it’s better to let a worker process it in the background.
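For the third-party API calls above, retrying immediately after a failure often hits the same outage; spacing retries out with exponential backoff gives the provider time to recover. Below is a minimal sketch of the delay calculation, where the 1000 ms base delay, the doubling formula and the 60-second cap are assumptions for illustration. BullMQ offers a built-in exponential strategy through the `backoff` job option (e.g. `{ type: 'exponential', delay: 1000 }`), though its exact formula may differ from this sketch.

```javascript
// Delay before retry number `retry` (1-based): baseMs, 2*baseMs, 4*baseMs, ...
// capped at maxMs so a long outage doesn't push retries out indefinitely.
function backoffDelay(retry, baseMs = 1000, maxMs = 60_000) {
  return Math.min(baseMs * 2 ** (retry - 1), maxMs);
}

// Usage: delays for the first six retries of a failed payment-gateway call.
const delays = [1, 2, 3, 4, 5, 6].map(retry => backoffDelay(retry));
console.log(delays); // [ 1000, 2000, 4000, 8000, 16000, 32000 ]
```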

Conclusion

To summarise, Redis-based job queues, such as BullMQ and Bee-Queue, are vital tools in modern backend system design. They improve performance by handling background tasks asynchronously, reduce system load during peak times, and provide a reliable mechanism for scaling workers.

Incorporating job queues into your system design improves response times, ensures reliability, and makes it easier to scale your application as it grows. Next time you’re faced with a task that could slow down your app, remember: queue it!

Have you encountered long-running tasks that slow down your system? How would implementing job queues benefit your current projects? Let me know in the comments.

Further Reading

If you're interested in diving deeper into implementing BullMQ in an Express.js application, be sure to check out the next post for a detailed guide.

Written by

Usman Soliu

Usman Soliu, a seasoned software engineer with a career spanning over six years, has devoted more than three years to constructing robust backend applications. Beyond the corporate sphere, he actively contributes to open-source projects, showcasing a commitment to collaborative innovation.